https://github.com/deeplearning4j/dl4j-examples

Tip revision: bf53c259f6ba09f10e8fb03def6d1c797cb84f7b authored by Shams Ul Azeem on 24 November 2017, 06:31:35 UTC

Updated: Determining cloud cover notebook + added ipynb format

10. Image classification with CNN.json

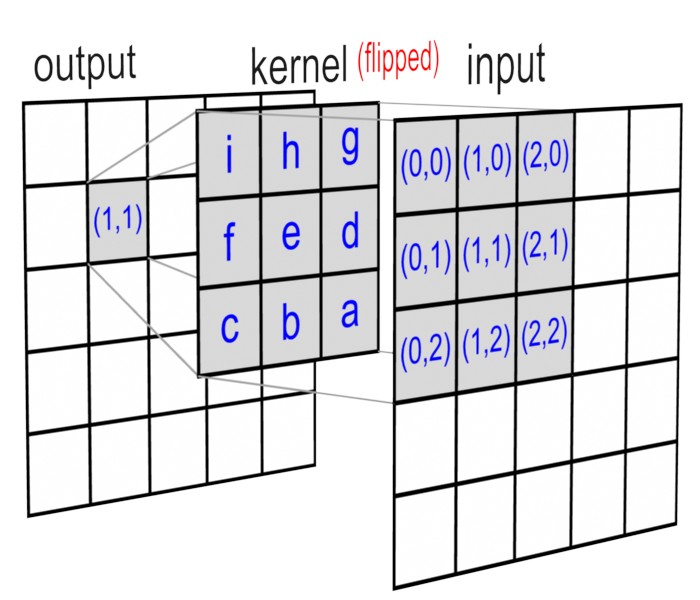

{"paragraphs":[{"text":"%md\n### Note\n\nPlease view the [README](https://github.com/deeplearning4j/deeplearning4j/tree/master/dl4j-examples/tutorials/README.md) to learn about installing, setting up dependencies, and importing notebooks in Zeppelin.","user":"anonymous","dateUpdated":"2017-11-21T19:01:33+0000","config":{"tableHide":false,"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h3>Note</h3>\n<p>Please view the <a href=\"https://github.com/deeplearning4j/deeplearning4j/tree/master/dl4j-examples/tutorials/README.md\">README</a> to learn about installing, setting up dependencies, and importing notebooks in Zeppelin.</p>\n</div>"}]},"apps":[],"jobName":"paragraph_1511006694416_-1542005133","id":"20171020-070156_1850232313","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:33+0000","dateFinished":"2017-11-21T19:01:33+0000","status":"FINISHED","progressUpdateIntervalMs":500,"focus":true,"$$hashKey":"object:2419"},{"text":"%md\n\n### Background\n\n#### Convolutional Neural Networks (CNN)\nAt their core, neural networks extract features from the data they are fed. Previously, we dealt with fully-connected feedforward networks, which multiply inputs by weights, add biases, and iteratively adjust both during training. In effect, each network parameter is concerned only with an individual feature and does not take into account the features surrounding the one in focus. But considering surrounding features is important for improving the network's performance, especially on images, where neighboring pixels are strongly related.\n\nTo accomplish this, we use convolutional neural networks (CNNs for short). A CNN uses small matrices of weights to produce more general features from the input features. 
Such a matrix of weights is called a kernel (also known as a filter in 2D convolutions). Kernels are well suited to images: they slide over the image pixels in 2D and produce their responses as another feature matrix.\n\nThe last few layers of a CNN are fully-connected layers, which transform the learned feature matrix into a set of predictions that we can use to analyse the network's performance.\n\n--- \n\n#### Goals\n- Learn how CNNs work\n- Work with CNNs in DL4J","user":"anonymous","dateUpdated":"2017-11-21T19:01:33+0000","config":{"tableHide":false,"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h3>Background</h3>\n<h4>Convolutional Neural Networks (CNN)</h4>\n<p>At their core, neural networks extract features from the data they are fed. Previously, we dealt with fully-connected feedforward networks, which multiply inputs by weights, add biases, and iteratively adjust both during training. In effect, each network parameter is concerned only with an individual feature and does not take into account the features surrounding the one in focus. But considering surrounding features is important for improving the network’s performance, especially on images, where neighboring pixels are strongly related.</p>\n<p>To accomplish this, we use convolutional neural networks (CNNs for short). A CNN uses small matrices of weights to produce more general features from the input features. Such a matrix of weights is called a kernel (also known as a filter in 2D convolutions). Kernels are well suited to images: they slide over the image pixels in 2D and produce their responses as another feature matrix. 
</p>\n<p>The last few layers of a CNN are fully-connected layers, which transform the learned feature matrix into a set of predictions that we can use to analyse the network’s performance.</p>\n<hr/>\n<h4>Goals</h4>\n<ul>\n <li>Learn how CNNs work</li>\n <li>Work with CNNs in DL4J</li>\n</ul>\n</div>"}]},"apps":[],"jobName":"paragraph_1511006694423_-598692174","id":"20171020-070208_2069142559","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:33+0000","dateFinished":"2017-11-21T19:01:33+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2420"},{"text":"%md\n\n## 1. How CNNs work\n\n### Basic concept\n\nAt each convolutional layer, the network learns features from its input. As we go deeper, the layers compute more general, higher-level features. For example, the first convolutional layer might detect edges present in the image. The next layer might learn shapes formed by those edges. The following image shows a visual representation of the features learned at each CNN layer.\n\n|---|---|---|---|\n|Features learned by CNN Layers|![Features learned by CNN Layers](http://parse.ele.tue.nl/cluster/0/fig1.png)|[Source](http://parse.ele.tue.nl/cluster/0/fig1.png)|[Site](http://derekjanni.github.io/Easy-Neural-Nets/)|\n\n___Figure 1:___ The figure above shows how more general features are learned at each layer of the network.\n\n|---|---|---|---|\n|Basic convolution operation|![Basic convolution operation](http://www.songho.ca/dsp/convolution/files/conv2d_matrix.jpg)|[Source](http://www.songho.ca/dsp/convolution/files/conv2d_matrix.jpg)|[Site](http://www.songho.ca/dsp/convolution/convolution.html)|\n\n___Figure 2:___ The figure above shows how a basic convolution operation is computed. Each output value is just a weighted sum of the input values covered by the kernel, with the corresponding kernel values as weights.\n\n### CNN related terms\n\n- ___Stride___\n _It tells the convolutional layer how many columns or rows (or both) to move at each step while sliding the kernel over the inputs. A larger stride decreases the output volume. 
This, in turn, reduces the amount of computation in the network._\n\n- ___Padding___\n _It tells the layer how many rows and columns of zeros to add around the matrix's boundary. This also helps us control the output volume._\n\n- ___Pooling___\n _A pooling layer (also called a subsampling layer) also lets us reduce the output volume by applying a filtering or other mathematical operation to the convolutional responses. There are different types of pooling, such as max pooling, average pooling, and L2-norm pooling. Max pooling is the most commonly used type. It takes the maximum of all the values covered by the specified kernel._","user":"anonymous","dateUpdated":"2017-11-21T19:01:33+0000","config":{"tableHide":false,"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h2>1. How CNNs work</h2>\n<h3>Basic concept</h3>\n<p>At each convolutional layer, the network learns features from its input. As we go deeper, the layers compute more general, higher-level features. For example, the first convolutional layer might detect edges present in the image. The next layer might learn shapes formed by those edges. 
The following image shows a visual representation of the features learned at each CNN layer.</p>\n<table>\n <tbody>\n <tr>\n <td>Features learned by CNN Layers</td>\n <td><img src=\"http://parse.ele.tue.nl/cluster/0/fig1.png\" alt=\"Features learned by CNN Layers\" /></td>\n <td><a href=\"http://parse.ele.tue.nl/cluster/0/fig1.png\">Source</a></td>\n <td><a href=\"http://derekjanni.github.io/Easy-Neural-Nets/\">Site</a></td>\n </tr>\n </tbody>\n</table>\n<p><strong><em>Figure 1:</em></strong> The figure above shows how more general features are learned at each layer of the network.</p>\n<table>\n <tbody>\n <tr>\n <td>Basic convolution operation</td>\n <td><img src=\"http://www.songho.ca/dsp/convolution/files/conv2d_matrix.jpg\" alt=\"Basic convolution operation\" /></td>\n <td><a href=\"http://www.songho.ca/dsp/convolution/files/conv2d_matrix.jpg\">Source</a></td>\n <td><a href=\"http://www.songho.ca/dsp/convolution/convolution.html\">Site</a></td>\n </tr>\n </tbody>\n</table>\n<p><strong><em>Figure 2:</em></strong> The figure above shows how a basic convolution operation is computed. Each output value is just a weighted sum of the input values covered by the kernel, with the corresponding kernel values as weights.</p>\n<h3>CNN related terms</h3>\n<ul>\n <li>\n <p><strong><em>Stride</em></strong><br/><em>It tells the convolutional layer how many columns or rows (or both) to move at each step while sliding the kernel over the inputs. A larger stride decreases the output volume. This, in turn, reduces the amount of computation in the network.</em></p></li>\n <li>\n <p><strong><em>Padding</em></strong><br/><em>It tells the layer how many rows and columns of zeros to add around the matrix’s boundary. This also helps us control the output volume.</em></p></li>\n <li>\n <p><strong><em>Pooling</em></strong><br/><em>A pooling layer (also called a subsampling layer) also lets us reduce the output volume by applying a filtering or other mathematical operation to the convolutional responses. 
There are different types of pooling, such as max pooling, average pooling, and L2-norm pooling. Max pooling is the most commonly used type. It takes the maximum of all the values covered by the specified kernel.</em></p></li>\n</ul>\n</div>"}]},"apps":[],"jobName":"paragraph_1511006694424_53043252","id":"20171116-134509_791025875","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:33+0000","dateFinished":"2017-11-21T19:01:33+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2421"},{"text":"%md\n## 2. CNN in DL4J\nLet's see how all of this works in DL4J.\n\nYou can build a convolutional layer in DL4J as:\n```\nval convLayer = new ConvolutionLayer.Builder(Array[Int](5, 5), Array[Int](1, 1), Array[Int](0, 0))\n .name(\"cnn\").nOut(50).biasInit(0).build\n```\nHere the convolutional layer is created with a kernel size of _5x5_, a stride of _1x1_, and a padding of _0x0_, with _50_ kernels and all biases initialized to _0_.\n__Note:__ Kernels also have a depth. The depth is equal to the depth of the inputs coming from the previous layer. So, on the first layer, this kernel would have a size of 5x5x3 when a 3-channel RGB image is fed as the input.\n\nThe output for a convolutional layer is of the shape (WoxHoxDo), where:\n__Wo=(W−Fw+2Ph)/Sh+1__ -> __W__ is the input width, __Fw__ is the kernel width, __Ph__ is the horizontal padding, __Sh__ is the horizontal stride\n__Ho=(H−Fh+2Pv)/Sv+1__ -> __H__ is the input height, __Fh__ is the kernel height, __Pv__ is the vertical padding, __Sv__ is the vertical stride\n__Do=K__ -> __K__ is the number of kernels applied\n\nFor a pooling layer we can do something like this:\n```\nval poolLayer = new SubsamplingLayer.Builder(Array[Int](2, 2), Array[Int](2, 2)).name(\"maxpool\").build\n```\nThe default type of pooling is max pooling. 
Here we're building a pooling layer with a kernel size of _2x2_ and a stride of _2x2_\n\nThe output is of the shape (WoxHoxDo), for a pooling layer, where: \n__Wo = (W−Fw)/Sh+1__ -> __W__ is the input width, __Fw__ is the kernel width, __Sh__ is the horizontal stride\n__Ho = (H−Fh)/Sv+1__ -> __H__ is the input height, __Fh__ is the kernel height, __Sv__ is the vertical stride\n__Do = Di__ -> __Di__ is the input depth\n\nAlso, we have to configure how we pass inputs to it. If it's already an image with multiple channels, we use:\n```\nval conf = new NeuralNetConfiguration.Builder()\n// Hyperparameters code here\n.list\n// Layers code here\n.setInputType(InputType.convolutional(32, 32, 3)) // Setting our input type here (32x32x3 image)\n.build\n```\n\nOtherwise, if it's a linear array of inputs, we can do something like this:\n```\nval conf = new NeuralNetConfiguration.Builder()\n// Hyperparameters code here\n.list\n// Layers code here\n.setInputType(InputType.convolutionalFlat(28, 28, 1)) // Setting our input type here (784 linear array to 28x28x1 input)\n.build\n```","user":"anonymous","dateUpdated":"2017-11-21T19:01:33+0000","config":{"tableHide":false,"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{"0":{"graph":{"mode":"table","height":386.188,"optionOpen":false}}},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h2>2. 
CNN in DL4J</h2>\n<p>Let’s see how all of this works in DL4J.</p>\n<p>You can build a convolutional layer in DL4J as:</p>\n<pre><code>val convLayer = new ConvolutionLayer.Builder(Array[Int](5, 5), Array[Int](1, 1), Array[Int](0, 0))\n .name(&quot;cnn&quot;).nOut(50).biasInit(0).build\n</code></pre>\n<p>Here the convolutional layer is created with a kernel size of <em>5x5</em>, a stride of <em>1x1</em>, and a padding of <em>0x0</em>, with <em>50</em> kernels and all biases initialized to <em>0</em>.<br/><strong>Note:</strong> Kernels also have a depth. The depth is equal to the depth of the inputs coming from the previous layer. So, on the first layer, this kernel would have a size of 5x5x3 when a 3-channel RGB image is fed as the input.</p>\n<p>The output for a convolutional layer is of the shape (WoxHoxDo), where:<br/><strong>Wo=(W−Fw+2Ph)/Sh+1</strong> -> <strong>W</strong> is the input width, <strong>Fw</strong> is the kernel width, <strong>Ph</strong> is the horizontal padding, <strong>Sh</strong> is the horizontal stride<br/><strong>Ho=(H−Fh+2Pv)/Sv+1</strong> -> <strong>H</strong> is the input height, <strong>Fh</strong> is the kernel height, <strong>Pv</strong> is the vertical padding, <strong>Sv</strong> is the vertical stride<br/><strong>Do=K</strong> -> <strong>K</strong> is the number of kernels applied</p>\n<p>For a pooling layer we can do something like this:</p>\n<pre><code>val poolLayer = new SubsamplingLayer.Builder(Array[Int](2, 2), Array[Int](2, 2)).name(&quot;maxpool&quot;).build\n</code></pre>\n<p>The default type of pooling is max pooling. 
Here we’re building a pooling layer with a kernel size of <em>2x2</em> and a stride of <em>2x2</em></p>\n<p>The output is of the shape (WoxHoxDo), for a pooling layer, where:<br/><strong>Wo = (W−Fw)/Sh+1</strong> -> <strong>W</strong> is the input width, <strong>Fw</strong> is the kernel width, <strong>Sh</strong> is the horizontal stride<br/><strong>Ho = (H−Fh)/Sv+1</strong> -> <strong>H</strong> is the input height, <strong>Fh</strong> is the kernel height, <strong>Sv</strong> is the vertical stride<br/><strong>Do = Di</strong> -> <strong>Di</strong> is the input depth</p>\n<p>Also, we have to configure how we pass inputs to it. If it’s already an image with multiple channels, we use:</p>\n<pre><code>val conf = new NeuralNetConfiguration.Builder()\n// Hyperparameters code here\n.list\n// Layers code here\n.setInputType(InputType.convolutional(32, 32, 3)) // Setting our input type here (32x32x3 image)\n.build\n</code></pre>\n<p>Otherwise, if it’s a linear array of inputs, we can do something like this:</p>\n<pre><code>val conf = new NeuralNetConfiguration.Builder()\n// Hyperparameters code here\n.list\n// Layers code here\n.setInputType(InputType.convolutionalFlat(28, 28, 1)) // Setting our input type here (784 linear array to 28x28x1 input)\n.build\n</code></pre>\n</div>"}]},"apps":[],"jobName":"paragraph_1511006694424_-2042624967","id":"20171020-070710_1843650237","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:33+0000","dateFinished":"2017-11-21T19:01:33+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2422"},{"text":"%md\n\n### Imports","user":"anonymous","dateUpdated":"2017-11-21T19:01:33+0000","config":{"colWidth":12,"fontSize":9,"enabled":true,"results":{},"editorSetting":{"language":"markdown","editOnDblClick":true},"editorMode":"ace/mode/markdown","editorHide":true,"tableHide":false},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div 
class=\"markdown-body\">\n<h3>Imports</h3>\n</div>"}]},"apps":[],"jobName":"paragraph_1511188634263_-474174516","id":"20171120-143714_472543342","dateCreated":"2017-11-20T14:37:14+0000","dateStarted":"2017-11-21T19:01:33+0000","dateFinished":"2017-11-21T19:01:33+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2423"},{"text":"import java.{lang, util}\r\n\r\nimport org.deeplearning4j.datasets.iterator.impl.MnistDataSetIterator\r\nimport org.deeplearning4j.nn.api.{Model, OptimizationAlgorithm}\r\nimport org.deeplearning4j.nn.conf.inputs.InputType\r\nimport org.deeplearning4j.nn.conf.layers.{ConvolutionLayer, DenseLayer, OutputLayer, SubsamplingLayer}\r\nimport org.deeplearning4j.nn.conf.{LearningRatePolicy, NeuralNetConfiguration, Updater}\r\nimport org.deeplearning4j.nn.multilayer.MultiLayerNetwork\r\nimport org.deeplearning4j.nn.weights.WeightInit\r\nimport org.deeplearning4j.optimize.api.IterationListener\r\nimport org.nd4j.linalg.activations.Activation\r\nimport org.nd4j.linalg.lossfunctions.LossFunctions","user":"anonymous","dateUpdated":"2017-11-21T19:01:33+0000","config":{"tableHide":true,"editorSetting":{"language":"scala","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/scala","fontSize":9,"editorHide":false,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"TEXT","data":"import java.{lang, util}\nimport org.deeplearning4j.datasets.iterator.impl.MnistDataSetIterator\nimport org.deeplearning4j.nn.api.{Model, OptimizationAlgorithm}\nimport org.deeplearning4j.nn.conf.inputs.InputType\nimport org.deeplearning4j.nn.conf.layers.{ConvolutionLayer, DenseLayer, OutputLayer, SubsamplingLayer}\nimport org.deeplearning4j.nn.conf.{LearningRatePolicy, NeuralNetConfiguration, Updater}\nimport org.deeplearning4j.nn.multilayer.MultiLayerNetwork\nimport org.deeplearning4j.nn.weights.WeightInit\nimport org.deeplearning4j.optimize.api.IterationListener\nimport 
org.nd4j.linalg.activations.Activation\nimport org.nd4j.linalg.lossfunctions.LossFunctions\n"}]},"apps":[],"jobName":"paragraph_1511006694425_1809240874","id":"20171020-071303_1517144370","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:33+0000","dateFinished":"2017-11-21T19:01:55+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2424"},{"text":"%md\n\n### Setting up the network inputs\nWe'll use the __MNIST__ dataset for this tutorial.","user":"anonymous","dateUpdated":"2017-11-21T19:01:33+0000","config":{"tableHide":false,"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h3>Setting up the network inputs</h3>\n<p>We’ll use the <strong>MNIST</strong> dataset for this tutorial.</p>\n</div>"}]},"apps":[],"jobName":"paragraph_1511006694425_522093421","id":"20171116-181214_1098994224","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:55+0000","dateFinished":"2017-11-21T19:01:55+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2425"},{"text":"// Hyperparameters\r\nval seed = 123\r\nval epochs = 1\r\nval batchSize = 64\r\nval learningRate = 0.1\r\nval learningRateDecay = 0.1\r\n\r\n// MNIST Dataset\r\nval mnistTrain = new MnistDataSetIterator(batchSize, true, seed)\r\nval mnistTest = new MnistDataSetIterator(batchSize, false, seed)","user":"anonymous","dateUpdated":"2017-11-21T19:01:33+0000","config":{"tableHide":true,"editorSetting":{"language":"scala","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/scala","fontSize":9,"editorHide":false,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"TEXT","data":"seed: Int = 123\nepochs: Int = 1\nbatchSize: Int = 
64\nlearningRate: Double = 0.1\nlearningRateDecay: Double = 0.1\nmnistTrain: org.deeplearning4j.datasets.iterator.impl.MnistDataSetIterator = org.deeplearning4j.datasets.iterator.impl.MnistDataSetIterator@2c4d2e07\nmnistTest: org.deeplearning4j.datasets.iterator.impl.MnistDataSetIterator = org.deeplearning4j.datasets.iterator.impl.MnistDataSetIterator@7e3cb6f4\n"}]},"apps":[],"jobName":"paragraph_1511006694425_-1882966720","id":"20171116-181442_125385967","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:55+0000","dateFinished":"2017-11-21T19:01:57+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2426"},{"text":"%md\n\n### Creating a CNN network","user":"anonymous","dateUpdated":"2017-11-21T19:01:34+0000","config":{"tableHide":false,"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h3>Creating a CNN network</h3>\n</div>"}]},"apps":[],"jobName":"paragraph_1511006694426_375114696","id":"20171020-072208_966782035","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:57+0000","dateFinished":"2017-11-21T19:01:57+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2427"},{"text":"// Learning rate schedule\r\nval lrSchedule: util.Map[Integer, lang.Double] = new util.HashMap[Integer, lang.Double]\r\nlrSchedule.put(0, learningRate)\r\nlrSchedule.put(1000, learningRate * learningRateDecay)\r\nlrSchedule.put(4000, learningRate * Math.pow(learningRateDecay, 2))\r\n\r\n// Network Configuration\r\nval conf = new NeuralNetConfiguration.Builder()\r\n .seed(seed)\r\n .iterations(1)\r\n .regularization(true).l2(0.005)\r\n .activation(Activation.RELU)\r\n .learningRateDecayPolicy(LearningRatePolicy.Schedule)\r\n .learningRateSchedule(lrSchedule)\r\n 
.weightInit(WeightInit.XAVIER)\r\n .optimizationAlgo(OptimizationAlgorithm.STOCHASTIC_GRADIENT_DESCENT)\r\n .updater(Updater.ADAM)\r\n .list\r\n .layer(0, new ConvolutionLayer.Builder(Array[Int](5, 5), Array[Int](1, 1), Array[Int](0, 0))\r\n .name(\"cnn1\").nOut(50).biasInit(0).build)\r\n .layer(1, new SubsamplingLayer.Builder(Array[Int](2, 2), Array[Int](2, 2)).name(\"maxpool1\").build)\r\n .layer(2, new ConvolutionLayer.Builder(Array(5, 5), Array[Int](1, 1), Array[Int](0, 0))\r\n .name(\"cnn2\").nOut(100).biasInit(0).build)\r\n .layer(3, new SubsamplingLayer.Builder(Array[Int](2, 2), Array[Int](2, 2)).name(\"maxpool2\").build)\r\n .layer(4, new DenseLayer.Builder().nOut(500).build)\r\n .layer(5, new OutputLayer.Builder(LossFunctions.LossFunction.NEGATIVELOGLIKELIHOOD)\r\n .nOut(10).activation(Activation.SOFTMAX).build)\r\n .backprop(true).pretrain(false)\r\n .setInputType(InputType.convolutionalFlat(28, 28, 1))\r\n .build\r\n\r\n// Initializing model and setting custom listeners\r\nval model = new MultiLayerNetwork(conf)\r\nmodel.init()\r\nmodel.setListeners(new IterationListener {\r\n override def invoke(): Unit = ??? 
\r\n override def iterationDone(model: Model, iteration: Int): Unit = {\r\n if(iteration % 100 == 0) {\r\n println(\"Score at iteration \" + iteration + \" is \" + model.score())\r\n }\r\n } \r\n override def invoked(): Nothing = ???\r\n})","user":"anonymous","dateUpdated":"2017-11-21T19:01:34+0000","config":{"tableHide":true,"editorSetting":{"language":"scala"},"colWidth":12,"editorMode":"ace/mode/scala","fontSize":9,"editorHide":false,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"TEXT","data":"lrSchedule: java.util.Map[Integer,Double] = {}\nres2: Double = null\nres3: Double = null\nres4: Double = null\nconf: org.deeplearning4j.nn.conf.MultiLayerConfiguration =\n{\n \"backprop\" : true,\n \"backpropType\" : \"Standard\",\n \"cacheMode\" : \"NONE\",\n \"confs\" : [ {\n \"cacheMode\" : \"NONE\",\n \"iterationCount\" : 0,\n \"l1ByParam\" : { },\n \"l2ByParam\" : { },\n \"layer\" : {\n \"convolution\" : {\n \"activationFn\" : {\n \"ReLU\" : { }\n },\n \"adamMeanDecay\" : 0.9,\n \"adamVarDecay\" : 0.999,\n \"biasInit\" : 0.0,\n \"biasLearningRate\" : 0.1,\n \"convolutionMode\" : \"Truncate\",\n \"cudnnAlgoMode\" : \"PREFER_FASTEST\",\n \"cudnnBwdDataAlgo\" : null,\n \"cudnnBwdFilterAlgo\" : null,\n \"cudnnFwdAlgo\" : null,\n \"dist\" : null,\n \"dropOut\" : 0.0,\n \"epsilon\" : 1.0E-8,\n \"gradientNormalization\" : \"None\",\n \"gradient...model: org.deeplearning4j.nn.multilayer.MultiLayerNetwork = 
org.deeplearning4j.nn.multilayer.MultiLayerNetwork@3b87d85b\n"}]},"apps":[],"jobName":"paragraph_1511006694426_-908562333","id":"20171020-071349_473511535","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:01:57+0000","dateFinished":"2017-11-21T19:02:15+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2428"},{"user":"anonymous","config":{"colWidth":12,"fontSize":9,"enabled":true,"results":{},"editorSetting":{"language":"markdown","editOnDblClick":true},"editorMode":"ace/mode/markdown","editorHide":true,"tableHide":false},"settings":{"params":{},"forms":{}},"apps":[],"jobName":"paragraph_1511290867408_1434041576","id":"20171121-190107_1061328323","dateCreated":"2017-11-21T19:01:07+0000","status":"FINISHED","progressUpdateIntervalMs":500,"focus":true,"$$hashKey":"object:3732","text":"%md\n\n### Training and Evaluation","dateUpdated":"2017-11-21T19:01:34+0000","dateFinished":"2017-11-21T19:02:15+0000","dateStarted":"2017-11-21T19:02:15+0000","results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h3>Training and Evaluation</h3>\n</div>"}]}},{"text":"(1 to epochs).foreach(epochStep => {\r\n println(\"Epoch: \" + epochStep)\r\n model.fit(mnistTrain) // Training\r\n // print the basic statistics about the trained classifier\r\n println(\"Training Stats for epoch: \" + epochStep + \" -> \" + model.evaluate(mnistTest).stats(true)) // Evaluation\r\n 
mnistTest.reset()\r\n})","user":"anonymous","dateUpdated":"2017-11-21T19:01:34+0000","config":{"colWidth":12,"fontSize":9,"enabled":true,"results":{},"editorSetting":{"language":"scala","editOnDblClick":true},"editorMode":"ace/mode/scala"},"settings":{"params":{},"forms":{}},"apps":[],"jobName":"paragraph_1511290880185_-1051973364","id":"20171121-190120_608905762","dateCreated":"2017-11-21T19:01:20+0000","status":"FINISHED","progressUpdateIntervalMs":500,"focus":true,"$$hashKey":"object:3812","dateFinished":"2017-11-21T19:08:42+0000","dateStarted":"2017-11-21T19:02:15+0000","results":{"code":"SUCCESS","msg":[{"type":"TEXT","data":"Epoch: 1\nScore at iteration 0 is 2.3379802977228348\nScore at iteration 100 is 0.2669561267795874\nScore at iteration 200 is 0.21754097031737135\nScore at iteration 300 is 0.21722672677511565\nScore at iteration 400 is 0.11637009504284164\nScore at iteration 500 is 0.13475708434868477\nScore at iteration 600 is 0.07702370940008041\nScore at iteration 700 is 0.06152160806975777\nScore at iteration 800 is 0.10351134828877057\nScore at iteration 900 is 0.13980447216689168\nTraining Stats for epoch: 1 -> \nExamples labeled as 0 classified by model as 0: 977 times\nExamples labeled as 0 classified by model as 1: 1 times\nExamples labeled as 0 classified by model as 7: 1 times\nExamples labeled as 0 classified by model as 8: 1 times\nExamples labeled as 1 classified by model as 1: 1133 times\nExamples labeled as 1 classified by model as 2: 1 times\nExamples labeled as 1 classified by model as 7: 1 times\nExamples labeled as 2 classified by model as 0: 3 times\nExamples labeled as 2 classified by model as 1: 5 times\nExamples labeled as 2 classified by model as 2: 1018 times\nExamples labeled as 2 classified by model as 7: 5 times\nExamples labeled as 2 classified by model as 8: 1 times\nExamples labeled as 3 classified by model as 0: 1 times\nExamples labeled as 3 classified by model as 1: 1 times\nExamples labeled as 3 classified by model as 
2: 2 times\nExamples labeled as 3 classified by model as 3: 1004 times\nExamples labeled as 3 classified by model as 8: 2 times\nExamples labeled as 4 classified by model as 4: 982 times\nExamples labeled as 5 classified by model as 0: 3 times\nExamples labeled as 5 classified by model as 3: 21 times\nExamples labeled as 5 classified by model as 5: 852 times\nExamples labeled as 5 classified by model as 6: 8 times\nExamples labeled as 5 classified by model as 7: 1 times\nExamples labeled as 5 classified by model as 8: 3 times\nExamples labeled as 5 classified by model as 9: 4 times\nExamples labeled as 6 classified by model as 0: 6 times\nExamples labeled as 6 classified by model as 1: 4 times\nExamples labeled as 6 classified by model as 4: 2 times\nExamples labeled as 6 classified by model as 5: 1 times\nExamples labeled as 6 classified by model as 6: 944 times\nExamples labeled as 6 classified by model as 8: 1 times\nExamples labeled as 7 classified by model as 1: 4 times\nExamples labeled as 7 classified by model as 3: 1 times\nExamples labeled as 7 classified by model as 4: 1 times\nExamples labeled as 7 classified by model as 7: 1020 times\nExamples labeled as 7 classified by model as 8: 1 times\nExamples labeled as 7 classified by model as 9: 1 times\nExamples labeled as 8 classified by model as 0: 13 times\nExamples labeled as 8 classified by model as 1: 2 times\nExamples labeled as 8 classified by model as 2: 2 times\nExamples labeled as 8 classified by model as 3: 1 times\nExamples labeled as 8 classified by model as 4: 2 times\nExamples labeled as 8 classified by model as 7: 2 times\nExamples labeled as 8 classified by model as 8: 945 times\nExamples labeled as 8 classified by model as 9: 7 times\nExamples labeled as 9 classified by model as 0: 4 times\nExamples labeled as 9 classified by model as 1: 2 times\nExamples labeled as 9 classified by model as 3: 1 times\nExamples labeled as 9 classified by model as 4: 25 times\nExamples labeled as 9 classified 
by model as 5: 2 times\nExamples labeled as 9 classified by model as 7: 7 times\nExamples labeled as 9 classified by model as 8: 2 times\nExamples labeled as 9 classified by model as 9: 966 times\n\n\n==========================Scores========================================\n # of classes: 10\n Accuracy: 0.9841\n Precision: 0.9844\n Recall: 0.9836\n F1 Score: 0.9839\nPrecision, recall & F1: macro-averaged (equally weighted avg. of 10 classes)\n========================================================================\n"}]}},{"text":"%md\n\n### Summary\n\nIn short, CNNs typically give better accuracy than simple fully-connected networks on image data. In the example above, a simple CNN reached about 98.4% accuracy on the MNIST test set after a single epoch. \nSome famous and successful CNN architectures to study are LeNet, AlexNet, and Inception.","user":"anonymous","dateUpdated":"2017-11-21T19:01:34+0000","config":{"tableHide":false,"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h3>Summary</h3>\n<p>In short, CNNs typically give better accuracy than simple fully-connected networks on image data. In the example above, a simple CNN reached about 98.4% accuracy on the MNIST test set after a single epoch.<br/>Some famous and successful CNN architectures to study are LeNet, AlexNet, and Inception.</p>\n</div>"}]},"apps":[],"jobName":"paragraph_1511006694427_1208664961","id":"20171117-073125_214586916","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:08:42+0000","dateFinished":"2017-11-21T19:08:43+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2429"},{"text":"%md\n\n### What's next?\n\n- Check out all of our tutorials available [on Github](https://github.com/deeplearning4j/deeplearning4j/tree/master/dl4j-examples/tutorials). 
Notebooks are numbered for easy following.","user":"anonymous","dateUpdated":"2017-11-21T19:01:34+0000","config":{"tableHide":false,"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"results":{"code":"SUCCESS","msg":[{"type":"HTML","data":"<div class=\"markdown-body\">\n<h3>What’s next?</h3>\n<ul>\n <li>Check out all of our tutorials available <a href=\"https://github.com/deeplearning4j/deeplearning4j/tree/master/dl4j-examples/tutorials\">on Github</a>. Notebooks are numbered for easy following.</li>\n</ul>\n</div>"}]},"apps":[],"jobName":"paragraph_1511006694437_1371916728","id":"20171020-072151_195526063","dateCreated":"2017-11-18T12:04:54+0000","dateStarted":"2017-11-21T19:08:43+0000","dateFinished":"2017-11-21T19:08:43+0000","status":"FINISHED","progressUpdateIntervalMs":500,"$$hashKey":"object:2430"},{"text":"%md\n","user":"anonymous","dateUpdated":"2017-11-20T15:07:10+0000","config":{"editorSetting":{"language":"markdown","editOnDblClick":true},"colWidth":12,"editorMode":"ace/mode/markdown","fontSize":9,"editorHide":true,"results":{},"enabled":true},"settings":{"params":{},"forms":{}},"apps":[],"jobName":"paragraph_1511006694438_2126393092","id":"20171020-072158_2072802023","dateCreated":"2017-11-18T12:04:54+0000","status":"FINISHED","errorMessage":"","progressUpdateIntervalMs":500,"$$hashKey":"object:2431"}],"name":"Image classification with 
CNN","id":"2CZ3T5FJR","angularObjects":{"2CZQ7ZY6S:shared_process":[],"2D1CXP1PP:shared_process":[],"2CZ2UUD41:shared_process":[],"2D1AKPRZA:shared_process":[],"2CX1MZBK8:shared_process":[],"2CXVEN2YD:shared_process":[],"2CX4DY6NZ:shared_process":[],"2CXVDPAZN:shared_process":[],"2CXK9GBTB:shared_process":[],"2CXAT5DWH:shared_process":[],"2CXRBFGBY:shared_process":[],"2CZDYJPG9:shared_process":[],"2CY9RSS9K:shared_process":[],"2CZSZQC4M:shared_process":[],"2CYBYW659:shared_process":[],"2CZCD55JD:shared_process":[],"2CYAJA9NF:shared_process":[],"2D1FESVJD:shared_process":[],"2CYGUF5SA:shared_process":[],"2CZ4X76GG:shared_process":[]},"config":{"looknfeel":"default","personalizedMode":"false"},"info":{}}