https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.4.3

- refs/tags/v3.4.3-rc1

- refs/tags/v3.4.3-rc2

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2

- refs/tags/v4.0.0-preview1

- refs/tags/v4.0.0-preview1-rc1

- refs/tags/v4.0.0-preview1-rc2

- refs/tags/v4.0.0-preview1-rc3

| Revision | Author | Author Date | Message | Commit Date |

|---|---|---|---|---|

| 4be5660 | Sean Owen | 11 August 2021, 23:04:55 UTC | Update Spark key negotiation protocol | 21 August 2021, 03:29:51 UTC |

| 163fbd2 | Liang-Chi Hsieh | 09 May 2021, 17:45:27 UTC | Preparing Spark release v2.4.8-rc4 | 09 May 2021, 17:45:27 UTC |

| 7733510 | Liang-Chi Hsieh | 07 May 2021, 16:07:57 UTC | [SPARK-35288][SQL] StaticInvoke should find the method without exact argument classes match ### What changes were proposed in this pull request? This patch proposes to make StaticInvoke able to find a method with the given method name even if the parameter types do not exactly match the argument classes. ### Why are the changes needed? Unlike `Invoke`, `StaticInvoke` only tries to get the method with exact argument classes. If the called method's parameter types do not exactly match the argument classes, `StaticInvoke` cannot find the method. `StaticInvoke` should be able to find the method in these cases too. ### Does this PR introduce _any_ user-facing change? Yes. `StaticInvoke` can find a method even if the argument classes do not exactly match. ### How was this patch tested? Unit test. Closes #32413 from viirya/static-invoke. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> (cherry picked from commit 33fbf5647b4a5587c78ac51339c0cbc9d70547a4) Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 07 May 2021, 16:09:25 UTC |

| eaf0553 | Liang-Chi Hsieh | 02 May 2021, 00:47:05 UTC | Preparing development version 2.4.9-SNAPSHOT Closes #32414 from viirya/prepare-rc4. Authored-by: Liang-Chi Hsieh <viirya@apache.org> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 02 May 2021, 00:47:05 UTC |

| e41f3c1 | Liang-Chi Hsieh | 01 May 2021, 21:58:25 UTC | [SPARK-35278][SQL][2.4] Invoke should find the method with correct number of parameters ### What changes were proposed in this pull request? This patch fixes the `Invoke` expression when the target object has more than one method with the given method name. This is the 2.4 backport of #32404. ### Why are the changes needed? `Invoke` looks up the method on the target object by the given method name. If there is more than one method with that name, it is currently nondeterministic which method will be used. We should add a condition on the number of parameters when finding the method. ### Does this PR introduce _any_ user-facing change? Yes, it fixes a bug when using `Invoke` on an object that has more than one method with the given method name. ### How was this patch tested? Unit test. Closes #32412 from viirya/SPARK-35278-2.4. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 01 May 2021, 21:58:25 UTC |

| e89526d | Liang-Chi Hsieh | 28 April 2021, 08:22:14 UTC | Preparing Spark release v2.4.8-rc3 | 28 April 2021, 08:22:14 UTC |

| 183eb75 | Bo Zhang | 27 April 2021, 01:59:30 UTC | [SPARK-35227][BUILD] Update the resolver for spark-packages in SparkSubmit This change is to use repos.spark-packages.org instead of Bintray as the repository service for spark-packages. The change is needed because Bintray will no longer be available from May 1st. This should be transparent for users who use SparkSubmit. Tested running spark-shell with --packages manually. Closes #32346 from bozhang2820/replace-bintray. Authored-by: Bo Zhang <bo.zhang@databricks.com> Signed-off-by: hyukjinkwon <gurwls223@apache.org> (cherry picked from commit f738fe07b6fc85c880b64a1cc2f6c7cc1cc1379b) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 27 April 2021, 02:02:42 UTC |

| 101c9e7 | Chao Sun | 26 April 2021, 20:48:49 UTC | [SPARK-35233][2.4][BUILD] Switch from bintray to scala.jfrog.io for SBT download in branch 2.4 and 3.0 ### What changes were proposed in this pull request? Move SBT download URL from `https://dl.bintray.com/typesafe` to `https://scala.jfrog.io/artifactory`. ### Why are the changes needed? As [bintray is sunsetting](https://jfrog.com/blog/into-the-sunset-bintray-jcenter-gocenter-and-chartcenter/), we should migrate SBT download location away from it. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Tested manually and it is working, while previously it was failing because SBT 0.13.17 can't be found: ``` Attempting to fetch sbt Our attempt to download sbt locally to build/sbt-launch-0.13.17.jar failed. Please install sbt manually from http://www.scala-sbt.org/ ``` Closes #32352 from sunchao/SPARK-35233. Authored-by: Chao Sun <sunchao@apple.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 26 April 2021, 20:48:49 UTC |

| 67aad59 | Kousuke Saruta | 25 April 2021, 05:46:35 UTC | [SPARK-35210][BUILD][2.4] Upgrade Jetty to 9.4.40 to fix ERR_CONNECTION_RESET issue ### What changes were proposed in this pull request? This PR backports SPARK-35210 (#32318). This PR proposes to upgrade Jetty to 9.4.40. ### Why are the changes needed? SPARK-34988 (#32091) upgraded Jetty to 9.4.39 for CVE-2021-28165. But after the upgrade, Jetty 9.4.40 was released to fix the ERR_CONNECTION_RESET issue (https://github.com/eclipse/jetty.project/issues/6152). This issue seems to affect Jetty 9.4.39 when the POST method is used with SSL. For Spark, job submission using REST and ThriftServer with HTTPS protocol can be affected. ### Does this PR introduce _any_ user-facing change? No. No released version uses Jetty 9.4.39. ### How was this patch tested? CI. Closes #32322 from sarutak/backport-SPARK-35210. Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 25 April 2021, 05:46:35 UTC |

| bec7389 | Liang-Chi Hsieh | 22 April 2021, 16:20:59 UTC | Preparing development version 2.4.9-SNAPSHOT Closes #32293 from viirya/prepare-rc3. Authored-by: Liang-Chi Hsieh <viirya@apache.org> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 22 April 2021, 16:20:59 UTC |

| 1630d64 | Kent Yao | 21 April 2021, 07:22:51 UTC | [SPARK-31225][SQL][2.4] Override sql method of OuterReference ### What changes were proposed in this pull request? Per https://github.com/apache/spark/pull/32179#issuecomment-822954063 's request to backport SPARK-31225 to branch 2.4 ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? Closes #32256 from yaooqinn/SPARK-31225. Authored-by: Kent Yao <yao@apache.org> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 21 April 2021, 07:22:51 UTC |

| c438f5f | allisonwang-db | 20 April 2021, 03:11:40 UTC | [SPARK-35080][SQL] Only allow a subset of correlated equality predicates when a subquery is aggregated This PR updated the `foundNonEqualCorrelatedPred` logic for correlated subqueries in `CheckAnalysis` to only allow correlated equality predicates that guarantee one-to-one mapping between inner and outer attributes, instead of all equality predicates. To fix correctness bugs. Before this fix Spark can give wrong results for certain correlated subqueries that pass CheckAnalysis: Example 1: ```sql create or replace view t1(c) as values ('a'), ('b') create or replace view t2(c) as values ('ab'), ('abc'), ('bc') select c, (select count(*) from t2 where t1.c = substring(t2.c, 1, 1)) from t1 ``` Correct results: [(a, 2), (b, 1)] Spark results: ``` +---+-----------------+ |c |scalarsubquery(c)| +---+-----------------+ |a |1 | |a |1 | |b |1 | +---+-----------------+ ``` Example 2: ```sql create or replace view t1(a, b) as values (0, 6), (1, 5), (2, 4), (3, 3); create or replace view t2(c) as values (6); select c, (select count(*) from t1 where a + b = c) from t2; ``` Correct results: [(6, 4)] Spark results: ``` +---+-----------------+ |c |scalarsubquery(c)| +---+-----------------+ |6 |1 | |6 |1 | |6 |1 | |6 |1 | +---+-----------------+ ``` Yes. Users will not be able to run queries that contain unsupported correlated equality predicates. Added unit tests. Closes #32179 from allisonwang-db/spark-35080-subquery-bug. Lead-authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com> Co-authored-by: Wenchen Fan <cloud0fan@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit bad4b6f025de4946112a0897892a97d5ae6822cf) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 20 April 2021, 03:51:15 UTC |

| d4b9719 | weixiuli | 14 April 2021, 16:44:48 UTC | [SPARK-34834][NETWORK] Fix a potential Netty memory leak in TransportResponseHandler ### What changes were proposed in this pull request? There is a potential Netty memory leak in TransportResponseHandler. ### Why are the changes needed? Fix a potential Netty memory leak in TransportResponseHandler. ### Does this PR introduce _any_ user-facing change? NO ### How was this patch tested? NO Closes #31942 from weixiuli/SPARK-34834. Authored-by: weixiuli <weixiuli@jd.com> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit bf9f3b884fcd6bd3428898581d4b5dca9bae6538) Signed-off-by: Sean Owen <srowen@gmail.com> | 14 April 2021, 16:45:31 UTC |

| 63ebabb | Yuming Wang | 13 April 2021, 09:04:47 UTC | [SPARK-34212][SQL][FOLLOWUP] Move the added test to ParquetQuerySuite This pr moves the added test from `SQLQuerySuite` to `ParquetQuerySuite`. 1. It can be tested by `ParquetV1QuerySuite` and `ParquetV2QuerySuite`. 2. Reduce the testing time of `SQLQuerySuite`(SQLQuerySuite ~ 3 min 17 sec, ParquetV1QuerySuite ~ 27 sec). No. Unit test. Closes #32090 from wangyum/SPARK-34212. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 13 April 2021, 09:34:30 UTC |

| a0ab27c | Liang-Chi Hsieh | 11 April 2021, 23:55:04 UTC | Preparing Spark release v2.4.8-rc2 | 11 April 2021, 23:55:04 UTC |

| ae5568e | Liang-Chi Hsieh | 10 April 2021, 00:19:14 UTC | [SPARK-34963][SQL][2.4] Fix nested column pruning for extracting case-insensitive struct field from array of struct ### What changes were proposed in this pull request? This patch proposes a fix of nested column pruning for extracting case-insensitive struct field from array of struct. This is the backport of #32059 to branch-2.4. ### Why are the changes needed? Under case-insensitive mode, nested column pruning rule cannot correctly push down extractor of a struct field of an array of struct, e.g., ```scala val query = spark.table("contacts").select("friends.First", "friends.MiDDle") ``` Error stack: ``` [info] java.lang.IllegalArgumentException: Field "First" does not exist. [info] Available fields: [info] at org.apache.spark.sql.types.StructType$$anonfun$apply$1.apply(StructType.scala:274) [info] at org.apache.spark.sql.types.StructType$$anonfun$apply$1.apply(StructType.scala:274) [info] at scala.collection.MapLike$class.getOrElse(MapLike.scala:128) [info] at scala.collection.AbstractMap.getOrElse(Map.scala:59) [info] at org.apache.spark.sql.types.StructType.apply(StructType.scala:273) [info] at org.apache.spark.sql.execution.ProjectionOverSchema$$anonfun$getProjection$3.apply(ProjectionOverSchema.scala:44) [info] at org.apache.spark.sql.execution.ProjectionOverSchema$$anonfun$getProjection$3.apply(ProjectionOverSchema.scala:41) ``` ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Unit test Closes #32112 from viirya/fix-array-nested-pruning-2.4. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 10 April 2021, 00:19:14 UTC |

| b4d9d4a | Liang-Chi Hsieh | 08 April 2021, 23:26:23 UTC | [SPARK-34994][BUILD][2.4] Fix git error when pushing the tag after release script succeeds ### What changes were proposed in this pull request? This patch proposes to fix an error when running release-script in 2.4 branch. ### Why are the changes needed? When I ran release script for cutting 2.4.8 RC1, either in dry-run or normal run at the last step "push the tag after success", I encounter the following error: ``` fatal: Not a git repository (or any parent up to mount parent ....) Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set). ``` ### Does this PR introduce _any_ user-facing change? No, dev only. ### How was this patch tested? Manual test. Closes #32100 from viirya/fix-release-script-2. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 08 April 2021, 23:26:23 UTC |

| f7ac0db | Kousuke Saruta | 08 April 2021, 15:42:12 UTC | [SPARK-34988][CORE][2.4] Upgrade Jetty for CVE-2021-28165 ### What changes were proposed in this pull request? This PR backports #32091. This PR upgrades the version of Jetty to 9.4.39. ### Why are the changes needed? CVE-2021-28165 affects the version of Jetty that Spark uses and it seems to be a little bit serious. https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2021-28165 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing tests. Closes #32093 from sarutak/backport-SPARK-34988. Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com> Signed-off-by: Sean Owen <srowen@gmail.com> | 08 April 2021, 15:42:12 UTC |

| c36cea9 | Liang-Chi Hsieh | 07 April 2021, 03:59:51 UTC | Preparing development version 2.4.9-SNAPSHOT | 07 April 2021, 03:59:51 UTC |

| 53d37e4 | Liang-Chi Hsieh | 07 April 2021, 03:59:43 UTC | Preparing Spark release v2.4.8-rc1 | 07 April 2021, 03:59:43 UTC |

| 30436b5 | Liang-Chi Hsieh | 04 April 2021, 01:36:38 UTC | [SPARK-34939][CORE][2.4] Throw fetch failure exception when unable to deserialize broadcasted map statuses ### What changes were proposed in this pull request? This patch catches `IOException`, which can be thrown while deserializing map statuses when they cannot be deserialized (e.g., the broadcasted value has been destroyed). Once `IOException` is caught, `MetadataFetchFailedException` is thrown to let Spark handle it. This is a backport of #32033 to branch-2.4. ### Why are the changes needed? One customer encountered an application error. From the log, it is caused by accessing a non-existing broadcasted value. The broadcasted value is the map statuses. E.g., ``` [info] Cause: java.io.IOException: org.apache.spark.SparkException: Failed to get broadcast_0_piece0 of broadcast_0 [info] at org.apache.spark.util.Utils$.tryOrIOException(Utils.scala:1410) [info] at org.apache.spark.broadcast.TorrentBroadcast.readBroadcastBlock(TorrentBroadcast.scala:226) [info] at org.apache.spark.broadcast.TorrentBroadcast.getValue(TorrentBroadcast.scala:103) [info] at org.apache.spark.broadcast.Broadcast.value(Broadcast.scala:70) [info] at org.apache.spark.MapOutputTracker$.$anonfun$deserializeMapStatuses$3(MapOutputTracker.scala:967) [info] at org.apache.spark.internal.Logging.logInfo(Logging.scala:57) [info] at org.apache.spark.internal.Logging.logInfo$(Logging.scala:56) [info] at org.apache.spark.MapOutputTracker$.logInfo(MapOutputTracker.scala:887) [info] at org.apache.spark.MapOutputTracker$.deserializeMapStatuses(MapOutputTracker.scala:967) ``` There is a race condition. After map statuses are broadcasted and the executors obtain the serialized broadcasted map statuses, if any fetch failure happens, the Spark scheduler invalidates the cached map statuses and destroys the broadcasted value of the map statuses. Then, when any executor tries to deserialize the serialized broadcasted map statuses and access the broadcasted value, an `IOException` is thrown. Currently we don't catch it in `MapOutputTrackerWorker`, and the above exception fails the application. Normally we should throw a fetch failure exception in such a case, and the Spark scheduler will handle it. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Unit test. Closes #32045 from viirya/fix-broadcast. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 04 April 2021, 01:36:38 UTC |

| 04485fe | Kousuke Saruta | 01 April 2021, 22:12:49 UTC | [SPARK-24931][INFRA][2.4] Fix the GA failure related to R linter ### What changes were proposed in this pull request? This PR backports the change of #32028 . This PR fixes the GA failure related to R linter which happens on some PRs (e.g. #32023, #32025). The reason seems `Rscript -e "devtools::install_github('jimhester/lintrv2.0.0')"` fails to download `lintrv2.0.0`. I don't know why but I confirmed we can download `v2.0.1`. ### Why are the changes needed? To keep GA healthy. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? GA itself. Closes #32029 from sarutak/backport-SPARK-24931. Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com> Signed-off-by: Sean Owen <srowen@gmail.com> | 01 April 2021, 22:12:49 UTC |

| 58a859a | Liang-Chi Hsieh | 01 April 2021, 08:36:48 UTC | Revert "[SPARK-33935][SQL][2.4] Fix CBO cost function" This reverts commit 3e6a6b76ef1b25f80133e6cb8561ba943f3b29de per the discussion at https://github.com/apache/spark/pull/30965#discussion_r605396265. Closes #32020 from viirya/revert-SPARK-33935. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 01 April 2021, 08:36:48 UTC |

| f2ddbab | Tim Armstrong | 31 March 2021, 04:58:29 UTC | [SPARK-34909][SQL] Fix conversion of negative to unsigned in conv() Use `java.lang.Long.divideUnsigned()` to do integer division in `NumberConverter` to avoid a bug in `unsignedLongDiv` that produced invalid results. The previous results are incorrect, the result of the below query should be 45012021522523134134555 ``` scala> spark.sql("select conv('-10', 11, 7)").show(20, 150) +-----------------------+ | conv(-10, 11, 7)| +-----------------------+ |4501202152252313413456| +-----------------------+ scala> spark.sql("select hex(conv('-10', 11, 7))").show(20, 150) +----------------------------------------------+ | hex(conv(-10, 11, 7))| +----------------------------------------------+ |3435303132303231353232353233313334313334353600| +----------------------------------------------+ ``` `conv()` will produce different results because the bug is fixed. Added a simple unit test. Closes #32006 from timarmstrong/conv-unsigned. Authored-by: Tim Armstrong <tim.armstrong@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 13b255fefd881beb68fd8bb6741c7f88318baf9b) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 31 March 2021, 05:01:21 UTC |

| 38238d5 | Tanel Kiis | 29 March 2021, 11:14:35 UTC | [SPARK-34876][SQL][2.4] Fill defaultResult of non-nullable aggregates ### What changes were proposed in this pull request? Filled the `defaultResult` field on non-nullable aggregates ### Why are the changes needed? The `defaultResult` defaults to `None` and in some situations (like correlated scalar subqueries) it is used for the value of the aggregation. The UT result before the fix: ``` -- !query SELECT t1a, (SELECT count(t2d) FROM t2 WHERE t2a = t1a) count_t2, (SELECT approx_count_distinct(t2d) FROM t2 WHERE t2a = t1a) approx_count_distinct_t2, (SELECT collect_list(t2d) FROM t2 WHERE t2a = t1a) collect_list_t2, (SELECT collect_set(t2d) FROM t2 WHERE t2a = t1a) collect_set_t2, (SELECT hex(count_min_sketch(t2d, 0.5d, 0.5d, 1)) FROM t2 WHERE t2a = t1a) collect_set_t2 FROM t1 -- !query schema struct<t1a:string,count_t2:bigint,approx_count_distinct_t2:bigint,collect_list_t2:array<bigint>,collect_set_t2:array<bigint>,collect_set_t2:string> -- !query output val1a 0 NULL NULL NULL NULL val1a 0 NULL NULL NULL NULL val1a 0 NULL NULL NULL NULL val1a 0 NULL NULL NULL NULL val1b 6 3 [19,119,319,19,19,19] [19,119,319] 0000000100000000000000060000000100000004000000005D8D6AB90000000000000000000000000000000400000000000000010000000000000001 val1c 2 2 [219,19] [219,19] 0000000100000000000000020000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000001 val1d 0 NULL NULL NULL NULL val1d 0 NULL NULL NULL NULL val1d 0 NULL NULL NULL NULL val1e 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000 val1e 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000 val1e 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000 ``` ### Does this PR introduce _any_ user-facing change? Bugfix ### How was this patch tested? UT Closes #31991 from tanelk/SPARK-34876_non_nullable_agg_subquery_2.4. Authored-by: Tanel Kiis <tanel.kiis@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 29 March 2021, 11:14:35 UTC |

| 3e65ba9 | Liang-Chi Hsieh | 29 March 2021, 11:12:47 UTC | [SPARK-34855][CORE] Avoid local lazy variable in SparkContext.getCallSite ### What changes were proposed in this pull request? `SparkContext.getCallSite` uses local lazy variable. In Scala 2.11, local lazy val requires synchronization so for large number of job submissions in the same context, it will be a bottleneck. This only for branch-2.4 as we drop Scala 2.11 support at SPARK-26132. ### Why are the changes needed? To avoid possible bottleneck for large number of job submissions in the same context. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing tests. Closes #31988 from viirya/SPARK-34855. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 29 March 2021, 11:12:47 UTC |

| 102b723 | HyukjinKwon | 29 March 2021, 07:05:02 UTC | Revert "[SPARK-34876][SQL] Fill defaultResult of non-nullable aggregates" This reverts commit b83ab63384e4a6f235f7d3c7a53ed098b8e1b87e. | 29 March 2021, 07:05:02 UTC |

| b83ab63 | Tanel Kiis | 29 March 2021, 02:47:08 UTC | [SPARK-34876][SQL] Fill defaultResult of non-nullable aggregates ### What changes were proposed in this pull request? Filled the `defaultResult` field on non-nullable aggregates ### Why are the changes needed? The `defaultResult` defaults to `None` and in some situations (like correlated scalar subqueries) it is used for the value of the aggregation. The UT result before the fix: ``` -- !query SELECT t1a, (SELECT count(t2d) FROM t2 WHERE t2a = t1a) count_t2, (SELECT count_if(t2d > 0) FROM t2 WHERE t2a = t1a) count_if_t2, (SELECT approx_count_distinct(t2d) FROM t2 WHERE t2a = t1a) approx_count_distinct_t2, (SELECT collect_list(t2d) FROM t2 WHERE t2a = t1a) collect_list_t2, (SELECT collect_set(t2d) FROM t2 WHERE t2a = t1a) collect_set_t2, (SELECT hex(count_min_sketch(t2d, 0.5d, 0.5d, 1)) FROM t2 WHERE t2a = t1a) collect_set_t2 FROM t1 -- !query schema struct<t1a:string,count_t2:bigint,count_if_t2:bigint,approx_count_distinct_t2:bigint,collect_list_t2:array<bigint>,collect_set_t2:array<bigint>,collect_set_t2:string> -- !query output val1a 0 0 NULL NULL NULL NULL val1a 0 0 NULL NULL NULL NULL val1a 0 0 NULL NULL NULL NULL val1a 0 0 NULL NULL NULL NULL val1b 6 6 3 [19,119,319,19,19,19] [19,119,319] 0000000100000000000000060000000100000004000000005D8D6AB90000000000000000000000000000000400000000000000010000000000000001 val1c 2 2 2 [219,19] [219,19] 0000000100000000000000020000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000001 val1d 0 0 NULL NULL NULL NULL val1d 0 0 NULL NULL NULL NULL val1d 0 0 NULL NULL NULL NULL val1e 1 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000 val1e 1 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000 val1e 1 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000 ``` ### Does this PR introduce _any_ user-facing change? Bugfix ### How was this patch tested? UT Closes #31973 from tanelk/SPARK-34876_non_nullable_agg_subquery. Authored-by: Tanel Kiis <tanel.kiis@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 4b9e94c44412f399ba19e0ea90525d346942bf71) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 29 March 2021, 02:47:46 UTC |

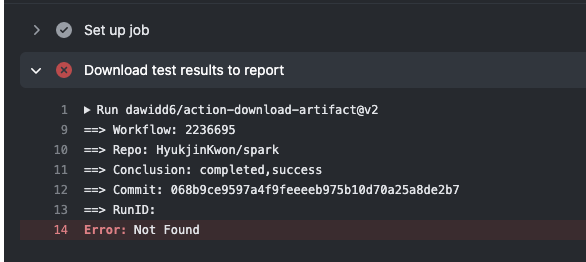

| 8062ab0 | HyukjinKwon | 26 March 2021, 09:12:28 UTC | [SPARK-34874][INFRA] Recover test reports for failed GA builds ### What changes were proposed in this pull request? https://github.com/dawidd6/action-download-artifact/commit/621becc6d7c440318382ce6f4cb776f27dd3fef3#r48726074 there was a behaviour change in the download artifact plugin and it disabled the test reporting in failed builds. This PR recovers it by explicitly setting the conclusion from the workflow runs to search for the artifacts to download. ### Why are the changes needed? In order to properly report the failed test cases. ### Does this PR introduce _any_ user-facing change? No, it's dev only. ### How was this patch tested? Manually tested at https://github.com/HyukjinKwon/spark/pull/30 Before:  After:  Closes #31970 from HyukjinKwon/SPARK-34874. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit c8233f1be5c2f853f42cda367475eb135a83afd5) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 26 March 2021, 09:13:06 UTC |

| 615dbe1 | Takeshi Yamamuro | 26 March 2021, 02:20:19 UTC | [SPARK-34607][SQL][2.4] Add `Utils.isMemberClass` to fix a malformed class name error on jdk8u ### What changes were proposed in this pull request? This PR intends to fix a bug of `objects.NewInstance` if a user runs Spark on jdk8u and a given `cls` in `NewInstance` is a deeply-nested inner class, e.g.,. ``` object OuterLevelWithVeryVeryVeryLongClassName1 { object OuterLevelWithVeryVeryVeryLongClassName2 { object OuterLevelWithVeryVeryVeryLongClassName3 { object OuterLevelWithVeryVeryVeryLongClassName4 { object OuterLevelWithVeryVeryVeryLongClassName5 { object OuterLevelWithVeryVeryVeryLongClassName6 { object OuterLevelWithVeryVeryVeryLongClassName7 { object OuterLevelWithVeryVeryVeryLongClassName8 { object OuterLevelWithVeryVeryVeryLongClassName9 { object OuterLevelWithVeryVeryVeryLongClassName10 { object OuterLevelWithVeryVeryVeryLongClassName11 { object OuterLevelWithVeryVeryVeryLongClassName12 { object OuterLevelWithVeryVeryVeryLongClassName13 { object OuterLevelWithVeryVeryVeryLongClassName14 { object OuterLevelWithVeryVeryVeryLongClassName15 { object OuterLevelWithVeryVeryVeryLongClassName16 { object OuterLevelWithVeryVeryVeryLongClassName17 { object OuterLevelWithVeryVeryVeryLongClassName18 { object OuterLevelWithVeryVeryVeryLongClassName19 { object OuterLevelWithVeryVeryVeryLongClassName20 { case class MalformedNameExample2(x: Int) }}}}}}}}}}}}}}}}}}}} ``` The root cause that Kris (rednaxelafx) investigated is as follows (Kudos to Kris); The reason why the test case above is so convoluted is in the way Scala generates the class name for nested classes. In general, Scala generates a class name for a nested class by inserting the dollar-sign ( `$` ) in between each level of class nesting. The problem is that this format can concatenate into a very long string that goes beyond certain limits, so Scala will change the class name format beyond certain length threshold. 
For the example above, we can see that the first two levels of class nesting have class names that look like this: ``` org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassName1$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassName1$OuterLevelWithVeryVeryVeryLongClassName2$ ``` If we leave out the fact that Scala uses a dollar-sign ( `$` ) suffix for the class name of the companion object, `OuterLevelWithVeryVeryVeryLongClassName1`'s full name is a prefix (substring) of `OuterLevelWithVeryVeryVeryLongClassName2`. But if we keep going deeper into the levels of nesting, you'll find names that look like: ``` org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$2a1321b953c615695d7442b2adb1$$$$ryVeryLongClassName8$OuterLevelWithVeryVeryVeryLongClassName9$OuterLevelWithVeryVeryVeryLongClassName10$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$2a1321b953c615695d7442b2adb1$$$$ryVeryLongClassName8$OuterLevelWithVeryVeryVeryLongClassName9$OuterLevelWithVeryVeryVeryLongClassName10$OuterLevelWithVeryVeryVeryLongClassName11$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$85f068777e7ecf112afcbe997d461b$$$$VeryLongClassName11$OuterLevelWithVeryVeryVeryLongClassName12$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$85f068777e7ecf112afcbe997d461b$$$$VeryLongClassName11$OuterLevelWithVeryVeryVeryLongClassName12$OuterLevelWithVeryVeryVeryLongClassName13$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$85f068777e7ecf112afcbe997d461b$$$$VeryLongClassName11$OuterLevelWithVeryVeryVeryLongClassName12$OuterLevelWithVeryVeryVeryLongClassName13$OuterLevelWithVeryVeryVeryLongClassName14$ 
org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$5f7ad51804cb1be53938ea804699fa$$$$VeryLongClassName14$OuterLevelWithVeryVeryVeryLongClassName15$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$5f7ad51804cb1be53938ea804699fa$$$$VeryLongClassName14$OuterLevelWithVeryVeryVeryLongClassName15$OuterLevelWithVeryVeryVeryLongClassName16$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$5f7ad51804cb1be53938ea804699fa$$$$VeryLongClassName14$OuterLevelWithVeryVeryVeryLongClassName15$OuterLevelWithVeryVeryVeryLongClassName16$OuterLevelWithVeryVeryVeryLongClassName17$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$69b54f16b1965a31e88968df1a58d8$$$$VeryLongClassName17$OuterLevelWithVeryVeryVeryLongClassName18$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$69b54f16b1965a31e88968df1a58d8$$$$VeryLongClassName17$OuterLevelWithVeryVeryVeryLongClassName18$OuterLevelWithVeryVeryVeryLongClassName19$ org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$69b54f16b1965a31e88968df1a58d8$$$$VeryLongClassName17$OuterLevelWithVeryVeryVeryLongClassName18$OuterLevelWithVeryVeryVeryLongClassName19$OuterLevelWithVeryVeryVeryLongClassName20$ ``` with a hash code in the middle and various levels of nesting omitted. The `java.lang.Class.isMemberClass` method is implemented in JDK8u as: http://hg.openjdk.java.net/jdk8u/jdk8u/jdk/file/tip/src/share/classes/java/lang/Class.java#l1425 ``` /** * Returns {code true} if and only if the underlying class * is a member class. * * return {code true} if and only if this class is a member class. 
* since 1.5 */ public boolean isMemberClass() { return getSimpleBinaryName() != null && !isLocalOrAnonymousClass(); } /** * Returns the "simple binary name" of the underlying class, i.e., * the binary name without the leading enclosing class name. * Returns {code null} if the underlying class is a top level * class. */ private String getSimpleBinaryName() { Class<?> enclosingClass = getEnclosingClass(); if (enclosingClass == null) // top level class return null; // Otherwise, strip the enclosing class' name try { return getName().substring(enclosingClass.getName().length()); } catch (IndexOutOfBoundsException ex) { throw new InternalError("Malformed class name", ex); } } ``` and the problematic code is `getName().substring(enclosingClass.getName().length())` -- if a class's enclosing class's full name is *longer* than the nested class's full name, this logic would end up going out of bounds. The bug has been fixed in JDK9 by https://bugs.java.com/bugdatabase/view_bug.do?bug_id=8057919 , but still exists in the latest JDK8u release. So from the Spark side we'd need to do something to avoid hitting this problem. This is the backport of #31733. ### Why are the changes needed? Bugfix on jdk8u. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Added tests. Closes #31747 from maropu/SPARK34607-BRANCH2.4. Authored-by: Takeshi Yamamuro <yamamuro@apache.org> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 26 March 2021, 02:20:19 UTC |
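
The out-of-bounds failure described in the commit above can be reproduced on plain strings. The following is a minimal sketch, not the JDK or Spark code: the class `SimpleBinaryNameSketch`, its method, and the shortened class names are hypothetical stand-ins, and only the `substring` logic mirrors the JDK8u snippet quoted in the message.

```java
class SimpleBinaryNameSketch {
    // Same stripping logic as the quoted JDK8u getSimpleBinaryName(), applied to
    // two plain strings instead of Class objects (an assumption for illustration).
    static String simpleBinaryName(String name, String enclosingName) {
        try {
            return name.substring(enclosingName.length());
        } catch (IndexOutOfBoundsException ex) {
            throw new InternalError("Malformed class name", ex);
        }
    }

    public static void main(String[] args) {
        // Ordinary nesting: the enclosing name is a prefix of the nested name.
        System.out.println(simpleBinaryName("Outer$Inner", "Outer"));

        // Hypothetical names: the nested class name was shortened with a hash,
        // so it is now shorter than the name of its enclosing class.
        String enclosing = "Suite$VeryLongOuterName1$VeryLongOuterName2$";
        String nested = "Suite$VeryLong$$$$hash$$$$Name3$";
        try {
            simpleBinaryName(nested, enclosing);
        } catch (InternalError e) {
            System.out.println("InternalError: " + e.getMessage());
        }
    }
}
```

Because the nested name is shorter than the enclosing name, `substring` is asked to start past the end of the string, which is the condition the commit message identifies.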

| 6ee1c08 | Takeshi Yamamuro | 24 March 2021, 17:37:32 UTC | [SPARK-34596][SQL][2.4] Use Utils.getSimpleName to avoid hitting Malformed class name in NewInstance.doGenCode ### What changes were proposed in this pull request? Use `Utils.getSimpleName` to avoid hitting `Malformed class name` error in `NewInstance.doGenCode`. NOTE: branch-2.4 does not have the interpreted implementation of `SafeProjection`, so it does not fall back into the interpreted mode if the compilation fails. Therefore, the test in this PR just checks that the compilation error happens instead of checking that the interpreted mode works well. This is the backport PR of #31709 and the credit should be rednaxelafx . ### Why are the changes needed? On older JDK versions (e.g. JDK8u), nested Scala classes may trigger `java.lang.Class.getSimpleName` to throw an `java.lang.InternalError: Malformed class name` error. In this particular case, creating an `ExpressionEncoder` on such a nested Scala class would create a `NewInstance` expression under the hood, which will trigger the problem during codegen. Similar to https://github.com/apache/spark/pull/29050, we should use Spark's `Utils.getSimpleName` utility function in place of `Class.getSimpleName` to avoid hitting the issue. There are two other occurrences of `java.lang.Class.getSimpleName` in the same file, but they're safe because they're only guaranteed to be only used on Java classes, which don't have this problem, e.g.: ```scala // Make a copy of the data if it's unsafe-backed def makeCopyIfInstanceOf(clazz: Class[_ <: Any], value: String) = s"$value instanceof ${clazz.getSimpleName}? 
${value}.copy() : $value" val genFunctionValue: String = lambdaFunction.dataType match { case StructType(_) => makeCopyIfInstanceOf(classOf[UnsafeRow], genFunction.value) case ArrayType(_, _) => makeCopyIfInstanceOf(classOf[UnsafeArrayData], genFunction.value) case MapType(_, _, _) => makeCopyIfInstanceOf(classOf[UnsafeMapData], genFunction.value) case _ => genFunction.value } ``` The Unsafe-* family of types are all Java types, so they're okay. ### Does this PR introduce _any_ user-facing change? Fixes a bug that throws an error when using `ExpressionEncoder` on some nested Scala types, otherwise no changes. ### How was this patch tested? Added a test case to `org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite`. It'll fail on JDK8u before the fix, and pass after the fix. Closes #31888 from maropu/SPARK-34596-BRANCH2.4. Lead-authored-by: Takeshi Yamamuro <yamamuro@apache.org> Co-authored-by: Kris Mok <kris.mok@databricks.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 24 March 2021, 17:37:32 UTC |

| e756130 | Lena | 23 March 2021, 07:13:32 UTC | [MINOR][DOCS] Updating the link for Azure Data Lake Gen 2 in docs ### What changes were proposed in this pull request? The current link for `Azure Blob Storage and Azure Datalake Gen 2` leads to AWS information. This PR replaces it with a link to the right page. ### Why are the changes needed? So that users can reach the correct page. ### Does this PR introduce _any_ user-facing change? Yes, it fixes the link. ### How was this patch tested? N/A Closes #31938 from lenadroid/patch-1. Authored-by: Lena <alehall@microsoft.com> Signed-off-by: Max Gekk <max.gekk@gmail.com> (cherry picked from commit d32bb4e5ee4718741252c46c50a40810b722f12d) Signed-off-by: Max Gekk <max.gekk@gmail.com> | 23 March 2021, 07:18:00 UTC |

| 5685d84 | Peter Toth | 22 March 2021, 16:28:05 UTC | [SPARK-34726][SQL][2.4] Fix collectToPython timeouts ### What changes were proposed in this pull request? One of our customers frequently encounters `"serve-DataFrame" java.net.SocketTimeoutException: Accept timed` errors in PySpark because `Dataset.collectToPython()` in Spark 2.4 does the following: 1. Collects the results 2. Opens up a socket server that then listens for the connection from the Python side 3. Runs the event listeners as part of `withAction` on the same thread, as SPARK-25680 is not available in Spark 2.4 4. Returns the address of the socket server to Python 5. The Python side connects to the socket server and fetches the data. As the customer has a custom, long-running event listener, the time between 2. and 5. is frequently longer than the default connection timeout. Increasing the connect timeout is not a good solution, as we don't know how long the listeners can take. ### Why are the changes needed? This PR simply moves the socket server creation (2.) after running the listeners (3.). I think this approach has a minor side effect: errors in socket server creation are not reported as `onFailure` events. However, errors that happen while opening the connection from the Python side, or during data transfer from the JVM to Python, are currently not reported as events either, so IMO this is not a big change. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Added new UT + manual test. Closes #31818 from peter-toth/SPARK-34726-fix-collectToPython-timeouts-2.4. Lead-authored-by: Peter Toth <ptoth@cloudera.com> Co-authored-by: Peter Toth <peter.toth@gmail.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 22 March 2021, 16:28:05 UTC |

| ce58e05 | Wenchen Fan | 20 March 2021, 02:09:50 UTC | [SPARK-34719][SQL][2.4] Correctly resolve the view query with duplicated column names backport https://github.com/apache/spark/pull/31811 to 2.4 ### What changes were proposed in this pull request? For permanent views (and the new SQL temp view in Spark 3.1), we store the view SQL text and re-parse/analyze the view SQL text when reading the view. In the case of `SELECT * FROM ...`, we want to avoid view schema change (e.g. the referenced table changes its schema) and will record the view query output column names when creating the view, so that when reading the view we can add a `SELECT recorded_column_names FROM ...` to retain the original view query schema. In Spark 3.1 and before, the final SELECT is added after the analysis phase: https://github.com/apache/spark/blob/branch-3.1/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/view.scala#L67 If the view query has duplicated output column names, we always pick the first column when reading a view. A simple repro: ``` scala> sql("create view c(x, y) as select 1 a, 2 a") res0: org.apache.spark.sql.DataFrame = [] scala> sql("select * from c").show +---+---+ | x| y| +---+---+ | 1| 1| +---+---+ ``` In the master branch, we will fail at view reading time due to https://github.com/apache/spark/commit/b891862fb6b740b103d5a09530626ee4e0e8f6e3 , which adds the final SELECT during analysis, so that the query fails with `Reference 'a' is ambiguous`. This PR proposes to resolve the view query output column names from the matching attributes by ordinal. For example, with `create view c(x, y) as select 1 a, 2 a`, the view query output column names are `[a, a]`. When reading the view, there are 2 matching attributes (e.g. `[a#1, a#2]`) and we can simply match them by ordinal. 
A negative example is ``` create table t(a int) create view v as select *, 1 as col from t replace table t(a int, col int) ``` When reading the view, the view query output column names are `[a, col]`, and there are two matching attributes of `col`, so we should fail the query. See the tests for details. ### Why are the changes needed? bug fix ### Does this PR introduce _any_ user-facing change? yes ### How was this patch tested? new test Closes #31894 from cloud-fan/backport. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org> | 22 March 2021, 15:52:02 UTC |

| 29b981b | Dongjoon Hyun | 21 March 2021, 21:08:34 UTC | [SPARK-34811][CORE] Redact fs.s3a.access.key like secret and token ### What changes were proposed in this pull request? Just as we redact secrets and tokens, this PR aims to redact the access key. ### Why are the changes needed? The access key is also worth hiding. ### Does this PR introduce _any_ user-facing change? This will hide this information from SparkUI (`Spark Properties` and `Hadoop Properties` and logs). ### How was this patch tested? Pass the newly updated UT. Closes #31912 from dongjoon-hyun/SPARK-34811. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 3c32b54a0fbdc55c503bc72a3d39d58bf99e3bfa) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 21 March 2021, 21:09:13 UTC |

| 7879a0c | Vinoo Ganesh | 16 January 2019, 19:43:10 UTC | [SPARK-26625] Add oauthToken to spark.redaction.regex ## What changes were proposed in this pull request? The regex (spark.redaction.regex) that is used to decide which config properties or environment settings are sensitive should also include oauthToken to match spark.kubernetes.authenticate.submission.oauthToken ## How was this patch tested? Simple regex addition - happy to add a test if needed. Author: Vinoo Ganesh <vganesh@palantir.com> Closes #23555 from vinooganesh/vinooganesh/SPARK-26625. (cherry picked from commit 01301d09721cc12f1cc66ab52de3da117f5d33e6) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 21 March 2021, 07:01:20 UTC |
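
Key-based redaction of the kind the two commits above extend can be sketched as follows. This is an illustration only: the class `RedactionSketch`, its `redact` helper, the replacement text, and the pattern itself are assumptions made for the example and are not claimed to match Spark's actual default for `spark.redaction.regex`.

```java
import java.util.regex.Pattern;

class RedactionSketch {
    // Hypothetical pattern for illustration; not necessarily Spark's default value.
    static final Pattern SENSITIVE =
        Pattern.compile("(?i)secret|password|token|access[.]key");

    // Returns a placeholder when the property name looks sensitive, else the value.
    static String redact(String key, String value) {
        return SENSITIVE.matcher(key).find() ? "*********(redacted)" : value;
    }

    public static void main(String[] args) {
        System.out.println(redact("spark.kubernetes.authenticate.submission.oauthToken", "abc123"));
        System.out.println(redact("fs.s3a.access.key", "AKIAEXAMPLE"));
        System.out.println(redact("spark.app.name", "demo"));
    }
}
```

The match is made on the property name with `find()`, so a sensitive word anywhere in the key is enough to hide the value; the `(?i)` flag makes `oauthToken` match `token`.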

| 59e4ae4 | Liang-Chi Hsieh | 20 March 2021, 02:26:01 UTC | [SPARK-34776][SQL][3.0][2.4] Window class should override producedAttributes ### What changes were proposed in this pull request? This patch proposes to override `producedAttributes` of the `Window` class. ### Why are the changes needed? This is a backport of #31897 to branch-3.0/2.4. Unlike in the original PR, nested column pruning does not yet allow pushing through `Window` in branch-3.0/2.4. However, `Window` does not override `producedAttributes`, which is wrong and could cause issues, so this backports only the `Window`-related change. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing tests. Closes #31904 from viirya/SPARK-34776-3.0. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org> (cherry picked from commit 828cf76bced1b70769b0453f3e9ba95faaa84e39) Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org> | 20 March 2021, 02:26:24 UTC |

| c5d81cb | yangjie01 | 19 March 2021, 21:12:51 UTC | [SPARK-34774][BUILD][2.4] Ensure change-scala-version.sh update scala.version in parent POM correctly ### What changes were proposed in this pull request? After SPARK-34507, executing the `change-scala-version.sh` script updates `scala.version` in the parent POM, but if we execute the following commands in order: ``` dev/change-scala-version.sh 2.12 dev/change-scala-version.sh 2.11 git status ``` the following git diff is generated: ``` diff --git a/pom.xml b/pom.xml index f4a50dc5c1..89fd7d88af 100644 --- a/pom.xml +++ b/pom.xml @@ -155,7 +155,7 @@ <commons.math3.version>3.4.1</commons.math3.version> <commons.collections.version>3.2.2</commons.collections.version> - <scala.version>2.11.12</scala.version> + <scala.version>2.12.10</scala.version> <scala.binary.version>2.11</scala.binary.version> <codehaus.jackson.version>1.9.13</codehaus.jackson.version> <fasterxml.jackson.version>2.6.7</fasterxml.jackson.version> ``` It seems the `scala.version` property was not updated correctly. This PR therefore adds an extra `scala.version` to the scala-2.11 profile so that change-scala-version.sh updates the public `scala.version` property correctly. ### Why are the changes needed? Bug fix. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? **Manual test** Execute the following commands in order: ``` dev/change-scala-version.sh 2.12 dev/change-scala-version.sh 2.11 git status ``` **Before** ``` diff --git a/pom.xml b/pom.xml index f4a50dc5c1..89fd7d88af 100644 --- a/pom.xml +++ b/pom.xml @@ -155,7 +155,7 @@ <commons.math3.version>3.4.1</commons.math3.version> <commons.collections.version>3.2.2</commons.collections.version> - <scala.version>2.11.12</scala.version> + <scala.version>2.12.10</scala.version> <scala.binary.version>2.11</scala.binary.version> <codehaus.jackson.version>1.9.13</codehaus.jackson.version> <fasterxml.jackson.version>2.6.7</fasterxml.jackson.version> ``` **After** No git diff. 
Closes #31893 from LuciferYang/SPARK-34774-24. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Sean Owen <srowen@gmail.com> | 19 March 2021, 21:12:51 UTC |

| 3c627ad | Dongjoon Hyun | 16 March 2021, 01:49:54 UTC | [MINOR][SQL] Remove unused variable in NewInstance.constructor ### What changes were proposed in this pull request? This PR removes one unused variable in `NewInstance.constructor`. ### Why are the changes needed? This looks like a variable for debugging at the initial commit of SPARK-23584 . - https://github.com/apache/spark/commit/1b08c4393cf48e21fea9914d130d8d3bf544061d#diff-2a36e31684505fd22e2d12a864ce89fd350656d716a3f2d7789d2cdbe38e15fbR461 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. Closes #31838 from dongjoon-hyun/minor-object. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 0a70dff0663320cabdbf4e4ecb771071582f9417) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 16 March 2021, 01:50:43 UTC |

| 7b7a8fe | Dongjoon Hyun | 15 March 2021, 09:30:54 UTC | [SPARK-34743][SQL][TESTS] ExpressionEncoderSuite should use deepEquals when we expect `array of array` ### What changes were proposed in this pull request? This PR aims to make `ExpressionEncoderSuite` use `deepEquals` instead of `equals` when `input` is `array of array`. This comparison code itself was added by SPARK-11727 in Apache Spark 1.6.0. ### Why are the changes needed? Currently, the interpreted mode fails for `array of array` because the following line is used. ``` Arrays.equals(b1.asInstanceOf[Array[AnyRef]], b2.asInstanceOf[Array[AnyRef]]) ``` ### Does this PR introduce _any_ user-facing change? No. This is a test-only PR. ### How was this patch tested? Pass the existing CIs. Closes #31837 from dongjoon-hyun/SPARK-34743. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 363a7f0722522898337b926224bf46bb9c32176c) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 15 March 2021, 09:38:52 UTC |
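
The difference between the two comparisons named in the commit above shows up as soon as the elements are themselves arrays. A minimal sketch, with a hypothetical class name and sample data chosen only for illustration:

```java
import java.util.Arrays;

class NestedArrayEquality {
    // Two structurally identical "array of array" values with distinct inner arrays.
    static Object[] sample() {
        return new Object[] { new int[] {1, 2}, new int[] {3} };
    }

    // Element-wise equals(): inner arrays are compared by reference.
    static boolean shallow() {
        return Arrays.equals(sample(), sample());
    }

    // Recursive comparison: inner arrays are compared by content.
    static boolean deep() {
        return Arrays.deepEquals(sample(), sample());
    }

    public static void main(String[] args) {
        System.out.println("Arrays.equals:     " + shallow()); // false
        System.out.println("Arrays.deepEquals: " + deep());    // true
    }
}
```

`Arrays.equals` calls `equals` on each element, and arrays inherit reference equality from `Object`, so equal-looking nested arrays compare as different unless `deepEquals` is used.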

| 41f46cf | Dongjoon Hyun | 12 March 2021, 12:30:46 UTC | [SPARK-34724][SQL] Fix Interpreted evaluation by using getMethod instead of getDeclaredMethod This bug was introduced by SPARK-23583 at Apache Spark 2.4.0. This PR aims to use `getMethod` instead of `getDeclaredMethod`. ```scala - obj.getClass.getDeclaredMethod(functionName, argClasses: _*) + obj.getClass.getMethod(functionName, argClasses: _*) ``` `getDeclaredMethod` does not search the super class's method. To invoke `GenericArrayData.toIntArray`, we need to use `getMethod` because it's declared at the super class `ArrayData`. ``` [info] - encode/decode for array of int: [I74655d03 (interpreted path) *** FAILED *** (14 milliseconds) [info] Exception thrown while decoding [info] Converted: [0,1000000020,3,0,ffffff850000001f,4] [info] Schema: value#680 [info] root [info] -- value: array (nullable = true) [info] |-- element: integer (containsNull = false) [info] [info] [info] Encoder: [info] class[value[0]: array<int>] (ExpressionEncoderSuite.scala:578) [info] org.scalatest.exceptions.TestFailedException: [info] at org.scalatest.Assertions.newAssertionFailedException(Assertions.scala:472) [info] at org.scalatest.Assertions.newAssertionFailedException$(Assertions.scala:471) [info] at org.scalatest.funsuite.AnyFunSuite.newAssertionFailedException(AnyFunSuite.scala:1563) [info] at org.scalatest.Assertions.fail(Assertions.scala:949) [info] at org.scalatest.Assertions.fail$(Assertions.scala:945) [info] at org.scalatest.funsuite.AnyFunSuite.fail(AnyFunSuite.scala:1563) [info] at org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite.$anonfun$encodeDecodeTest$1(ExpressionEncoderSuite.scala:578) [info] at org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite.verifyNotLeakingReflectionObjects(ExpressionEncoderSuite.scala:656) [info] at org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite.$anonfun$testAndVerifyNotLeakingReflectionObjects$2(ExpressionEncoderSuite.scala:669) [info] at 
org.apache.spark.sql.catalyst.plans.CodegenInterpretedPlanTest.$anonfun$test$4(PlanTest.scala:50) [info] at org.apache.spark.sql.catalyst.plans.SQLHelper.withSQLConf(SQLHelper.scala:54) [info] at org.apache.spark.sql.catalyst.plans.SQLHelper.withSQLConf$(SQLHelper.scala:38) [info] at org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite.withSQLConf(ExpressionEncoderSuite.scala:118) [info] at org.apache.spark.sql.catalyst.plans.CodegenInterpretedPlanTest.$anonfun$test$3(PlanTest.scala:50) ... [info] Cause: java.lang.RuntimeException: Error while decoding: java.lang.NoSuchMethodException: org.apache.spark.sql.catalyst.util.GenericArrayData.toIntArray() [info] mapobjects(lambdavariable(MapObject, IntegerType, false, -1), assertnotnull(lambdavariable(MapObject, IntegerType, false, -1)), input[0, array<int>, true], None).toIntArray [info] at org.apache.spark.sql.catalyst.encoders.ExpressionEncoder$Deserializer.apply(ExpressionEncoder.scala:186) [info] at org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite.$anonfun$encodeDecodeTest$1(ExpressionEncoderSuite.scala:576) [info] at org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite.verifyNotLeakingReflectionObjects(ExpressionEncoderSuite.scala:656) [info] at org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite.$anonfun$testAndVerifyNotLeakingReflectionObjects$2(ExpressionEncoderSuite.scala:669) ``` This causes a runtime exception when we use the interpreted mode. Pass the modified unit test case. Closes #31816 from dongjoon-hyun/SPARK-34724. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 9a7977933fd08d0e95ffa59161bed3b10bc9ca61) Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 99a6a1af6d3f2c28eb65decc17ee1a015f89f0d2) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 12 March 2021, 12:32:42 UTC |
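
The lookup difference behind the `NoSuchMethodException` in the commit above can be shown with two small classes. This is a sketch under assumptions: `BaseData` and `GenericData` are hypothetical stand-ins for `ArrayData` and `GenericArrayData`, not the Spark classes.

```java
import java.lang.reflect.Method;

class ReflectionLookup {
    // Hypothetical stand-ins: the method is declared only on the superclass.
    public static abstract class BaseData {
        public int[] toIntArray() { return new int[] {1, 2, 3}; }
    }
    public static class GenericData extends BaseData { }

    // getDeclaredMethod only sees methods declared directly on GenericData.
    static boolean declaredLookupFinds() {
        try {
            GenericData.class.getDeclaredMethod("toIntArray");
            return true;
        } catch (NoSuchMethodException e) {
            return false;
        }
    }

    // getMethod also sees public methods inherited from superclasses.
    static boolean publicLookupFinds() {
        try {
            GenericData.class.getMethod("toIntArray");
            return true;
        } catch (NoSuchMethodException e) {
            return false;
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("getDeclaredMethod finds it: " + declaredLookupFinds()); // false
        System.out.println("getMethod finds it:         " + publicLookupFinds());   // true
        Method m = GenericData.class.getMethod("toIntArray");
        int[] result = (int[]) m.invoke(new GenericData());
        System.out.println("invoked, length = " + result.length);
    }
}
```

Note the trade-off: `getMethod` only returns public members, while `getDeclaredMethod` also returns non-public ones but ignores inheritance.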

| 7e0fbe0 | William Hyun | 25 May 2020, 01:25:43 UTC | [SPARK-31807][INFRA] Use python 3 style in release-build.sh ### What changes were proposed in this pull request? This PR aims to use the style that is compatible with both python 2 and 3. ### Why are the changes needed? This will help python 3 migration. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual. Closes #28632 from williamhyun/use_python3_style. Authored-by: William Hyun <williamhyun3@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 753636e86b1989a9b43ec691de5136a70d11f323) Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 40e5de54e6109762d3ee85197da4fa1a8b20e13b) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 12 March 2021, 00:32:16 UTC |

| 6ec74e2 | Dongjoon Hyun | 11 March 2021, 07:41:49 UTC | [SPARK-34696][SQL][TESTS] Fix CodegenInterpretedPlanTest to generate correct test cases SPARK-23596 added `CodegenInterpretedPlanTest` at Apache Spark 2.4.0 in a wrong way because `withSQLConf` depends on the execution time `SQLConf.get` instead of `test` function declaration time. So, the following code executes the test twice without controlling the `CodegenObjectFactoryMode`. This PR aims to fix it correct and introduce a new function `testFallback`. ```scala trait CodegenInterpretedPlanTest extends PlanTest { override protected def test( testName: String, testTags: Tag*)(testFun: => Any)(implicit pos: source.Position): Unit = { val codegenMode = CodegenObjectFactoryMode.CODEGEN_ONLY.toString val interpretedMode = CodegenObjectFactoryMode.NO_CODEGEN.toString withSQLConf(SQLConf.CODEGEN_FACTORY_MODE.key -> codegenMode) { super.test(testName + " (codegen path)", testTags: _*)(testFun)(pos) } withSQLConf(SQLConf.CODEGEN_FACTORY_MODE.key -> interpretedMode) { super.test(testName + " (interpreted path)", testTags: _*)(testFun)(pos) } } } ``` 1. We need to use like the following. ```scala super.test(testName + " (codegen path)", testTags: _*)( withSQLConf(SQLConf.CODEGEN_FACTORY_MODE.key -> codegenMode) { testFun })(pos) super.test(testName + " (interpreted path)", testTags: _*)( withSQLConf(SQLConf.CODEGEN_FACTORY_MODE.key -> interpretedMode) { testFun })(pos) ``` 2. After we fix this behavior with the above code, several test cases including SPARK-34596 and SPARK-34607 fail because they didn't work at both `CODEGEN` and `INTERPRETED` mode. Those test cases only work at `FALLBACK` mode. So, inevitably, we need to introduce `testFallback`. No. Pass the CIs. Closes #31766 from dongjoon-hyun/SPARK-34596-SPARK-34607. 
Lead-authored-by: Dongjoon Hyun <dhyun@apple.com> Co-authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 5c4d8f95385ac97a66e5b491b5883ec770ae85bd) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 11 March 2021, 07:51:45 UTC |
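
The timing problem described in the commit above (configuration applied when a test is declared rather than when it runs) can be modelled without Spark or ScalaTest. In this sketch every name is a hypothetical stand-in: `mode` plays the role of the SQL configuration, `withMode` the role of `withSQLConf`, and `test` the role of a framework method that only registers the body for later execution.

```java
import java.util.ArrayList;
import java.util.List;

class DeferredConfigSketch {
    static String mode = "FALLBACK";                        // stand-in for the active config
    static final List<Runnable> registered = new ArrayList<>();
    static final List<String> observed = new ArrayList<>();

    // Sets the mode for the duration of body, then restores the previous value.
    static void withMode(String m, Runnable body) {
        String old = mode;
        mode = m;
        try { body.run(); } finally { mode = old; }
    }

    // Like a test framework: registers the body now, runs it later.
    static void test(Runnable body) { registered.add(body); }

    // Declares two tests, runs them, and returns the mode each one observed.
    static List<String> run(boolean wrapBody) {
        registered.clear();
        observed.clear();
        Runnable testFun = () -> observed.add(mode);
        for (String m : new String[] {"CODEGEN_ONLY", "NO_CODEGEN"}) {
            if (wrapBody) {
                test(() -> withMode(m, testFun));           // config applied at execution time
            } else {
                withMode(m, () -> test(testFun));           // config applied at declaration time only
            }
        }
        for (Runnable r : registered) r.run();
        return new ArrayList<>(observed);
    }

    public static void main(String[] args) {
        System.out.println("wrapping the registration: " + run(false)); // [FALLBACK, FALLBACK]
        System.out.println("wrapping the test body:    " + run(true));  // [CODEGEN_ONLY, NO_CODEGEN]
    }
}
```

When the wrapper surrounds the registration, the mode has already been restored by the time the bodies run, so both tests see the default; wrapping the body itself is what makes each test observe its intended mode.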

| 906df15 | Liang-Chi Hsieh | 11 March 2021, 02:42:11 UTC | [SPARK-34703][PYSPARK][2.4] Fix pyspark test when using sort_values on Pandas ### What changes were proposed in this pull request? This patch fixes a few PySpark test errors related to Pandas, in order to restore 2.4 Jenkins builds. ### Why are the changes needed? Some APIs have changed since Pandas 0.24. If an index name and a column name are the same, `sort_values` throws an error. Three PySpark tests currently fail in the Jenkins 2.4 build: `test_column_order`, `test_complex_groupby`, `test_udf_with_key`: ``` ====================================================================== ERROR: test_column_order (pyspark.sql.tests.GroupedMapPandasUDFTests) ---------------------------------------------------------------------- Traceback (most recent call last): File "/spark/python/pyspark/sql/tests.py", line 5996, in test_column_order expected = pd_result.sort_values(['id', 'v']).reset_index(drop=True) File "/usr/local/lib/python2.7/dist-packages/pandas/core/frame.py", line 4711, in sort_values for x in by] File "/usr/local/lib/python2.7/dist-packages/pandas/core/generic.py", line 1702, in _get_label_or_level_values self._check_label_or_level_ambiguity(key, axis=axis) File "/usr/local/lib/python2.7/dist-packages/pandas/core/generic.py", line 1656, in _check_label_or_level_ambiguity raise ValueError(msg) ValueError: 'id' is both an index level and a column label, which is ambiguous. 
====================================================================== ERROR: test_complex_groupby (pyspark.sql.tests.GroupedMapPandasUDFTests) ---------------------------------------------------------------------- Traceback (most recent call last): File "/spark/python/pyspark/sql/tests.py", line 5765, in test_complex_groupby expected = expected.sort_values(['id', 'v']).reset_index(drop=True) File "/usr/local/lib/python2.7/dist-packages/pandas/core/frame.py", line 4711, in sort_values for x in by] File "/usr/local/lib/python2.7/dist-packages/pandas/core/generic.py", line 1702, in _get_label_or_level_values self._check_label_or_level_ambiguity(key, axis=axis) File "/usr/local/lib/python2.7/dist-packages/pandas/core/generic.py", line 1656, in _check_label_or_level_ambiguity raise ValueError(msg) ValueError: 'id' is both an index level and a column label, which is ambiguous. ====================================================================== ERROR: test_udf_with_key (pyspark.sql.tests.GroupedMapPandasUDFTests) ---------------------------------------------------------------------- Traceback (most recent call last): File "/spark/python/pyspark/sql/tests.py", line 5922, in test_udf_with_key .sort_values(['id', 'v']).reset_index(drop=True) File "/usr/local/lib/python2.7/dist-packages/pandas/core/frame.py", line 4711, in sort_values for x in by] File "/usr/local/lib/python2.7/dist-packages/pandas/core/generic.py", line 1702, in _get_label_or_level_values self._check_label_or_level_ambiguity(key, axis=axis) File "/usr/local/lib/python2.7/dist-packages/pandas/core/generic.py", line 1656, in _check_label_or_level_ambiguity raise ValueError(msg) ValueError: 'id' is both an index level and a column label, which is ambiguous. ``` ### Does this PR introduce _any_ user-facing change? No, dev only. ### How was this patch tested? Verified by running the tests locally. Closes #31803 from viirya/SPARK-34703. 
Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 11 March 2021, 02:42:11 UTC |

| 7985360 | Sean Owen | 11 March 2021, 01:02:24 UTC | [SPARK-34507][BUILD] Update scala.version in parent POM when changing Scala version for cross-build ### What changes were proposed in this pull request? The `change-scala-version.sh` script updates Scala versions across the build for cross-build purposes. It manually changes `scala.binary.version` but not `scala.version`. ### Why are the changes needed? It seems that this has always been an oversight, and the cross-built builds of Spark have an incorrect scala.version. See 2.4.5's 2.12 POM for example, which shows a Scala 2.11 version. https://search.maven.org/artifact/org.apache.spark/spark-core_2.12/2.4.5/pom More comments in the JIRA. ### Does this PR introduce _any_ user-facing change? Should be a build-only bug fix. ### How was this patch tested? Existing tests, but really N/A Closes #31801 from srowen/SPARK-34507. Authored-by: Sean Owen <srowen@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 11 March 2021, 01:39:32 UTC |

| 191b24c | Liang-Chi Hsieh | 09 March 2021, 12:30:42 UTC | [SPARK-34672][BUILD][2.4] Fix docker file for creating release ### What changes were proposed in this pull request? This patch pins the pip and setuptools versions used when building the `spark-rm` docker image. ### Why are the changes needed? The `Dockerfile` of `spark-rm` in `branch-2.4` does not currently work. It tries to install the latest `pip` and `setuptools`, which are not compatible with Python 2, so building the docker image fails. ### Does this PR introduce _any_ user-facing change? No, dev only. ### How was this patch tested? Ran release script locally. Closes #31787 from viirya/fix-release-script. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 09 March 2021, 12:30:42 UTC |

| eb4601e | Baohe Zhang | 04 March 2021, 23:37:33 UTC | [SPARK-32924][WEBUI] Make duration column in master UI sorted in the correct order ### What changes were proposed in this pull request? Make the "duration" column in the standalone mode master UI sort by numeric duration, so that the column sorts in the correct order. ### Why are the changes needed? Fix a UI bug to make the sorting consistent across different pages. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Ran several apps with different durations and verified the duration column on the master page can be sorted correctly. Closes #31743 from baohe-zhang/SPARK-32924. Authored-by: Baohe Zhang <baohe.zhang@verizonmedia.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 9ac5ee2e17ca491eabf2e6e7d33ce7cfb5a002a7) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 04 March 2021, 23:38:10 UTC |

| 96f5137 | yuhaiyang | 25 February 2021, 19:00:51 UTC | [SPARK-30228][BUILD][2.4] Update zstd-jni to 1.4.4-3 ### What changes were proposed in this pull request? Change zstd-jni version to version 1.4.4-3 ### Why are the changes needed? The old zstd-jni (tag 1.3.3-2) may read less data than expected during shuffle read. The `ZstdInputStream` in zstd-jni (tag 1.3.3-2) may return 0 from a read call, which does not meet the contract of `InputStream`: an `InputStream` will not return 0 unless len is 0. Spark wraps `ZstdInputStream` in a `BufferedInputStream`; when the `ZstdInputStream` read call returns 0, `BufferedInputStream` treats the 0 as the end of the read and exits, which can lead to data loss. zstd-jni issues: https://github.com/luben/zstd-jni/issues/159 zstd-jni commits: https://github.com/luben/zstd-jni/commit/7eec5581b8ccb0d98350ad5ba422337eebbbe70e ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? Closes #31645 from seayoun/yuhaiyang_update_zstd_jni. Authored-by: yuhaiyang <yuhaiyang@yuhaiyangs-MacBook-Pro.local> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 25 February 2021, 19:00:51 UTC |

| 9807250 | Kousuke Saruta | 20 February 2021, 03:43:18 UTC | [SPARK-34449][BUILD][2.4] Upgrade Jetty to fix CVE-2020-27218 ### What changes were proposed in this pull request? This PR backports #31574 (SPARK-34449) for `branch-2.4`, upgrading Jetty from `9.4.34` to `9.4.36`. ### Why are the changes needed? CVE-2020-27218 affects currently used Jetty 9.4.34. https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2020-27218 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Jenkins and GA. Closes #31583 from sarutak/SPARK-34449-branch-2.4. Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 20 February 2021, 03:43:18 UTC |

| c9683be | yzjg | 19 February 2021, 01:45:21 UTC | [MINOR][SQL][DOCS] Fix the comments in the example at window function `functions.scala` window function has a comment error in the field name. The column should be `time` per `timestamp:TimestampType`. To deliver the correct documentation and examples. Yes, it fixes the user-facing docs. CI builds in this PR should test the documentation build. Closes #31582 from yzjg/yzjg-patch-1. Authored-by: yzjg <785246661@qq.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 26548edfa2445b009f63bbdbe810cdb6c289c18d) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 19 February 2021, 01:46:40 UTC |

| fa78e68 | Dongjoon Hyun | 09 February 2021, 05:47:23 UTC | [SPARK-34407][K8S] KubernetesClusterSchedulerBackend.stop should clean up K8s resources This PR aims to fix `KubernetesClusterSchedulerBackend.stop` to wrap `super.stop` with `Utils.tryLogNonFatalError`. [CoarseGrainedSchedulerBackend.stop](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/cluster/CoarseGrainedSchedulerBackend.scala#L559) may throw `SparkException` and this causes K8s resource (pod and configmap) leakage. No. This is a bug fix. Pass the CI with the newly added test case. Closes #31533 from dongjoon-hyun/SPARK-34407. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit ea339c38b43c59931257386efdd490507f7de64d) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 09 February 2021, 05:53:26 UTC |
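
The failure mode described in SPARK-34407 can be sketched in plain Scala. This is a minimal illustration only, not Spark's code: `tryLogNonFatal`, `parentStop`, and `resourcesCleaned` are hypothetical stand-ins for `Utils.tryLogNonFatalError`, `super.stop`, and the K8s pod/configmap cleanup.

```scala
import scala.util.control.NonFatal

// Hypothetical stand-in for Utils.tryLogNonFatalError:
// run the block, log any non-fatal exception, and keep going.
def tryLogNonFatal(block: => Unit): Unit =
  try block
  catch { case NonFatal(e) => println(s"Ignored non-fatal error: ${e.getMessage}") }

// Hypothetical parent stop() that fails, as the commit says super.stop may.
def parentStop(): Unit = throw new RuntimeException("stop failed")

// Flag standing in for the K8s resource cleanup (assumption for illustration).
var resourcesCleaned = false

def stop(): Unit = {
  tryLogNonFatal(parentStop())               // exception is logged, not propagated
  tryLogNonFatal { resourcesCleaned = true } // cleanup still runs afterwards
}

stop()
```

Without the wrapper around `parentStop()`, the exception would leave `stop()` before the cleanup step runs, which is the leak the commit describes.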

| e7acca2 | Kousuke Saruta | 04 February 2021, 01:51:56 UTC | [SPARK-34318][SQL][2.4] Dataset.colRegex should work with column names and qualifiers which contain newlines ### What changes were proposed in this pull request? Backport of #31426 for the record. This PR fixes an issue that `Dataset.colRegex` doesn't work with column names or qualifiers which contain newlines. In the current master, if column names or qualifiers passed to `colRegex` contain newlines, it throws exception. ``` val df = Seq(1, 2, 3).toDF("test\n_column").as("test\n_table") val col1 = df.colRegex("`tes.*\n.*mn`") org.apache.spark.sql.AnalysisException: Cannot resolve column name "`tes.* .*mn`" among (test _column) at org.apache.spark.sql.Dataset.org$apache$spark$sql$Dataset$$resolveException(Dataset.scala:272) at org.apache.spark.sql.Dataset.$anonfun$resolve$1(Dataset.scala:263) at scala.Option.getOrElse(Option.scala:189) at org.apache.spark.sql.Dataset.resolve(Dataset.scala:263) at org.apache.spark.sql.Dataset.colRegex(Dataset.scala:1407) ... 47 elided val col2 = df.colRegex("test\n_table.`tes.*\n.*mn`") org.apache.spark.sql.AnalysisException: Cannot resolve column name "test _table.`tes.* .*mn`" among (test _column) at org.apache.spark.sql.Dataset.org$apache$spark$sql$Dataset$$resolveException(Dataset.scala:272) at org.apache.spark.sql.Dataset.$anonfun$resolve$1(Dataset.scala:263) at scala.Option.getOrElse(Option.scala:189) at org.apache.spark.sql.Dataset.resolve(Dataset.scala:263) at org.apache.spark.sql.Dataset.colRegex(Dataset.scala:1407) ... 47 elided ``` ### Why are the changes needed? Column names and qualifiers can contain newlines but `colRegex` can't work with them, so it's a bug. ### Does this PR introduce _any_ user-facing change? Yes. users can pass column names and qualifiers even though they contain newlines. ### How was this patch tested? New test. Closes #31459 from sarutak/SPARK-34318-branch-2.4. Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 04 February 2021, 01:51:56 UTC |

| 90db0ab | Prashant Sharma | 03 February 2021, 06:02:35 UTC | [SPARK-34327][BUILD] Strip passwords from inlining into build information while releasing ### What changes were proposed in this pull request? Strip passwords from getting inlined into build information, inadvertently. ` https://user:pass@domain/foo -> https://domain/foo` ### Why are the changes needed? This can be a serious security issue, esp. during a release. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Tested by executing the following command on both Mac OSX and Ubuntu. ``` echo url=$(git config --get remote.origin.url | sed 's|https://\(.*\)@\(.*\)|https://\2|') ``` Closes #31436 from ScrapCodes/strip_pass. Authored-by: Prashant Sharma <prashsh1@in.ibm.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 89bf2afb3337a44f34009a36cae16dd0ff86b353) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 03 February 2021, 06:03:23 UTC |
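
The intent of SPARK-34327 (dropping the user and password part of an HTTPS remote URL) can also be sketched in Scala. This is a rough equivalent for illustration, not the build script itself: `stripCredentials` is a hypothetical helper, and its pattern is deliberately stricter than the `sed` expression in the commit, since it only matches text before the first `/` or `@`.

```scala
// Hypothetical helper: drop "user:password@" from an HTTPS remote URL.
// URLs without credentials are returned unchanged.
def stripCredentials(url: String): String =
  url.replaceFirst("^https://[^/@]*@", "https://")

val sanitized = stripCredentials("https://user:pass@domain/foo")
val untouched = stripCredentials("https://domain/foo")
```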

| 5f4e9ea | Wenchen Fan | 03 February 2021, 00:26:36 UTC | [SPARK-34212][SQL][FOLLOWUP] Parquet vectorized reader can read decimal fields with a larger precision ### What changes were proposed in this pull request? This is a followup of https://github.com/apache/spark/pull/31357 #31357 added a very strong restriction to the vectorized parquet reader, that the spark data type must exactly match the physical parquet type, when reading decimal fields. This restriction is actually not necessary, as we can safely read parquet decimals with a larger precision. This PR relaxes this restriction a little bit. ### Why are the changes needed? To not fail queries unnecessarily. ### Does this PR introduce _any_ user-facing change? Yes, now users can read parquet decimals with mismatched `DecimalType` as long as the scale is the same and precision is larger. ### How was this patch tested? updated test. Closes #31443 from cloud-fan/improve. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 00120ea53748d84976e549969f43cf2a50778c1c) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 03 February 2021, 00:27:36 UTC |
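
The compatibility rule stated in SPARK-34212 (same scale, larger precision) can be written as a small predicate. This is a sketch under assumptions, not the reader's actual check: `Dec` and `canReadAs` are hypothetical names, and treating an equal precision as acceptable is an assumption based on exact matches having been allowed before this change.

```scala
// Hypothetical model of a decimal type: total digits and digits after the point.
final case class Dec(precision: Int, scale: Int)

// Assumed rule: the scales must match, and the requested precision must be
// at least as large as the precision stored in the Parquet file.
def canReadAs(file: Dec, requested: Dec): Boolean =
  requested.scale == file.scale && requested.precision >= file.precision

val widening  = canReadAs(Dec(10, 2), Dec(18, 2)) // larger precision, same scale
val rescaling = canReadAs(Dec(10, 2), Dec(18, 3)) // scale differs
val narrowing = canReadAs(Dec(18, 2), Dec(10, 2)) // precision would shrink
```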

| 3696ba8 | yangjie01 | 02 February 2021, 00:26:36 UTC | [SPARK-34310][CORE][SQL][2.4] Replaces map and flatten with flatMap ### What changes were proposed in this pull request? Replaces `collection.map(f1).flatten(f2)` with `collection.flatMap` if possible. It's semantically consistent, but looks simpler. ### Why are the changes needed? Code simplification. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass the Jenkins or GitHub Action Closes #31417 from LuciferYang/SPARK-34310-24. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 02 February 2021, 00:26:36 UTC |
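
As a small illustration of the rewrite in SPARK-34310, the two forms below produce the same result on a plain `Seq`. The sample data and the names `lines`, `viaMapFlatten`, and `viaFlatMap` are made up for this sketch and are not taken from the Spark code base.

```scala
val lines = Seq("a b", "c d e")

// Before: build an intermediate Seq[Seq[String]], then flatten it.
val viaMapFlatten = lines.map(_.split(" ").toSeq).flatten

// After: map and flatten in one step with flatMap.
val viaFlatMap = lines.flatMap(_.split(" ").toSeq)
```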

| da3ccab | Liang-Chi Hsieh | 30 January 2021, 04:50:39 UTC | [SPARK-34270][SS] Combine StateStoreMetrics should not override StateStoreCustomMetric This patch proposes to sum up custom metric values instead of taking arbitrary one when combining `StateStoreMetrics`. For stateful join in structured streaming, we need to combine `StateStoreMetrics` from both left and right side. Currently we simply take arbitrary one from custom metrics with same name from left and right. By doing this we miss half of metric number. Yes, this corrects metrics collected for stateful join. Unit test. Closes #31369 from viirya/SPARK-34270. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 50d14c98c3828d8d9cc62ebc61ad4d20398ee6c6) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 30 January 2021, 05:16:54 UTC |
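
The difference between taking an arbitrary value and summing, as described in SPARK-34270, can be shown with plain maps. This is a minimal sketch, not the `StateStoreMetrics` implementation: `sumMetrics` and the metric names `rowsRead` and `bytes` are hypothetical.

```scala
// Hypothetical helper: merge two metric maps, summing values that share a name.
def sumMetrics(left: Map[String, Long], right: Map[String, Long]): Map[String, Long] =
  (left.keySet ++ right.keySet).map { name =>
    name -> (left.getOrElse(name, 0L) + right.getOrElse(name, 0L))
  }.toMap

val leftSide  = Map("rowsRead" -> 3L, "bytes" -> 10L)
val rightSide = Map("rowsRead" -> 4L)

// Summing keeps both sides' contributions.
val combined = sumMetrics(leftSide, rightSide)

// For comparison, `++` keeps only the right-hand value for a shared key,
// which loses the left side's count.
val overwritten = leftSide ++ rightSide
```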

| 6c8bc7c | Linhong Liu | 29 January 2021, 03:28:44 UTC | [SPARK-34260][SQL][2.4] Fix UnresolvedException when creating temp view twice ### What changes were proposed in this pull request? In PR #30140, it will compare new and old plans when replacing view and uncache data if the view has changed. But the compared new plan is not analyzed which will cause `UnresolvedException` when calling `sameResult`. So in this PR, we use the analyzed plan to compare to fix this problem. ### Why are the changes needed? bug fix ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? newly added tests Closes #31388 from linhongliu-db/SPARK-34260-2.4. Authored-by: Linhong Liu <linhong.liu@databricks.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 29 January 2021, 03:28:44 UTC |

| 953cc1c | Dongjoon Hyun | 28 January 2021, 21:06:42 UTC | [SPARK-34273][CORE] Do not reregister BlockManager when SparkContext is stopped This PR aims to prevent `HeartbeatReceiver` from asking `Executor` to re-register the block manager when the SparkContext is already stopped. Currently, `HeartbeatReceiver` blindly asks for re-registration on a new heartbeat message. However, when SparkContext is stopped, we don't need to re-register a new block manager. Re-registration causes unnecessary executor logs and a delay in job termination. No. Pass the CIs with the newly added test case. Closes #31373 from dongjoon-hyun/SPARK-34273. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit bc41c5a0e598e6b697ed61c33e1bea629dabfc57) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 28 January 2021, 21:14:35 UTC |

| 78bf448 | yangjie01 | 28 January 2021, 10:01:45 UTC | [SPARK-34275][CORE][SQL][MLLIB][2.4] Replaces filter and size with count ### What changes were proposed in this pull request? Use `count` to simplify `filter + size(or length)` operation. It's semantically consistent, but looks simpler. **Before** ``` seq.filter(p).size ``` **After** ``` seq.count(p) ``` ### Why are the changes needed? Code simplification. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass the Jenkins or GitHub Action Closes #31376 from LuciferYang/SPARK-34275-24. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 28 January 2021, 10:01:45 UTC |

| 86eb199 | Yuming Wang | 28 January 2021, 05:06:36 UTC | [SPARK-34268][SQL][DOCS] Correct the documentation of the concat_ws function ### What changes were proposed in this pull request? This PR corrects the documentation of the `concat_ws` function. ### Why are the changes needed? `concat_ws` doesn't need any str or array(str) arguments: ``` scala> sql("""select concat_ws("s")""").show +------------+ |concat_ws(s)| +------------+ | | +------------+ ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? ``` build/sbt "sql/testOnly *.ExpressionInfoSuite" ``` Closes #31370 from wangyum/SPARK-34268. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 01d11da84ef7c3abbfd1072c421505589ac1e9b2) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 28 January 2021, 05:07:27 UTC |