https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2


| Revision | Author | Author Date | Message | Commit Date |

|---|---|---|---|---|

| 2f3e4e3 | ulysses-you | 02 August 2022, 09:05:48 UTC | [SPARK-39932][SQL] WindowExec should clear the final partition buffer ### What changes were proposed in this pull request? Explicitly clear the final partition buffer in `WindowExec` when no next partition can be found, and apply the same fix to `WindowInPandasExec`. ### Why are the changes needed? When a repartition follows a window, a local sort is needed after the window because of the RoundRobinPartitioning shuffle. The error stack: ```java ExternalAppendOnlyUnsafeRowArray INFO - Reached spill threshold of 4096 rows, switching to org.apache.spark.util.collection.unsafe.sort.UnsafeExternalSorter org.apache.spark.memory.SparkOutOfMemoryError: Unable to acquire 65536 bytes of memory, got 0 at org.apache.spark.memory.MemoryConsumer.throwOom(MemoryConsumer.java:157) at org.apache.spark.memory.MemoryConsumer.allocateArray(MemoryConsumer.java:97) at org.apache.spark.util.collection.unsafe.sort.UnsafeExternalSorter.growPointerArrayIfNecessary(UnsafeExternalSorter.java:352) at org.apache.spark.util.collection.unsafe.sort.UnsafeExternalSorter.allocateMemoryForRecordIfNecessary(UnsafeExternalSorter.java:435) at org.apache.spark.util.collection.unsafe.sort.UnsafeExternalSorter.insertRecord(UnsafeExternalSorter.java:455) at org.apache.spark.sql.execution.UnsafeExternalRowSorter.insertRow(UnsafeExternalRowSorter.java:138) at org.apache.spark.sql.execution.UnsafeExternalRowSorter.sort(UnsafeExternalRowSorter.java:226) at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec$.$anonfun$prepareShuffleDependency$10(ShuffleExchangeExec.scala:355) ``` `WindowExec` only clears the buffer in `fetchNextPartition`, so the final partition buffer is never cleared. This is not a big problem on its own, since a task completion listener cleans up at the end of the task: ```java taskContext.addTaskCompletionListener(context -> { cleanupResources(); }); ``` The bug only matters when the window is not the last operator in the task and is followed by another operator, such as a sort. ### Does this PR introduce _any_ user-facing change? Yes, bug fix. ### How was this patch tested? N/A Closes #37358 from ulysses-you/window. Authored-by: ulysses-you <ulyssesyou18@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 1fac870126c289a7ec75f45b6b61c93b9a4965d4) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 02 August 2022, 09:06:54 UTC |

| f7a9409 | yangjie01 | 27 July 2022, 00:26:47 UTC | [SPARK-39879][SQL][TESTS] Reduce local-cluster maximum memory size in `BroadcastJoinSuite*` and `HiveSparkSubmitSuite` ### What changes were proposed in this pull request? This PR changes `local-cluster[2, 1, 1024]` in `BroadcastJoinSuite*` and `HiveSparkSubmitSuite` to `local-cluster[2, 1, 512]` to reduce the maximum memory used by the tests. ### Why are the changes needed? Reduce the maximum memory usage of test cases. ### Does this PR introduce _any_ user-facing change? No, test-only. ### How was this patch tested? Should be verified by monitoring CI. Closes #37298 from LuciferYang/reduce-local-cluster-memory. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 01d41e7de418d0a40db7b16ddd0d8546f0794d17) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 27 July 2022, 00:27:39 UTC |

| ea05c33 | Hyukjin Kwon | 25 July 2022, 06:56:34 UTC | Revert "[SPARK-39856][SQL][TESTS] Increase the number of partitions in TPC-DS build to avoid out-of-memory" This reverts commit 0a27d0c6e8e705176f0f245794bc8361860ac680. | 25 July 2022, 06:56:34 UTC |

| 0a27d0c | Hyukjin Kwon | 25 July 2022, 03:44:54 UTC | [SPARK-39856][SQL][TESTS] Increase the number of partitions in TPC-DS build to avoid out-of-memory This PR proposes to avoid out-of-memory errors in the TPC-DS build on GitHub Actions CI by: - Increasing the number of partitions used in the shuffle. - Truncating float precision after the 10th digit. The number of partitions was previously set to 1 because of precision differences in the results, which can generally be ignored. - Sorting the results regardless of join type, since Apache Spark does not guarantee the order of results. One of the reasons for the large memory usage seems to be the single partition used in the shuffle. No, test-only. GitHub Actions in this CI will test it out. Closes #37270 from HyukjinKwon/deflake-tpcds. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 7358253755762f9bfe6cedc1a50ec14616cfeace) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 25 July 2022, 03:51:19 UTC |

| c653d28 | sandeepvinayak | 31 May 2022, 22:28:07 UTC | [SPARK-39283][CORE] Fix deadlock between TaskMemoryManager and UnsafeExternalSorter.SpillableIterator ### What changes were proposed in this pull request? This PR fixes a deadlock between TaskMemoryManager and UnsafeExternalSorter.SpillableIterator. ### Why are the changes needed? We are facing a deadlock between TaskMemoryManager and UnsafeExternalSorter.SpillableIterator during a join. It turns out that `UnsafeExternalSorter.SpillableIterator#spill()` takes the locks on `UnsafeExternalSorter.SpillableIterator` and `UnsafeExternalSorter`, then calls `freePage` to free all allocated pages except the last one, and `freePage` takes the lock on `TaskMemoryManager`. At the same time, another `MemoryConsumer` that uses `UnsafeExternalSorter` for sorting can call `allocatePage`, which needs the lock on `TaskMemoryManager`; that allocation can trigger a spill, which requires the lock on `UnsafeExternalSorter` again, causing a deadlock. A similar fix was made in https://issues.apache.org/jira/browse/SPARK-27338 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing unit tests. Closes #36680 from sandeepvinayak/SPARK-39283. Authored-by: sandeepvinayak <sandeep.pal@outlook.com> Signed-off-by: Josh Rosen <joshrosen@databricks.com> | 31 May 2022, 22:51:40 UTC |

| 02488e0 | Tom van Bussel | 18 September 2020, 11:49:26 UTC | [SPARK-32911][CORE] Free memory in UnsafeExternalSorter.SpillableIterator.spill() when all records have been read ### What changes were proposed in this pull request? This PR changes `UnsafeExternalSorter.SpillableIterator` to free its memory (except for the page holding the last record) if it is forced to spill after all of its records have been read. It also makes sure that `lastPage` is freed if `loadNext` is never called again. The latter was necessary to get my test case to succeed (otherwise it would complain about a leak). ### Why are the changes needed? No memory is freed after calling `UnsafeExternalSorter.SpillableIterator.spill()` when all records have been read, even though it is still holding onto some memory. This may cause a `SparkOutOfMemoryError` to be thrown, even though we could have just freed the memory instead. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? A test was added to `UnsafeExternalSorterSuite`. Closes #29787 from tomvanbussel/SPARK-32911. Authored-by: Tom van Bussel <tom.vanbussel@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 31 May 2022, 22:50:52 UTC |

| 9b26812 | Takuya UESHIN | 26 May 2022, 01:36:03 UTC | [SPARK-39293][SQL] Fix the accumulator of ArrayAggregate to handle complex types properly Fix the accumulator of `ArrayAggregate` to handle complex types properly. The accumulator of `ArrayAggregate` should copy the intermediate result if it is a string, struct, array, or map. If the intermediate data of `ArrayAggregate` holds reusable (mutable) data, the result will be duplicated. ```scala import org.apache.spark.sql.functions._ import spark.implicits._ val reverse = udf((s: String) => s.reverse) val df = Seq(Array("abc", "def")).toDF("array") val aggArray = df.withColumn( "agg", aggregate( col("array"), array().cast("array<string>"), (acc, s) => concat(acc, array(reverse(s))))) aggArray.show(truncate=false) ``` should be: ``` +----------+----------+ |array |agg | +----------+----------+ |[abc, def]|[cba, fed]| +----------+----------+ ``` but: ``` +----------+----------+ |array |agg | +----------+----------+ |[abc, def]|[fed, fed]| +----------+----------+ ``` This is a user-facing change: it fixes a correctness issue. Added a test. Closes #36674 from ueshin/issues/SPARK-39293/array_aggregate. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit d6a11cb4b411c8136eb241aac167bc96990f5421) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 92e82fdf8e2faec5add61e2448f11272dfb19c6e) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 68d69501576ba21e182791aad91b82a1e7282d11) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 26 May 2022, 01:40:16 UTC |

| 19942e7 | Vitalii Li | 06 May 2022, 09:04:28 UTC | [SPARK-39060][SQL][3.0] Typo in error messages of decimal overflow ### What changes were proposed in this pull request? This PR removes an extra curly bracket from the debug string for the Decimal type in SQL. This is a backport from the master branch. Commit: https://github.com/apache/spark/commit/165ce4eb7d6d75201beb1bff879efa99fde24f94 ### Why are the changes needed? Typo in error messages of decimal overflow. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? By running updated test: ``` $ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z decimalArithmeticOperations.sql" ``` Closes #36460 from vli-databricks/SPARK-39060-3.0. Authored-by: Vitalii Li <vitalii.li@databricks.com> Signed-off-by: Max Gekk <max.gekk@gmail.com> | 06 May 2022, 09:04:28 UTC |

| 4e38563 | allisonwang-db | 01 May 2022, 19:32:28 UTC | [SPARK-38918][SQL][3.0] Nested column pruning should filter out attributes that do not belong to the current relation ### What changes were proposed in this pull request? Backport https://github.com/apache/spark/pull/36216 to branch-3.0. ### Why are the changes needed? To fix a bug in `SchemaPruning`. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Unit test Closes #36388 from allisonwang-db/spark-38918-branch-3.0. Authored-by: allisonwang-db <allison.wang@databricks.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 01 May 2022, 19:32:28 UTC |

| 46fa499 | Hyukjin Kwon | 27 April 2022, 07:59:34 UTC | Revert "[SPARK-39032][PYTHON][DOCS] Examples' tag for pyspark.sql.functions.when()" This reverts commit 821a348ae7f9cd5958d29ccf342719f5d753ae28. | 27 April 2022, 07:59:34 UTC |

| 821a348 | vadim | 27 April 2022, 07:56:18 UTC | [SPARK-39032][PYTHON][DOCS] Examples' tag for pyspark.sql.functions.when() ### What changes were proposed in this pull request? Fix the missing `Examples` keyword in the `pyspark.sql.functions.when()` documentation. ### Why are the changes needed? The [documentation](https://spark.apache.org/docs/latest/api/python/reference/api/pyspark.sql.functions.when.html) is not formatted correctly. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? All tests passed. Closes #36369 from vadim/SPARK-39032. Authored-by: vadim <86705+vadim@users.noreply.github.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 5b53bdfa83061c160652e07b999f996fc8bd2ece) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 27 April 2022, 07:57:26 UTC |

| aeea56d | Hyukjin Kwon | 22 April 2022, 10:01:05 UTC | [SPARK-38992][CORE] Avoid using bash -c in ShellBasedGroupsMappingProvider ### What changes were proposed in this pull request? This PR proposes to avoid using `bash -c` in `ShellBasedGroupsMappingProvider`, which could allow users to perform command injection. ### Why are the changes needed? For security. ### Does this PR introduce _any_ user-facing change? Virtually no. ### How was this patch tested? Manually tested. Closes #36315 from HyukjinKwon/SPARK-38992. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit c83618e4e5fc092829a1f2a726f12fb832e802cc) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 22 April 2022, 10:02:25 UTC |

| ac58f88 | Bruce Robbins | 22 April 2022, 03:30:34 UTC | [SPARK-38990][SQL] Avoid `NullPointerException` when evaluating date_trunc/trunc format as a bound reference ### What changes were proposed in this pull request? Change `TruncInstant.evalHelper` to pass the input row to `format.eval` when `format` is not a literal (and therefore might be a bound reference). ### Why are the changes needed? This query fails with a `java.lang.NullPointerException`: ``` select date_trunc(col1, col2) from values ('week', timestamp'2012-01-01') as data(col1, col2); ``` This only happens if the data comes from an inline table. When the source is an inline table, `ConvertToLocalRelation` attempts to evaluate the function against the data in interpreted mode. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Updated unit tests. Closes #36312 from bersprockets/date_trunc_issue. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 2e4f4abf553cedec1fa8611b9494a01d24e6238a) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 22 April 2022, 03:31:43 UTC |

| 156d1aa | Kent Yao | 20 April 2022, 06:38:26 UTC | [SPARK-38922][CORE] TaskLocation.apply throw NullPointerException ### What changes were proposed in this pull request? `TaskLocation.apply` without a null check may throw an NPE and fail job scheduling: ``` Caused by: java.lang.NullPointerException at scala.collection.immutable.StringLike$class.stripPrefix(StringLike.scala:155) at scala.collection.immutable.StringOps.stripPrefix(StringOps.scala:29) at org.apache.spark.scheduler.TaskLocation$.apply(TaskLocation.scala:71) at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal ``` For instance, `org.apache.spark.rdd.HadoopRDD#convertSplitLocationInfo` might generate unexpected `Some(null)` elements, which should be replaced by `Option.apply`. ### Why are the changes needed? Fix the NPE. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? New tests. Closes #36222 from yaooqinn/SPARK-38922. Authored-by: Kent Yao <yao@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit 33e07f3cd926105c6d28986eb6218f237505549e) Signed-off-by: Kent Yao <yao@apache.org> | 20 April 2022, 06:39:57 UTC |

| 5f90203 | fhygh | 15 April 2022, 11:42:18 UTC | [SPARK-38892][SQL][TESTS] Fix a test case schema assertion of ParquetPartitionDiscoverySuite ### What changes were proposed in this pull request? In `ParquetPartitionDiscoverySuite`, some assertions have no practical significance, for example `assert(input.schema.sameType(input.schema))`, which compares a schema with itself. ### Why are the changes needed? To fix these assertions so that they check the actual result. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Updated test suites. Closes #36189 from fhygh/assertutfix. Authored-by: fhygh <283452027@qq.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 4835946de2ef71b176da5106e9b6c2706e182722) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 15 April 2022, 11:43:25 UTC |

| beb64fa | Hyukjin Kwon | 07 April 2022, 00:45:02 UTC | Revert "[MINOR][SQL][SS][DOCS] Add varargs to Dataset.observe(String, ..) with a documentation fix" This reverts commit 952d476786ff7e2f5216094b272d46a253891358. | 07 April 2022, 00:45:02 UTC |

| 952d476 | Hyukjin Kwon | 06 April 2022, 08:26:17 UTC | [MINOR][SQL][SS][DOCS] Add varargs to Dataset.observe(String, ..) with a documentation fix ### What changes were proposed in this pull request? This PR proposes two minor changes: - Fixes the example at `Dataset.observe(String, ...)` - Adds `varargs` to be consistent with another overloaded version: `Dataset.observe(Observation, ..)` ### Why are the changes needed? To provide a correct example, to support Java APIs properly with `varargs`, and for API consistency. ### Does this PR introduce _any_ user-facing change? Yes, the example is fixed in the documentation. Additionally, Java users should be able to use `Dataset.observe(String, ..)` thanks to `varargs`. ### How was this patch tested? Manually tested. CI should verify the changes too. Closes #36084 from HyukjinKwon/minor-docs. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit fb3f380b3834ca24947a82cb8d87efeae6487664) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 06 April 2022, 08:27:13 UTC |

| dd54888 | Dongjoon Hyun | 30 March 2022, 15:26:41 UTC | [SPARK-38652][K8S] `uploadFileUri` should preserve file scheme ### What changes were proposed in this pull request? This PR replaces `new Path(fileUri.getPath)` with `new Path(fileUri)`. By using the `Path` constructor that takes a URI, we can preserve the file scheme. ### Why are the changes needed? If we use the `Path` constructor that takes a `String`, the file scheme information is lost. Although the original code has worked so far, it fails with Apache Hadoop 3.3.2 and breaks the dependency upload feature, which is covered by the K8s Minikube integration tests. ```scala test("uploadFileUri") { val fileUri = org.apache.spark.util.Utils.resolveURI("/tmp/1.txt") assert(new Path(fileUri).toString == "file:/private/tmp/1.txt") assert(new Path(fileUri.getPath).toString == "/private/tmp/1.txt") } ``` ### Does this PR introduce _any_ user-facing change? No, this will prevent a regression in Apache Spark 3.3.0 instead. ### How was this patch tested? Pass the CIs. In addition, this PR and #36009 will recover the K8s IT `DepsTestsSuite`. Closes #36010 from dongjoon-hyun/SPARK-38652. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit cab8aa1c4fe66c4cb1b69112094a203a04758f76) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 30 March 2022, 15:27:46 UTC |

| 2ac7872 | Yuming Wang | 27 March 2022, 00:45:26 UTC | [SPARK-38663][TESTS][3.0] Remove inaccessible repository: https://dl.bintray.com ### What changes were proposed in this pull request? This PR removes the resolver of https://dl.bintray.com/typesafe/sbt-plugins. ### Why are the changes needed? https://dl.bintray.com/typesafe/sbt-plugins is no longer accessible: ``` Run ./dev/lint-scala Scalastyle checks failed at following occurrences: [error] SERVER ERROR: Bad Gateway url=https://dl.bintray.com/typesafe/sbt-plugins/com.etsy/sbt-checkstyle-plugin/3.1.1/jars/sbt-checkstyle-plugin.jar [error] SERVER ERROR: Bad Gateway url=https://dl.bintray.com/typesafe/sbt-plugins/org.sonatype.oss/oss-parent/7/jars/oss-parent.jar [error] SERVER ERROR: Bad Gateway url=https://dl.bintray.com/typesafe/sbt-plugins/org.antlr/antlr4-master/4.7.2/jars/antlr4-master.jar [error] SERVER ERROR: Bad Gateway url=https://dl.bintray.com/typesafe/sbt-plugins/org.apache/apache/19/jars/apache.jar ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Local test: ``` rm -rf ~/.ivy2/ build/sbt scalastyle test:scalastyle ... [info] downloading https://repo.scala-sbt.org/scalasbt/sbt-plugin-releases/com.typesafe/sbt-mima-plugin/scala_2.10/sbt_0.13/0.3.0/jars/sbt-mima-plugin.jar ... [info] [SUCCESSFUL ] com.typesafe#sbt-mima-plugin;0.3.0!sbt-mima-plugin.jar (4989ms) ... ``` Closes #35978 from wangyum/SPARK-38663. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 27 March 2022, 00:45:26 UTC |

| 4fc718f | mcdull-zhang | 26 March 2022, 04:48:08 UTC | [SPARK-38570][SQL][3.0] Incorrect DynamicPartitionPruning caused by Literal This is a backport of #35878 to branch 3.0. The return value of Literal.references is an empty AttributeSet, so Literal is mistaken for a partition column. For example, with adaptive query execution disabled, the SQL in the test case generates the following physical plan: ```text *(4) Project [store_id#5281, date_id#5283, state_province#5292] +- *(4) BroadcastHashJoin [store_id#5281], [store_id#5291], Inner, BuildRight, false :- Union : :- *(1) Project [4 AS store_id#5281, date_id#5283] : : +- *(1) Filter ((isnotnull(date_id#5283) AND (date_id#5283 >= 1300)) AND dynamicpruningexpression(4 IN dynamicpruning#5300)) : : : +- ReusedSubquery SubqueryBroadcast dynamicpruning#5300, 0, [store_id#5291], [id=#336] : : +- *(1) ColumnarToRow : : +- FileScan parquet default.fact_sk[date_id#5283,store_id#5286] Batched: true, DataFilters: [isnotnull(date_id#5283), (date_id#5283 >= 1300)], Format: Parquet, Location: CatalogFileIndex(1 paths)[file:/Users/dongdongzhang/code/study/spark/spark-warehouse/org.apache.s..., PartitionFilters: [dynamicpruningexpression(4 IN dynamicpruning#5300)], PushedFilters: [IsNotNull(date_id), GreaterThanOrEqual(date_id,1300)], ReadSchema: struct<date_id:int> : : +- SubqueryBroadcast dynamicpruning#5300, 0, [store_id#5291], [id=#336] : : +- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, true] as bigint)),false), [id=#335] : : +- *(1) Project [store_id#5291, state_province#5292] : : +- *(1) Filter (((isnotnull(country#5293) AND (country#5293 = US)) AND ((store_id#5291 <=> 4) OR (store_id#5291 <=> 5))) AND isnotnull(store_id#5291)) : : +- *(1) ColumnarToRow : : +- FileScan parquet default.dim_store[store_id#5291,state_province#5292,country#5293] Batched: true, DataFilters: [isnotnull(country#5293), (country#5293 = US), ((store_id#5291 <=> 4) OR (store_id#5291 <=> 5)), ..., Format: Parquet, Location: InMemoryFileIndex(1 paths)[file:/Users/dongdongzhang/code/study/spark/spark-warehouse/org.apache...., PartitionFilters: [], PushedFilters: [IsNotNull(country), EqualTo(country,US), Or(EqualNullSafe(store_id,4),EqualNullSafe(store_id,5))..., ReadSchema: struct<store_id:int,state_province:string,country:string> : +- *(2) Project [5 AS store_id#5282, date_id#5287] : +- *(2) Filter ((isnotnull(date_id#5287) AND (date_id#5287 <= 1000)) AND dynamicpruningexpression(5 IN dynamicpruning#5300)) : : +- ReusedSubquery SubqueryBroadcast dynamicpruning#5300, 0, [store_id#5291], [id=#336] : +- *(2) ColumnarToRow : +- FileScan parquet default.fact_stats[date_id#5287,store_id#5290] Batched: true, DataFilters: [isnotnull(date_id#5287), (date_id#5287 <= 1000)], Format: Parquet, Location: CatalogFileIndex(1 paths)[file:/Users/dongdongzhang/code/study/spark/spark-warehouse/org.apache.s..., PartitionFilters: [dynamicpruningexpression(5 IN dynamicpruning#5300)], PushedFilters: [IsNotNull(date_id), LessThanOrEqual(date_id,1000)], ReadSchema: struct<date_id:int> : +- ReusedSubquery SubqueryBroadcast dynamicpruning#5300, 0, [store_id#5291], [id=#336] +- ReusedExchange [store_id#5291, state_province#5292], BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, true] as bigint)),false), [id=#335] ``` After this PR: ```text *(4) Project [store_id#5281, date_id#5283, state_province#5292] +- *(4) BroadcastHashJoin [store_id#5281], [store_id#5291], Inner, BuildRight, false :- Union : :- *(1) Project [4 AS store_id#5281, date_id#5283] : : +- *(1) Filter (isnotnull(date_id#5283) AND (date_id#5283 >= 1300)) : : +- *(1) ColumnarToRow : : +- FileScan parquet default.fact_sk[date_id#5283,store_id#5286] Batched: true, DataFilters: [isnotnull(date_id#5283), (date_id#5283 >= 1300)], Format: Parquet, Location: CatalogFileIndex(1 paths)[file:/Users/dongdongzhang/code/study/spark/spark-warehouse/org.apache.s..., PartitionFilters: [], PushedFilters: [IsNotNull(date_id), GreaterThanOrEqual(date_id,1300)], ReadSchema: struct<date_id:int> : +- *(2) Project [5 AS store_id#5282, date_id#5287] : +- *(2) Filter (isnotnull(date_id#5287) AND (date_id#5287 <= 1000)) : +- *(2) ColumnarToRow : +- FileScan parquet default.fact_stats[date_id#5287,store_id#5290] Batched: true, DataFilters: [isnotnull(date_id#5287), (date_id#5287 <= 1000)], Format: Parquet, Location: CatalogFileIndex(1 paths)[file:/Users/dongdongzhang/code/study/spark/spark-warehouse/org.apache.s..., PartitionFilters: [], PushedFilters: [IsNotNull(date_id), LessThanOrEqual(date_id,1000)], ReadSchema: struct<date_id:int> +- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, true] as bigint)),false), [id=#326] +- *(3) Project [store_id#5291, state_province#5292] +- *(3) Filter (((isnotnull(country#5293) AND (country#5293 = US)) AND ((store_id#5291 <=> 4) OR (store_id#5291 <=> 5))) AND isnotnull(store_id#5291)) +- *(3) ColumnarToRow +- FileScan parquet default.dim_store[store_id#5291,state_province#5292,country#5293] Batched: true, DataFilters: [isnotnull(country#5293), (country#5293 = US), ((store_id#5291 <=> 4) OR (store_id#5291 <=> 5)), ..., Format: Parquet, Location: InMemoryFileIndex(1 paths)[file:/Users/dongdongzhang/code/study/spark/spark-warehouse/org.apache...., PartitionFilters: [], PushedFilters: [IsNotNull(country), EqualTo(country,US), Or(EqualNullSafe(store_id,4),EqualNullSafe(store_id,5))..., ReadSchema: struct<store_id:int,state_province:string,country:string> ``` The change improves execution performance. No user-facing change. Added a unit test. Closes #35967 from mcdull-zhang/spark_38570_3.2. Authored-by: mcdull-zhang <work4dong@163.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 8621914e2052eeab25e6ac4e7d5f48b5570c71f7) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 26 March 2022, 05:47:28 UTC |

| 914b2ff | Kent Yao | 23 March 2022, 09:25:47 UTC | resolve conflicts | 23 March 2022, 09:34:49 UTC |

| f7bf0fe | huangmaoyang2 | 23 March 2022, 06:06:58 UTC | [SPARK-38629][SQL][DOCS] Two links beneath Spark SQL Guide/Data Sources do not work properly ### What changes were proposed in this pull request? Two typos have been corrected in sql-data-sources.md under Spark's docs directory. ### Why are the changes needed? Two links in the latest documentation [Spark SQL Guide/Data Sources](https://spark.apache.org/docs/latest/sql-data-sources.html) do not work properly: clicking 'Ignore Corrupt File' or 'Ignore Missing Files' opens the right page but does not scroll to the right section. This issue has existed since v3.0.0. ### Does this PR introduce _any_ user-facing change? Yes ### How was this patch tested? I've built the documentation locally and tested my change. Closes #35944 from morvenhuang/SPARK-38629. Authored-by: huangmaoyang2 <huangmaoyang1@jd.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit ac9ae98011424a030a6ef264caf077b8873e251d) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 23 March 2022, 06:07:50 UTC |

| e8310ea | Karen Feng | 14 March 2022, 05:55:44 UTC | [SPARK-37865][SQL][FOLLOWUP] Fix compilation error for union deduplication correctness bug fixup ### What changes were proposed in this pull request? Fixes compilation error following https://github.com/apache/spark/pull/35760. ### Why are the changes needed? Compilation error: https://github.com/apache/spark/runs/5473941519?check_suite_focus=true ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Unit tests Closes #35842 from karenfeng/SPARK-37865-branch-3.0. Authored-by: Karen Feng <karen.feng@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 14 March 2022, 05:55:44 UTC |

| b26ac3f | Dongjoon Hyun | 13 March 2022, 03:33:23 UTC | [SPARK-38538][K8S][TESTS] Fix driver environment verification in BasicDriverFeatureStepSuite ### What changes were proposed in this pull request? This PR aims to fix the driver environment verification logic in `BasicDriverFeatureStepSuite`. ### Why are the changes needed? When SPARK-25876 added the test logic in Apache Spark 3.0.0, it used `envs(v) === v` instead of `envs(k) === v`. https://github.com/apache/spark/blob/c032928515e74367137c668ce692d8fd53696485/resource-managers/kubernetes/core/src/test/scala/org/apache/spark/deploy/k8s/features/BasicDriverFeatureStepSuite.scala#L94-L96 This bug was hidden because the test key-value pairs used identical strings for keys and values. If the keys and values are different strings, the test case fails. https://github.com/apache/spark/blob/c032928515e74367137c668ce692d8fd53696485/resource-managers/kubernetes/core/src/test/scala/org/apache/spark/deploy/k8s/features/BasicDriverFeatureStepSuite.scala#L42-L44 ### Does this PR introduce _any_ user-facing change? No; this only makes the test coverage correct. ### How was this patch tested? Pass the CIs. Closes #35828 from dongjoon-hyun/SPARK-38538. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 6becf4e93e68e36fbcdc82768de497d86072abeb) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 13 March 2022, 03:34:49 UTC |
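
A minimal Python model of why the wrong lookup stayed hidden, assuming plain dicts in place of the Scala `Map` (in Scala, `envs(v)` on a missing key throws `NoSuchElementException` instead of returning `None`). The function names and sample keys are hypothetical; the point is that looking an entry up by its value only works when keys and values coincide.

```python
def verify_env_buggy(envs, expected):
    # Mirrors `envs(v) === v`: looks the entry up by its *value*.
    return all(envs.get(v) == v for _k, v in expected.items())


def verify_env_fixed(envs, expected):
    # Mirrors `envs(k) === v`: looks the entry up by its *key*.
    return all(envs.get(k) == v for k, v in expected.items())


# Keys equal to values: both checks pass, so the bug stays hidden.
same = {"customEnv1": "customEnv1", "customEnv2": "customEnv2"}

# Distinct keys and values: only the fixed check passes.
distinct = {"customEnv1": "customValue1", "customEnv2": "customValue2"}
```

With `distinct`, the buggy check looks up `"customValue1"` as a key, finds nothing, and fails even though the environment is correct.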

| 3eb7264 | Karen Feng | 09 March 2022, 01:34:01 UTC | [SPARK-37865][SQL] Fix union deduplication correctness bug Fixes a correctness bug in `Union` in the case that there are duplicate output columns. Previously, duplicate columns on one side of the union would result in a duplicate column being output on the other side of the union. To fix this, we go through the union’s child’s output and find the duplicates. For each duplicate set, there is a first duplicate: this one is left alone. All following duplicates are aliased and given a tag; this tag is used to remove ambiguity during resolution. As the first duplicate is left alone, the user can still select it, avoiding a breaking change. As the later duplicates are given new expression IDs, this fixes the correctness bug. The output of a union with duplicate columns in the children was incorrect. Example query: ``` SELECT a, a FROM VALUES (1, 1), (1, 2) AS t1(a, b) UNION ALL SELECT c, d FROM VALUES (2, 2), (2, 3) AS t2(c, d) ``` Result before: ``` a | a _ | _ 1 | 1 1 | 1 2 | 2 2 | 2 ``` Result after: ``` a | a _ | _ 1 | 1 1 | 1 2 | 2 2 | 3 ``` Unit tests Closes #35760 from karenfeng/spark-37865. Authored-by: Karen Feng <karen.feng@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 59ce0a706cb52a54244a747d0a070b61f5cddd1c) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 09 March 2022, 01:38:01 UTC |
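
A toy Python model of why duplicate expression IDs corrupt the union's output and why giving later duplicates fresh IDs fixes it. It assumes that a parent operator binds each column by expression ID and takes the first match; `attr`, `project`, and `run_union` are hypothetical helpers, not Spark's analyzer.

```python
from itertools import count

_next_id = count(1)


def attr(name):
    """An output attribute: a column name plus a fresh expression ID."""
    return (name, next(_next_id))


def project(output, rows, wanted):
    """Bind each wanted attribute to the first output column with the same
    expression ID, then read that column from every row."""
    ordinals = [
        next(i for i, a in enumerate(output) if a[1] == w[1]) for w in wanted
    ]
    return [tuple(row[i] for i in ordinals) for row in rows]


def run_union(dedup):
    a = attr("a")
    # SELECT a, a ...: without dedup both columns share one expression ID;
    # with dedup the second occurrence gets a new ID, as an alias would.
    left_output = [a, attr("a") if dedup else a]
    left_rows = [(1, 1), (1, 1)]   # SELECT a, a FROM VALUES (1, 1), (1, 2)
    right_rows = [(2, 2), (2, 3)]  # SELECT c, d FROM VALUES (2, 2), (2, 3)
    # UNION ALL: output attributes come from the left child; rows concatenate.
    union_output, union_rows = left_output, left_rows + right_rows
    return project(union_output, union_rows, union_output)
```

`run_union(dedup=False)` reproduces the "before" result, ending in `(2, 2)`, because both output columns bind to ordinal 0; `run_union(dedup=True)` reproduces the "after" result, ending in `(2, 3)`.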

| e036de3 | Cheng Pan | 06 March 2022, 23:41:20 UTC | [SPARK-38411][CORE] Use `UTF-8` when `doMergeApplicationListingInternal` reads event logs ### What changes were proposed in this pull request? Use UTF-8 instead of the system default encoding to read event logs. ### Why are the changes needed? Since SPARK-29160, event logs should always be read as UTF-8. Otherwise, a Spark History Server running with a default charset other than UTF-8 encounters errors such as: ``` 2022-03-04 12:16:00,143 [3752440] - INFO [log-replay-executor-19:Logging57] - Parsing hdfs://hz-cluster11/spark2-history/application_1640597251469_2453817_1.lz4 for listing data... 2022-03-04 12:16:00,145 [3752442] - ERROR [log-replay-executor-18:Logging94] - Exception while merging application listings java.nio.charset.MalformedInputException: Input length = 1 at java.nio.charset.CoderResult.throwException(CoderResult.java:281) at sun.nio.cs.StreamDecoder.implRead(StreamDecoder.java:339) at sun.nio.cs.StreamDecoder.read(StreamDecoder.java:178) at java.io.InputStreamReader.read(InputStreamReader.java:184) at java.io.BufferedReader.fill(BufferedReader.java:161) at java.io.BufferedReader.readLine(BufferedReader.java:324) at java.io.BufferedReader.readLine(BufferedReader.java:389) at scala.io.BufferedSource$BufferedLineIterator.hasNext(BufferedSource.scala:74) at scala.collection.Iterator$$anon$20.hasNext(Iterator.scala:884) at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:511) at org.apache.spark.scheduler.ReplayListenerBus.replay(ReplayListenerBus.scala:82) at org.apache.spark.deploy.history.FsHistoryProvider.$anonfun$doMergeApplicationListing$4(FsHistoryProvider.scala:819) at org.apache.spark.deploy.history.FsHistoryProvider.$anonfun$doMergeApplicationListing$4$adapted(FsHistoryProvider.scala:801) at org.apache.spark.util.Utils$.tryWithResource(Utils.scala:2626) at org.apache.spark.deploy.history.FsHistoryProvider.doMergeApplicationListing(FsHistoryProvider.scala:801) at 
org.apache.spark.deploy.history.FsHistoryProvider.mergeApplicationListing(FsHistoryProvider.scala:715) at org.apache.spark.deploy.history.FsHistoryProvider.$anonfun$checkForLogs$15(FsHistoryProvider.scala:581) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) ``` ### Does this PR introduce _any_ user-facing change? Yes, bug fix. ### How was this patch tested? Verification steps on ubuntu:20.04: 1. build `spark-3.3.0-SNAPSHOT-bin-master.tgz` on commit `34618a7ef6` using `dev/make-distribution.sh --tgz --name master` 2. build `spark-3.3.0-SNAPSHOT-bin-SPARK-38411.tgz` on commit `2a8f56038b` using `dev/make-distribution.sh --tgz --name SPARK-38411` 3. switch to UTF-8 using `export LC_ALL=C.UTF-8 && bash` 4. generate an event log containing non-ASCII chars. ``` bin/spark-submit \ --master local[*] \ --class org.apache.spark.examples.SparkPi \ --conf spark.eventLog.enabled=true \ --conf spark.user.key='计算圆周率' \ examples/jars/spark-examples_2.12-3.3.0-SNAPSHOT.jar ``` 5. switch to POSIX using `export LC_ALL=POSIX && bash` 6. 
run `spark-3.3.0-SNAPSHOT-bin-master/sbin/start-history-server.sh` and watch logs <details> ``` Spark Command: /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java -cp /spark-3.3.0-SNAPSHOT-bin-master/conf/:/spark-3.3.0-SNAPSHOT-bin-master/jars/* -Xmx1g org.apache.spark.deploy.history.HistoryServer ======================================== Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties 22/03/06 13:37:19 INFO HistoryServer: Started daemon with process name: 48729c3ffc10aa9 22/03/06 13:37:19 INFO SignalUtils: Registering signal handler for TERM 22/03/06 13:37:19 INFO SignalUtils: Registering signal handler for HUP 22/03/06 13:37:19 INFO SignalUtils: Registering signal handler for INT 22/03/06 13:37:21 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable 22/03/06 13:37:21 INFO SecurityManager: Changing view acls to: root 22/03/06 13:37:21 INFO SecurityManager: Changing modify acls to: root 22/03/06 13:37:21 INFO SecurityManager: Changing view acls groups to: 22/03/06 13:37:21 INFO SecurityManager: Changing modify acls groups to: 22/03/06 13:37:21 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set() 22/03/06 13:37:21 INFO FsHistoryProvider: History server ui acls disabled; users with admin permissions: ; groups with admin permissions: 22/03/06 13:37:22 INFO Utils: Successfully started service 'HistoryServerUI' on port 18080. 22/03/06 13:37:23 INFO HistoryServer: Bound HistoryServer to 0.0.0.0, and started at http://29c3ffc10aa9:18080 22/03/06 13:37:23 INFO FsHistoryProvider: Parsing file:/tmp/spark-events/local-1646573251839 for listing data... 
22/03/06 13:37:25 ERROR FsHistoryProvider: Exception while merging application listings java.nio.charset.MalformedInputException: Input length = 1 at java.nio.charset.CoderResult.throwException(CoderResult.java:281) ~[?:1.8.0_312] at sun.nio.cs.StreamDecoder.implRead(StreamDecoder.java:339) ~[?:1.8.0_312] at sun.nio.cs.StreamDecoder.read(StreamDecoder.java:178) ~[?:1.8.0_312] at java.io.InputStreamReader.read(InputStreamReader.java:184) ~[?:1.8.0_312] at java.io.BufferedReader.fill(BufferedReader.java:161) ~[?:1.8.0_312] at java.io.BufferedReader.readLine(BufferedReader.java:324) ~[?:1.8.0_312] at java.io.BufferedReader.readLine(BufferedReader.java:389) ~[?:1.8.0_312] at scala.io.BufferedSource$BufferedLineIterator.hasNext(BufferedSource.scala:74) ~[scala-library-2.12.15.jar:?] at scala.collection.Iterator$$anon$20.hasNext(Iterator.scala:886) ~[scala-library-2.12.15.jar:?] at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:513) ~[scala-library-2.12.15.jar:?] at org.apache.spark.scheduler.ReplayListenerBus.replay(ReplayListenerBus.scala:82) ~[spark-core_2.12-3.3.0-SNAPSHOT.jar:3.3.0-SNAPSHOT] at org.apache.spark.deploy.history.FsHistoryProvider.$anonfun$doMergeApplicationListingInternal$4(FsHistoryProvider.scala:830) ~[spark-core_2.12-3.3.0-SNAPSHOT.jar:3.3.0-SNAPSHOT] at org.apache.spark.deploy.history.FsHistoryProvider.$anonfun$doMergeApplicationListingInternal$4$adapted(FsHistoryProvider.scala:812) ~[spark-core_2.12-3.3.0-SNAPSHOT.jar:3.3.0-SNAPSHOT] at org.apache.spark.util.Utils$.tryWithResource(Utils.scala:2738) ~[spark-core_2.12-3.3.0-SNAPSHOT.jar:3.3.0-SNAPSHOT] at org.apache.spark.deploy.history.FsHistoryProvider.doMergeApplicationListingInternal(FsHistoryProvider.scala:812) ~[spark-core_2.12-3.3.0-SNAPSHOT.jar:3.3.0-SNAPSHOT] at org.apache.spark.deploy.history.FsHistoryProvider.doMergeApplicationListing(FsHistoryProvider.scala:758) ~[spark-core_2.12-3.3.0-SNAPSHOT.jar:3.3.0-SNAPSHOT] at 
org.apache.spark.deploy.history.FsHistoryProvider.mergeApplicationListing(FsHistoryProvider.scala:718) ~[spark-core_2.12-3.3.0-SNAPSHOT.jar:3.3.0-SNAPSHOT] at org.apache.spark.deploy.history.FsHistoryProvider.$anonfun$checkForLogs$15(FsHistoryProvider.scala:584) ~[spark-core_2.12-3.3.0-SNAPSHOT.jar:3.3.0-SNAPSHOT] at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_312] at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_312] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_312] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_312] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_312] ``` </details> 7. run `spark-3.3.0-SNAPSHOT-bin-master/sbin/stop-history-server.sh` 8. run `spark-3.3.0-SNAPSHOT-bin-SPARK-38411/sbin/start-history-server.sh` and watch logs <details> ``` Spark Command: /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java -cp /spark-3.3.0-SNAPSHOT-bin-SPARK-38411/conf/:/spark-3.3.0-SNAPSHOT-bin-SPARK-38411/jars/* -Xmx1g org.apache.spark.deploy.history.HistoryServer ======================================== Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties 22/03/06 13:30:54 INFO HistoryServer: Started daemon with process name: 34729c3ffc10aa9 22/03/06 13:30:54 INFO SignalUtils: Registering signal handler for TERM 22/03/06 13:30:54 INFO SignalUtils: Registering signal handler for HUP 22/03/06 13:30:54 INFO SignalUtils: Registering signal handler for INT 22/03/06 13:30:55 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... 
using builtin-java classes where applicable 22/03/06 13:30:56 INFO SecurityManager: Changing view acls to: root 22/03/06 13:30:56 INFO SecurityManager: Changing modify acls to: root 22/03/06 13:30:56 INFO SecurityManager: Changing view acls groups to: 22/03/06 13:30:56 INFO SecurityManager: Changing modify acls groups to: 22/03/06 13:30:56 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set() 22/03/06 13:30:56 INFO FsHistoryProvider: History server ui acls disabled; users with admin permissions: ; groups with admin permissions: 22/03/06 13:30:57 INFO Utils: Successfully started service 'HistoryServerUI' on port 18080. 22/03/06 13:30:57 INFO HistoryServer: Bound HistoryServer to 0.0.0.0, and started at http://29c3ffc10aa9:18080 22/03/06 13:30:57 INFO FsHistoryProvider: Parsing file:/tmp/spark-events/local-1646573251839 for listing data... 22/03/06 13:30:59 INFO FsHistoryProvider: Finished parsing file:/tmp/spark-events/local-1646573251839 ``` </details> Closes #35730 from pan3793/SPARK-38411. Authored-by: Cheng Pan <chengpan@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 135841f257fbb008aef211a5e38222940849cb26) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 06 March 2022, 23:45:36 UTC |
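
The failure mode can be reproduced outside Spark with a small Python sketch. Decoding UTF-8 bytes with an ASCII charset fails; ASCII is typically what a Java 8 JVM on Linux picks as its default under `LC_ALL=POSIX`. Naming UTF-8 explicitly succeeds. The event-log line and helper names are illustrative assumptions; this is not the History Server's code.

```python
import io

# One event-log line holding the non-ASCII value used in the verification steps.
EVENT_LINE = '{"spark.user.key": "计算圆周率"}\n'
RAW = EVENT_LINE.encode("utf-8")


def read_event_log(data, encoding):
    """Decode newline-delimited event-log bytes with an explicit charset."""
    with io.TextIOWrapper(io.BytesIO(data), encoding=encoding) as reader:
        return reader.readlines()


def try_read(encoding):
    # Return the decoded lines, or the decoding error if the charset is wrong.
    try:
        return read_event_log(RAW, encoding)
    except UnicodeDecodeError as err:
        return err
```

`try_read("ascii")` returns a `UnicodeDecodeError`, the Python analogue of `MalformedInputException`. `try_read("utf-8")` returns the original line.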

| f125600 | attilapiros | 04 March 2022, 18:52:49 UTC | [SPARK-33206][CORE][3.0] Fix shuffle index cache weight calculation for small index files ### What changes were proposed in this pull request? Increase the shuffle index weight by a constant to avoid underestimating the retained memory size caused by the bookkeeping objects: the `java.io.File` object (~960 bytes, depending on the path) and the `ShuffleIndexInformation` object (~180 bytes). ### Why are the changes needed? Underestimating the cache entry size can easily cause OOM in the YARN NodeManager. In the following analysis of a prod issue (HPROF file), we can see that the leak suspects are Guava's `LocalCache$Segment` objects: <img width="943" alt="Screenshot 2022-02-17 at 18 55 40" src="https://user-images.githubusercontent.com/2017933/154541995-44014212-2046-41d6-ba7f-99369ca7d739.png"> Going further, we can see a `ShuffleIndexInformation` for a small index file (16 bytes) whose retained heap memory is 1192 bytes: <img width="1351" alt="image" src="https://user-images.githubusercontent.com/2017933/154645212-e0318d0f-cefa-4ae3-8a3b-97d2b506757d.png"> Finally, we can see this is very common within this heap dump (using MAT's Object Query Language): <img width="1418" alt="image" src="https://user-images.githubusercontent.com/2017933/154547678-44c8af34-1765-4e14-b71a-dc03d1a304aa.png"> I have even exported the data to a CSV and done some calculations with `awk`: ``` $ tail -n+2 export.csv | awk -F, 'BEGIN { numUnderEstimated=0; } { sumOldSize += $1; corrected=$1 + 1176; sumCorrectedSize += corrected; sumRetainedMem += $2; if (corrected < $2) numUnderEstimated+=1; } END { print "sum old size: " sumOldSize / 1024 / 1024 " MB, sum corrected size: " sumCorrectedSize / 1024 / 1024 " MB, sum retained memory:" sumRetainedMem / 1024 / 1024 " MB, num under estimated: " numUnderEstimated }' ``` It gives the following: ``` sum old size: 76.8785 MB, sum corrected size: 1066.93 MB, sum retained memory:1064.47 MB, num under estimated: 0 ``` So using the old calculation we were at 76.88 MB, way under the default cache limit (100 MB). Using the correction (adding 1176 bytes to each entry's size) we are at 1066.93 MB (~1GB), which is close to the real total retained heap of 1064.47 MB (~1GB), and no entry is underestimated. But we can go further: get rid of `java.io.File` completely and store the `ShuffleIndexInformation` keyed by the file path. This way, not only is the cache size estimate improved, but the cache's size is decreased as well. The path size is not counted in the cache size, as that string is interned. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? With the calculations above. Closes #35723 from attilapiros/SPARK-33206-3.0. Authored-by: attilapiros <piros.attila.zsolt@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 04 March 2022, 18:52:49 UTC |
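
To make the weighing argument concrete, here is a simplified Python sketch of a size-bounded cache. Its weigher either counts only the index bytes or adds the 1176-byte overhead derived above. It uses plain LRU eviction, not Guava's segmented eviction, and the file names and 1 MiB budget are made up for illustration.

```python
from collections import OrderedDict

# Per-entry bookkeeping overhead derived from the heap analysis above (bytes).
ENTRY_OVERHEAD = 1176


class WeightedLruCache:
    """A simplified size-bounded LRU cache (not Guava's actual eviction policy)."""

    def __init__(self, max_weight, weigher):
        self.max_weight = max_weight
        self.weigher = weigher
        self.entries = OrderedDict()
        self.total_weight = 0

    def put(self, key, value):
        if key in self.entries:
            self.total_weight -= self.weigher(key, self.entries.pop(key))
        self.entries[key] = value
        self.total_weight += self.weigher(key, value)
        # Evict least recently used entries until the total fits the budget.
        while self.total_weight > self.max_weight and self.entries:
            old_key, old_value = self.entries.popitem(last=False)
            self.total_weight -= self.weigher(old_key, old_value)

    def get(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)
        return self.entries[key]


def old_weigher(path, index_bytes):
    return len(index_bytes)  # counts only the index file contents


def new_weigher(path, index_bytes):
    return len(index_bytes) + ENTRY_OVERHEAD  # adds the bookkeeping overhead


def fill(weigher, n=1000, limit=1024 * 1024):
    # Keyed by path string; the file names are hypothetical.
    cache = WeightedLruCache(limit, weigher)
    for i in range(n):
        cache.put(f"shuffle_0_{i}_0.index", bytes(16))  # 16-byte index files
    return cache
```

With 1,000 16-byte index files, the old weigher keeps every entry (16,000 bytes of counted weight), even though the heap analysis suggests each entry really retains about 1192 bytes. The corrected weigher stops at 879 entries, the most that fit in 1 MiB at 1192 bytes each.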

| 5b3f567 | allisonwang-db | 01 March 2022, 07:13:50 UTC | [SPARK-38180][SQL][3.1] Allow safe up-cast expressions in correlated equality predicates Backport https://github.com/apache/spark/pull/35486 to branch-3.1. ### What changes were proposed in this pull request? This PR relaxes the constraint added in [SPARK-35080](https://issues.apache.org/jira/browse/SPARK-35080) by allowing safe up-cast expressions in correlated equality predicates. ### Why are the changes needed? Cast expressions are often added by the compiler during query analysis. Correlated equality predicates can be less restrictive to support this common pattern if a cast expression guarantees a one-to-one mapping between the child expression and the output datatype (safe up-cast). ### Does this PR introduce _any_ user-facing change? Yes. Safe up-cast expressions are allowed in correlated equality predicates: ```sql SELECT (SELECT SUM(b) FROM VALUES (1, 1), (1, 2) t(a, b) WHERE CAST(a AS STRING) = x) FROM VALUES ('1'), ('2') t(x) ``` Before this change, this query throws AnalysisException "Correlated column is not allowed in predicate..."; after this change, it runs successfully. ### How was this patch tested? Unit tests. Closes #35689 from allisonwang-db/spark-38180-3.1. Authored-by: allisonwang-db <allison.wang@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 1e1f6b2aac5091343d572fb2472f46fa574882eb) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 01 March 2022, 07:14:28 UTC |
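
The "one-to-one mapping" condition can be illustrated with a small Python check. This only illustrates the idea and is not the rule Spark's analyzer applies. Casting integers to strings never merges two distinct inputs, while casting strings to integers can, because `'1'` and `'01'` both become `1`. `is_one_to_one` is a hypothetical helper.

```python
def is_one_to_one(values, cast):
    """Return True if `cast` maps distinct inputs in `values` to distinct outputs."""
    seen = {}
    for v in values:
        out = cast(v)
        if out in seen and seen[out] != v:
            return False  # two different inputs collapsed into one output
        seen[out] = v
    return True
```

`is_one_to_one([1, 2, 10, -3], str)` holds, matching the `CAST(a AS STRING)` example above. `is_one_to_one(["1", "01", "2"], int)` does not.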

| 9d54a95 | Ruifeng Zheng | 24 February 2022, 02:49:52 UTC | [SPARK-38286][SQL] Union's maxRows and maxRowsPerPartition may overflow Check Union's maxRows and maxRowsPerPartition for overflow. Union's maxRows and maxRowsPerPartition may overflow: case 1: ``` scala> val df1 = spark.range(0, Long.MaxValue, 1, 1) df1: org.apache.spark.sql.Dataset[Long] = [id: bigint] scala> val df2 = spark.range(0, 100, 1, 10) df2: org.apache.spark.sql.Dataset[Long] = [id: bigint] scala> val union = df1.union(df2) union: org.apache.spark.sql.Dataset[Long] = [id: bigint] scala> union.queryExecution.logical.maxRowsPerPartition res19: Option[Long] = Some(-9223372036854775799) scala> union.queryExecution.logical.maxRows res20: Option[Long] = Some(-9223372036854775709) ``` case 2: ``` scala> val n = 2000000 n: Int = 2000000 scala> val df1 = spark.range(0, n, 1, 1).selectExpr("id % 5 as key1", "id as value1") df1: org.apache.spark.sql.DataFrame = [key1: bigint, value1: bigint] scala> val df2 = spark.range(0, n, 1, 2).selectExpr("id % 3 as key2", "id as value2") df2: org.apache.spark.sql.DataFrame = [key2: bigint, value2: bigint] scala> val df3 = spark.range(0, n, 1, 3).selectExpr("id % 4 as key3", "id as value3") df3: org.apache.spark.sql.DataFrame = [key3: bigint, value3: bigint] scala> val joined = df1.join(df2, col("key1") === col("key2")).join(df3, col("key1") === col("key3")) joined: org.apache.spark.sql.DataFrame = [key1: bigint, value1: bigint ... 4 more fields] scala> val unioned = joined.select(col("key1"), col("value3")).union(joined.select(col("key1"), col("value2"))) unioned: org.apache.spark.sql.Dataset[org.apache.spark.sql.Row] = [key1: bigint, value3: bigint] scala> unioned.queryExecution.optimizedPlan.maxRows res32: Option[Long] = Some(-2446744073709551616) scala> unioned.queryExecution.optimizedPlan.maxRows res33: Option[Long] = Some(-2446744073709551616) ``` No. Added test suite. Closes #35609 from zhengruifeng/union_maxRows_validate. 
Authored-by: Ruifeng Zheng <ruifengz@foxmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 683bc46ff9a791ab6b9cd3cb95be6bbc368121e0) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 24 February 2022, 02:54:42 UTC |
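
The arithmetic behind case 1 can be checked with a Python sketch that mimics JVM `Long` wrap-around. `df1` contributes `Long.MaxValue` rows, all in one partition, and `df2` contributes 100 rows, 10 per partition. The plain sums therefore wrap to exactly the `res20` and `res19` values above. `checked_sum` is an illustrative overflow-aware alternative that returns `None`. It is an assumption about the general shape of a fix, not the code from this commit.

```python
LONG_MAX = 2**63 - 1


def to_jvm_long(x):
    """Wrap an arbitrary integer into the signed 64-bit range, like JVM Long arithmetic."""
    return (x + 2**63) % 2**64 - 2**63


def unchecked_sum(counts):
    # Old behavior: plain Long addition silently wraps around.
    total = 0
    for c in counts:
        total = to_jvm_long(total + c)
    return total


def checked_sum(counts):
    # Safer behavior: give up (None, like Scala's None) when a child is
    # unbounded or when the sum no longer fits in a Long.
    total = 0
    for c in counts:
        if c is None:
            return None
        total += c
        if total > LONG_MAX:
            return None
    return total
```

`unchecked_sum([LONG_MAX, 100])` gives -9223372036854775709 (`maxRows`), and `unchecked_sum([LONG_MAX, 10])` gives -9223372036854775799 (`maxRowsPerPartition`). `checked_sum` reports `None` for both.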

| 679a7e1 | itholic | 22 February 2022, 07:41:16 UTC | [SPARK-38279][TESTS][3.2] Pin MarkupSafe to 2.0.1 to fix linter failure This PR proposes to pin the Python package `markupsafe` to 2.0.1 to fix the CI failure below. ``` ImportError: cannot import name 'soft_unicode' from 'markupsafe' (/home/runner/work/_temp/setup-sam-43osIE/.venv/lib/python3.10/site-packages/markupsafe/__init__.py) ``` Since `markupsafe==2.1.0` removed `soft_unicode`, `from markupsafe import soft_unicode` no longer works. See https://github.com/aws/aws-sam-cli/issues/3661 for more detail. To fix the CI failure on branch-3.2. No. The existing tests should pass. Closes #35602 from itholic/SPARK-38279. Authored-by: itholic <haejoon.lee@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 79099cf7baf6e094884b5f77e82a4915272f15c5) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 22 February 2022, 07:48:49 UTC |

| 32e7e7b | Itay Bittan | 20 February 2022, 02:51:53 UTC | [MINOR][DOCS] fix default value of history server ### What changes were proposed in this pull request? Align the documentation with the code. ### Why are the changes needed? The [actual default value](https://github.com/apache/spark/blame/master/core/src/main/scala/org/apache/spark/internal/config/History.scala#L198) for `spark.history.custom.executor.log.url.applyIncompleteApplication` is `true`, not `false` as stated in the documentation. ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? Closes #35577 from itayB/doc. Authored-by: Itay Bittan <itay.bittan@gmail.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit ae67adde4d2dc0a75e03710fc3e66ea253feeda3) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 20 February 2022, 02:53:48 UTC |

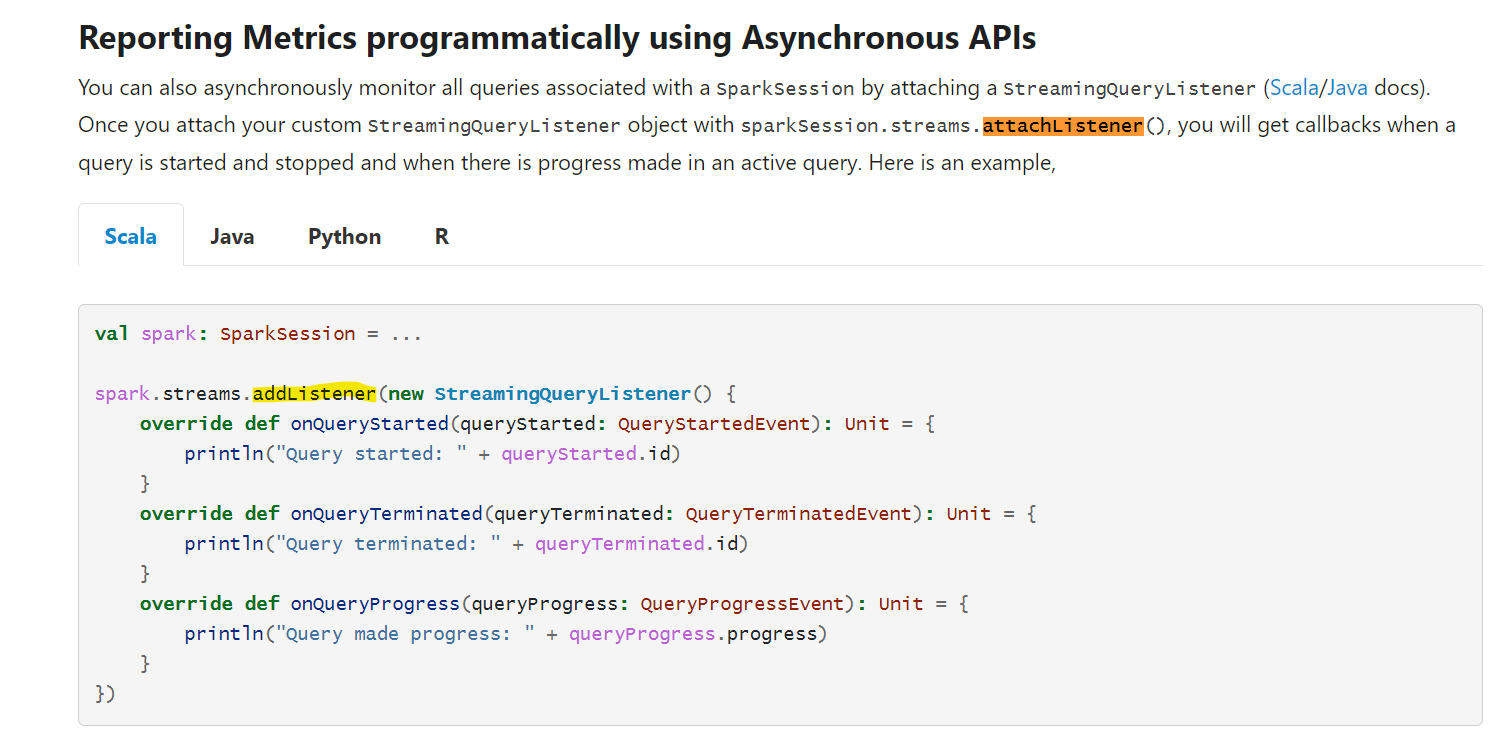

| bbbcb66 | Karthik Subramanian | 18 February 2022, 03:52:11 UTC | [MINOR][DOC] Fix documentation for structured streaming - addListener ### What changes were proposed in this pull request? This PR fixes the incorrect documentation in the Structured Streaming Guide, which says `sparkSession.streams.attachListener()` instead of `sparkSession.streams.addListener()`; the latter is the correct usage, as shown in the code snippet below it in the same doc. ### Why are the changes needed? The documentation was erroneous and needs to be fixed to avoid confusing readers. ### Does this PR introduce _any_ user-facing change? Yes, since it's a doc fix. This fix needs to be applied retroactively to previous versions as well. ### How was this patch tested? Not necessary Closes #35562 from yeskarthik/fix-structured-streaming-docs-1. Authored-by: Karthik Subramanian <karsubr@microsoft.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 837248a0c42d55ad48240647d503ad544e64f016) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 18 February 2022, 03:53:55 UTC |

| 3945792 | tianlzhang | 15 February 2022, 03:52:37 UTC | [SPARK-38211][SQL][DOCS] Add SQL migration guide on restoring loose upcast from string to other types ### What changes were proposed in this pull request? Add doc on restoring loose upcast from string to other types (behavior before 2.4.1) to the SQL migration guide. ### Why are the changes needed? After [SPARK-24586](https://issues.apache.org/jira/browse/SPARK-24586), loose upcasting from string to other types is not allowed by default. Users can still set `spark.sql.legacy.looseUpcast=true` to restore the old behavior, but this is not documented. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Only doc change. Closes #35519 from manuzhang/spark-38211. Authored-by: tianlzhang <tianlzhang@ebay.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 78514e3149bc43b2485e4be0ab982601a842600b) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 15 February 2022, 03:53:20 UTC |

| 755d11d | stczwd | 11 January 2022, 06:23:12 UTC | [SPARK-37860][UI] Fix taskindex in the stage page task event timeline ### What changes were proposed in this pull request? This reverts commit 450b415028c3b00f3a002126cd11318d3932e28f. ### Why are the changes needed? In #32888, shahidki31 changed `taskInfo.index` to `taskInfo.taskId`. However, we generally use `index.attempt` or `taskId`, not `taskId.attempt`, to distinguish tasks within a stage. Thus #32888 was an incorrect fix and should be reverted. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing test suites Closes #35160 from stczwd/SPARK-37860. Authored-by: stczwd <qcsd2011@163.com> Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com> (cherry picked from commit 3d2fde5242c8989688c176b8ed5eb0bff5e1f17f) Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com> | 11 January 2022, 06:24:11 UTC |
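
A small Python model of why `index.attempt` is the meaningful label. A retried task keeps its partition index but receives a new task ID. As a result, `taskId.attempt` produces a label like `102.1` that has no matching `102.0`. The field names only loosely follow Spark's `TaskInfo`, and the IDs are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskAttempt:
    task_id: int  # a new ID for every attempt
    index: int    # partition index within the stage
    attempt: int  # 0 for the first attempt, 1 for the first retry, ...


def label_by_index(t):
    return f"{t.index}.{t.attempt}"


def label_by_task_id(t):
    return f"{t.task_id}.{t.attempt}"


# Partition 0 fails once and is retried under a new task ID.
stage = [TaskAttempt(100, 0, 0), TaskAttempt(101, 1, 0), TaskAttempt(102, 0, 1)]
```

Labelling by index yields `0.0`, `1.0`, `0.1`, which groups both attempts of partition 0. Labelling by task ID yields `100.0`, `101.0`, `102.1`.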

| 2fbf969 | Hyukjin Kwon | 05 January 2022, 08:51:30 UTC | Revert "[SPARK-37779][SQL] Make ColumnarToRowExec plan canonicalizable after (de)serialization" This reverts commit 8ab7cd3ca7e828e114218ae811a9afebb5c9bcc7. | 05 January 2022, 08:51:30 UTC |

| be441e8 | yi.wu | 05 January 2022, 02:48:16 UTC | [SPARK-35714][FOLLOW-UP][CORE] WorkerWatcher should run System.exit in a thread out of RpcEnv ### What changes were proposed in this pull request? This PR proposes to let `WorkerWatcher` run `System.exit` in a separate thread instead of one of the `RpcEnv` threads. ### Why are the changes needed? `System.exit` triggers the shutdown hook that runs `executor.stop`, which results in the same deadlock issue as SPARK-14180. Note, however, that since Spark recently upgraded to Hadoop 3, each hook now has a [timeout threshold](https://github.com/apache/hadoop/blob/d4794dd3b2ba365a9d95ad6aafcf43a1ea40f777/hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/ShutdownHookManager.java#L205-L209) that forcibly interrupts the hook once the timeout is reached. So the deadlock doesn't really occur in the master branch. However, it's still critical for previous releases and is a wrong behavior that should be fixed. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Tested manually. Closes #35069 from Ngone51/fix-workerwatcher-exit. Authored-by: yi.wu <yi.wu@databricks.com> Signed-off-by: yi.wu <yi.wu@databricks.com> (cherry picked from commit 639d6f40e597d79c680084376ece87e40f4d2366) Signed-off-by: yi.wu <yi.wu@databricks.com> | 05 January 2022, 02:49:51 UTC |
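
A Python sketch of the pattern, under stated assumptions: a single-thread pool stands in for the `RpcEnv` dispatcher, and `stop_everything` stands in for the shutdown path, which waits for the RPC threads to finish. None of these names are Spark APIs. Handing the blocking call to a dedicated thread lets the RPC thread return first, so the wait can complete.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for the RpcEnv's message-dispatch thread pool.
rpc_pool = ThreadPoolExecutor(max_workers=1, thread_name_prefix="rpc-dispatcher")
stopped = threading.Event()


def stop_everything():
    # Stand-in for the shutdown path: it waits for the RPC threads to finish,
    # so it must not run on one of those threads.
    rpc_pool.shutdown(wait=True)
    stopped.set()


def on_worker_disconnected():
    # Runs on an RPC thread. Start the exit path on a fresh thread so this
    # RPC thread can return and the shutdown can complete.
    threading.Thread(target=stop_everything, name="worker-watcher-exit").start()


rpc_pool.submit(on_worker_disconnected)
stopped.wait(timeout=5)
```

Calling `stop_everything()` directly inside `on_worker_disconnected` would make an RPC thread wait for itself. Python reports that as a `RuntimeError`; the JVM case described above deadlocks instead.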

| 3aaf722 | Josh Rosen | 04 January 2022, 18:59:53 UTC | [SPARK-37784][SQL] Correctly handle UDTs in CodeGenerator.addBufferedState() ### What changes were proposed in this pull request? This PR fixes a correctness issue in the CodeGenerator.addBufferedState() helper method (which is used by the SortMergeJoinExec operator). The addBufferedState() method generates code for buffering values that come from a row in an operator's input iterator, performing any necessary copying so that the buffered values remain correct after the input iterator advances to the next row. The current logic does not correctly handle UDTs: these fall through to the match statement's default branch, causing UDT values to be buffered without copying. This is problematic if the UDT's underlying SQL type is an array, map, struct, or string type (since those types require copying). Failing to copy values can lead to correctness issues or crashes. This patch's fix is simple: when the dataType is a UDT, use its underlying sqlType for determining whether values need to be copied. I used an existing helper function to perform this type unwrapping. ### Why are the changes needed? Fix a correctness issue. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? I manually tested this change by re-running a workload which failed with a segfault prior to this patch. See JIRA for more details: https://issues.apache.org/jira/browse/SPARK-37784 So far I have been unable to come up with a CI-runnable regression test which would have failed prior to this change (my only working reproduction runs in a pre-production environment and does not fail in my development environment). Closes #35066 from JoshRosen/SPARK-37784. Authored-by: Josh Rosen <joshrosen@databricks.com> Signed-off-by: Josh Rosen <joshrosen@databricks.com> (cherry picked from commit eeef48fac412a57382b02ba3f39456d96379b5f5) Signed-off-by: Josh Rosen <joshrosen@databricks.com> | 04 January 2022, 19:03:20 UTC |
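
A minimal Python sketch of both halves of the problem, using hypothetical names. `scan` reuses one buffer for every row, so a value kept by reference changes when the iterator advances. `needs_copy` shows the fix: unwrap a UDT to its underlying SQL type before deciding whether to copy. This models the idea only; it is not the generated Java code.

```python
class UDT:
    """Hypothetical stand-in for a user-defined type wrapping a SQL type."""

    def __init__(self, sql_type):
        self.sql_type = sql_type


# Types whose values point into the input row's memory and must be copied.
COPY_REQUIRED = {"string", "array", "map", "struct"}


def needs_copy(data_type):
    # The fix: unwrap UDTs to their underlying SQL type before deciding.
    while isinstance(data_type, UDT):
        data_type = data_type.sql_type
    return data_type in COPY_REQUIRED


def scan(values):
    """Yield every value through one reused buffer, like a reused input row."""
    buffer = bytearray(len(values[0]))
    for v in values:
        buffer[:] = v  # overwrite in place
        yield buffer


rows = scan([b"aaaa", b"bbbb"])
first = next(rows)
buffered_without_copy = first      # just a reference to the shared buffer
buffered_with_copy = bytes(first)  # an independent copy
next(rows)                         # advancing overwrites the shared buffer
```

After the second `next`, `buffered_without_copy` reads `b"bbbb"` while `buffered_with_copy` still holds `b"aaaa"`. Treating a UDT backed by an array like a primitive reproduces the first, incorrect outcome.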

| 8ab7cd3 | Hyukjin Kwon | 30 December 2021, 03:38:37 UTC | [SPARK-37779][SQL] Make ColumnarToRowExec plan canonicalizable after (de)serialization This PR proposes to make the `supportsColumnar` sanity check in `ColumnarToRowExec` driver-side only. SPARK-23731 made the plans serializable by leveraging lazy vals, but SPARK-28213 happened to refer to the lazy variable at: https://github.com/apache/spark/blob/77b164aac9764049a4820064421ef82ec0bc14fb/sql/core/src/main/scala/org/apache/spark/sql/execution/Columnar.scala#L68 This can fail during canonicalization, for example during subexpression elimination on the executor side: ``` java.lang.NullPointerException at org.apache.spark.sql.execution.FileSourceScanExec.supportsColumnar$lzycompute(DataSourceScanExec.scala:280) at org.apache.spark.sql.execution.FileSourceScanExec.supportsColumnar(DataSourceScanExec.scala:279) at org.apache.spark.sql.execution.InputAdapter.supportsColumnar(WholeStageCodegenExec.scala:509) at org.apache.spark.sql.execution.ColumnarToRowExec.<init>(Columnar.scala:67) ... at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized$lzycompute(QueryPlan.scala:581) at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized(QueryPlan.scala:580) at org.apache.spark.sql.execution.ScalarSubquery.canonicalized$lzycompute(subquery.scala:110) ... at org.apache.spark.sql.catalyst.expressions.ExpressionEquals.hashCode(EquivalentExpressions.scala:275) ... 
at scala.collection.mutable.HashTable.findEntry$(HashTable.scala:135) at scala.collection.mutable.HashMap.findEntry(HashMap.scala:44) at scala.collection.mutable.HashMap.get(HashMap.scala:74) at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExpr(EquivalentExpressions.scala:46) at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExprTreeHelper$1(EquivalentExpressions.scala:147) at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExprTree(EquivalentExpressions.scala:170) at org.apache.spark.sql.catalyst.expressions.SubExprEvaluationRuntime.$anonfun$proxyExpressions$1(SubExprEvaluationRuntime.scala:89) at org.apache.spark.sql.catalyst.expressions.SubExprEvaluationRuntime.$anonfun$proxyExpressions$1$adapted(SubExprEvaluationRuntime.scala:89) at scala.collection.immutable.List.foreach(List.scala:392) ``` This is still a band-aid fix, but it addresses the issue with a minimal change and should ideally be backported too. A user-facing impact is unlikely, since the bug only arises when `ColumnarToRowExec` has to be canonicalized on the executor side (see the stack trace), but this does fix a bug. A unit test was added. Closes #35058 from HyukjinKwon/SPARK-37779. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 195f1aaf4361fb8f5f31ef7f5c63464767ad88bd) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 30 December 2021, 03:42:24 UTC |

| 85a8f70 | Alex Ott | 19 December 2021, 08:02:40 UTC | [MINOR][PYTHON][DOCS] Fix documentation for Python's recentProgress & lastProgress This small PR fixes incorrect documentation in the Structured Streaming Guide, where Python's `recentProgress` & `lastProgress` were shown as functions although they are [properties](https://github.com/apache/spark/blob/master/python/pyspark/sql/streaming.py#L117), so calling them as functions raises an error: ``` >>> query.lastProgress() Traceback (most recent call last): File "<stdin>", line 1, in <module> TypeError: 'dict' object is not callable ``` The documentation was erroneous and needs to be fixed to avoid confusing readers. Yes, it's a fix of the documentation. Not necessary Closes #34947 from alexott/fix-python-recent-progress-docs. Authored-by: Alex Ott <alexott@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit bad96e6d029dff5be9efaf99f388cd9436741b6f) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 19 December 2021, 08:04:03 UTC |
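
Because these are properties, they are read without parentheses. The Python sketch below uses a hypothetical `FakeStreamingQuery` (not PySpark) to show the difference without a running Spark session. The progress dicts are placeholders.

```python
class FakeStreamingQuery:
    """Hypothetical stand-in for PySpark's StreamingQuery progress accessors."""

    def __init__(self):
        self._progress = [{"batchId": 0}, {"batchId": 1}]

    @property
    def recentProgress(self):
        return list(self._progress)

    @property
    def lastProgress(self):
        return self._progress[-1] if self._progress else None


query = FakeStreamingQuery()
last = query.lastProgress       # correct: property access, no parentheses

try:
    query.lastProgress()        # what the old docs showed
    error = None
except TypeError as exc:
    error = exc
```

The attribute access returns the dict itself. The call form then tries to invoke that dict, which raises the same `TypeError: 'dict' object is not callable` shown above.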

| 113f750 | Daniel Dai | 07 December 2021, 14:48:23 UTC | [SPARK-37556][SQL] Deser void class fail with Java serialization **What changes were proposed in this pull request?** Change the deserialization mapping for primitive type void. **Why are the changes needed?** The void primitive type in Scala should be `classOf[Unit]`, not `classOf[Void]`. Spark erroneously [maps it](https://github.com/apache/spark/blob/v3.2.0/core/src/main/scala/org/apache/spark/serializer/JavaSerializer.scala#L80) differently from all other primitive types. Here is the code: ``` private object JavaDeserializationStream { val primitiveMappings = Map[String, Class[_]]( "boolean" -> classOf[Boolean], "byte" -> classOf[Byte], "char" -> classOf[Char], "short" -> classOf[Short], "int" -> classOf[Int], "long" -> classOf[Long], "float" -> classOf[Float], "double" -> classOf[Double], "void" -> classOf[Void] ) } ``` Here is a demonstration: ``` scala> classOf[Long] val res0: Class[Long] = long scala> classOf[Double] val res1: Class[Double] = double scala> classOf[Byte] val res2: Class[Byte] = byte scala> classOf[Void] val res3: Class[Void] = class java.lang.Void <--- this is wrong scala> classOf[Unit] val res4: Class[Unit] = void <---- this is right ``` This results in a deserialization error if the serialized objects reference the void primitive type: `java.io.InvalidClassException: java.lang.Void; local class name incompatible with stream class name "void"` **Does this PR introduce any user-facing change?** No **How was this patch tested?** Changed the existing test; also tested end to end with code that previously produced the deserialization error, and it passes now. Closes #34816 from daijyc/voidtype. Authored-by: Daniel Dai <jdai@pinterest.com> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit fb40c0e19f84f2de9a3d69d809e9e4031f76ef90) Signed-off-by: Sean Owen <srowen@gmail.com> | 07 December 2021, 15:30:57 UTC |
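
The mismatch can be modeled in a few lines of Python. This is a simplified, hypothetical model, not Spark's or the JDK's code: `CLASS_NAMES` records what `Class.getName()` reports for each candidate, and `resolve_primitive` mimics the name check behind the `InvalidClassException` with a plain string comparison.

```python
# Simplified model (hypothetical, not Spark's code): deserialization checks that
# the class resolved locally has a name compatible with the name in the stream.

# Class.getName() values of the two candidates for "void".
CLASS_NAMES = {
    "classOf[Unit]": "void",            # Scala's classOf[Unit] is the primitive void class
    "classOf[Void]": "java.lang.Void",  # the boxed java.lang.Void wrapper class
}

OLD_MAPPING = {"void": "classOf[Void]"}  # before the fix
NEW_MAPPING = {"void": "classOf[Unit]"}  # after the fix


class InvalidClassError(Exception):
    """Stand-in for java.io.InvalidClassException in this model."""


def resolve_primitive(stream_name, mappings):
    """Look up the local class for a primitive name read from the stream."""
    local_name = CLASS_NAMES[mappings[stream_name]]
    if local_name != stream_name:
        raise InvalidClassError(
            f'{local_name}; local class name incompatible with '
            f'stream class name "{stream_name}"'
        )
    return local_name


print(resolve_primitive("void", NEW_MAPPING))  # void
try:
    resolve_primitive("void", OLD_MAPPING)
except InvalidClassError as exc:
    print(exc)
```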

| 8fb3e39 | weixiuli | 03 December 2021, 03:34:36 UTC | [SPARK-37524][SQL] We should drop all tables after testing dynamic partition pruning ### What changes were proposed in this pull request? Drop all tables after testing dynamic partition pruning. ### Why are the changes needed? We should drop all tables after testing dynamic partition pruning. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing unit tests. Closes #34768 from weixiuli/SPARK-11150-fix. Authored-by: weixiuli <weixiuli@jd.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 2433c942ca39b948efe804aeab0185a3f37f3eea) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 03 December 2021, 03:35:30 UTC |
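
A minimal sketch of the cleanup pattern, with Python's built-in `sqlite3` standing in for Spark SQL and a module-level connection standing in for a shared session (both assumptions for illustration; the suite and table names are hypothetical). `tearDown` drops every table the suite created, so nothing leaks into the next suite.

```python
import sqlite3
import unittest

# Shared connection in autocommit mode; plays the role of a session reused
# across suites (an assumption for illustration).
SHARED = sqlite3.connect(":memory:", isolation_level=None)


def table_names(conn):
    rows = conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
    return sorted(r[0] for r in rows)


class DynamicPruningLikeSuite(unittest.TestCase):
    TABLES = ("fact_stats", "dim_stats")  # hypothetical table names

    def setUp(self):
        for name in self.TABLES:
            SHARED.execute(f"CREATE TABLE {name} (id INTEGER, part INTEGER)")

    def tearDown(self):
        # Drop every table this suite created so the shared session is clean
        # for whatever suite runs next.
        for name in self.TABLES:
            SHARED.execute(f"DROP TABLE IF EXISTS {name}")

    def test_join(self):
        SHARED.execute("INSERT INTO fact_stats VALUES (1, 1), (2, 2)")
        SHARED.execute("INSERT INTO dim_stats VALUES (1, 1)")
        count = SHARED.execute(
            "SELECT COUNT(*) FROM fact_stats f JOIN dim_stats d ON f.part = d.part"
        ).fetchone()[0]
        self.assertEqual(count, 1)
```

Running the suite and then listing tables on the shared connection should show none left behind.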

| 7ba340c | yangjie01 | 22 November 2021, 01:11:40 UTC | [SPARK-37209][YARN][TESTS] Fix `YarnShuffleIntegrationSuite` related UTs when using `hadoop-3.2` profile without `assembly/target/scala-%s/jars` ### What changes were proposed in this pull request? `YarnShuffleIntegrationSuite`, `YarnShuffleAuthSuite` and `YarnShuffleAlternateNameConfigSuite` fail when using the `hadoop-3.2` profile without `assembly/target/scala-%s/jars`, with `java.lang.NoClassDefFoundError: breeze/linalg/Matrix`. The same UTs succeed with the `hadoop-2.7` profile without `assembly/target/scala-%s/jars` because `KryoSerializer.loadableSparkClasses` can work around the error when `Utils.isTesting` is true, but `Utils.isTesting` is false when using the `hadoop-3.2` profile. After investigating, I found that with the `hadoop-2.7` profile `SPARK_TESTING` is propagated to the AM and executors, but with the `hadoop-3.2` profile it is not. To ensure consistent behavior between `hadoop-2.7` and `hadoop-3.2`, this PR manually propagates the `SPARK_TESTING` environment variable, if it exists, so that `Utils.isTesting` is true in the above test scenario. ### Why are the changes needed? To ensure `YarnShuffleIntegrationSuite` related UTs succeed when using the `hadoop-3.2` profile without `assembly/target/scala-%s/jars`. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? - Pass the Jenkins or GitHub Action - Manually tested `YarnShuffleIntegrationSuite`; `YarnShuffleAuthSuite` and `YarnShuffleAlternateNameConfigSuite` can be verified in the same way. Please ensure that the `assembly/target/scala-%s/jars` directory does not exist before executing the test command; you can clean up the whole project by executing the following command or by cloning a fresh local repository. 1. 
run with `hadoop-3.2` profile ``` mvn clean install -Phadoop-3.2 -Pyarn -Dtest=none -DwildcardSuites=org.apache.spark.deploy.yarn.YarnShuffleIntegrationSuite ``` **Before** ``` YarnShuffleIntegrationSuite: - external shuffle service *** FAILED *** FAILED did not equal FINISHED (stdout/stderr was not captured) (BaseYarnClusterSuite.scala:227) Run completed in 48 seconds, 137 milliseconds. Total number of tests run: 1 Suites: completed 2, aborted 0 Tests: succeeded 0, failed 1, canceled 0, ignored 0, pending 0 *** 1 TEST FAILED *** ``` Error stack as follows: ``` 21/11/20 23:00:09.682 main ERROR Client: Application diagnostics message: User class threw exception: org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 6) (localhost executor 1): java.lang.NoClassDefFoundError: breeze/linalg/Matrix at java.lang.Class.forName0(Native Method) at java.lang.Class.forName(Class.java:348) at org.apache.spark.util.Utils$.classForName(Utils.scala:216) at org.apache.spark.serializer.KryoSerializer$.$anonfun$loadableSparkClasses$1(KryoSerializer.scala:537) at scala.collection.immutable.List.flatMap(List.scala:366) at org.apache.spark.serializer.KryoSerializer$.loadableSparkClasses$lzycompute(KryoSerializer.scala:535) at org.apache.spark.serializer.KryoSerializer$.org$apache$spark$serializer$KryoSerializer$$loadableSparkClasses(KryoSerializer.scala:502) at org.apache.spark.serializer.KryoSerializer.newKryo(KryoSerializer.scala:226) at org.apache.spark.serializer.KryoSerializer$$anon$1.create(KryoSerializer.scala:102) at com.esotericsoftware.kryo.pool.KryoPoolQueueImpl.borrow(KryoPoolQueueImpl.java:48) at org.apache.spark.serializer.KryoSerializer$PoolWrapper.borrow(KryoSerializer.scala:109) at org.apache.spark.serializer.KryoSerializerInstance.borrowKryo(KryoSerializer.scala:346) at org.apache.spark.serializer.KryoSerializationStream.<init>(KryoSerializer.scala:266) at 
org.apache.spark.serializer.KryoSerializerInstance.serializeStream(KryoSerializer.scala:432) at org.apache.spark.shuffle.ShufflePartitionPairsWriter.open(ShufflePartitionPairsWriter.scala:76) at org.apache.spark.shuffle.ShufflePartitionPairsWriter.write(ShufflePartitionPairsWriter.scala:59) at org.apache.spark.util.collection.WritablePartitionedIterator.writeNext(WritablePartitionedPairCollection.scala:83) at org.apache.spark.util.collection.ExternalSorter.$anonfun$writePartitionedMapOutput$1(ExternalSorter.scala:772) at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23) at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1468) at org.apache.spark.util.collection.ExternalSorter.writePartitionedMapOutput(ExternalSorter.scala:775) at org.apache.spark.shuffle.sort.SortShuffleWriter.write(SortShuffleWriter.scala:70) at org.apache.spark.shuffle.ShuffleWriteProcessor.write(ShuffleWriteProcessor.scala:59) at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99) at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:52) at org.apache.spark.scheduler.Task.run(Task.scala:136) at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:507) at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1468) at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:510) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) Caused by: java.lang.ClassNotFoundException: breeze.linalg.Matrix at java.net.URLClassLoader.findClass(URLClassLoader.java:382) at java.lang.ClassLoader.loadClass(ClassLoader.java:419) at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:352) at java.lang.ClassLoader.loadClass(ClassLoader.java:352) ... 
32 more ``` **After** ``` YarnShuffleIntegrationSuite: - external shuffle service Run completed in 35 seconds, 188 milliseconds. Total number of tests run: 1 Suites: completed 2, aborted 0 Tests: succeeded 1, failed 0, canceled 0, ignored 0, pending 0 All tests passed. ``` 2. run with `hadoop-2.7` profile ``` mvn clean install -Phadoop-2.7 -Pyarn -Dtest=none -DwildcardSuites=org.apache.spark.deploy.yarn.YarnShuffleIntegrationSuite ``` **Before** ``` YarnShuffleIntegrationSuite: - external shuffle service Run completed in 30 seconds, 828 milliseconds. Total number of tests run: 1 Suites: completed 2, aborted 0 Tests: succeeded 1, failed 0, canceled 0, ignored 0, pending 0 All tests passed. ``` **After** ``` YarnShuffleIntegrationSuite: - external shuffle service Run completed in 30 seconds, 967 milliseconds. Total number of tests run: 1 Suites: completed 2, aborted 0 Tests: succeeded 1, failed 0, canceled 0, ignored 0, pending 0 All tests passed. ``` Closes #34620 from LuciferYang/SPARK-37209. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit a7b3fc7cef4c5df0254b945fe9f6815b072b31dd) Signed-off-by: Sean Owen <srowen@gmail.com> | 22 November 2021, 01:12:25 UTC |
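
The propagation idea can be sketched as follows. `build_container_env` and `is_testing` are hypothetical helpers, not Spark's YARN client code: the first copies `SPARK_TESTING` into a container environment only when it is set in the launcher's environment, and the second loosely mirrors the environment-variable part of `Utils.isTesting` (the real check may also consult other settings).

```python
import os


def build_container_env(base_env, launcher_env=None):
    """Return the environment for a hypothetical AM/executor container.

    Copies SPARK_TESTING from the launcher's environment into the container
    environment if, and only if, it is set there.
    """
    launcher_env = os.environ if launcher_env is None else launcher_env
    env = dict(base_env)  # don't mutate the caller's dict
    if "SPARK_TESTING" in launcher_env:
        env["SPARK_TESTING"] = launcher_env["SPARK_TESTING"]
    return env


def is_testing(env):
    # Environment-variable half of the check only: true when SPARK_TESTING is set.
    return "SPARK_TESTING" in env


print(is_testing(build_container_env({"JAVA_HOME": "/opt/java"}, {"SPARK_TESTING": "1"})))
print(is_testing(build_container_env({"JAVA_HOME": "/opt/java"}, {})))
```

Passing the launcher environment explicitly keeps the helper easy to test; omitting it falls back to the current process environment.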

| 45c84ca | zero323 | 19 November 2021, 00:47:17 UTC | [MINOR][R][DOCS] Fix minor issues in SparkR docs examples ### What changes were proposed in this pull request? This PR fixes two minor problems in SparkR `examples`: - Replace misplaced standard comment (`#`) with roxygen comment (`#'`) in `sparkR.session` `examples` - Add missing comma in `write.stream` examples. ### Why are the changes needed? - `sparkR.session` examples are not fully rendered. - `write.stream` example is not syntactically valid. ### Does this PR introduce _any_ user-facing change? Docs only. ### How was this patch tested? Manual inspection of built docs. Closes #34654 from zero323/sparkr-docs-fixes. Authored-by: zero323 <mszymkiewicz@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 207dd4ce72a5566c554f224edb046106cf97b952) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 19 November 2021, 00:48:10 UTC |

| aa4ef0e | Angerszhuuuu | 08 November 2021, 20:08:32 UTC | [SPARK-37196][SQL] HiveDecimal enforcePrecisionScale failed return null For the following case: ``` withTempDir { dir => withSQLConf(HiveUtils.CONVERT_METASTORE_PARQUET.key -> "false") { withTable("test_precision") { val df = sql("SELECT 'dummy' AS name, 1000000000000000000010.7000000000000010 AS value") df.write.mode("Overwrite").parquet(dir.getAbsolutePath) sql( s""" |CREATE EXTERNAL TABLE test_precision(name STRING, value DECIMAL(18,6)) |STORED AS PARQUET LOCATION '${dir.getAbsolutePath}' |""".stripMargin) checkAnswer(sql("SELECT * FROM test_precision"), Row("dummy", null)) } } } ``` The data is written with the DataFrame schema ``` root |-- name: string (nullable = false) |-- value: decimal(38,16) (nullable = false) ``` but the table is created with the schema ``` root |-- name: string (nullable = false) |-- value: decimal(18,6) (nullable = false) ``` This causes `enforcePrecisionScale` to return `null`: ``` public HiveDecimal getPrimitiveJavaObject(Object o) { return o == null ? null : this.enforcePrecisionScale(((HiveDecimalWritable)o).getHiveDecimal()); } ``` which then throws an NPE when `toCatalystDecimal` is called. We should check whether the return value is `null` to avoid throwing an NPE. This fixes a bug. No user-facing change. Added a UT. Closes #34519 from AngersZhuuuu/SPARK-37196. Authored-by: Angerszhuuuu <angers.zhu@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit a4f8ffbbfb0158a03ff52f1ed0dde75241c3a90e) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 08 November 2021, 20:20:53 UTC |
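
A simplified model of the failure and the fix, using Python's `decimal` module. `enforce_precision_scale` is a hypothetical approximation of Hive's method (round half up to `scale`, then return `None` when the integer part needs more than `precision - scale` digits), and `to_catalyst_decimal` shows the kind of null check the fix adds; neither is the actual Hive or Spark code.

```python
from decimal import ROUND_HALF_UP, Decimal, localcontext


def integer_digits(d):
    """Number of digits left of the decimal point (0 when |d| < 1)."""
    if d == 0:
        return 0
    return max(d.adjusted() + 1, 0)


def enforce_precision_scale(value, precision, scale):
    """Approximate DECIMAL(precision, scale) enforcement; None if it does not fit."""
    if value is None:
        return None
    with localcontext() as ctx:
        ctx.prec = 100  # headroom so quantize() cannot overflow for these inputs
        rounded = value.quantize(Decimal(1).scaleb(-scale), rounding=ROUND_HALF_UP)
    if integer_digits(rounded) > precision - scale:
        return None  # too many integer digits: the value overflows the type
    return rounded


def to_catalyst_decimal(hive_decimal):
    """Null-safe conversion: return None instead of dereferencing a null result."""
    if hive_decimal is None:
        return None
    return hive_decimal  # stand-in for the real conversion


value = Decimal("1000000000000000000010.7000000000000010")  # 22 integer digits
print(to_catalyst_decimal(enforce_precision_scale(value, 18, 6)))  # None
print(to_catalyst_decimal(enforce_precision_scale(Decimal("12.3456789"), 18, 6)))  # 12.345679
```

Rounding happens before the digit check because rounding up can add an integer digit (for example, 999999999999.9999995 becomes 1000000000000.000000 at scale 6).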