https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2

| Revision | Author | Date | Message | Commit Date |

|---|---|---|---|---|

| 44095cb | Sameer Agarwal | 17 February 2018, 01:29:46 UTC | Preparing Spark release v2.3.0-rc4 | 17 February 2018, 01:29:46 UTC |

| 8360da0 | Shintaro Murakami | 17 February 2018, 01:17:55 UTC | [SPARK-23381][CORE] Murmur3 hash generates a different value from other implementations ## What changes were proposed in this pull request? Murmur3 hash generates a different value from the original and other implementations (like Scala standard library and Guava or so) when the length of a bytes array is not multiple of 4. ## How was this patch tested? Added a unit test. **Note: When we merge this PR, please give all the credits to Shintaro Murakami.** Author: Shintaro Murakami <mrkm4ntrgmail.com> Author: gatorsmile <gatorsmile@gmail.com> Author: Shintaro Murakami <mrkm4ntr@gmail.com> Closes #20630 from gatorsmile/pr-20568. (cherry picked from commit d5ed2108d32e1d95b26ee7fed39e8a733e935e2c) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 17 February 2018, 01:18:15 UTC |
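
The SPARK-23381 entry above concerns inputs whose length is not a multiple of 4. For comparison, here is a minimal Python sketch of the reference MurmurHash3 x86 32-bit algorithm, which folds the 1 to 3 leftover bytes into a single partial block. The name `murmur3_32` is chosen for this illustration and the code is not taken from Spark.

```python
MASK32 = 0xFFFFFFFF


def _rotl32(x: int, r: int) -> int:
    """Rotate a 32-bit value left by r bits."""
    return ((x << r) | (x >> (32 - r))) & MASK32


def _mix_block(k: int) -> int:
    """Scramble one (possibly partial) little-endian block."""
    k = (k * 0xCC9E2D51) & MASK32
    k = _rotl32(k, 15)
    return (k * 0x1B873593) & MASK32


def murmur3_32(data: bytes, seed: int = 0) -> int:
    """Reference MurmurHash3 x86_32, returned as an unsigned 32-bit int."""
    h = seed & MASK32
    aligned = len(data) - (len(data) % 4)

    # Body: full 4-byte blocks.
    for i in range(0, aligned, 4):
        h ^= _mix_block(int.from_bytes(data[i:i + 4], "little"))
        h = _rotl32(h, 13)
        h = (h * 5 + 0xE6546B64) & MASK32

    # Tail: the remaining 1..3 bytes are mixed together as ONE partial block.
    tail = data[aligned:]
    if tail:
        h ^= _mix_block(int.from_bytes(tail, "little"))

    # Finalization: fold in the length, then avalanche.
    h ^= len(data)
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & MASK32
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & MASK32
    h ^= h >> 16
    return h
```

A one-byte input exercises only the tail path: `murmur3_32(b"\x00")` gives `0x514E28B7`, the same value as hashing the empty input with seed 1.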

| ccb0a59 | hyukjinkwon | 16 February 2018, 17:41:17 UTC | [SPARK-23446][PYTHON] Explicitly check supported types in toPandas ## What changes were proposed in this pull request? This PR explicitly specifies and checks the types we supported in `toPandas`. This was a hole. For example, we haven't finished the binary type support in Python side yet but now it allows as below: ```python spark.conf.set("spark.sql.execution.arrow.enabled", "false") df = spark.createDataFrame([[bytearray("a")]]) df.toPandas() spark.conf.set("spark.sql.execution.arrow.enabled", "true") df.toPandas() ``` ``` _1 0 [97] _1 0 a ``` This should be disallowed. I think the same things also apply to nested timestamps too. I also added some nicer message about `spark.sql.execution.arrow.enabled` in the error message. ## How was this patch tested? Manually tested and tests added in `python/pyspark/sql/tests.py`. Author: hyukjinkwon <gurwls223@gmail.com> Closes #20625 from HyukjinKwon/pandas_convertion_supported_type. (cherry picked from commit c5857e496ff0d170ed0339f14afc7d36b192da6d) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 16 February 2018, 17:41:32 UTC |

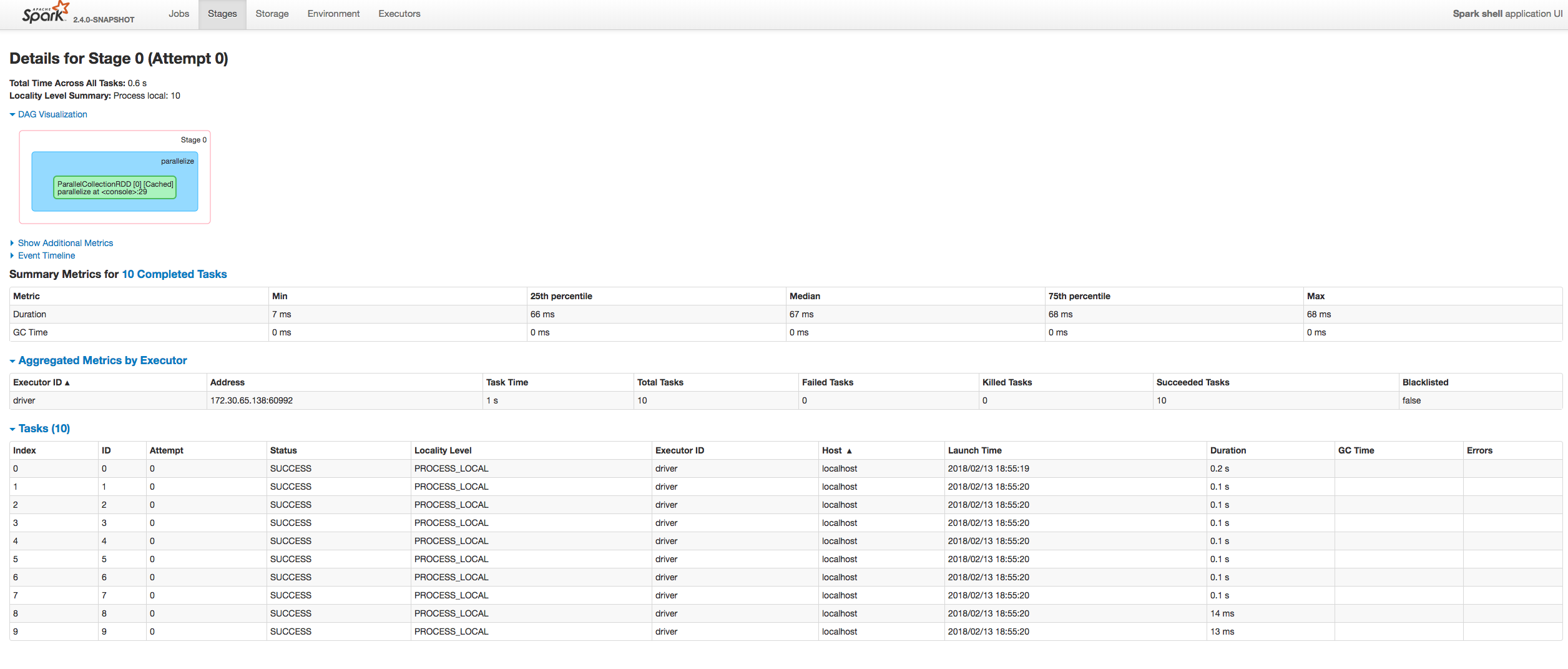

| 75bb19a | “attilapiros” | 15 February 2018, 20:03:41 UTC | [SPARK-23413][UI] Fix sorting tasks by Host / Executor ID at the Stage page ## What changes were proposed in this pull request? Fixing exception got at sorting tasks by Host / Executor ID: ``` java.lang.IllegalArgumentException: Invalid sort column: Host at org.apache.spark.ui.jobs.ApiHelper$.indexName(StagePage.scala:1017) at org.apache.spark.ui.jobs.TaskDataSource.sliceData(StagePage.scala:694) at org.apache.spark.ui.PagedDataSource.pageData(PagedTable.scala:61) at org.apache.spark.ui.PagedTable$class.table(PagedTable.scala:96) at org.apache.spark.ui.jobs.TaskPagedTable.table(StagePage.scala:708) at org.apache.spark.ui.jobs.StagePage.liftedTree1$1(StagePage.scala:293) at org.apache.spark.ui.jobs.StagePage.render(StagePage.scala:282) at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82) at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82) at org.apache.spark.ui.JettyUtils$$anon$3.doGet(JettyUtils.scala:90) at javax.servlet.http.HttpServlet.service(HttpServlet.java:687) at javax.servlet.http.HttpServlet.service(HttpServlet.java:790) at org.spark_project.jetty.servlet.ServletHolder.handle(ServletHolder.java:848) at org.spark_project.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:584) ``` Moreover some refactoring to avoid similar problems by introducing constants for each header name and reusing them at the identification of the corresponding sorting index. ## How was this patch tested? Manually:  (cherry picked from commit 1dc2c1d5e85c5f404f470aeb44c1f3c22786bdea) Author: “attilapiros” <piros.attila.zsolt@gmail.com> Closes #20623 from squito/fix_backport. | 15 February 2018, 20:03:41 UTC |

| 0bd7765 | Liang-Chi Hsieh | 15 February 2018, 17:54:39 UTC | [SPARK-23377][ML] Fixes Bucketizer with multiple columns persistence bug ## What changes were proposed in this pull request? #### Problem: Since 2.3, `Bucketizer` supports multiple input/output columns. We will check if exclusive params are set during transformation. E.g., if `inputCols` and `outputCol` are both set, an error will be thrown. However, when we write `Bucketizer`, looks like the default params and user-supplied params are merged during writing. All saved params are loaded back and set to created model instance. So the default `outputCol` param in `HasOutputCol` trait will be set in `paramMap` and become an user-supplied param. That makes the check of exclusive params failed. #### Fix: This changes the saving logic of Bucketizer to handle this case. This is a quick fix to catch the time of 2.3. We should consider modify the persistence mechanism later. Please see the discussion in the JIRA. Note: The multi-column `QuantileDiscretizer` also has the same issue. ## How was this patch tested? Modified tests. Author: Liang-Chi Hsieh <viirya@gmail.com> Closes #20594 from viirya/SPARK-23377-2. (cherry picked from commit db45daab90ede4c03c1abc9096f4eac584e9db17) Signed-off-by: Joseph K. Bradley <joseph@databricks.com> | 15 February 2018, 19:23:24 UTC |

| 03960fa | Dongjoon Hyun | 15 February 2018, 17:40:08 UTC | [MINOR][SQL] Fix an error message about inserting into bucketed tables ## What changes were proposed in this pull request? This replaces `Sparkcurrently` to `Spark currently` in the following error message. ```scala scala> sql("insert into t2 select * from v1") org.apache.spark.sql.AnalysisException: Output Hive table `default`.`t2` is bucketed but Sparkcurrently does NOT populate bucketed ... ``` ## How was this patch tested? Manual. Author: Dongjoon Hyun <dongjoon@apache.org> Closes #20617 from dongjoon-hyun/SPARK-ERROR-MSG. (cherry picked from commit 6968c3cfd70961c4e86daffd6a156d0a9c1d7a2a) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 15 February 2018, 17:40:28 UTC |

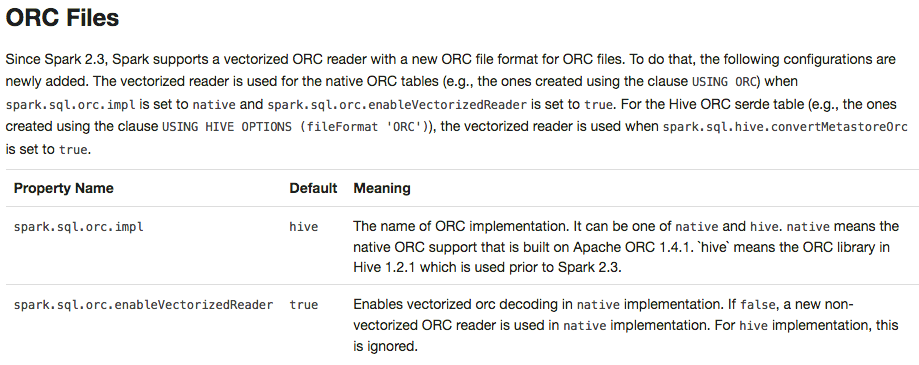

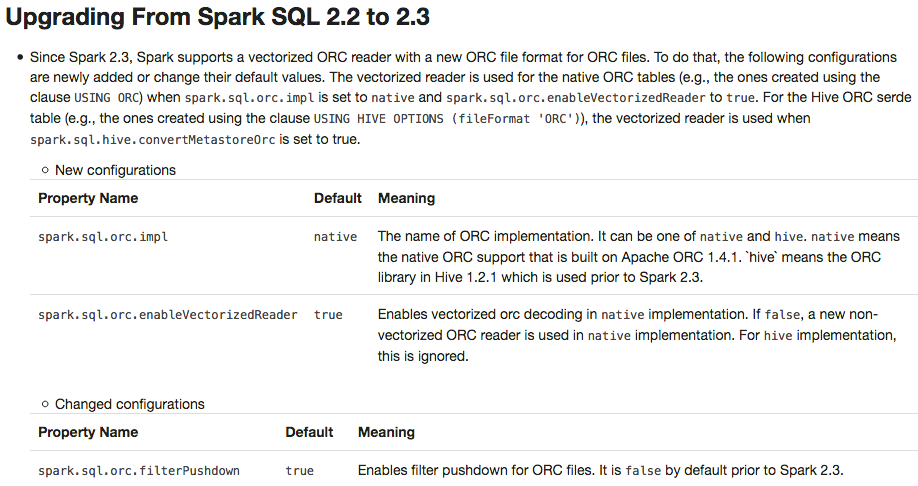

| bae4449 | Dongjoon Hyun | 15 February 2018, 16:55:39 UTC | [SPARK-23426][SQL] Use `hive` ORC impl and disable PPD for Spark 2.3.0 ## What changes were proposed in this pull request? To prevent any regressions, this PR changes ORC implementation to `hive` by default like Spark 2.2.X. Users can enable `native` ORC. Also, ORC PPD is also restored to `false` like Spark 2.2.X.  ## How was this patch tested? Pass all test cases. Author: Dongjoon Hyun <dongjoon@apache.org> Closes #20610 from dongjoon-hyun/SPARK-ORC-DISABLE. (cherry picked from commit 2f0498d1e85a53b60da6a47d20bbdf56b42b7dcb) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 15 February 2018, 16:55:59 UTC |

| d24d131 | Gabor Somogyi | 15 February 2018, 11:52:40 UTC | [SPARK-23422][CORE] YarnShuffleIntegrationSuite fix when SPARK_PREPEND_CLASSES set to 1 ## What changes were proposed in this pull request? YarnShuffleIntegrationSuite fails when SPARK_PREPEND_CLASSES set to 1. Normally mllib built before yarn module. When SPARK_PREPEND_CLASSES used mllib classes are on yarn test classpath. Before 2.3 that did not cause issues. But 2.3 has SPARK-22450, which registered some mllib classes with the kryo serializer. Now it dies with the following error: ` 18/02/13 07:33:29 INFO SparkContext: Starting job: collect at YarnShuffleIntegrationSuite.scala:143 Exception in thread "dag-scheduler-event-loop" java.lang.NoClassDefFoundError: breeze/linalg/DenseMatrix ` In this PR NoClassDefFoundError caught only in case of testing and then do nothing. ## How was this patch tested? Automated: Pass the Jenkins. Author: Gabor Somogyi <gabor.g.somogyi@gmail.com> Closes #20608 from gaborgsomogyi/SPARK-23422. (cherry picked from commit 44e20c42254bc6591b594f54cd94ced5fcfadae3) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 15 February 2018, 11:52:55 UTC |

| f2c0585 | Wenchen Fan | 15 February 2018, 08:59:44 UTC | [SPARK-23419][SPARK-23416][SS] data source v2 write path should re-throw interruption exceptions directly ## What changes were proposed in this pull request? Streaming execution has a list of exceptions that means interruption, and handle them specially. `WriteToDataSourceV2Exec` should also respect this list and not wrap them with `SparkException`. ## How was this patch tested? existing test. Author: Wenchen Fan <wenchen@databricks.com> Closes #20605 from cloud-fan/write. (cherry picked from commit f38c760638063f1fb45e9ee2c772090fb203a4a0) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 15 February 2018, 09:01:37 UTC |

| 129fd45 | gatorsmile | 15 February 2018, 07:56:02 UTC | [SPARK-23094] Revert [] Fix invalid character handling in JsonDataSource ## What changes were proposed in this pull request? This PR is to revert the PR https://github.com/apache/spark/pull/20302, because it causes a regression. ## How was this patch tested? N/A Author: gatorsmile <gatorsmile@gmail.com> Closes #20614 from gatorsmile/revertJsonFix. (cherry picked from commit 95e4b4916065e66a4f8dba57e98e725796f75e04) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 15 February 2018, 07:56:12 UTC |

| bd83f7b | gatorsmile | 15 February 2018, 07:52:59 UTC | [SPARK-23421][SPARK-22356][SQL] Document the behavior change in ## What changes were proposed in this pull request? https://github.com/apache/spark/pull/19579 introduces a behavior change. We need to document it in the migration guide. ## How was this patch tested? Also update the HiveExternalCatalogVersionsSuite to verify it. Author: gatorsmile <gatorsmile@gmail.com> Closes #20606 from gatorsmile/addMigrationGuide. (cherry picked from commit a77ebb0921e390cf4fc6279a8c0a92868ad7e69b) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 15 February 2018, 07:53:10 UTC |

| a5a8a86 | gatorsmile | 14 February 2018, 18:59:36 UTC | Revert "[SPARK-23249][SQL] Improved block merging logic for partitions" This reverts commit f5f21e8c4261c0dfe8e3e788a30b38b188a18f67. | 14 February 2018, 18:59:36 UTC |

| fd66a3b | “attilapiros” | 14 February 2018, 14:45:54 UTC | [SPARK-23394][UI] In RDD storage page show the executor addresses instead of the IDs ## What changes were proposed in this pull request? Extending RDD storage page to show executor addresses in the block table. ## How was this patch tested? Manually:  Author: “attilapiros” <piros.attila.zsolt@gmail.com> Closes #20589 from attilapiros/SPARK-23394. (cherry picked from commit 140f87533a468b1046504fc3ff01fbe1637e41cd) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 14 February 2018, 14:46:10 UTC |

| bb26bdb | Dongjoon Hyun | 14 February 2018, 02:55:24 UTC | [SPARK-23399][SQL] Register a task completion listener first for OrcColumnarBatchReader This PR aims to resolve an open file leakage issue reported at [SPARK-23390](https://issues.apache.org/jira/browse/SPARK-23390) by moving the listener registration position. Currently, the sequence is like the following. 1. Create `batchReader` 2. `batchReader.initialize` opens a ORC file. 3. `batchReader.initBatch` may take a long time to alloc memory in some environment and cause errors. 4. `Option(TaskContext.get()).foreach(_.addTaskCompletionListener(_ => iter.close()))` This PR moves 4 before 2 and 3. To sum up, the new sequence is 1 -> 4 -> 2 -> 3. Manual. The following test case makes OOM intentionally to cause leaked filesystem connection in the current code base. With this patch, leakage doesn't occurs. ```scala // This should be tested manually because it raises OOM intentionally // in order to cause `Leaked filesystem connection`. test("SPARK-23399 Register a task completion listener first for OrcColumnarBatchReader") { withSQLConf(SQLConf.ORC_VECTORIZED_READER_BATCH_SIZE.key -> s"${Int.MaxValue}") { withTempDir { dir => val basePath = dir.getCanonicalPath Seq(0).toDF("a").write.format("orc").save(new Path(basePath, "first").toString) Seq(1).toDF("a").write.format("orc").save(new Path(basePath, "second").toString) val df = spark.read.orc( new Path(basePath, "first").toString, new Path(basePath, "second").toString) val e = intercept[SparkException] { df.collect() } assert(e.getCause.isInstanceOf[OutOfMemoryError]) } } } ``` Author: Dongjoon Hyun <dongjoon@apache.org> Closes #20590 from dongjoon-hyun/SPARK-23399. (cherry picked from commit 357babde5a8eb9710de7016d7ae82dee21fa4ef3) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 14 February 2018, 02:56:37 UTC |
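
The reordering described in the SPARK-23399 entry above (1 -> 4 -> 2 -> 3) can be illustrated with a small Python sketch. `FakeReader` and `run_task` are hypothetical stand-ins invented for this example, not Spark classes; the point is only that a cleanup callback registered before a failing step still runs, while one registered after it never gets the chance.

```python
class FakeReader:
    """Hypothetical reader that opens a resource and may fail while allocating."""

    def __init__(self) -> None:
        self.opened = False
        self.closed = False

    def initialize(self) -> None:
        # Step 2: opens the underlying file.
        self.opened = True

    def init_batch(self) -> None:
        # Step 3: simulate an allocation failure.
        raise MemoryError("simulated allocation failure")

    def close(self) -> None:
        self.closed = True


def run_task(reader: FakeReader, register_first: bool) -> bool:
    """Run the steps and report whether the reader ended up closed."""
    completion_listeners = []  # callbacks invoked when the task ends
    try:
        if register_first:
            completion_listeners.append(reader.close)  # step 4, moved up
        reader.initialize()                            # step 2
        reader.init_batch()                            # step 3, raises here
        if not register_first:
            completion_listeners.append(reader.close)  # step 4, old position
    except MemoryError:
        pass  # the task fails, but completion listeners still run below
    finally:
        for listener in completion_listeners:
            listener()
    return reader.closed


leaked = not run_task(FakeReader(), register_first=False)  # old order leaks
cleaned = run_task(FakeReader(), register_first=True)      # new order closes
```

With the old order the reader stays open after the failure; with the listener registered first it is closed even though `init_batch` raised.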

| 4f6a457 | gatorsmile | 13 February 2018, 19:56:49 UTC | [SPARK-23400][SQL] Add a constructors for ScalaUDF ## What changes were proposed in this pull request? In this upcoming 2.3 release, we changed the interface of `ScalaUDF`. Unfortunately, some Spark packages (e.g., spark-deep-learning) are using our internal class `ScalaUDF`. In the release 2.3, we added new parameters into this class. The users hit the binary compatibility issues and got the exception: ``` > java.lang.NoSuchMethodError: org.apache.spark.sql.catalyst.expressions.ScalaUDF.<init>(Ljava/lang/Object;Lorg/apache/spark/sql/types/DataType;Lscala/collection/Seq;Lscala/collection/Seq;Lscala/Option;)V ``` This PR is to improve the backward compatibility. However, we definitely should not encourage the external packages to use our internal classes. This might make us hard to maintain/develop the codes in Spark. ## How was this patch tested? N/A Author: gatorsmile <gatorsmile@gmail.com> Closes #20591 from gatorsmile/scalaUDF. (cherry picked from commit 2ee76c22b6e48e643694c9475e5f0d37124215e7) Signed-off-by: Shixiong Zhu <zsxwing@gmail.com> | 13 February 2018, 19:56:57 UTC |

| 320ffb1 | Joseph K. Bradley | 13 February 2018, 19:18:45 UTC | [SPARK-23154][ML][DOC] Document backwards compatibility guarantees for ML persistence ## What changes were proposed in this pull request? Added documentation about what MLlib guarantees in terms of loading ML models and Pipelines from old Spark versions. Discussed & confirmed on linked JIRA. Author: Joseph K. Bradley <joseph@databricks.com> Closes #20592 from jkbradley/SPARK-23154-backwards-compat-doc. (cherry picked from commit d58fe28836639e68e262812d911f167cb071007b) Signed-off-by: Joseph K. Bradley <joseph@databricks.com> | 13 February 2018, 19:18:55 UTC |

| ab01ba7 | Bogdan Raducanu | 13 February 2018, 17:49:52 UTC | [SPARK-23316][SQL] AnalysisException after max iteration reached for IN query ## What changes were proposed in this pull request? Added flag ignoreNullability to DataType.equalsStructurally. The previous semantic is for ignoreNullability=false. When ignoreNullability=true equalsStructurally ignores nullability of contained types (map key types, value types, array element types, structure field types). In.checkInputTypes calls equalsStructurally to check if the children types match. They should match regardless of nullability (which is just a hint), so it is now called with ignoreNullability=true. ## How was this patch tested? New test in SubquerySuite Author: Bogdan Raducanu <bogdan@databricks.com> Closes #20548 from bogdanrdc/SPARK-23316. (cherry picked from commit 05d051293fe46938e9cb012342fea6e8a3715cd4) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 13 February 2018, 17:50:11 UTC |
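
As a rough illustration of the `ignoreNullability` flag described in the SPARK-23316 entry above, the following Python sketch models a tiny type tree and a structural comparison. The classes `Atomic`, `Array`, `Map`, `Field` and `Struct` and the function `equals_structurally` are simplified assumptions made for this example; they are not Spark's `DataType` hierarchy or its actual implementation.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Atomic:
    name: str


@dataclass(frozen=True)
class Array:
    element: "DataType"
    contains_null: bool = True


@dataclass(frozen=True)
class Map:
    key: "DataType"
    value: "DataType"
    value_contains_null: bool = True


@dataclass(frozen=True)
class Field:
    data_type: "DataType"
    nullable: bool = True


@dataclass(frozen=True)
class Struct:
    fields: Tuple[Field, ...]


DataType = Union[Atomic, Array, Map, Struct]


def equals_structurally(a: DataType, b: DataType,
                        ignore_nullability: bool = False) -> bool:
    """Compare two type trees, optionally ignoring nullability of nested types."""
    if isinstance(a, Array) and isinstance(b, Array):
        return ((ignore_nullability or a.contains_null == b.contains_null)
                and equals_structurally(a.element, b.element, ignore_nullability))
    if isinstance(a, Map) and isinstance(b, Map):
        return ((ignore_nullability
                 or a.value_contains_null == b.value_contains_null)
                and equals_structurally(a.key, b.key, ignore_nullability)
                and equals_structurally(a.value, b.value, ignore_nullability))
    if isinstance(a, Struct) and isinstance(b, Struct):
        return (len(a.fields) == len(b.fields)
                and all((ignore_nullability or fa.nullable == fb.nullable)
                        and equals_structurally(fa.data_type, fb.data_type,
                                                ignore_nullability)
                        for fa, fb in zip(a.fields, b.fields)))
    # Atomic types, or mismatched kinds: fall back to plain equality.
    return a == b


nullable_ints = Array(Atomic("int"), contains_null=True)
non_null_ints = Array(Atomic("int"), contains_null=False)
```

In this model `equals_structurally(nullable_ints, non_null_ints)` is false under the default strict comparison and true with `ignore_nullability=True`, which mirrors why an `IN` check on child types would want the relaxed mode.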

| dbb1b39 | huangtengfei | 13 February 2018, 15:59:21 UTC | [SPARK-23053][CORE] taskBinarySerialization and task partitions calculate in DagScheduler.submitMissingTasks should keep the same RDD checkpoint status ## What changes were proposed in this pull request? When we run concurrent jobs using the same rdd which is marked to do checkpoint. If one job has finished running the job, and start the process of RDD.doCheckpoint, while another job is submitted, then submitStage and submitMissingTasks will be called. In [submitMissingTasks](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L961), will serialize taskBinaryBytes and calculate task partitions which are both affected by the status of checkpoint, if the former is calculated before doCheckpoint finished, while the latter is calculated after doCheckpoint finished, when run task, rdd.compute will be called, for some rdds with particular partition type such as [UnionRDD](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/rdd/UnionRDD.scala) who will do partition type cast, will get a ClassCastException because the part params is actually a CheckpointRDDPartition. This error occurs because rdd.doCheckpoint occurs in the same thread that called sc.runJob, while the task serialization occurs in the DAGSchedulers event loop. ## How was this patch tested? the exist uts and also add a test case in DAGScheduerSuite to show the exception case. Author: huangtengfei <huangtengfei@huangtengfeideMacBook-Pro.local> Closes #20244 from ivoson/branch-taskpart-mistype. (cherry picked from commit 091a000d27f324de8c5c527880854ecfcf5de9a4) Signed-off-by: Imran Rashid <irashid@cloudera.com> | 13 February 2018, 16:00:05 UTC |

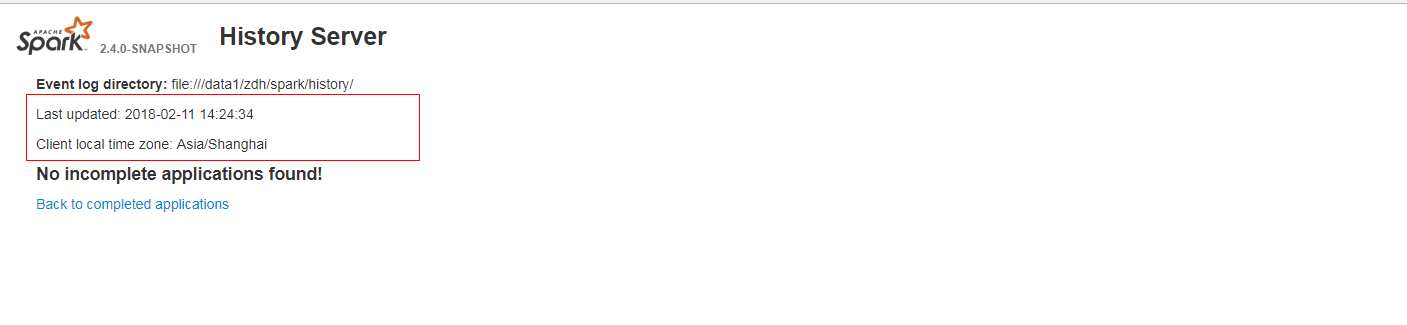

| 1c81c0c | guoxiaolong | 13 February 2018, 12:23:10 UTC | [SPARK-23384][WEB-UI] When it has no incomplete(completed) applications found, the last updated time is not formatted and client local time zone is not show in history server web ui. ## What changes were proposed in this pull request? When it has no incomplete(completed) applications found, the last updated time is not formatted and client local time zone is not show in history server web ui. It is a bug. fix before:  fix after:  ## How was this patch tested? (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. Author: guoxiaolong <guo.xiaolong1@zte.com.cn> Closes #20573 from guoxiaolongzte/SPARK-23384. (cherry picked from commit 300c40f50ab4258d697f06a814d1491dc875c847) Signed-off-by: Sean Owen <sowen@cloudera.com> | 13 February 2018, 12:23:19 UTC |

| 3737c3d | gatorsmile | 13 February 2018, 06:05:13 UTC | [SPARK-20090][FOLLOW-UP] Revert the deprecation of `names` in PySpark ## What changes were proposed in this pull request? Deprecating the field `name` in PySpark is not expected. This PR is to revert the change. ## How was this patch tested? N/A Author: gatorsmile <gatorsmile@gmail.com> Closes #20595 from gatorsmile/removeDeprecate. (cherry picked from commit 407f67249639709c40c46917700ed6dd736daa7d) Signed-off-by: hyukjinkwon <gurwls223@gmail.com> | 13 February 2018, 06:05:33 UTC |

| 43f5e40 | hyukjinkwon | 13 February 2018, 00:47:28 UTC | [SPARK-23352][PYTHON][BRANCH-2.3] Explicitly specify supported types in Pandas UDFs ## What changes were proposed in this pull request? This PR backports https://github.com/apache/spark/pull/20531: It explicitly specifies supported types in Pandas UDFs. The main change here is to add a deduplicated and explicit type checking in `returnType` ahead with documenting this; however, it happened to fix multiple things. 1. Currently, we don't support `BinaryType` in Pandas UDFs, for example, see: ```python from pyspark.sql.functions import pandas_udf pudf = pandas_udf(lambda x: x, "binary") df = spark.createDataFrame([[bytearray(1)]]) df.select(pudf("_1")).show() ``` ``` ... TypeError: Unsupported type in conversion to Arrow: BinaryType ``` We can document this behaviour for its guide. 2. Since we can check the return type ahead, we can fail fast before actual execution. ```python # we can fail fast at this stage because we know the schema ahead pandas_udf(lambda x: x, BinaryType()) ``` ## How was this patch tested? Manually tested and unit tests for `BinaryType` and `ArrayType(...)` were added. Author: hyukjinkwon <gurwls223@gmail.com> Closes #20588 from HyukjinKwon/PR_TOOL_PICK_PR_20531_BRANCH-2.3. | 13 February 2018, 00:47:28 UTC |

| befb22d | sychen | 13 February 2018, 00:00:47 UTC | [SPARK-23230][SQL] When hive.default.fileformat is other kinds of file types, create textfile table cause a serde error When hive.default.fileformat is other kinds of file types, create textfile table cause a serde error. We should take the default type of textfile and sequencefile both as org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe. ``` set hive.default.fileformat=orc; create table tbl( i string ) stored as textfile; desc formatted tbl; Serde Library org.apache.hadoop.hive.ql.io.orc.OrcSerde InputFormat org.apache.hadoop.mapred.TextInputFormat OutputFormat org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat ``` Author: sychen <sychen@ctrip.com> Closes #20406 from cxzl25/default_serde. (cherry picked from commit 4104b68e958cd13975567a96541dac7cccd8195c) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 13 February 2018, 00:01:16 UTC |

| 2b80571 | Dongjoon Hyun | 12 February 2018, 23:26:37 UTC | [SPARK-23313][DOC] Add a migration guide for ORC ## What changes were proposed in this pull request? This PR adds a migration guide documentation for ORC.  ## How was this patch tested? N/A. Author: Dongjoon Hyun <dongjoon@apache.org> Closes #20484 from dongjoon-hyun/SPARK-23313. (cherry picked from commit 6cb59708c70c03696c772fbb5d158eed57fe67d4) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 12 February 2018, 23:27:00 UTC |

| 9632c46 | Takuya UESHIN | 12 February 2018, 20:20:29 UTC | [SPARK-22002][SQL][FOLLOWUP][TEST] Add a test to check if the original schema doesn't have metadata. ## What changes were proposed in this pull request? This is a follow-up pr of #19231 which modified the behavior to remove metadata from JDBC table schema. This pr adds a test to check if the schema doesn't have metadata. ## How was this patch tested? Added a test and existing tests. Author: Takuya UESHIN <ueshin@databricks.com> Closes #20585 from ueshin/issues/SPARK-22002/fup1. (cherry picked from commit 0c66fe4f22f8af4932893134bb0fd56f00fabeae) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 12 February 2018, 20:21:04 UTC |

| 4e13820 | James Thompson | 12 February 2018, 19:34:56 UTC | [SPARK-23388][SQL] Support for Parquet Binary DecimalType in VectorizedColumnReader ## What changes were proposed in this pull request? Re-add support for parquet binary DecimalType in VectorizedColumnReader ## How was this patch tested? Existing test suite Author: James Thompson <jamesthomp@users.noreply.github.com> Closes #20580 from jamesthomp/jt/add-back-binary-decimal. (cherry picked from commit 5bb11411aec18b8d623e54caba5397d7cb8e89f0) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 12 February 2018, 19:35:06 UTC |

| 70be603 | Sameer Agarwal | 12 February 2018, 19:08:34 UTC | Preparing development version 2.3.1-SNAPSHOT | 12 February 2018, 19:08:34 UTC |

| 89f6fcb | Sameer Agarwal | 12 February 2018, 19:08:28 UTC | Preparing Spark release v2.3.0-rc3 | 12 February 2018, 19:08:28 UTC |

| d31c4ae | liuxian | 12 February 2018, 14:49:45 UTC | [SPARK-23391][CORE] It may lead to overflow for some integer multiplication ## What changes were proposed in this pull request? In the `getBlockData`,`blockId.reduceId` is the `Int` type, when it is greater than 2^28, `blockId.reduceId*8` will overflow In the `decompress0`, `len` and `unitSize` are Int type, so `len * unitSize` may lead to overflow ## How was this patch tested? N/A Author: liuxian <liu.xian3@zte.com.cn> Closes #20581 from 10110346/overflow2. (cherry picked from commit 4a4dd4f36f65410ef5c87f7b61a960373f044e61) Signed-off-by: Sean Owen <sowen@cloudera.com> | 12 February 2018, 14:49:52 UTC |
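
The arithmetic behind the SPARK-23391 entry above can be checked with a short Python sketch. Python integers do not overflow, so the helper `to_int32` (a name invented for this example) emulates the wrap-around of a 32-bit signed JVM `Int`; `offset_int` and `offset_long` are likewise hypothetical. Widening before multiplying is shown as one common remedy, not necessarily the exact change made in the patch.

```python
def to_int32(x: int) -> int:
    """Wrap an arbitrary integer to a signed 32-bit value, as JVM Int arithmetic would."""
    x &= 0xFFFFFFFF
    return x - 0x100000000 if x >= 0x80000000 else x


def offset_int(reduce_id: int) -> int:
    """reduce_id * 8 computed in 32-bit arithmetic (can wrap around)."""
    return to_int32(reduce_id * 8)


def offset_long(reduce_id: int) -> int:
    """reduce_id * 8 computed after widening, so the product is not truncated."""
    return reduce_id * 8


wrapped = offset_int(2 ** 28)   # -2147483648: 2^31 does not fit in a signed Int
widened = offset_long(2 ** 28)  # 2147483648
```

Note that the product already wraps at exactly `2 ** 28`, because `2 ** 31` is one past the largest signed 32-bit value.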

| 1e3118c | Wenchen Fan | 12 February 2018, 14:07:59 UTC | [SPARK-22977][SQL] fix web UI SQL tab for CTAS ## What changes were proposed in this pull request? This is a regression in Spark 2.3. In Spark 2.2, we have a fragile UI support for SQL data writing commands. We only track the input query plan of `FileFormatWriter` and display its metrics. This is not ideal because we don't know who triggered the writing(can be table insertion, CTAS, etc.), but it's still useful to see the metrics of the input query. In Spark 2.3, we introduced a new mechanism: `DataWritigCommand`, to fix the UI issue entirely. Now these writing commands have real children, and we don't need to hack into the `FileFormatWriter` for the UI. This also helps with `explain`, now `explain` can show the physical plan of the input query, while in 2.2 the physical writing plan is simply `ExecutedCommandExec` and it has no child. However there is a regression in CTAS. CTAS commands don't extend `DataWritigCommand`, and we don't have the UI hack in `FileFormatWriter` anymore, so the UI for CTAS is just an empty node. See https://issues.apache.org/jira/browse/SPARK-22977 for more information about this UI issue. To fix it, we should apply the `DataWritigCommand` mechanism to CTAS commands. TODO: In the future, we should refactor this part and create some physical layer code pieces for data writing, and reuse them in different writing commands. We should have different logical nodes for different operators, even some of them share some same logic, e.g. CTAS, CREATE TABLE, INSERT TABLE. Internally we can share the same physical logic. ## How was this patch tested? manually tested. For data source table <img width="644" alt="1" src="https://user-images.githubusercontent.com/3182036/35874155-bdffab28-0ba6-11e8-94a8-e32e106ba069.png"> For hive table <img width="666" alt="2" src="https://user-images.githubusercontent.com/3182036/35874161-c437e2a8-0ba6-11e8-98ed-7930f01432c5.png"> Author: Wenchen Fan <wenchen@databricks.com> Closes #20521 from cloud-fan/UI. (cherry picked from commit 0e2c266de7189473177f45aa68ea6a45c7e47ec3) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 12 February 2018, 14:08:16 UTC |

| 79e8650 | Wenchen Fan | 12 February 2018, 07:46:23 UTC | [SPARK-23390][SQL] Flaky Test Suite: FileBasedDataSourceSuite in Spark 2.3/hadoop 2.7 ## What changes were proposed in this pull request? This test only fails with sbt on Hadoop 2.7. I can't reproduce it locally, but here is my speculation from looking at the code: 1. `FileSystem.delete` doesn't delete the directory entirely, and somehow we can still open the file as a 0-length empty file (just speculation). 2. ORC intentionally allows empty files, and the reader fails during reading without closing the file stream. This PR improves the test to make sure all files are deleted and can't be opened. ## How was this patch tested? N/A Author: Wenchen Fan <wenchen@databricks.com> Closes #20584 from cloud-fan/flaky-test. (cherry picked from commit 6efd5d117e98074d1b16a5c991fbd38df9aa196e) Signed-off-by: Sameer Agarwal <sameerag@apache.org> | 12 February 2018, 07:46:43 UTC |

| 7e2a2b3 | Wenchen Fan | 11 February 2018, 16:03:49 UTC | [SPARK-23376][SQL] creating UnsafeKVExternalSorter with BytesToBytesMap may fail ## What changes were proposed in this pull request? This is a long-standing bug in `UnsafeKVExternalSorter` and was reported on the dev list multiple times. When creating `UnsafeKVExternalSorter` with `BytesToBytesMap`, we need to create an `UnsafeInMemorySorter` to sort the data in `BytesToBytesMap`. The data format of the sorter and the map is the same, so no data movement is required. However, both the sorter and the map need a pointer array for some bookkeeping work. There is an optimization in `UnsafeKVExternalSorter`: reuse the pointer array between the sorter and the map, to avoid an extra memory allocation. This sounds like a reasonable optimization: the length of the `BytesToBytesMap` pointer array is at least 4 times the number of keys (to avoid hash collisions, the hash table size should be at least 2 times the number of keys, and each key occupies 2 slots). `UnsafeInMemorySorter` needs the pointer array size to be 4 times the number of entries, so we are safe to reuse the pointer array. However, the number of keys in the map doesn't equal the number of entries in the map, because `BytesToBytesMap` supports duplicated keys. This breaks the assumption of the above optimization, and we may run out of space when inserting data into the sorter and hit the error ``` java.lang.IllegalStateException: There is no space for new record at org.apache.spark.util.collection.unsafe.sort.UnsafeInMemorySorter.insertRecord(UnsafeInMemorySorter.java:239) at org.apache.spark.sql.execution.UnsafeKVExternalSorter.<init>(UnsafeKVExternalSorter.java:149) ... ``` This PR fixes this bug by creating a new pointer array if the existing one is not big enough. ## How was this patch tested? a new test Author: Wenchen Fan <wenchen@databricks.com> Closes #20561 from cloud-fan/bug. 
(cherry picked from commit 4bbd7443ebb005f81ed6bc39849940ac8db3b3cc) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 11 February 2018, 16:04:14 UTC |
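
The sizing argument in the row above can be sketched in a few lines. This is a simplified Python model, not Spark's Java code: `pointer_array_for_sorter` and its parameters are hypothetical names, and the factor of 4 slots per record is taken from the commit message.

```python
def pointer_array_for_sorter(map_array_len, num_records, slots_per_record=4):
    """Return the pointer array length the sorter should use.

    Reuse the map's array when it can hold every record; otherwise
    fall back to a new array that is big enough. All names here are
    hypothetical and only model the check described in the commit.
    """
    required = num_records * slots_per_record
    if map_array_len >= required:
        return map_array_len   # safe to reuse the existing array
    return required            # too small: allocate a new array instead


# 100 distinct keys and 100 records: the map's array is large enough.
print(pointer_array_for_sorter(map_array_len=400, num_records=100))
# 100 distinct keys but 250 records (duplicated keys): reuse would run out of space.
print(pointer_array_for_sorter(map_array_len=400, num_records=250))
```

The point of the sketch is that the check must use the number of records, not the number of distinct keys, because duplicated keys make the two differ.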

| 8875e47 | Takuya UESHIN | 11 February 2018, 13:16:47 UTC | [SPARK-23387][SQL][PYTHON][TEST][BRANCH-2.3] Backport assertPandasEqual to branch-2.3. ## What changes were proposed in this pull request? When backporting a pr with tests using `assertPandasEqual` from master to branch-2.3, the tests fail because `assertPandasEqual` doesn't exist in branch-2.3. We should backport `assertPandasEqual` to branch-2.3 to avoid the failures. ## How was this patch tested? Modified tests. Author: Takuya UESHIN <ueshin@databricks.com> Closes #20577 from ueshin/issues/SPARK-23387/branch-2.3. | 11 February 2018, 13:16:47 UTC |

| 9fa7b0e | Li Jin | 11 February 2018, 08:31:35 UTC | [SPARK-23314][PYTHON] Add ambiguous=False when localizing tz-naive timestamps in Arrow codepath to deal with dst ## What changes were proposed in this pull request? When calling `tz_localize` on a tz-naive timestamp, pandas throws an exception if the timestamp is ambiguous because it falls in a daylight saving time transition, e.g., `2015-11-01 01:30:00`. This PR fixes this issue by setting `ambiguous=False` when calling `tz_localize`, which is the same default behavior as pytz. ## How was this patch tested? Add `test_timestamp_dst` Author: Li Jin <ice.xelloss@gmail.com> Closes #20537 from icexelloss/SPARK-23314. (cherry picked from commit a34fce19bc0ee5a7e36c6ecba75d2aeb70fdcbc7) Signed-off-by: hyukjinkwon <gurwls223@gmail.com> | 11 February 2018, 08:31:48 UTC |

| b7571b9 | Takuya UESHIN | 10 February 2018, 16:08:02 UTC | [SPARK-23360][SQL][PYTHON] Get local timezone from environment via pytz, or dateutil. ## What changes were proposed in this pull request? Currently we use `tzlocal()` to get the Python local timezone, but it sometimes causes unexpected behavior. I changed the lookup to use pytz if the timezone is specified in an environment variable, or the timezone file via dateutil. ## How was this patch tested? Added a test and existing tests. Author: Takuya UESHIN <ueshin@databricks.com> Closes #20559 from ueshin/issues/SPARK-23360/master. (cherry picked from commit 97a224a855c4410b2dfb9c0bcc6aae583bd28e92) Signed-off-by: hyukjinkwon <gurwls223@gmail.com> | 10 February 2018, 16:08:16 UTC |

| f3a9a7f | Feng Liu | 10 February 2018, 00:21:47 UTC | [SPARK-23275][SQL] fix the thread leaking in hive/tests ## What changes were proposed in this pull request? This is a follow-up of https://github.com/apache/spark/pull/20441. The two lines can actually trigger the Hive metastore bug: https://issues.apache.org/jira/browse/HIVE-16844 The two configs are not in the default `ObjectStore` properties, so any Hive command run after these two lines will set the `propsChanged` flag in `ObjectStore.setConf` and then cause thread leaks. I don't think the two lines are very useful. They can be removed safely. ## How was this patch tested? (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. Author: Feng Liu <fengliu@databricks.com> Closes #20562 from liufengdb/fix-omm. (cherry picked from commit 6d7c38330e68c7beb10f54eee8b4f607ee3c4136) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 10 February 2018, 00:22:14 UTC |

| 49771ac | Jacek Laskowski | 10 February 2018, 00:18:30 UTC | [MINOR][HIVE] Typo fixes ## What changes were proposed in this pull request? Typo fixes (with expanding a Hive property) ## How was this patch tested? local build. Awaiting Jenkins Author: Jacek Laskowski <jacek@japila.pl> Closes #20550 from jaceklaskowski/hiveutils-typos. (cherry picked from commit 557938e2839afce26a10a849a2a4be8fc4580427) Signed-off-by: Sean Owen <sowen@cloudera.com> | 10 February 2018, 00:18:38 UTC |

| 08eb95f | liuxian | 09 February 2018, 14:45:06 UTC | [SPARK-23358][CORE] When the number of partitions is greater than 2^28, it will result in an error result ## What changes were proposed in this pull request? In `checkIndexAndDataFile`, `blocks` has type `Int`. When it is greater than 2^28, `blocks*8` overflows, which produces an incorrect result. In fact, `blocks` is the number of partitions. ## How was this patch tested? Manual test Author: liuxian <liu.xian3@zte.com.cn> Closes #20544 from 10110346/overflow. (cherry picked from commit f77270b8811bbd8956d0c08fa556265d2c5ee20e) Signed-off-by: Sean Owen <sowen@cloudera.com> | 09 February 2018, 14:45:15 UTC |
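
The overflow described in the row above can be reproduced with a small model. Python integers do not overflow, so this sketch wraps values to 32 bits by hand to imitate a JVM `Int`; `to_int32`, `index_size_int32`, and `index_size_int64` are hypothetical helper names, not Spark functions.

```python
def to_int32(x):
    """Wrap a Python int to a signed 32-bit value, like a JVM Int."""
    x &= 0xFFFFFFFF
    return x - 0x100000000 if x >= 0x80000000 else x


def index_size_int32(blocks):
    # Models `blocks * 8` evaluated in 32-bit arithmetic.
    return to_int32(blocks * 8)


def index_size_int64(blocks):
    # Models widening to a 64-bit value before multiplying.
    return blocks * 8


blocks = 2 ** 28
print(index_size_int32(blocks))  # -2147483648: the product wrapped around
print(index_size_int64(blocks))  # 2147483648: the intended size
```

Widening the operand before the multiplication is the usual remedy for this class of bug; the sketch does not claim to mirror the exact change made in the patch.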

| 196304a | hyukjinkwon | 09 February 2018, 06:21:10 UTC | [SPARK-23328][PYTHON] Disallow default value None in na.replace/replace when 'to_replace' is not a dictionary ## What changes were proposed in this pull request? This PR proposes to disallow default value None when 'to_replace' is not a dictionary. It seems weird we set the default value of `value` to `None` and we ended up allowing the case as below: ```python >>> df.show() ``` ``` +----+------+-----+ | age|height| name| +----+------+-----+ | 10| 80|Alice| ... ``` ```python >>> df.na.replace('Alice').show() ``` ``` +----+------+----+ | age|height|name| +----+------+----+ | 10| 80|null| ... ``` **After** This PR targets to disallow the case above: ```python >>> df.na.replace('Alice').show() ``` ``` ... TypeError: value is required when to_replace is not a dictionary. ``` while we still allow when `to_replace` is a dictionary: ```python >>> df.na.replace({'Alice': None}).show() ``` ``` +----+------+----+ | age|height|name| +----+------+----+ | 10| 80|null| ... ``` ## How was this patch tested? Manually tested, tests were added in `python/pyspark/sql/tests.py` and doctests were fixed. Author: hyukjinkwon <gurwls223@gmail.com> Closes #20499 from HyukjinKwon/SPARK-19454-followup. (cherry picked from commit 4b4ee2601079f12f8f410a38d2081793cbdedc14) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 09 February 2018, 06:21:32 UTC |
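
The argument check described in the row above relies on telling "no value passed" apart from "value is `None`". A minimal sketch of that sentinel pattern follows, assuming a hypothetical standalone `replace` function that only builds the replacement mapping; it is not the PySpark implementation.

```python
_NO_VALUE = object()  # hypothetical sentinel meaning "argument was not passed"


def replace(to_replace, value=_NO_VALUE):
    """Build an {old: new} mapping, mimicking only the argument validation."""
    if isinstance(to_replace, dict):
        return dict(to_replace)  # the mapping already carries the new values
    if value is _NO_VALUE:
        raise TypeError("value is required when to_replace is not a dictionary.")
    keys = to_replace if isinstance(to_replace, (list, tuple)) else [to_replace]
    return {key: value for key in keys}


print(replace({"Alice": None}))  # allowed: dictionary form
print(replace("Alice", None))    # allowed: explicit None
try:
    replace("Alice")             # rejected: value omitted
except TypeError as err:
    print(err)
```

Using a private sentinel instead of `None` as the default keeps an explicit `None` usable as a real replacement value.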

| dfb1614 | Dongjoon Hyun | 09 February 2018, 04:54:57 UTC | [SPARK-23186][SQL] Initialize DriverManager first before loading JDBC Drivers ## What changes were proposed in this pull request? Since some JDBC Drivers have class initialization code to call `DriverManager`, we need to initialize `DriverManager` first in order to avoid potential executor-side **deadlock** situations like the following (or [STORM-2527](https://issues.apache.org/jira/browse/STORM-2527)). ``` Thread 9587: (state = BLOCKED) - sun.reflect.NativeConstructorAccessorImpl.newInstance0(java.lang.reflect.Constructor, java.lang.Object[]) bci=0 (Compiled frame; information may be imprecise) - sun.reflect.NativeConstructorAccessorImpl.newInstance(java.lang.Object[]) bci=85, line=62 (Compiled frame) - sun.reflect.DelegatingConstructorAccessorImpl.newInstance(java.lang.Object[]) bci=5, line=45 (Compiled frame) - java.lang.reflect.Constructor.newInstance(java.lang.Object[]) bci=79, line=423 (Compiled frame) - java.lang.Class.newInstance() bci=138, line=442 (Compiled frame) - java.util.ServiceLoader$LazyIterator.nextService() bci=119, line=380 (Interpreted frame) - java.util.ServiceLoader$LazyIterator.next() bci=11, line=404 (Interpreted frame) - java.util.ServiceLoader$1.next() bci=37, line=480 (Interpreted frame) - java.sql.DriverManager$2.run() bci=21, line=603 (Interpreted frame) - java.sql.DriverManager$2.run() bci=1, line=583 (Interpreted frame) - java.security.AccessController.doPrivileged(java.security.PrivilegedAction) bci=0 (Compiled frame) - java.sql.DriverManager.loadInitialDrivers() bci=27, line=583 (Interpreted frame) - java.sql.DriverManager.<clinit>() bci=32, line=101 (Interpreted frame) - org.apache.phoenix.mapreduce.util.ConnectionUtil.getConnection(java.lang.String, java.lang.Integer, java.lang.String, java.util.Properties) bci=12, line=98 (Interpreted frame) - org.apache.phoenix.mapreduce.util.ConnectionUtil.getInputConnection(org.apache.hadoop.conf.Configuration, 
java.util.Properties) bci=22, line=57 (Interpreted frame) - org.apache.phoenix.mapreduce.PhoenixInputFormat.getQueryPlan(org.apache.hadoop.mapreduce.JobContext, org.apache.hadoop.conf.Configuration) bci=61, line=116 (Interpreted frame) - org.apache.phoenix.mapreduce.PhoenixInputFormat.createRecordReader(org.apache.hadoop.mapreduce.InputSplit, org.apache.hadoop.mapreduce.TaskAttemptContext) bci=10, line=71 (Interpreted frame) - org.apache.spark.rdd.NewHadoopRDD$$anon$1.<init>(org.apache.spark.rdd.NewHadoopRDD, org.apache.spark.Partition, org.apache.spark.TaskContext) bci=233, line=156 (Interpreted frame) Thread 9170: (state = BLOCKED) - org.apache.phoenix.jdbc.PhoenixDriver.<clinit>() bci=35, line=125 (Interpreted frame) - sun.reflect.NativeConstructorAccessorImpl.newInstance0(java.lang.reflect.Constructor, java.lang.Object[]) bci=0 (Compiled frame) - sun.reflect.NativeConstructorAccessorImpl.newInstance(java.lang.Object[]) bci=85, line=62 (Compiled frame) - sun.reflect.DelegatingConstructorAccessorImpl.newInstance(java.lang.Object[]) bci=5, line=45 (Compiled frame) - java.lang.reflect.Constructor.newInstance(java.lang.Object[]) bci=79, line=423 (Compiled frame) - java.lang.Class.newInstance() bci=138, line=442 (Compiled frame) - org.apache.spark.sql.execution.datasources.jdbc.DriverRegistry$.register(java.lang.String) bci=89, line=46 (Interpreted frame) - org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$createConnectionFactory$2.apply() bci=7, line=53 (Interpreted frame) - org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$createConnectionFactory$2.apply() bci=1, line=52 (Interpreted frame) - org.apache.spark.sql.execution.datasources.jdbc.JDBCRDD$$anon$1.<init>(org.apache.spark.sql.execution.datasources.jdbc.JDBCRDD, org.apache.spark.Partition, org.apache.spark.TaskContext) bci=81, line=347 (Interpreted frame) - org.apache.spark.sql.execution.datasources.jdbc.JDBCRDD.compute(org.apache.spark.Partition, 
org.apache.spark.TaskContext) bci=7, line=339 (Interpreted frame) ``` ## How was this patch tested? N/A Author: Dongjoon Hyun <dongjoon@apache.org> Closes #20359 from dongjoon-hyun/SPARK-23186. (cherry picked from commit 8cbcc33876c773722163b2259644037bbb259bd1) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 09 February 2018, 04:55:17 UTC |

| 68f3a07 | Wenchen Fan | 08 February 2018, 11:20:11 UTC | [SPARK-23268][SQL][FOLLOWUP] Reorganize packages in data source V2 ## What changes were proposed in this pull request? This is a followup of https://github.com/apache/spark/pull/20435. While reorganizing the packages for streaming data source v2, the top level stream read/write support interfaces should not be in the reader/writer package, but should be in the `sources.v2` package, to follow the `ReadSupport`, `WriteSupport`, etc. ## How was this patch tested? N/A Author: Wenchen Fan <wenchen@databricks.com> Closes #20509 from cloud-fan/followup. (cherry picked from commit a75f927173632eee1316879447cb62c8cf30ae37) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 08 February 2018, 11:20:33 UTC |

| 0c2a210 | Wenchen Fan | 08 February 2018, 08:08:54 UTC | [SPARK-23348][SQL] append data using saveAsTable should adjust the data types ## What changes were proposed in this pull request? For inserting/appending data to an existing table, Spark should adjust the data types of the input query according to the table schema, or fail fast if it's uncastable. There are several ways to insert/append data: SQL API, `DataFrameWriter.insertInto`, `DataFrameWriter.saveAsTable`. The first 2 ways create `InsertIntoTable` plan, and the last way creates `CreateTable` plan. However, we only adjust input query data types for `InsertIntoTable`, and users may hit weird errors when appending data using `saveAsTable`. See the JIRA for the error case. This PR fixes this bug by adjusting data types for `CreateTable` too. ## How was this patch tested? new test. Author: Wenchen Fan <wenchen@databricks.com> Closes #20527 from cloud-fan/saveAsTable. (cherry picked from commit 7f5f5fb1296275a38da0adfa05125dd8ebf729ff) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 08 February 2018, 08:09:03 UTC |

| 0538302 | hyukjinkwon | 08 February 2018, 07:47:12 UTC | [SPARK-23319][TESTS][BRANCH-2.3] Explicitly specify Pandas and PyArrow versions in PySpark tests (to skip or test) This PR backports https://github.com/apache/spark/pull/20487 to branch-2.3. Author: hyukjinkwon <gurwls223@gmail.com> Author: Takuya UESHIN <ueshin@databricks.com> Closes #20534 from HyukjinKwon/PR_TOOL_PICK_PR_20487_BRANCH-2.3. | 08 February 2018, 07:47:12 UTC |

| db59e55 | gatorsmile | 08 February 2018, 04:21:18 UTC | Revert [SPARK-22279][SQL] Turn on spark.sql.hive.convertMetastoreOrc by default ## What changes were proposed in this pull request? This is to revert the changes made in https://github.com/apache/spark/pull/19499 , because this causes a regression. We should not ignore the table-specific compression conf when the Hive serde tables are converted to the data source tables. ## How was this patch tested? The existing tests. Author: gatorsmile <gatorsmile@gmail.com> Closes #20536 from gatorsmile/revert22279. (cherry picked from commit 3473fda6dc77bdfd84b3de95d2082856ad4f8626) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 08 February 2018, 04:21:31 UTC |

| 2ba07d5 | hyukjinkwon | 08 February 2018, 00:29:31 UTC | [SPARK-23300][TESTS][BRANCH-2.3] Prints out if Pandas and PyArrow are installed or not in PySpark SQL tests This PR backports https://github.com/apache/spark/pull/20473 to branch-2.3. Author: hyukjinkwon <gurwls223@gmail.com> Closes #20533 from HyukjinKwon/backport-20473. | 08 February 2018, 00:29:31 UTC |

| 05239af | Liang-Chi Hsieh | 07 February 2018, 17:48:49 UTC | [SPARK-23345][SQL] Remove open stream record even closing it fails ## What changes were proposed in this pull request? When `DebugFilesystem` closes an opened stream and an exception occurs, we still need to remove the open stream record from `DebugFilesystem`. Otherwise, it will report a leaked filesystem connection. ## How was this patch tested? Existing tests. Author: Liang-Chi Hsieh <viirya@gmail.com> Closes #20524 from viirya/SPARK-23345. (cherry picked from commit 9841ae0313cbee1f083f131f9446808c90ed5a7b) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 07 February 2018, 17:48:57 UTC |
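
The behavior described in the row above is the classic "clean up bookkeeping in a `finally` block" pattern. The sketch below is a hypothetical Python model (`TrackedStream` and the `registry` set are illustrative names, not part of `DebugFilesystem`).

```python
class TrackedStream:
    """A stream whose open state is recorded in a shared registry."""

    def __init__(self, name, registry, fail_on_close=False):
        self.name = name
        self.registry = registry
        self.fail_on_close = fail_on_close
        registry.add(name)  # record the stream as open

    def close(self):
        try:
            if self.fail_on_close:
                raise IOError("close failed")  # simulated failure
        finally:
            # Always drop the record, even when closing raised.
            self.registry.discard(self.name)


open_streams = set()
stream = TrackedStream("part-00000", open_streams, fail_on_close=True)
try:
    stream.close()
except IOError:
    pass
print(open_streams)  # set(): no leaked record despite the failed close
```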

| cb22e83 | gatorsmile | 07 February 2018, 14:24:16 UTC | [SPARK-23122][PYSPARK][FOLLOWUP] Replace registerTempTable by createOrReplaceTempView ## What changes were proposed in this pull request? Replace `registerTempTable` by `createOrReplaceTempView`. ## How was this patch tested? N/A Author: gatorsmile <gatorsmile@gmail.com> Closes #20523 from gatorsmile/updateExamples. (cherry picked from commit 9775df67f924663598d51723a878557ddafb8cfd) Signed-off-by: hyukjinkwon <gurwls223@gmail.com> | 07 February 2018, 14:24:30 UTC |

| 874d3f8 | gatorsmile | 07 February 2018, 00:46:43 UTC | [SPARK-23327][SQL] Update the description and tests of three external API or functions ## What changes were proposed in this pull request? Update the description and tests of three external API or functions `createFunction `, `length` and `repartitionByRange ` ## How was this patch tested? N/A Author: gatorsmile <gatorsmile@gmail.com> Closes #20495 from gatorsmile/updateFunc. (cherry picked from commit c36fecc3b416c38002779c3cf40b6a665ac4bf13) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 07 February 2018, 00:46:55 UTC |

| f9c9132 | Wenchen Fan | 06 February 2018, 20:43:45 UTC | [SPARK-23315][SQL] failed to get output from canonicalized data source v2 related plans ## What changes were proposed in this pull request? `DataSourceV2Relation` keeps a `fullOutput` and resolves the real output on demand by column name lookup. i.e. ``` lazy val output: Seq[Attribute] = reader.readSchema().map(_.name).map { name => fullOutput.find(_.name == name).get } ``` This will be broken after we canonicalize the plan, because all attribute names become "None", see https://github.com/apache/spark/blob/v2.3.0-rc1/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/Canonicalize.scala#L42 To fix this, `DataSourceV2Relation` should just keep `output`, and update the `output` when doing column pruning. ## How was this patch tested? a new test case Author: Wenchen Fan <wenchen@databricks.com> Closes #20485 from cloud-fan/canonicalize. (cherry picked from commit b96a083b1c6ff0d2c588be9499b456e1adce97dc) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 06 February 2018, 20:43:53 UTC |

| 77cccc5 | Li Jin | 06 February 2018, 20:30:04 UTC | [MINOR][TEST] Fix class name for Pandas UDF tests In https://github.com/apache/spark/commit/b2ce17b4c9fea58140a57ca1846b2689b15c0d61, I mistakenly renamed `VectorizedUDFTests` to `ScalarPandasUDF`. This PR fixes the mistake. Existing tests. Author: Li Jin <ice.xelloss@gmail.com> Closes #20489 from icexelloss/fix-scalar-udf-tests. (cherry picked from commit caf30445632de6aec810309293499199e7a20892) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 06 February 2018, 20:37:25 UTC |

| 036a04b | Wenchen Fan | 06 February 2018, 20:27:37 UTC | [SPARK-23312][SQL][FOLLOWUP] add a config to turn off vectorized cache reader ## What changes were proposed in this pull request? https://github.com/apache/spark/pull/20483 tried to provide a way to turn off the new columnar cache reader, to restore the behavior in 2.2. However, even if we turn off that config, the behavior is still different from 2.2. If the output data are rows, we still enable whole stage codegen for the scan node, which differs from 2.2, so we should fix that as well. ## How was this patch tested? existing tests. Author: Wenchen Fan <wenchen@databricks.com> Closes #20513 from cloud-fan/cache. (cherry picked from commit ac7454cac04a1d9252b3856360eda5c3e8bcb8da) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 06 February 2018, 20:27:52 UTC |

| 7782fd0 | Takuya UESHIN | 06 February 2018, 18:46:48 UTC | [SPARK-23310][CORE][FOLLOWUP] Fix Java style check issues. ## What changes were proposed in this pull request? This is a follow-up of #20492 which broke lint-java checks. This pr fixes the lint-java issues. ``` [ERROR] src/main/java/org/apache/spark/util/collection/unsafe/sort/UnsafeSorterSpillReader.java:[79] (sizes) LineLength: Line is longer than 100 characters (found 114). ``` ## How was this patch tested? Checked manually in my local environment. Author: Takuya UESHIN <ueshin@databricks.com> Closes #20514 from ueshin/issues/SPARK-23310/fup1. (cherry picked from commit 7db9979babe52d15828967c86eb77e3fb2791579) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 06 February 2018, 18:47:04 UTC |

| a511544 | Takuya UESHIN | 06 February 2018, 09:30:50 UTC | [SPARK-23334][SQL][PYTHON] Fix pandas_udf with return type StringType() to handle str type properly in Python 2. ## What changes were proposed in this pull request? In Python 2, when `pandas_udf` tries to return string type value created in the udf with `".."`, the execution fails. E.g., ```python from pyspark.sql.functions import pandas_udf, col import pandas as pd df = spark.range(10) str_f = pandas_udf(lambda x: pd.Series(["%s" % i for i in x]), "string") df.select(str_f(col('id'))).show() ``` raises the following exception: ``` ... java.lang.AssertionError: assertion failed: Invalid schema from pandas_udf: expected StringType, got BinaryType at scala.Predef$.assert(Predef.scala:170) at org.apache.spark.sql.execution.python.ArrowEvalPythonExec$$anon$2.<init>(ArrowEvalPythonExec.scala:93) ... ``` It seems that pyarrow ignores the `type` parameter for `pa.Array.from_pandas()` and treats the data as binary type when the type is string type and the string values are `str` instead of `unicode` in Python 2. This PR adds a workaround for that case. ## How was this patch tested? Added a test and existing tests. Author: Takuya UESHIN <ueshin@databricks.com> Closes #20507 from ueshin/issues/SPARK-23334. (cherry picked from commit 63c5bf13ce5cd3b8d7e7fb88de881ed207fde720) Signed-off-by: hyukjinkwon <gurwls223@gmail.com> | 06 February 2018, 09:31:06 UTC |

| 4493303 | Takuya UESHIN | 06 February 2018, 09:29:37 UTC | [SPARK-23290][SQL][PYTHON][BACKPORT-2.3] Use datetime.date for date type when converting Spark DataFrame to Pandas DataFrame. ## What changes were proposed in this pull request? This is a backport of #20506. In #18664, there was a change in how `DateType` is being returned to users ([line 1968 in dataframe.py](https://github.com/apache/spark/pull/18664/files#diff-6fc344560230bf0ef711bb9b5573f1faR1968)). This can cause client code which works in Spark 2.2 to fail. See [SPARK-23290](https://issues.apache.org/jira/browse/SPARK-23290?focusedCommentId=16350917&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16350917) for an example. This pr modifies to use `datetime.date` for date type as Spark 2.2 does. ## How was this patch tested? Tests modified to fit the new behavior and existing tests. Author: Takuya UESHIN <ueshin@databricks.com> Closes #20515 from ueshin/issues/SPARK-23290_2.3. | 06 February 2018, 09:29:37 UTC |

| 521494d | Shixiong Zhu | 06 February 2018, 06:42:42 UTC | [SPARK-23326][WEBUI] schedulerDelay should return 0 when the task is running ## What changes were proposed in this pull request? When a task is still running, metrics like executorRunTime are not available. Then `schedulerDelay` will be almost the same as `duration` and that's confusing. This PR makes `schedulerDelay` return 0 when the task is running which is the same behavior as 2.2. ## How was this patch tested? `AppStatusUtilsSuite.schedulerDelay` Author: Shixiong Zhu <zsxwing@gmail.com> Closes #20493 from zsxwing/SPARK-23326. (cherry picked from commit f3f1e14bb73dfdd2927d95b12d7d61d22de8a0ac) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 06 February 2018, 06:43:22 UTC |
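
The rule in the row above can be sketched as a small function. This is a hedged model only: `scheduler_delay` and its parameters are hypothetical names, and the subtraction used for finished tasks is a simplifying assumption rather than the exact formula in Spark.

```python
def scheduler_delay(duration_ms, run_time_ms=None, overhead_ms=0):
    """Return the scheduler delay in milliseconds.

    While a task is running its metrics are not available yet
    (modelled here as run_time_ms=None), so the delay is reported as 0
    instead of being almost equal to the duration.
    """
    if duration_ms is None or run_time_ms is None:
        return 0  # task still running: metrics missing
    # Assumed formula for finished tasks, clamped at zero.
    return max(0, duration_ms - run_time_ms - overhead_ms)


print(scheduler_delay(5000))             # running task
print(scheduler_delay(5000, 4200, 300))  # finished task
```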

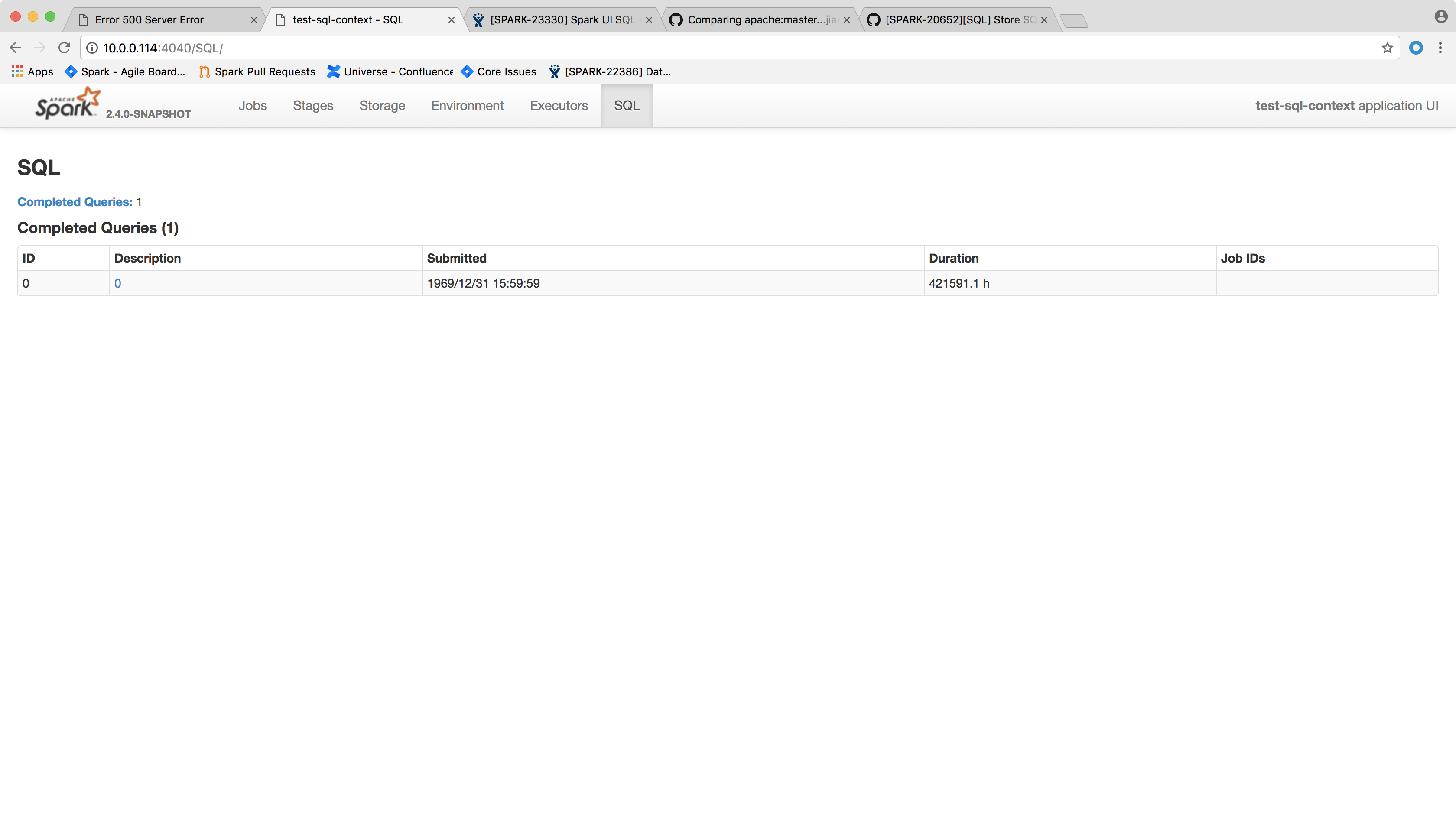

| 4aa9aaf | Xingbo Jiang | 05 February 2018, 22:17:11 UTC | [SPARK-23330][WEBUI] Spark UI SQL executions page throws NPE ## What changes were proposed in this pull request? Spark SQL executions page throws the following error and the page crashes: ``` HTTP ERROR 500 Problem accessing /SQL/. Reason: Server Error Caused by: java.lang.NullPointerException at scala.collection.immutable.StringOps$.length$extension(StringOps.scala:47) at scala.collection.immutable.StringOps.length(StringOps.scala:47) at scala.collection.IndexedSeqOptimized$class.isEmpty(IndexedSeqOptimized.scala:27) at scala.collection.immutable.StringOps.isEmpty(StringOps.scala:29) at scala.collection.TraversableOnce$class.nonEmpty(TraversableOnce.scala:111) at scala.collection.immutable.StringOps.nonEmpty(StringOps.scala:29) at org.apache.spark.sql.execution.ui.ExecutionTable.descriptionCell(AllExecutionsPage.scala:182) at org.apache.spark.sql.execution.ui.ExecutionTable.row(AllExecutionsPage.scala:155) at org.apache.spark.sql.execution.ui.ExecutionTable$$anonfun$8.apply(AllExecutionsPage.scala:204) at org.apache.spark.sql.execution.ui.ExecutionTable$$anonfun$8.apply(AllExecutionsPage.scala:204) at org.apache.spark.ui.UIUtils$$anonfun$listingTable$2.apply(UIUtils.scala:339) at org.apache.spark.ui.UIUtils$$anonfun$listingTable$2.apply(UIUtils.scala:339) at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234) at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234) at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59) at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48) at scala.collection.TraversableLike$class.map(TraversableLike.scala:234) at scala.collection.AbstractTraversable.map(Traversable.scala:104) at org.apache.spark.ui.UIUtils$.listingTable(UIUtils.scala:339) at org.apache.spark.sql.execution.ui.ExecutionTable.toNodeSeq(AllExecutionsPage.scala:203) at 
org.apache.spark.sql.execution.ui.AllExecutionsPage.render(AllExecutionsPage.scala:67) at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82) at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82) at org.apache.spark.ui.JettyUtils$$anon$3.doGet(JettyUtils.scala:90) at javax.servlet.http.HttpServlet.service(HttpServlet.java:687) at javax.servlet.http.HttpServlet.service(HttpServlet.java:790) at org.eclipse.jetty.servlet.ServletHolder.handle(ServletHolder.java:848) at org.eclipse.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:584) at org.eclipse.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1180) at org.eclipse.jetty.servlet.ServletHandler.doScope(ServletHandler.java:512) at org.eclipse.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1112) at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141) at org.eclipse.jetty.server.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:213) at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:134) at org.eclipse.jetty.server.Server.handle(Server.java:534) at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:320) at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:251) at org.eclipse.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:283) at org.eclipse.jetty.io.FillInterest.fillable(FillInterest.java:108) at org.eclipse.jetty.io.SelectChannelEndPoint$2.run(SelectChannelEndPoint.java:93) at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.executeProduceConsume(ExecuteProduceConsume.java:303) at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.produceConsume(ExecuteProduceConsume.java:148) at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.run(ExecuteProduceConsume.java:136) at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:671) at 
org.eclipse.jetty.util.thread.QueuedThreadPool$2.run(QueuedThreadPool.java:589) at java.lang.Thread.run(Thread.java:748) ``` One possible reason this page fails is that the `SparkListenerSQLExecutionStart` event gets dropped before it is processed, so the execution description and details don't get updated. This was not an issue in 2.2 because it would ignore any job start event that arrives before the corresponding execution start event, which doesn't sound like a good decision. We should handle the null values on the page side, that is, give a default value when `execution.details` or `execution.description` is null. Another possible approach is not to spill the `LiveExecutionData` in `SQLAppStatusListener.update(exec: LiveExecutionData)` if `exec.details` is null. This is not ideal because you will not see the execution if the `SparkListenerSQLExecutionStart` event is lost, since `AllExecutionsPage` only reads executions from the KVStore. ## How was this patch tested? After the change, the page shows the following: Author: Xingbo Jiang <xingbo.jiang@databricks.com> Closes #20502 from jiangxb1987/executionPage. (cherry picked from commit c2766b07b4b9ed976931966a79c65043e81cf694) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 05 February 2018, 22:17:23 UTC |
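
The page-side approach from the row above, substituting a default when a field is null, can be sketched as follows. `description_cell` is a hypothetical helper that returns plain data instead of HTML; it only illustrates the null handling, not the actual `AllExecutionsPage` code.

```python
def description_cell(description, details, default=""):
    """Return display data for one execution row, tolerating missing fields."""
    safe_description = description if description is not None else default
    safe_details = details if details is not None else default
    return {
        "description": safe_description,
        # Only offer a "details" toggle when there is something to show.
        "has_details": len(safe_details) > 0,
    }


# An execution whose start event was lost has neither field set.
print(description_cell(None, None))
print(description_cell("count at <console>:24", "== Physical Plan == ..."))
```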

| 173449c | Sital Kedia | 05 February 2018, 18:19:18 UTC | [SPARK-23310][CORE] Turn off read ahead input stream for unsafe shuffle reader To fix regression for TPC-DS queries Author: Sital Kedia <skedia@fb.com> Closes #20492 from sitalkedia/turn_off_async_inputstream. (cherry picked from commit 03b7e120dd7ff7848c936c7a23644da5bd7219ab) Signed-off-by: Sameer Agarwal <sameerag@apache.org> | 05 February 2018, 18:20:02 UTC |

| e688ffe | Shixiong Zhu | 05 February 2018, 10:41:49 UTC | [SPARK-23307][WEBUI] Sort jobs/stages/tasks/queries with the completed timestamp before cleaning them up ## What changes were proposed in this pull request? Sort jobs/stages/tasks/queries by completion timestamp before cleaning them up, to make the behavior consistent with 2.2. ## How was this patch tested? - Jenkins. - Manually ran the following code and checked the UI for jobs/stages/tasks/queries. ``` spark.ui.retainedJobs 10 spark.ui.retainedStages 10 spark.sql.ui.retainedExecutions 10 spark.ui.retainedTasks 10 ``` ``` new Thread() { override def run() { spark.range(1, 2).foreach { i => Thread.sleep(10000) } } }.start() Thread.sleep(5000) for (_ <- 1 to 20) { new Thread() { override def run() { spark.range(1, 2).foreach { i => } } }.start() } Thread.sleep(15000) spark.range(1, 2).foreach { i => } sc.makeRDD(1 to 100, 100).foreach { i => } ``` Author: Shixiong Zhu <zsxwing@gmail.com> Closes #20481 from zsxwing/SPARK-23307. (cherry picked from commit a6bf3db20773ba65cbc4f2775db7bd215e78829a) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 05 February 2018, 10:42:09 UTC |
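
The cleanup order described in the row above can be illustrated with a short sketch. It assumes every entry is already completed and is represented as a hypothetical `(id, completion_time)` tuple; `cleanup` is an illustrative name, not a Spark API.

```python
def cleanup(entries, retained):
    """Keep at most `retained` entries, evicting the earliest-completed first.

    `entries` is a list of (id, completion_time) tuples in arrival order.
    """
    if len(entries) <= retained:
        return list(entries)
    by_completion = sorted(entries, key=lambda entry: entry[1])
    return by_completion[len(entries) - retained:]


# Job "a" started first but finished last, so it must survive the cleanup.
jobs = [("a", 30), ("b", 10), ("c", 20)]
print(cleanup(jobs, retained=2))  # [('c', 20), ('a', 30)]
```

Evicting by start order instead would drop the long-running job "a" first, which is the inconsistency with 2.2 that the commit message describes.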

| 430025c | Yuming Wang | 04 February 2018, 17:15:48 UTC | [SPARK-22036][SQL][FOLLOWUP] Fix decimalArithmeticOperations.sql ## What changes were proposed in this pull request? Fix decimalArithmeticOperations.sql test ## How was this patch tested? N/A Author: Yuming Wang <wgyumg@gmail.com> Author: wangyum <wgyumg@gmail.com> Author: Yuming Wang <yumwang@ebay.com> Closes #20498 from wangyum/SPARK-22036. (cherry picked from commit 6fb3fd15365d43733aefdb396db205d7ccf57f75) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 04 February 2018, 17:16:06 UTC |

| 45f0f4f | hyukjinkwon | 03 February 2018, 18:40:21 UTC | [SPARK-21658][SQL][PYSPARK] Revert "[] Add default None for value in na.replace in PySpark" This reverts commit 0fcde87aadc9a92e138f11583119465ca4b5c518. See the discussion in [SPARK-21658](https://issues.apache.org/jira/browse/SPARK-21658), [SPARK-19454](https://issues.apache.org/jira/browse/SPARK-19454) and https://github.com/apache/spark/pull/16793 Author: hyukjinkwon <gurwls223@gmail.com> Closes #20496 from HyukjinKwon/revert-SPARK-21658. (cherry picked from commit 551dff2bccb65e9b3f77b986f167aec90d9a6016) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 03 February 2018, 18:40:29 UTC |

| be3de87 | Shashwat Anand | 03 February 2018, 18:31:04 UTC | [MINOR][DOC] Use raw triple double quotes around docstrings where there are occurrences of backslashes. From [PEP 257](https://www.python.org/dev/peps/pep-0257/): > For consistency, always use """triple double quotes""" around docstrings. Use r"""raw triple double quotes""" if you use any backslashes in your docstrings. For Unicode docstrings, use u"""Unicode triple-quoted strings""". For example, this is what help (kafka_wordcount) shows: ``` DESCRIPTION Counts words in UTF8 encoded, ' ' delimited text received from the network every second. Usage: kafka_wordcount.py <zk> <topic> To run this on your local machine, you need to setup Kafka and create a producer first, see http://kafka.apache.org/documentation.html#quickstart and then run the example `$ bin/spark-submit --jars external/kafka-assembly/target/scala-*/spark-streaming-kafka-assembly-*.jar examples/src/main/python/streaming/kafka_wordcount.py localhost:2181 test` ``` This is what it shows, after the fix: ``` DESCRIPTION Counts words in UTF8 encoded, '\n' delimited text received from the network every second. Usage: kafka_wordcount.py <zk> <topic> To run this on your local machine, you need to setup Kafka and create a producer first, see http://kafka.apache.org/documentation.html#quickstart and then run the example `$ bin/spark-submit --jars \ external/kafka-assembly/target/scala-*/spark-streaming-kafka-assembly-*.jar \ examples/src/main/python/streaming/kafka_wordcount.py \ localhost:2181 test` ``` The thing worth noticing is no linebreak here in the help. ## What changes were proposed in this pull request? Change triple double quotes to raw triple double quotes when there are occurrences of backslashes in docstrings. ## How was this patch tested? Manually as this is a doc fix. Author: Shashwat Anand <me@shashwat.me> Closes #20497 from ashashwat/docstring-fixes. (cherry picked from commit 4aaa7d40bf495317e740b6d6f9c2a55dfd03521b) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 03 February 2018, 18:31:17 UTC |
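
The difference between plain and raw docstrings that this commit relies on can be reproduced in a few lines of Python. The two functions below are hypothetical stand-ins rather than code from the Spark examples; they only show how a backslash sequence is handled in each kind of string literal.

```python
def plain():
    """Counts words in '\n' delimited text."""


def raw():
    r"""Counts words in '\n' delimited text."""


# In the plain docstring, Python turns \n into a real newline character,
# so help() would show a line break between the quotes.
print(repr(plain.__doc__))

# In the raw docstring, the backslash and the letter n are kept as written,
# so help() would show the two characters \n.
print(repr(raw.__doc__))
```

The same applies to a trailing backslash used as a shell line continuation inside a docstring: in a plain string it swallows the line break, while in a raw string it is preserved.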

| 4de2061 | Dongjoon Hyun | 03 February 2018, 08:04:00 UTC | [SPARK-23305][SQL][TEST] Test `spark.sql.files.ignoreMissingFiles` for all file-based data sources ## What changes were proposed in this pull request? Like Parquet, all file-based data sources handle `spark.sql.files.ignoreMissingFiles` correctly. We should have test coverage for feature parity and to prevent future accidental regressions across all data sources. ## How was this patch tested? Pass Jenkins with a newly added test case. Author: Dongjoon Hyun <dongjoon@apache.org> Closes #20479 from dongjoon-hyun/SPARK-23305. (cherry picked from commit 522e0b1866a0298669c83de5a47ba380dc0b7c84) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 03 February 2018, 08:04:08 UTC |

| 1bcb372 | caoxuewen | 03 February 2018, 08:02:03 UTC | [SPARK-23311][SQL][TEST] add FilterFunction test case for test CombineTypedFilters ## What changes were proposed in this pull request? The current test case for CombineTypedFilters lacks a test of FilterFunction, so let's add one. In addition, let's extract a common LocalRelation from the existing test cases in TypedFilterOptimizationSuite. ## How was this patch tested? Added new test cases. Author: caoxuewen <cao.xuewen@zte.com.cn> Closes #20482 from heary-cao/TypedFilterOptimizationSuite. (cherry picked from commit 63b49fa2e599080c2ba7d5189f9dde20a2e01fb4) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 03 February 2018, 08:02:11 UTC |

| b614c08 | Wenchen Fan | 03 February 2018, 04:49:08 UTC | [SPARK-23317][SQL] rename ContinuousReader.setOffset to setStartOffset ## What changes were proposed in this pull request? In the document of `ContinuousReader.setOffset`, we say this method is used to specify the start offset. We also have a `ContinuousReader.getStartOffset` to get the value back. I think it makes more sense to rename `ContinuousReader.setOffset` to `setStartOffset`. ## How was this patch tested? N/A Author: Wenchen Fan <wenchen@databricks.com> Closes #20486 from cloud-fan/rename. (cherry picked from commit fe73cb4b439169f16cc24cd851a11fd398ce7edf) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 03 February 2018, 04:49:17 UTC |

| dcd0af4 | Reynold Xin | 03 February 2018, 04:36:27 UTC | [SQL] Minor doc update: Add an example in DataFrameReader.schema ## What changes were proposed in this pull request? This patch adds a small example to the schema string definition of schema function. It isn't obvious how to use it, so an example would be useful. ## How was this patch tested? N/A - doc only. Author: Reynold Xin <rxin@databricks.com> Closes #20491 from rxin/schema-doc. (cherry picked from commit 3ff83ad43a704cc3354ef9783e711c065e2a1a22) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 03 February 2018, 04:36:37 UTC |

| 56eb9a3 | Tathagata Das | 03 February 2018, 01:37:51 UTC | [SPARK-23064][SS][DOCS] Stream-stream joins Documentation - follow up ## What changes were proposed in this pull request? Further clarification of caveats in using stream-stream outer joins. ## How was this patch tested? N/A Author: Tathagata Das <tathagata.das1565@gmail.com> Closes #20494 from tdas/SPARK-23064-2. (cherry picked from commit eaf35de2471fac4337dd2920026836d52b1ec847) Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com> | 03 February 2018, 01:38:07 UTC |

| e5e9f9a | Wenchen Fan | 02 February 2018, 14:43:28 UTC | [SPARK-23312][SQL] add a config to turn off vectorized cache reader ## What changes were proposed in this pull request? https://issues.apache.org/jira/browse/SPARK-23309 reported a performance regression for cached tables in Spark 2.3. While the investigation is still ongoing, this PR adds a conf to turn off the vectorized cache reader, to unblock the 2.3 release. ## How was this patch tested? a new test Author: Wenchen Fan <wenchen@databricks.com> Closes #20483 from cloud-fan/cache. (cherry picked from commit b9503fcbb3f4a3ce263164d1f11a8e99b9ca5710) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 02 February 2018, 14:43:51 UTC |

| 2b07452 | Wenchen Fan | 02 February 2018, 04:44:46 UTC | [SPARK-23301][SQL] data source column pruning should work for arbitrary expressions This PR fixes a mistake in the `PushDownOperatorsToDataSource` rule: the column pruning logic is incorrect for `Project`. Tested with a new test case for column pruning with arbitrary expressions, and by improving the existing tests to make sure that `PushDownOperatorsToDataSource` really works. Author: Wenchen Fan <wenchen@databricks.com> Closes #20476 from cloud-fan/push-down. (cherry picked from commit 19c7c7ebdef6c1c7a02ebac9af6a24f521b52c37) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 02 February 2018, 04:46:09 UTC |

| 7baae3a | Liang-Chi Hsieh | 02 February 2018, 02:18:32 UTC | [SPARK-23284][SQL] Document the behavior of several ColumnVector's get APIs when accessing null slot ## What changes were proposed in this pull request? For some ColumnVector get APIs, such as getDecimal, getBinary, getStruct, getArray, getInterval, and getUTF8String, we should clearly document their behavior when accessing a null slot: they should return null. We can then remove null checks from the places that use these APIs. For the APIs of primitive values, such as getInt and getInts, this also documents their behavior when accessing null slots: their return values are undefined and can be anything. ## How was this patch tested? Added tests into `ColumnarBatchSuite`. Author: Liang-Chi Hsieh <viirya@gmail.com> Closes #20455 from viirya/SPARK-23272-followup. (cherry picked from commit 90848d507457d30abb36e3ba07618dfc87c34cd6) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 02 February 2018, 02:18:49 UTC |

| ab23785 | Gera Shegalov | 01 February 2018, 23:26:59 UTC | [SPARK-23296][YARN] Include stacktrace in YARN-app diagnostic ## What changes were proposed in this pull request? Include stacktrace in the diagnostics message upon abnormal unregister from RM ## How was this patch tested? Tested with a failing job, and confirmed a stacktrace in the client output and YARN webUI. Author: Gera Shegalov <gera@apache.org> Closes #20470 from gerashegalov/gera/stacktrace-diagnostics. (cherry picked from commit 032c11b83f0d276bf8085992229b8c598f02798a) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 01 February 2018, 23:27:09 UTC |

| 07a8f4d | Wenchen Fan | 01 February 2018, 18:48:34 UTC | [SPARK-23293][SQL] fix data source v2 self join `DataSourceV2Relation` should extend `MultiInstanceRelation` to take care of self-joins. Tested with a new test. Author: Wenchen Fan <wenchen@databricks.com> Closes #20466 from cloud-fan/dsv2-selfjoin. (cherry picked from commit 73da3b6968630d9e2cafc742ccb6d4eb54957df4) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 01 February 2018, 18:50:44 UTC |

| 2db7e49 | Yuming Wang | 01 February 2018, 18:36:31 UTC | [SPARK-13983][SQL] Fix HiveThriftServer2 can not get "--hiveconf" and "--hivevar" variables since 2.0 ## What changes were proposed in this pull request? `--hiveconf` and `--hivevar` variables no longer work since Spark 2.0. The `spark-sql` client has been fixed by [SPARK-15730](https://issues.apache.org/jira/browse/SPARK-15730) and [SPARK-18086](https://issues.apache.org/jira/browse/SPARK-18086), but `beeline`/[`Spark SQL HiveThriftServer2`](https://github.com/apache/spark/blob/v2.1.1/sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/HiveThriftServer2.scala) is still broken. This pull request fixes it for both the `JDBC client` and `beeline`. ## How was this patch tested? unit tests for `JDBC client` manual tests for `beeline`: ``` git checkout origin/pr/17886 dev/make-distribution.sh --mvn mvn --tgz -Phive -Phive-thriftserver -Phadoop-2.6 -DskipTests tar -zxf spark-2.3.0-SNAPSHOT-bin-2.6.5.tgz && cd spark-2.3.0-SNAPSHOT-bin-2.6.5 sbin/start-thriftserver.sh ``` ``` cat <<EOF > test.sql select '\${a}', '\${b}'; EOF beeline -u jdbc:hive2://localhost:10000 --hiveconf a=avalue --hivevar b=bvalue -f test.sql ``` Author: Yuming Wang <wgyumg@gmail.com> Closes #17886 from wangyum/SPARK-13983-dev. (cherry picked from commit f051f834036e63d5e480d86440ce39924f979e82) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 01 February 2018, 18:36:47 UTC |

| 2549bea | Shixiong Zhu | 01 February 2018, 13:00:47 UTC | [SPARK-23289][CORE] OneForOneBlockFetcher.DownloadCallback.onData should write the buffer fully ## What changes were proposed in this pull request? `channel.write(buf)` may not write the whole buffer, since the underlying channel is a FileChannel. We should retry until the whole buffer is written. ## How was this patch tested? Jenkins Author: Shixiong Zhu <zsxwing@gmail.com> Closes #20461 from zsxwing/SPARK-23289. (cherry picked from commit ec63e2d0743a4f75e1cce21d0fe2b54407a86a4a) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 01 February 2018, 13:02:54 UTC |
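
The "retry until the whole buffer is written" pattern from SPARK-23289 can be sketched in Python as an analogy. This is not the Java code from `OneForOneBlockFetcher`: it uses `os.write`, which, like a channel write, returns the number of bytes actually written and may accept fewer than were offered. The helper name `write_fully` is an assumption made for this illustration.

```python
import os
import tempfile


def write_fully(fd, data):
    """Write all of `data` to file descriptor `fd`, retrying after short writes."""
    view = memoryview(data)
    total = 0
    while total < len(view):
        # os.write reports how many bytes it accepted; that count may be
        # smaller than the number of bytes remaining, so keep going.
        written = os.write(fd, view[total:])
        total += written
    return total


fd, path = tempfile.mkstemp()
try:
    payload = b"x" * 1_000_000
    count = write_fully(fd, payload)
finally:
    os.close(fd)

# Read the file back to confirm nothing was dropped.
with open(path, "rb") as f:
    stored = f.read()
os.remove(path)
print(count, stored == payload)
```

A single unchecked write call would be correct only when the first call happens to accept the entire buffer; looping on the returned count removes that assumption.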

| 3aa780e | Takuya UESHIN | 01 February 2018, 12:28:53 UTC | [SPARK-23280][SQL][FOLLOWUP] Enable `MutableColumnarRow.getMap()`. ## What changes were proposed in this pull request? This is a followup pr of #20450. We should've enabled `MutableColumnarRow.getMap()` as well. ## How was this patch tested? Existing tests. Author: Takuya UESHIN <ueshin@databricks.com> Closes #20471 from ueshin/issues/SPARK-23280/fup2. (cherry picked from commit 89e8d556b93d1bf1b28fe153fd284f154045b0ee) Signed-off-by: Takuya UESHIN <ueshin@databricks.com> | 01 February 2018, 12:29:19 UTC |

| 6b6bc9c | Takuya UESHIN | 01 February 2018, 12:25:02 UTC | [SPARK-23280][SQL][FOLLOWUP] Fix Java style check issues. ## What changes were proposed in this pull request? This is a follow-up of #20450 which broke lint-java checks. This pr fixes the lint-java issues. ``` [ERROR] src/main/java/org/apache/spark/sql/vectorized/ColumnVector.java:[20,8] (imports) UnusedImports: Unused import - org.apache.spark.sql.catalyst.util.MapData. [ERROR] src/main/java/org/apache/spark/sql/vectorized/ColumnarArray.java:[21,8] (imports) UnusedImports: Unused import - org.apache.spark.sql.catalyst.util.MapData. [ERROR] src/main/java/org/apache/spark/sql/vectorized/ColumnarRow.java:[22,8] (imports) UnusedImports: Unused import - org.apache.spark.sql.catalyst.util.MapData. ``` ## How was this patch tested? Checked manually in my local environment. Author: Takuya UESHIN <ueshin@databricks.com> Closes #20468 from ueshin/issues/SPARK-23280/fup1. (cherry picked from commit 8bb70b068ea782e799e45238fcb093a6acb0fc9f) Signed-off-by: Takuya UESHIN <ueshin@databricks.com> | 01 February 2018, 12:25:19 UTC |

| 205bce9 | Yanbo Liang | 01 February 2018, 09:25:01 UTC | [SPARK-23107][ML] ML 2.3 QA: New Scala APIs, docs. ## What changes were proposed in this pull request? Audit new APIs and docs in 2.3.0. ## How was this patch tested? No test. Author: Yanbo Liang <ybliang8@gmail.com> Closes #20459 from yanboliang/SPARK-23107. (cherry picked from commit e15da5b14c8d845028365a609c0c66731d024ee7) Signed-off-by: Nick Pentreath <nickp@za.ibm.com> | 01 February 2018, 09:25:14 UTC |