https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2

| Revision | Author | Date | Message | Commit Date |

|---|---|---|---|---|

| cc93bc9 | Marcelo Vanzin | 15 May 2018, 00:57:16 UTC | Preparing Spark release v2.3.1-rc1 | 15 May 2018, 00:57:16 UTC |

| 6dfb515 | Henry Robinson | 14 May 2018, 21:35:08 UTC | [SPARK-23852][SQL] Add withSQLConf(...) to test case ## What changes were proposed in this pull request? Add a `withSQLConf(...)` wrapper to force Parquet filter pushdown for a test that relies on it. ## How was this patch tested? Test passes Author: Henry Robinson <henry@apache.org> Closes #21323 from henryr/spark-23582. (cherry picked from commit 061e0084ce19c1384ba271a97a0aa1f87abe879d) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 14 May 2018, 21:35:17 UTC |

| a8ee570 | Henry Robinson | 14 May 2018, 21:05:32 UTC | [SPARK-23852][SQL] Upgrade to Parquet 1.8.3 ## What changes were proposed in this pull request? Upgrade Parquet dependency to 1.8.3 to avoid PARQUET-1217 ## How was this patch tested? Ran the included new test case. Author: Henry Robinson <henry@apache.org> Closes #21302 from henryr/branch-2.3. | 14 May 2018, 21:05:32 UTC |

| 2f60df0 | Shixiong Zhu | 14 May 2018, 18:37:57 UTC | [SPARK-24246][SQL] Improve AnalysisException by setting the cause when it's available ## What changes were proposed in this pull request? If there is an exception, it's better to set it as the cause of AnalysisException since the exception may contain useful debug information. ## How was this patch tested? Jenkins Author: Shixiong Zhu <zsxwing@gmail.com> Closes #21297 from zsxwing/SPARK-24246. (cherry picked from commit c26f673252c2cbbccf8c395ba6d4ab80c098d60e) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 14 May 2018, 18:38:09 UTC |

| 88003f0 | Felix Cheung | 14 May 2018, 17:49:12 UTC | [SPARK-24263][R] SparkR java check breaks with openjdk ## What changes were proposed in this pull request? Change text to grep for. ## How was this patch tested? manual test Author: Felix Cheung <felixcheung_m@hotmail.com> Closes #21314 from felixcheung/openjdkver. (cherry picked from commit 1430fa80e37762e31cc5adc74cd609c215d84b6e) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 14 May 2018, 17:49:24 UTC |

| 867d948 | Kelley Robinson | 13 May 2018, 20:19:03 UTC | [SPARK-24262][PYTHON] Fix typo in UDF type match error message ## What changes were proposed in this pull request? Updates `functon` to `function`. This was called out in holdenk's PyCon 2018 conference talk. Didn't see any existing PR's for this. holdenk happy to fix the Pandas.Series bug too but will need a bit more guidance. Author: Kelley Robinson <krobinson@twilio.com> Closes #21304 from robinske/master. (cherry picked from commit 0d210ec8b610e4b0570ce730f3987dc86787c663) Signed-off-by: Holden Karau <holden@pigscanfly.ca> | 13 May 2018, 20:20:30 UTC |

| 7de4bef | Shivaram Venkataraman | 12 May 2018, 00:00:51 UTC | [SPARKR] Require Java 8 for SparkR This change updates the SystemRequirements and also includes a runtime check if the JVM is being launched by R. The runtime check is done by querying `java -version` ## How was this patch tested? Tested on a Mac and Windows machine Author: Shivaram Venkataraman <shivaram@cs.berkeley.edu> Closes #21278 from shivaram/sparkr-skip-solaris. (cherry picked from commit f27a035daf705766d3445e5c6a99867c11c552b0) Signed-off-by: Shivaram Venkataraman <shivaram@cs.berkeley.edu> | 12 May 2018, 00:01:02 UTC |

| 1d598b7 | cody koeninger | 11 May 2018, 18:40:36 UTC | [SPARK-24067][BACKPORT-2.3][STREAMING][KAFKA] Allow non-consecutive offsets ## What changes were proposed in this pull request? Backport of the bugfix in SPARK-17147 Add a configuration spark.streaming.kafka.allowNonConsecutiveOffsets to allow streaming jobs to proceed on compacted topics (or other situations involving gaps between offsets in the log). ## How was this patch tested? Added new unit test justinrmiller has been testing this branch in production for a few weeks Author: cody koeninger <cody@koeninger.org> Closes #21300 from koeninger/branch-2.3_kafkafix. | 11 May 2018, 18:40:36 UTC |

| 414e4e3 | Kazuaki Ishizaki | 10 May 2018, 21:41:55 UTC | [SPARK-10878][CORE] Fix race condition when multiple clients resolves artifacts at the same time ## What changes were proposed in this pull request? When multiple clients attempt to resolve artifacts via the `--packages` parameter, they could run into race condition when they each attempt to modify the dummy `org.apache.spark-spark-submit-parent-default.xml` file created in the default ivy cache dir. This PR changes the behavior to encode UUID in the dummy module descriptor so each client will operate on a different resolution file in the ivy cache dir. In addition, this patch changes the behavior of when and which resolution files are cleaned to prevent accumulation of resolution files in the default ivy cache dir. Since this PR is a successor of #18801, close #18801. Many codes were ported from #18801. **Many efforts were put here. I think this PR should credit to Victsm .** ## How was this patch tested? added UT into `SparkSubmitUtilsSuite` Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com> Closes #21251 from kiszk/SPARK-10878. (cherry picked from commit d3c426a5b02abdec49ff45df12a8f11f9e473a88) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 10 May 2018, 21:42:06 UTC |

| 4c49b12 | “attilapiros” | 10 May 2018, 21:26:38 UTC | [SPARK-19181][CORE] Fixing flaky "SparkListenerSuite.local metrics" ## What changes were proposed in this pull request? Sometimes "SparkListenerSuite.local metrics" test fails because the average of executorDeserializeTime is too short. As squito suggested to avoid these situations in one of the task a reference introduced to an object implementing a custom Externalizable.readExternal which sleeps 1ms before returning. ## How was this patch tested? With unit tests (and checking the effect of this change to the average with a much larger sleep time). Author: “attilapiros” <piros.attila.zsolt@gmail.com> Author: Attila Zsolt Piros <2017933+attilapiros@users.noreply.github.com> Closes #21280 from attilapiros/SPARK-19181. (cherry picked from commit 3e2600538ee477ffe3f23fba57719e035219550b) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 10 May 2018, 21:26:47 UTC |

| 16cd9ac | Marcelo Vanzin | 17 April 2018, 20:29:43 UTC | [SPARKR] Match pyspark features in SparkR communication protocol. (cherry picked from commit 628c7b517969c4a7ccb26ea67ab3dd61266073ca) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 10 May 2018, 17:47:37 UTC |

| 323dc3a | Marcelo Vanzin | 13 April 2018, 21:28:24 UTC | [PYSPARK] Update py4j to version 0.10.7. (cherry picked from commit cc613b552e753d03cb62661591de59e1c8d82c74) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 10 May 2018, 17:47:37 UTC |

| eab10f9 | Maxim Gekk | 10 May 2018, 16:28:43 UTC | [SPARK-24068][BACKPORT-2.3] Propagating DataFrameReader's options to Text datasource on schema inferring ## What changes were proposed in this pull request? While reading CSV or JSON files, DataFrameReader's options are converted to Hadoop's parameters, for example there: https://github.com/apache/spark/blob/branch-2.3/sql/core/src/main/scala/org/apache/spark/sql/execution/DataSourceScanExec.scala#L302 but the options are not propagated to Text datasource on schema inferring, for instance: https://github.com/apache/spark/blob/branch-2.3/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/csv/CSVDataSource.scala#L184-L188 The PR proposes propagation of user's options to Text datasource on scheme inferring in similar way as user's options are converted to Hadoop parameters if schema is specified. ## How was this patch tested? The changes were tested manually by using https://github.com/twitter/hadoop-lzo: ``` hadoop-lzo> mvn clean package hadoop-lzo> ln -s ./target/hadoop-lzo-0.4.21-SNAPSHOT.jar ./hadoop-lzo.jar ``` Create 2 test files in JSON and CSV format and compress them: ```shell $ cat test.csv col1|col2 a|1 $ lzop test.csv $ cat test.json {"col1":"a","col2":1} $ lzop test.json ``` Run `spark-shell` with hadoop-lzo: ``` bin/spark-shell --jars ~/hadoop-lzo/hadoop-lzo.jar ``` reading compressed CSV and JSON without schema: ```scala spark.read.option("io.compression.codecs", "com.hadoop.compression.lzo.LzopCodec").option("inferSchema",true).option("header",true).option("sep","|").csv("test.csv.lzo").show() +----+----+ |col1|col2| +----+----+ | a| 1| +----+----+ ``` ```scala spark.read.option("io.compression.codecs", "com.hadoop.compression.lzo.LzopCodec").option("multiLine", true).json("test.json.lzo").printSchema root |-- col1: string (nullable = true) |-- col2: long (nullable = true) ``` Author: Maxim Gekk <maxim.gekk@databricks.com> Author: Maxim Gekk <max.gekk@gmail.com> Closes #21292 from MaxGekk/text-options-backport-v2.3. | 10 May 2018, 16:28:43 UTC |

| 8889d78 | Shixiong Zhu | 09 May 2018, 18:32:17 UTC | [SPARK-24214][SS] Fix toJSON for StreamingRelationV2/StreamingExecutionRelation/ContinuousExecutionRelation ## What changes were proposed in this pull request? We should overwrite "otherCopyArgs" to provide the SparkSession parameter otherwise TreeNode.toJSON cannot get the full constructor parameter list. ## How was this patch tested? The new unit test. Author: Shixiong Zhu <zsxwing@gmail.com> Closes #21275 from zsxwing/SPARK-24214. (cherry picked from commit fd1179c17273283d32f275d5cd5f97aaa2aca1f7) Signed-off-by: Shixiong Zhu <zsxwing@gmail.com> | 09 May 2018, 18:32:27 UTC |

| aba52f4 | Marcelo Vanzin | 08 May 2018, 06:32:04 UTC | [SPARK-24188][CORE] Restore "/version" API endpoint. It was missing the jax-rs annotation. Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #21245 from vanzin/SPARK-24188. Change-Id: Ib338e34b363d7c729cc92202df020dc51033b719 (cherry picked from commit 05eb19b6e09065265358eec2db2ff3b42806dfc9) Signed-off-by: jerryshao <sshao@hortonworks.com> | 08 May 2018, 06:32:19 UTC |

| 4dc6719 | Henry Robinson | 08 May 2018, 04:21:33 UTC | [SPARK-24128][SQL] Mention configuration option in implicit CROSS JOIN error ## What changes were proposed in this pull request? Mention `spark.sql.crossJoin.enabled` in error message when an implicit `CROSS JOIN` is detected. ## How was this patch tested? `CartesianProductSuite` and `JoinSuite`. Author: Henry Robinson <henry@apache.org> Closes #21201 from henryr/spark-24128. (cherry picked from commit cd12c5c3ecf28f7b04f566c2057f9b65eb456b7d) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 08 May 2018, 04:21:54 UTC |

| 3a22fea | hyukjinkwon | 07 May 2018, 21:48:28 UTC | [SPARK-23291][SQL][R][BRANCH-2.3] R's substr should not reduce starting position by 1 when calling Scala API ## What changes were proposed in this pull request? This PR backports https://github.com/apache/spark/commit/24b5c69ee3feded439e5bb6390e4b63f503eeafe and https://github.com/apache/spark/pull/21249 There's no conflict but I opened this just to run the test and for sure. See the discussion in https://issues.apache.org/jira/browse/SPARK-23291 ## How was this patch tested? Jenkins tests. Author: hyukjinkwon <gurwls223@apache.org> Author: Liang-Chi Hsieh <viirya@gmail.com> Closes #21250 from HyukjinKwon/SPARK-23291-backport. | 07 May 2018, 21:48:28 UTC |

| f87785a | Gabor Somogyi | 07 May 2018, 06:45:14 UTC | [SPARK-23775][TEST] Make DataFrameRangeSuite not flaky ## What changes were proposed in this pull request? DataFrameRangeSuite.test("Cancelling stage in a query with Range.") stays sometimes in an infinite loop and times out the build. There were multiple issues with the test: 1. The first valid stageId is zero when the test started alone and not in a suite and the following code waits until timeout: ``` eventually(timeout(10.seconds), interval(1.millis)) { assert(DataFrameRangeSuite.stageToKill > 0) } ``` 2. The `DataFrameRangeSuite.stageToKill` was overwritten by the task's thread after the reset which ended up in canceling the same stage 2 times. This caused the infinite wait. This PR solves this mentioned flakyness by removing the shared `DataFrameRangeSuite.stageToKill` and using `onTaskStart` where stage ID is provided. In order to make sure cancelStage called for all stages `waitUntilEmpty` is called on `ListenerBus`. In [PR20888](https://github.com/apache/spark/pull/20888) this tried to get solved by: * Stopping the executor thread with `wait` * Wait for all `cancelStage` called * Kill the executor thread by setting `SparkContext.SPARK_JOB_INTERRUPT_ON_CANCEL` but the thread killing left the shared `SparkContext` sometimes in a state where further jobs can't be submitted. As a result DataFrameRangeSuite.test("Cancelling stage in a query with Range.") test passed properly but the next test inside the suite was hanging. ## How was this patch tested? Existing unit test executed 10k times. Author: Gabor Somogyi <gabor.g.somogyi@gmail.com> Closes #21214 from gaborgsomogyi/SPARK-23775_1. (cherry picked from commit c5981976f1d514a3ad8a684b9a21cebe38b786fa) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 07 May 2018, 06:46:56 UTC |

| 3f78f60 | Wenchen Fan | 04 May 2018, 11:20:15 UTC | [SPARK-23697][CORE] LegacyAccumulatorWrapper should define isZero correctly ## What changes were proposed in this pull request? It's possible that Accumulators of Spark 1.x may no longer work with Spark 2.x. This is because `LegacyAccumulatorWrapper.isZero` may return wrong answer if `AccumulableParam` doesn't define equals/hashCode. This PR fixes this by using reference equality check in `LegacyAccumulatorWrapper.isZero`. ## How was this patch tested? a new test Author: Wenchen Fan <wenchen@databricks.com> Closes #21229 from cloud-fan/accumulator. (cherry picked from commit 4d5de4d303a773b1c18c350072344bd7efca9fc4) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 04 May 2018, 11:20:39 UTC |

| d35eb2f | Wenchen Fan | 04 May 2018, 00:27:13 UTC | [SPARK-24168][SQL] WindowExec should not access SQLConf at executor side ## What changes were proposed in this pull request? This PR is extracted from #21190 , to make it easier to backport. `WindowExec#createBoundOrdering` is called on executor side, so we can't use `conf.sessionLocalTimezone` there. ## How was this patch tested? tested in #21190 Author: Wenchen Fan <wenchen@databricks.com> Closes #21225 from cloud-fan/minor3. (cherry picked from commit e646ae67f2e793204bc819ab2b90815214c2bbf3) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 04 May 2018, 00:27:23 UTC |

| 8509284 | Imran Rashid | 03 May 2018, 15:59:18 UTC | [SPARK-23433][CORE] Late zombie task completions update all tasksets Fetch failure lead to multiple tasksets which are active for a given stage. While there is only one "active" version of the taskset, the earlier attempts can still have running tasks, which can complete successfully. So a task completion needs to update every taskset so that it knows the partition is completed. That way the final active taskset does not try to submit another task for the same partition, and so that it knows when it is completed and when it should be marked as a "zombie". Added a regression test. Author: Imran Rashid <irashid@cloudera.com> Closes #21131 from squito/SPARK-23433. (cherry picked from commit 94641fe6cc68e5977dd8663b8f232a287a783acb) Signed-off-by: Imran Rashid <irashid@cloudera.com> | 03 May 2018, 15:59:30 UTC |

| 61e7bc0 | Wenchen Fan | 03 May 2018, 15:36:09 UTC | [SPARK-24169][SQL] JsonToStructs should not access SQLConf at executor side ## What changes were proposed in this pull request? This PR is extracted from #21190 , to make it easier to backport. `JsonToStructs` can be serialized to executors and evaluate, we should not call `SQLConf.get.getConf(SQLConf.FROM_JSON_FORCE_NULLABLE_SCHEMA)` in the body. ## How was this patch tested? tested in #21190 Author: Wenchen Fan <wenchen@databricks.com> Closes #21226 from cloud-fan/minor4. (cherry picked from commit 96a50016bb0fb1cc57823a6706bff2467d671efd) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 03 May 2018, 15:36:59 UTC |

| bfe50b6 | Ala Luszczak | 03 May 2018, 14:52:40 UTC | [SPARK-24133][SQL] Backport [] Check for integer overflows when resizing WritableColumnVectors `ColumnVector`s store string data in one big byte array. Since the array size is capped at just under Integer.MAX_VALUE, a single `ColumnVector` cannot store more than 2GB of string data. But since the Parquet files commonly contain large blobs stored as strings, and `ColumnVector`s by default carry 4096 values, it's entirely possible to go past that limit. In such cases a negative capacity is requested from `WritableColumnVector.reserve()`. The call succeeds (requested capacity is smaller than already allocated capacity), and consequently `java.lang.ArrayIndexOutOfBoundsException` is thrown when the reader actually attempts to put the data into the array. This change introduces a simple check for integer overflow to `WritableColumnVector.reserve()` which should help catch the error earlier and provide more informative exception. Additionally, the error message in `WritableColumnVector.throwUnsupportedException()` was corrected. New units tests were added. Author: Ala Luszczak <aladatabricks.com> Closes #21206 from ala/overflow-reserve. (cherry picked from commit 8bd27025b7cf0b44726b6f4020d294ef14dbbb7e) Signed-off-by: Ala Luszczak <aladatabricks.com> Author: Ala Luszczak <ala@databricks.com> Closes #21227 from ala/cherry-pick-overflow-reserve. | 03 May 2018, 14:52:40 UTC |

| 10e2f1f | Wenchen Fan | 03 May 2018, 11:56:30 UTC | [SPARK-24166][SQL] InMemoryTableScanExec should not access SQLConf at executor side ## What changes were proposed in this pull request? This PR is extracted from https://github.com/apache/spark/pull/21190 , to make it easier to backport. `InMemoryTableScanExec#createAndDecompressColumn` is executed inside `rdd.map`, we can't access `conf.offHeapColumnVectorEnabled` there. ## How was this patch tested? it's tested in #21190 Author: Wenchen Fan <wenchen@databricks.com> Closes #21223 from cloud-fan/minor1. (cherry picked from commit 991b526992bcf1dc1268578b650916569b12f583) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 03 May 2018, 11:57:08 UTC |

| 0fe53b6 | Dongjoon Hyun | 03 May 2018, 07:15:05 UTC | [SPARK-23489][SQL][TEST] HiveExternalCatalogVersionsSuite should verify the downloaded file ## What changes were proposed in this pull request? Although [SPARK-22654](https://issues.apache.org/jira/browse/SPARK-22654) made `HiveExternalCatalogVersionsSuite` download from Apache mirrors three times, it has been flaky because it didn't verify the downloaded file. Some Apache mirrors terminate the downloading abnormally, the *corrupted* file shows the following errors. ``` gzip: stdin: not in gzip format tar: Child returned status 1 tar: Error is not recoverable: exiting now 22:46:32.700 WARN org.apache.spark.sql.hive.HiveExternalCatalogVersionsSuite: ===== POSSIBLE THREAD LEAK IN SUITE o.a.s.sql.hive.HiveExternalCatalogVersionsSuite, thread names: Keep-Alive-Timer ===== *** RUN ABORTED *** java.io.IOException: Cannot run program "./bin/spark-submit" (in directory "/tmp/test-spark/spark-2.2.0"): error=2, No such file or directory ``` This has been reported weirdly in two ways. For example, the above case is reported as Case 2 `no failures`. - Case 1. [Test Result (1 failure / +1)](https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-sbt-hadoop-2.7/4389/) - Case 2. [Test Result (no failures)](https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-2.6/4811/) This PR aims to make `HiveExternalCatalogVersionsSuite` more robust by verifying the downloaded `tgz` file by extracting and checking the existence of `bin/spark-submit`. If it turns out that the file is empty or corrupted, `HiveExternalCatalogVersionsSuite` will do retry logic like the download failure. ## How was this patch tested? Pass the Jenkins. Author: Dongjoon Hyun <dongjoon@apache.org> Closes #21210 from dongjoon-hyun/SPARK-23489. (cherry picked from commit c9bfd1c6f8d16890ea1e5bc2bcb654a3afb32591) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 03 May 2018, 07:15:29 UTC |

| b3adb53 | Eric Liang | 02 May 2018, 19:02:02 UTC | [SPARK-23971][BACKPORT-2.3] Should not leak Spark sessions across test suites This PR is to backport the PR https://github.com/apache/spark/pull/21058 to Apache 2.3. This should be the cause why we saw the test regressions in Apache 2.3 branches: https://amplab.cs.berkeley.edu/jenkins/job/spark-branch-2.3-test-sbt-hadoop-2.6/317/testReport/org.apache.spark.sql.execution.datasources.parquet/ParquetQuerySuite/SPARK_15678__not_use_cache_on_overwrite/history/ https://amplab.cs.berkeley.edu/jenkins/job/spark-branch-2.3-test-sbt-hadoop-2.7/318/testReport/junit/org.apache.spark.sql/DataFrameSuite/inputFiles/history/ --- ## What changes were proposed in this pull request? Many suites currently leak Spark sessions (sometimes with stopped SparkContexts) via the thread-local active Spark session and default Spark session. We should attempt to clean these up and detect when this happens to improve the reproducibility of tests. ## How was this patch tested? Existing tests Author: Eric Liang <ekl@databricks.com> Closes #21197 from gatorsmile/backportSPARK-23971. | 02 May 2018, 19:02:02 UTC |

| 88abf7b | WangJinhai02 | 02 May 2018, 14:40:14 UTC | [SPARK-24107][CORE] ChunkedByteBuffer.writeFully method has not reset the limit value JIRA Issue: https://issues.apache.org/jira/browse/SPARK-24107?jql=text%20~%20%22ChunkedByteBuffer%22 ChunkedByteBuffer.writeFully method has not reset the limit value. When chunks larger than bufferWriteChunkSize, such as 80 * 1024 * 1024 larger than config.BUFFER_WRITE_CHUNK_SIZE(64 * 1024 * 1024),only while once, will lost 16 * 1024 * 1024 byte Author: WangJinhai02 <jinhai.wang02@ele.me> Closes #21175 from manbuyun/bugfix-ChunkedByteBuffer. (cherry picked from commit 152eaf6ae698cd0df7f5a5be3f17ee46e0be929d) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 02 May 2018, 14:42:58 UTC |

| 682f05d | Bounkong Khamphousone | 01 May 2018, 15:28:21 UTC | [SPARK-23941][MESOS] Mesos task failed on specific spark app name ## What changes were proposed in this pull request? Shell escaped the name passed to spark-submit and change how conf attributes are shell escaped. ## How was this patch tested? This test has been tested manually with Hive-on-spark with mesos or with the use case described in the issue with the sparkPi application with a custom name which contains illegal shell characters. With this PR, hive-on-spark on mesos works like a charm with hive 3.0.0-SNAPSHOT. I state that this contribution is my original work and that I license the work to the project under the project’s open source license Author: Bounkong Khamphousone <bounkong.khamphousone@ebiznext.com> Closes #21014 from tiboun/fix/SPARK-23941. (cherry picked from commit 6782359a04356e4cde32940861bf2410ef37f445) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 01 May 2018, 15:28:34 UTC |

| 52a420f | Dongjoon Hyun | 01 May 2018, 01:06:23 UTC | [SPARK-23853][PYSPARK][TEST] Run Hive-related PySpark tests only for `-Phive` ## What changes were proposed in this pull request? When `PyArrow` or `Pandas` are not available, the corresponding PySpark tests are skipped automatically. Currently, PySpark tests fail when we are not using `-Phive`. This PR aims to skip Hive related PySpark tests when `-Phive` is not given. **BEFORE** ```bash $ build/mvn -DskipTests clean package $ python/run-tests.py --python-executables python2.7 --modules pyspark-sql File "/Users/dongjoon/spark/python/pyspark/sql/readwriter.py", line 295, in pyspark.sql.readwriter.DataFrameReader.table ... IllegalArgumentException: u"Error while instantiating 'org.apache.spark.sql.hive.HiveExternalCatalog':" ********************************************************************** 1 of 3 in pyspark.sql.readwriter.DataFrameReader.table ***Test Failed*** 1 failures. ``` **AFTER** ```bash $ build/mvn -DskipTests clean package $ python/run-tests.py --python-executables python2.7 --modules pyspark-sql ... Tests passed in 138 seconds Skipped tests in pyspark.sql.tests with python2.7: ... test_hivecontext (pyspark.sql.tests.HiveSparkSubmitTests) ... skipped 'Hive is not available.' ``` ## How was this patch tested? This is a test-only change. First, this should pass the Jenkins. Then, manually do the following. ```bash build/mvn -DskipTests clean package python/run-tests.py --python-executables python2.7 --modules pyspark-sql ``` Author: Dongjoon Hyun <dongjoon@apache.org> Closes #21141 from dongjoon-hyun/SPARK-23853. (cherry picked from commit b857fb549f3bf4e6f289ba11f3903db0a3696dec) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 01 May 2018, 01:06:51 UTC |

| 235ec9e | hyukjinkwon | 30 April 2018, 01:40:46 UTC | [MINOR][DOCS] Fix a broken link for Arrow's supported types in the programming guide ## What changes were proposed in this pull request? This PR fixes a broken link for Arrow's supported types in the programming guide. ## How was this patch tested? Manually tested via `SKIP_API=1 jekyll watch`. "Supported SQL Types" here in https://spark.apache.org/docs/latest/sql-programming-guide.html#enabling-for-conversion-tofrom-pandas is broken. It should be https://spark.apache.org/docs/latest/sql-programming-guide.html#supported-sql-types Author: hyukjinkwon <gurwls223@apache.org> Closes #21191 from HyukjinKwon/minor-arrow-link. (cherry picked from commit 56f501e1c0cec3be7d13008bd2c0182ec83ed2a2) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 30 April 2018, 01:41:41 UTC |

| df45ddb | Juliusz Sompolski | 27 April 2018, 21:14:28 UTC | [SPARK-24104] SQLAppStatusListener overwrites metrics onDriverAccumUpdates instead of updating them ## What changes were proposed in this pull request? Event `SparkListenerDriverAccumUpdates` may happen multiple times in a query - e.g. every `FileSourceScanExec` and `BroadcastExchangeExec` call `postDriverMetricUpdates`. In Spark 2.2 `SQLListener` updated the map with new values. `SQLAppStatusListener` overwrites it. Unless `update` preserved it in the KV store (dependant on `exec.lastWriteTime`), only the metrics from the last operator that does `postDriverMetricUpdates` are preserved. ## How was this patch tested? Unit test added. Author: Juliusz Sompolski <julek@databricks.com> Closes #21171 from juliuszsompolski/SPARK-24104. (cherry picked from commit 8614edd445264007144caa6743a8c2ca2b5082e0) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 27 April 2018, 21:14:38 UTC |

| 4a10df0 | Dilip Biswal | 27 April 2018, 18:43:29 UTC | [SPARK-24085][SQL] Query returns UnsupportedOperationException when scalar subquery is present in partitioning expression ## What changes were proposed in this pull request? In this case, the partition pruning happens before the planning phase of scalar subquery expressions. For scalar subquery expressions, the planning occurs late in the cycle (after the physical planning) in "PlanSubqueries" just before execution. Currently we try to execute the scalar subquery expression as part of partition pruning and fail as it implements Unevaluable. The fix attempts to ignore the Subquery expressions from partition pruning computation. Another option can be to somehow plan the subqueries before the partition pruning. Since this may not be a commonly occurring expression, I am opting for a simpler fix. Repro ``` SQL CREATE TABLE test_prc_bug ( id_value string ) partitioned by (id_type string) location '/tmp/test_prc_bug' stored as parquet; insert into test_prc_bug values ('1','a'); insert into test_prc_bug values ('2','a'); insert into test_prc_bug values ('3','b'); insert into test_prc_bug values ('4','b'); select * from test_prc_bug where id_type = (select 'b'); ``` ## How was this patch tested? Added test in SubquerySuite and hive/SQLQuerySuite Author: Dilip Biswal <dbiswal@us.ibm.com> Closes #21174 from dilipbiswal/spark-24085. (cherry picked from commit 3fd297af6dc568357c97abf86760c570309d6597) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 27 April 2018, 18:43:39 UTC |

| 07ec75c | jerryshao | 26 April 2018, 05:27:33 UTC | [SPARK-24062][THRIFT SERVER] Fix SASL encryption cannot enabled issue in thrift server ## What changes were proposed in this pull request? For the details of the exception please see [SPARK-24062](https://issues.apache.org/jira/browse/SPARK-24062). The issue is: Spark on Yarn stores SASL secret in current UGI's credentials, this credentials will be distributed to AM and executors, so that executors and drive share the same secret to communicate. But STS/Hive library code will refresh the current UGI by UGI's loginFromKeytab() after Spark application is started, this will create a new UGI in the current driver's context with empty tokens and secret keys, so secret key is lost in the current context's UGI, that's why Spark driver throws secret key not found exception. In Spark 2.2 code, Spark also stores this secret key in SecurityManager's class variable, so even UGI is refreshed, the secret is still existed in the object, so STS with SASL can still be worked in Spark 2.2. But in Spark 2.3, we always search key from current UGI, which makes it fail to work in Spark 2.3. To fix this issue, there're two possible solutions: 1. Fix in STS/Hive library, when a new UGI is refreshed, copy the secret key from original UGI to the new one. The difficulty is that some codes to refresh the UGI is existed in Hive library, which makes us hard to change the code. 2. Roll back the logics in SecurityManager to match Spark 2.2, so that this issue can be fixed. 2nd solution seems a simple one. So I will propose a PR with 2nd solution. ## How was this patch tested? Verified in local cluster. CC vanzin tgravescs please help to review. Thanks! Author: jerryshao <sshao@hortonworks.com> Closes #21138 from jerryshao/SPARK-24062. (cherry picked from commit ffaf0f9fd407aeba7006f3d785ea8a0e51187357) Signed-off-by: jerryshao <sshao@hortonworks.com> | 26 April 2018, 05:27:58 UTC |

| 096defd | seancxmao | 24 April 2018, 08:16:07 UTC | [MINOR][DOCS] Fix comments of SQLExecution#withExecutionId ## What changes were proposed in this pull request? Fix comment. Change `BroadcastHashJoin.broadcastFuture` to `BroadcastExchangeExec.relationFuture`: https://github.com/apache/spark/blob/d28d5732ae205771f1f443b15b10e64dcffb5ff0/sql/core/src/main/scala/org/apache/spark/sql/execution/exchange/BroadcastExchangeExec.scala#L66 ## How was this patch tested? N/A Author: seancxmao <seancxmao@gmail.com> Closes #21113 from seancxmao/SPARK-13136. (cherry picked from commit c303b1b6766a3dc5961713f98f62cd7d7ac7972a) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 24 April 2018, 08:16:41 UTC |

| 1c3e820 | gatorsmile | 23 April 2018, 20:57:57 UTC | Revert "[SPARK-23799][SQL] FilterEstimation.evaluateInSet produces devision by zero in a case of empty table with analyzed statistics" This reverts commit c2f4ee7baf07501cc1f8a23dd21d14aea53606c7. | 23 April 2018, 20:57:57 UTC |

| 8eb9a41 | Tathagata Das | 23 April 2018, 20:20:32 UTC | [SPARK-23004][SS] Ensure StateStore.commit is called only once in a streaming aggregation task ## What changes were proposed in this pull request? A structured streaming query with a streaming aggregation can throw the following error in rare cases. ``` java.lang.IllegalStateException: Cannot commit after already committed or aborted at org.apache.spark.sql.execution.streaming.state.HDFSBackedStateStoreProvider.org$apache$spark$sql$execution$streaming$state$HDFSBackedStateStoreProvider$$verify(HDFSBackedStateStoreProvider.scala:643) at org.apache.spark.sql.execution.streaming.state.HDFSBackedStateStoreProvider$HDFSBackedStateStore.commit(HDFSBackedStateStoreProvider.scala:135) at org.apache.spark.sql.execution.streaming.StateStoreSaveExec$$anonfun$doExecute$3$$anon$2$$anonfun$hasNext$2.apply$mcV$sp(statefulOperators.scala:359) at org.apache.spark.sql.execution.streaming.StateStoreWriter$class.timeTakenMs(statefulOperators.scala:102) at org.apache.spark.sql.execution.streaming.StateStoreSaveExec.timeTakenMs(statefulOperators.scala:251) at org.apache.spark.sql.execution.streaming.StateStoreSaveExec$$anonfun$doExecute$3$$anon$2.hasNext(statefulOperators.scala:359) at org.apache.spark.sql.execution.aggregate.ObjectAggregationIterator.processInputs(ObjectAggregationIterator.scala:188) at org.apache.spark.sql.execution.aggregate.ObjectAggregationIterator.<init>(ObjectAggregationIterator.scala:78) at org.apache.spark.sql.execution.aggregate.ObjectHashAggregateExec$$anonfun$doExecute$1$$anonfun$2.apply(ObjectHashAggregateExec.scala:114) at org.apache.spark.sql.execution.aggregate.ObjectHashAggregateExec$$anonfun$doExecute$1$$anonfun$2.apply(ObjectHashAggregateExec.scala:105) at org.apache.spark.rdd.RDD$$anonfun$mapPartitionsWithIndexInternal$1$$anonfun$apply$24.apply(RDD.scala:830) at org.apache.spark.rdd.RDD$$anonfun$mapPartitionsWithIndexInternal$1$$anonfun$apply$24.apply(RDD.scala:830) at 
org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:42) at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:336) ``` This can happen when the following conditions are accidentally hit. - Streaming aggregation with aggregation function that is a subset of [`TypedImperativeAggregation`](https://github.com/apache/spark/blob/76b8b840ddc951ee6203f9cccd2c2b9671c1b5e8/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/aggregate/interfaces.scala#L473) (for example, `collect_set`, `collect_list`, `percentile`, etc.). - Query running in `update` mode - After the shuffle, a partition has exactly 128 records. This causes StateStore.commit to be called twice. See the [JIRA](https://issues.apache.org/jira/browse/SPARK-23004) for a more detailed explanation. The solution is to use `NextIterator` or `CompletionIterator`, each of which has a flag to prevent the "onCompletion" task from being called more than once. In this PR, I chose to implement using `NextIterator`. ## How was this patch tested? Added unit test that I have confirmed will fail without the fix. Author: Tathagata Das <tathagata.das1565@gmail.com> Closes #21124 from tdas/SPARK-23004. (cherry picked from commit 770add81c3474e754867d7105031a5eaf27159bd) Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com> | 23 April 2018, 20:22:03 UTC |
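
The "flag to prevent the onCompletion task from being called more than once" idea can be sketched outside Spark. The Python class below is only an illustration of that guard; `OnceCompletionIterator` and its parameters are hypothetical names, not Spark's `NextIterator` or `CompletionIterator`.

```python
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


class OnceCompletionIterator(Iterator[T]):
    """Hypothetical wrapper: runs `on_completion` at most once on exhaustion."""

    def __init__(self, source: Iterable[T], on_completion: Callable[[], None]) -> None:
        self._source = iter(source)
        self._on_completion = on_completion
        self._completed = False  # guard flag: has the callback already run?

    def __iter__(self) -> "OnceCompletionIterator[T]":
        return self

    def __next__(self) -> T:
        try:
            return next(self._source)
        except StopIteration:
            # Callers may poll an exhausted iterator repeatedly;
            # only the first exhaustion triggers the callback.
            if not self._completed:
                self._completed = True
                self._on_completion()
            raise


commits = []
it = OnceCompletionIterator([1, 2, 3], lambda: commits.append("commit"))
items = list(it)        # drains the iterator and runs the callback once
extra = next(it, None)  # polling again must not run the callback a second time
```

Without the `_completed` flag, the second poll would invoke the callback again, which is the shape of the double `commit` described in this commit.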

| c2f4ee7 | Mykhailo Shtelma | 22 April 2018, 06:33:57 UTC | [SPARK-23799][SQL] FilterEstimation.evaluateInSet produces devision by zero in a case of empty table with analyzed statistics >What changes were proposed in this pull request? During evaluation of IN conditions, if the source data frame, is represented by a plan, that uses hive table with columns, which were previously analysed, and the plan has conditions for these fields, that cannot be satisfied (which leads us to an empty data frame), FilterEstimation.evaluateInSet method produces NumberFormatException and ClassCastException. In order to fix this bug, method FilterEstimation.evaluateInSet at first checks, if distinct count is not zero, and also checks if colStat.min and colStat.max are defined, and only in this case proceeds with the calculation. If at least one of the conditions is not satisfied, zero is returned. >How was this patch tested? In order to test the PR two tests were implemented: one in FilterEstimationSuite, that tests the plan with the statistics that violates the conditions mentioned above, and another one in StatisticsCollectionSuite, that test the whole process of analysis/optimisation of the query, that leads to the problems, mentioned in the first section. Author: Mykhailo Shtelma <mykhailo.shtelma@bearingpoint.com> Author: smikesh <mshtelma@gmail.com> Closes #21052 from mshtelma/filter_estimation_evaluateInSet_Bugs. (cherry picked from commit c48085aa91c60615a4de3b391f019f46f3fcdbe3) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 22 April 2018, 06:34:06 UTC |

| d914100 | gatorsmile | 21 April 2018, 17:45:12 UTC | [SPARK-24033][SQL] Fix Mismatched of Window Frame specifiedwindowframe(RowFrame, -1, -1) ## What changes were proposed in this pull request? When the OffsetWindowFunction's frame is `UnaryMinus(Literal(1))` but the specified window frame has been simplified to `Literal(-1)` by some optimizer rules e.g., `ConstantFolding`. Thus, they do not match and cause the following error: ``` org.apache.spark.sql.AnalysisException: Window Frame specifiedwindowframe(RowFrame, -1, -1) must match the required frame specifiedwindowframe(RowFrame, -1, -1); at org.apache.spark.sql.catalyst.analysis.CheckAnalysis$class.failAnalysis(CheckAnalysis.scala:41) at org.apache.spark.sql.catalyst.analysis.Analyzer.failAnalysis(Analyzer.scala:91) at ``` ## How was this patch tested? Added a test Author: gatorsmile <gatorsmile@gmail.com> Closes #21115 from gatorsmile/fixLag. (cherry picked from commit 7bc853d08973a6bd839ad2222911eb0a0f413677) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 21 April 2018, 17:45:24 UTC |

| 8eb64a5 | Marcelo Vanzin | 20 April 2018, 17:22:14 UTC | Revert "[SPARK-23775][TEST] Make DataFrameRangeSuite not flaky" This reverts commit 130641102ceecf2a795d7f0dc6412c7e56eb03a8. | 20 April 2018, 17:22:14 UTC |

| 9b562d6 | Gabor Somogyi | 19 April 2018, 22:06:27 UTC | [SPARK-24022][TEST] Make SparkContextSuite not flaky ## What changes were proposed in this pull request? SparkContextSuite.test("Cancelling stages/jobs with custom reasons.") could stay in an infinite loop because of the problem found and fixed in [SPARK-23775](https://issues.apache.org/jira/browse/SPARK-23775). This PR solves this flakiness by removing shared variable usages when cancel happens in a loop and using wait and CountDownLatch for synchronization. ## How was this patch tested? Existing unit test. Author: Gabor Somogyi <gabor.g.somogyi@gmail.com> Closes #21105 from gaborgsomogyi/SPARK-24022. (cherry picked from commit e55953b0bf2a80b34127ba123417ee54955a6064) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 19 April 2018, 22:06:38 UTC |

| be184d1 | Dongjoon Hyun | 19 April 2018, 16:48:34 UTC | [SPARK-23340][SQL][BRANCH-2.3] Upgrade Apache ORC to 1.4.3 ## What changes were proposed in this pull request? This PR updates Apache ORC dependencies to 1.4.3 released on February 9th. Apache ORC 1.4.2 release removes unnecessary dependencies and 1.4.3 has 5 more patches (https://s.apache.org/Fll8). Especially, the following ORC-285 is fixed at 1.4.3. ```scala scala> val df = Seq(Array.empty[Float]).toDF() scala> df.write.format("orc").save("/tmp/floatarray") scala> spark.read.orc("/tmp/floatarray") res1: org.apache.spark.sql.DataFrame = [value: array<float>] scala> spark.read.orc("/tmp/floatarray").show() 18/02/12 22:09:10 ERROR Executor: Exception in task 0.0 in stage 1.0 (TID 1) java.io.IOException: Error reading file: file:/tmp/floatarray/part-00000-9c0b461b-4df1-4c23-aac1-3e4f349ac7d6-c000.snappy.orc at org.apache.orc.impl.RecordReaderImpl.nextBatch(RecordReaderImpl.java:1191) at org.apache.orc.mapreduce.OrcMapreduceRecordReader.ensureBatch(OrcMapreduceRecordReader.java:78) ... Caused by: java.io.EOFException: Read past EOF for compressed stream Stream for column 2 kind DATA position: 0 length: 0 range: 0 offset: 0 limit: 0 ``` ## How was this patch tested? Pass the Jenkins test. Author: Dongjoon Hyun <dongjoon@apache.org> Closes #21093 from dongjoon-hyun/SPARK-23340-2. | 19 April 2018, 16:48:34 UTC |

| fb96821 | Wenchen Fan | 19 April 2018, 15:54:53 UTC | [SPARK-23989][SQL] exchange should copy data before non-serialized shuffle ## What changes were proposed in this pull request? In Spark SQL, we usually reuse the `UnsafeRow` instance and need to copy the data when a place buffers non-serialized objects. Shuffle may buffer objects if we don't make it to the bypass merge shuffle or unsafe shuffle. `ShuffleExchangeExec.needToCopyObjectsBeforeShuffle` misses the case that, if `spark.sql.shuffle.partitions` is large enough, we could fail to run unsafe shuffle and go with the non-serialized shuffle. This bug is very hard to hit since users wouldn't set such a large number of partitions(16 million) for Spark SQL exchange. TODO: test ## How was this patch tested? todo. Author: Wenchen Fan <wenchen@databricks.com> Closes #21101 from cloud-fan/shuffle. (cherry picked from commit 6e19f7683fc73fabe7cdaac4eb1982d2e3e607b7) Signed-off-by: Herman van Hovell <hvanhovell@databricks.com> | 19 April 2018, 15:55:06 UTC |

| 7fb1117 | wuyi | 19 April 2018, 14:00:33 UTC | [SPARK-24021][CORE] fix bug in BlacklistTracker's updateBlacklistForFetchFailure ## What changes were proposed in this pull request? There's a mistake in BlacklistTracker's updateBlacklistForFetchFailure: ``` val blacklistedExecsOnNode = nodeToBlacklistedExecs.getOrElseUpdate(exec, HashSet[String]()) blacklistedExecsOnNode += exec ``` where the first **exec** should be **host**. ## How was this patch tested? Adjusted the existing test. Author: wuyi <ngone_5451@163.com> Closes #21104 from Ngone51/SPARK-24021. (cherry picked from commit 0deaa5251326a32a3d2d2b8851193ca926303972) Signed-off-by: Imran Rashid <irashid@cloudera.com> | 19 April 2018, 14:00:46 UTC |
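
A minimal Python sketch of why the map key matters, assuming a plain dictionary as a stand-in for `nodeToBlacklistedExecs`. The function name, host names, and executor ids are invented for illustration.

```python
from collections import defaultdict
from typing import DefaultDict, Set

# Hypothetical stand-in for the node -> blacklisted-executors map.
node_to_blacklisted_execs: DefaultDict[str, Set[str]] = defaultdict(set)


def blacklist_executor(host: str, exec_id: str) -> None:
    # Key the map by host (the node), not by the executor id,
    # so executors on the same node accumulate in one set.
    node_to_blacklisted_execs[host].add(exec_id)


blacklist_executor("host-a", "exec-1")
blacklist_executor("host-a", "exec-2")
blacklist_executor("host-b", "exec-3")
```

Keying by the executor id instead would produce one single-element entry per executor, so a per-node lookup would never find them.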

| 32bec6c | Liang-Chi Hsieh | 19 April 2018, 02:00:57 UTC | [SPARK-24014][PYSPARK] Add onStreamingStarted method to StreamingListener ## What changes were proposed in this pull request? The `StreamingListener` on the PySpark side seems to lack an `onStreamingStarted` method. This patch adds it and a test for it. This patch also includes a trivial doc improvement for `createDirectStream`. Original PR is #21057. ## How was this patch tested? Added test. Author: Liang-Chi Hsieh <viirya@gmail.com> Closes #21098 from viirya/SPARK-24014. (cherry picked from commit 8bb0df2c65355dfdcd28e362ff661c6c7ebc99c0) Signed-off-by: jerryshao <sshao@hortonworks.com> | 19 April 2018, 02:01:13 UTC |

| 1306411 | Gabor Somogyi | 18 April 2018, 23:37:41 UTC | [SPARK-23775][TEST] Make DataFrameRangeSuite not flaky ## What changes were proposed in this pull request? DataFrameRangeSuite.test("Cancelling stage in a query with Range.") sometimes stays in an infinite loop and times out the build. There were multiple issues with the test: 1. The first valid stageId is zero when the test is started alone and not in a suite, and the following code waits until timeout: ``` eventually(timeout(10.seconds), interval(1.millis)) { assert(DataFrameRangeSuite.stageToKill > 0) } ``` 2. The `DataFrameRangeSuite.stageToKill` was overwritten by the task's thread after the reset, which ended up canceling the same stage 2 times. This caused the infinite wait. This PR solves this flakiness by removing the shared `DataFrameRangeSuite.stageToKill` and using `wait` and `CountDownLatch` for synchronization. ## How was this patch tested? Existing unit test. Author: Gabor Somogyi <gabor.g.somogyi@gmail.com> Closes #20888 from gaborgsomogyi/SPARK-23775. (cherry picked from commit 0c94e48bc50717e1627c0d2acd5382d9adc73c97) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 18 April 2018, 23:37:52 UTC |

| 5bcb7bd | Bruce Robbins | 13 April 2018, 21:05:04 UTC | [SPARK-23963][SQL] Properly handle large number of columns in query on text-based Hive table ## What changes were proposed in this pull request? TableReader would get disproportionately slower as the number of columns in the query increased. I fixed the way TableReader was looking up metadata for each column in the row. Previously, it had been looking up this data in linked lists, accessing each linked list by an index (column number). Now it looks up this data in arrays, where indexing by column number works better. ## How was this patch tested? Manual testing All sbt unit tests python sql tests Author: Bruce Robbins <bersprockets@gmail.com> Closes #21043 from bersprockets/tabreadfix. | 18 April 2018, 16:48:49 UTC |

| a1c56b6 | Takuya UESHIN | 18 April 2018, 15:22:05 UTC | [SPARK-24007][SQL] EqualNullSafe for FloatType and DoubleType might generate a wrong result by codegen. ## What changes were proposed in this pull request? `EqualNullSafe` for `FloatType` and `DoubleType` might generate a wrong result by codegen. ```scala scala> val df = Seq((Some(-1.0d), None), (None, Some(-1.0d))).toDF() df: org.apache.spark.sql.DataFrame = [_1: double, _2: double] scala> df.show() +----+----+ | _1| _2| +----+----+ |-1.0|null| |null|-1.0| +----+----+ scala> df.filter("_1 <=> _2").show() +----+----+ | _1| _2| +----+----+ |-1.0|null| |null|-1.0| +----+----+ ``` The result should be empty but the result remains two rows. ## How was this patch tested? Added a test. Author: Takuya UESHIN <ueshin@databricks.com> Closes #21094 from ueshin/issues/SPARK-24007/equalnullsafe. (cherry picked from commit f09a9e9418c1697d198de18f340b1288f5eb025c) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 18 April 2018, 15:22:16 UTC |
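
For reference, the expected semantics of null-safe equality (`<=>`) can be written down in a few lines of Python. This is a sketch of the semantics only, with `None` standing in for SQL `NULL`. It does not model Spark's generated code or its NaN handling, and `eq_null_safe` is a hypothetical helper name.

```python
from typing import Optional


def eq_null_safe(left: Optional[float], right: Optional[float]) -> bool:
    # If either side is null, the result is true only when both are null.
    if left is None or right is None:
        return left is None and right is None
    # Otherwise compare the actual values.
    return left == right


rows = [(-1.0, None), (None, -1.0)]
matching = [row for row in rows if eq_null_safe(*row)]
```

Under these semantics neither row from the example above matches, so the filtered result should be empty.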

| 6b99d5b | jinxing | 17 April 2018, 20:53:29 UTC | [SPARK-23948] Trigger mapstage's job listener in submitMissingTasks ## What changes were proposed in this pull request? SparkContext submitted a map stage from `submitMapStage` to `DAGScheduler`, `markMapStageJobAsFinished` is called only in (https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L933 and https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L1314); But think about below scenario: 1. stage0 and stage1 are all `ShuffleMapStage` and stage1 depends on stage0; 2. We submit stage1 by `submitMapStage`; 3. When stage 1 running, `FetchFailed` happened, stage0 and stage1 got resubmitted as stage0_1 and stage1_1; 4. When stage0_1 running, speculated tasks in old stage1 come as succeeded, but stage1 is not inside `runningStages`. So even though all splits(including the speculated tasks) in stage1 succeeded, job listener in stage1 will not be called; 5. stage0_1 finished, stage1_1 starts running. When `submitMissingTasks`, there is no missing tasks. But in current code, job listener is not triggered. We should call the job listener for map stage in `5`. ## How was this patch tested? Not added yet. Author: jinxing <jinxing6042126.com> (cherry picked from commit 3990daaf3b6ca2c5a9f7790030096262efb12cb2) Author: jinxing <jinxing6042@126.com> Closes #21085 from squito/cp. | 17 April 2018, 20:53:29 UTC |

| 564019b | Marco Gaido | 17 April 2018, 16:35:44 UTC | [SPARK-23986][SQL] freshName can generate non-unique names ## What changes were proposed in this pull request? We are using `CodegenContext.freshName` to get a unique name for any new variable we are adding. Unfortunately, this method currently fails to create a unique name when we request more than one instance of variables with starting name `name1` and an instance with starting name `name11`. The PR changes the way a new name is generated by `CodegenContext.freshName` so that we generate unique names in this scenario too. ## How was this patch tested? added UT Author: Marco Gaido <marcogaido91@gmail.com> Closes #21080 from mgaido91/SPARK-23986. (cherry picked from commit f39e82ce150b6a7ea038e6858ba7adbaba3cad88) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 17 April 2018, 16:37:38 UTC |
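
The collision can be reproduced with a toy name generator. The Python sketch below assumes a naive scheme that appends a counter directly to the requested name, then contrasts it with a scheme that inserts a separator. Both classes are illustrative only and are not taken from `CodegenContext`.

```python
from typing import Dict


class NaiveNamer:
    """Assumed naive scheme: the counter is appended directly to the name."""

    def __init__(self) -> None:
        self._ids: Dict[str, int] = {}

    def fresh_name(self, name: str) -> str:
        if name in self._ids:
            n = self._ids[name]
            self._ids[name] = n + 1
            return f"{name}{n}"
        self._ids[name] = 1
        return name


class SeparatedNamer:
    """Illustrative alternative: always append `_<counter>` to the name."""

    def __init__(self) -> None:
        self._ids: Dict[str, int] = {}

    def fresh_name(self, name: str) -> str:
        n = self._ids.get(name, 0)
        self._ids[name] = n + 1
        return f"{name}_{n}"


naive = NaiveNamer()
naive_names = [naive.fresh_name(n) for n in ("name1", "name1", "name11")]

separated = SeparatedNamer()
separated_names = [separated.fresh_name(n) for n in ("name1", "name1", "name11")]
```

With the naive scheme the second request for `name1` and the first request for `name11` both yield `name11`. With the separator, the base name can always be recovered by stripping the final `_<counter>`, so distinct requests cannot collide.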

| 9857e24 | Marco Gaido | 17 April 2018, 13:45:20 UTC | [SPARK-23835][SQL] Add not-null check to Tuples' arguments deserialization ## What changes were proposed in this pull request? There was no check on nullability for arguments of `Tuple`s. This could lead to have weird behavior when a null value had to be deserialized into a non-nullable Scala object: in those cases, the `null` got silently transformed in a valid value (like `-1` for `Int`), corresponding to the default value we are using in the SQL codebase. This situation was very likely to happen when deserializing to a Tuple of primitive Scala types (like Double, Int, ...). The PR adds the `AssertNotNull` to arguments of tuples which have been asked to be converted to non-nullable types. ## How was this patch tested? added UT Author: Marco Gaido <marcogaido91@gmail.com> Closes #20976 from mgaido91/SPARK-23835. (cherry picked from commit 0a9172a05e604a4a94adbb9208c8c02362afca00) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 17 April 2018, 13:46:00 UTC |

| d4f204c | hyukjinkwon | 14 April 2018, 13:44:06 UTC | [SPARK-23942][PYTHON][SQL][BRANCH-2.3] Makes collect in PySpark as action for a query executor listener ## What changes were proposed in this pull request? This PR proposes to add `collect` to a query executor as an action. Seems `collect` / `collect` with Arrow are not recognised via `QueryExecutionListener` as an action. For example, if we have a custom listener as below: ```scala package org.apache.spark.sql import org.apache.spark.internal.Logging import org.apache.spark.sql.execution.QueryExecution import org.apache.spark.sql.util.QueryExecutionListener class TestQueryExecutionListener extends QueryExecutionListener with Logging { override def onSuccess(funcName: String, qe: QueryExecution, durationNs: Long): Unit = { logError("Look at me! I'm 'onSuccess'") } override def onFailure(funcName: String, qe: QueryExecution, exception: Exception): Unit = { } } ``` and set `spark.sql.queryExecutionListeners` to `org.apache.spark.sql.TestQueryExecutionListener` Other operations in PySpark or Scala side seems fine: ```python >>> sql("SELECT * FROM range(1)").show() ``` ``` 18/04/09 17:02:04 ERROR TestQueryExecutionListener: Look at me! I'm 'onSuccess' +---+ | id| +---+ | 0| +---+ ``` ```scala scala> sql("SELECT * FROM range(1)").collect() ``` ``` 18/04/09 16:58:41 ERROR TestQueryExecutionListener: Look at me! I'm 'onSuccess' res1: Array[org.apache.spark.sql.Row] = Array([0]) ``` but .. **Before** ```python >>> sql("SELECT * FROM range(1)").collect() ``` ``` [Row(id=0)] ``` ```python >>> spark.conf.set("spark.sql.execution.arrow.enabled", "true") >>> sql("SELECT * FROM range(1)").toPandas() ``` ``` id 0 0 ``` **After** ```python >>> sql("SELECT * FROM range(1)").collect() ``` ``` 18/04/09 16:57:58 ERROR TestQueryExecutionListener: Look at me! 
I'm 'onSuccess' [Row(id=0)] ``` ```python >>> spark.conf.set("spark.sql.execution.arrow.enabled", "true") >>> sql("SELECT * FROM range(1)").toPandas() ``` ``` 18/04/09 17:53:26 ERROR TestQueryExecutionListener: Look at me! I'm 'onSuccess' id 0 0 ``` ## How was this patch tested? I have manually tested as described above and unit test was added. Author: hyukjinkwon <gurwls223@apache.org> Closes #21060 from HyukjinKwon/PR_TOOL_PICK_PR_21007_BRANCH-2.3. | 14 April 2018, 13:44:06 UTC |

| dfdf1bb | Fangshi Li | 13 April 2018, 05:46:34 UTC | [SPARK-23815][CORE] Spark writer dynamic partition overwrite mode may fail to write output on multi level partition ## What changes were proposed in this pull request? Spark introduced new writer mode to overwrite only related partitions in SPARK-20236. While we are using this feature in our production cluster, we found a bug when writing multi-level partitions on HDFS. A simple test case to reproduce this issue: val df = Seq(("1","2","3")).toDF("col1", "col2","col3") df.write.partitionBy("col1","col2").mode("overwrite").save("/my/hdfs/location") If HDFS location "/my/hdfs/location" does not exist, there will be no output. This seems to be caused by the job commit change in SPARK-20236 in HadoopMapReduceCommitProtocol. In the commit job process, the output has been written into staging dir /my/hdfs/location/.spark-staging.xxx/col1=1/col2=2, and then the code calls fs.rename to rename /my/hdfs/location/.spark-staging.xxx/col1=1/col2=2 to /my/hdfs/location/col1=1/col2=2. However, in our case the operation will fail on HDFS because /my/hdfs/location/col1=1 does not exists. HDFS rename can not create directory for more than one level. This does not happen in the new unit test added with SPARK-20236 which uses local file system. We are proposing a fix. When cleaning current partition dir /my/hdfs/location/col1=1/col2=2 before the rename op, if the delete op fails (because /my/hdfs/location/col1=1/col2=2 may not exist), we call mkdirs op to create the parent dir /my/hdfs/location/col1=1 (if the parent dir does not exist) so the following rename op can succeed. Reference: in official HDFS document(https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/filesystem/filesystem.html), the rename command has precondition "dest must be root, or have a parent that exists" ## How was this patch tested? 
We have tested this patch on our production cluster and it fixed the problem Author: Fangshi Li <fli@linkedin.com> Closes #20931 from fangshil/master. (cherry picked from commit 4b07036799b01894826b47c73142fe282c607a57) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 13 April 2018, 05:47:31 UTC |
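
The "create the parent, then rename" step can be sketched with the local filesystem as a stand-in for HDFS. This is an illustration under that assumption, not the committed change. `rename_with_parents` and the `.spark-staging.demo` directory name are hypothetical.

```python
import os
import tempfile


def rename_with_parents(src: str, dest: str) -> None:
    """Ensure dest's parent directory exists, then rename src to dest."""
    os.makedirs(os.path.dirname(dest), exist_ok=True)
    os.rename(src, dest)


root = tempfile.mkdtemp()

# Staged output for a two-level partition (hypothetical staging dir name).
staged = os.path.join(root, ".spark-staging.demo", "col1=1", "col2=2")
os.makedirs(staged)

# Final location; its parent `col1=1` does not exist yet.
final = os.path.join(root, "col1=1", "col2=2")
rename_with_parents(staged, final)
```

Calling `os.rename` directly here would fail because the destination's parent is missing, which mirrors the rename precondition quoted above.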

| 2995b79 | jerryshao | 13 April 2018, 03:00:25 UTC | [SPARK-23748][SS] Fix SS continuous process doesn't support SubqueryAlias issue ## What changes were proposed in this pull request? Current SS continuous doesn't support processing on temp table or `df.as("xxx")`, SS will throw an exception as LogicalPlan not supported, details described in [here](https://issues.apache.org/jira/browse/SPARK-23748). So here propose to add this support. ## How was this patch tested? new UT. Author: jerryshao <sshao@hortonworks.com> Closes #21017 from jerryshao/SPARK-23748. (cherry picked from commit 14291b061b9b40eadbf4ed442f9a5021b8e09597) Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com> | 13 April 2018, 03:00:40 UTC |

| 908c681 | Patrick Pisciuneri | 13 April 2018, 01:45:27 UTC | [SPARK-23867][SCHEDULER] use droppedCount in logWarning ## What changes were proposed in this pull request? Get the count of dropped events for output in log message. ## How was this patch tested? The fix is pretty trivial, but `./dev/run-tests` were run and were successful. Please review http://spark.apache.org/contributing.html before opening a pull request. vanzin cloud-fan The contribution is my original work and I license the work to the project under the project’s open source license. Author: Patrick Pisciuneri <Patrick.Pisciuneri@target.com> Closes #20977 from phpisciuneri/fix-log-warning. (cherry picked from commit 682002b6da844ed11324ee5ff4d00fc0294c0b31) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 13 April 2018, 01:45:45 UTC |

| 5712695 | Imran Rashid | 12 April 2018, 07:58:04 UTC | [SPARK-23962][SQL][TEST] Fix race in currentExecutionIds(). SQLMetricsTestUtils.currentExecutionIds() was racing with the listener bus, which led to some flaky tests. We should wait till the listener bus is empty. I tested by adding some Thread.sleep()s in SQLAppStatusListener, which reproduced the exceptions I saw on Jenkins. With this change, they went away. Author: Imran Rashid <irashid@cloudera.com> Closes #21041 from squito/SPARK-23962. (cherry picked from commit 6a2289ecf020a99cd9b3bcea7da5e78fb4e0303a) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 12 April 2018, 07:58:36 UTC |

| 03a4dfd | JBauerKogentix | 11 April 2018, 22:52:13 UTC | typo rawPredicition changed to rawPrediction MultilayerPerceptronClassifier had 4 occurrences ## What changes were proposed in this pull request? (Please fill in changes proposed in this fix) ## How was this patch tested? (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. Author: JBauerKogentix <37910022+JBauerKogentix@users.noreply.github.com> Closes #21030 from JBauerKogentix/patch-1. (cherry picked from commit 9d960de0814a1128318676cc2e91f447cdf0137f) Signed-off-by: Joseph K. Bradley <joseph@databricks.com> | 11 April 2018, 22:52:24 UTC |

| acfc156 | Joseph K. Bradley | 11 April 2018, 18:41:50 UTC | [SPARK-22883][ML] ML test for StructuredStreaming: spark.ml.feature, I-M This backports https://github.com/apache/spark/pull/20964 to branch-2.3. ## What changes were proposed in this pull request? Adds structured streaming tests using testTransformer for these suites: * IDF * Imputer * Interaction * MaxAbsScaler * MinHashLSH * MinMaxScaler * NGram ## How was this patch tested? It is a bunch of tests! Author: Joseph K. Bradley <josephdatabricks.com> Author: Joseph K. Bradley <joseph@databricks.com> Closes #21042 from jkbradley/SPARK-22883-part2-2.3backport. | 11 April 2018, 18:41:50 UTC |

| 320269e | hyukjinkwon | 11 April 2018, 11:44:01 UTC | [MINOR][DOCS] Fix R documentation generation instruction for roxygen2 ## What changes were proposed in this pull request? This PR proposes to fix `roxygen2` to `5.0.1` in `docs/README.md` for SparkR documentation generation. If I use higher version and creates the doc, it shows the diff below. Not a big deal but it bothered me. ```diff diff --git a/R/pkg/DESCRIPTION b/R/pkg/DESCRIPTION index 855eb5bf77f..159fca61e06 100644 --- a/R/pkg/DESCRIPTION +++ b/R/pkg/DESCRIPTION -57,6 +57,6 Collate: 'types.R' 'utils.R' 'window.R' -RoxygenNote: 5.0.1 +RoxygenNote: 6.0.1 VignetteBuilder: knitr NeedsCompilation: no ``` ## How was this patch tested? Manually tested. I met this every time I set the new environment for Spark dev but I have kept forgetting to fix it. Author: hyukjinkwon <gurwls223@apache.org> Closes #21020 from HyukjinKwon/minor-r-doc. (cherry picked from commit 87611bba222a95158fc5b638a566bdf47346da8e) Signed-off-by: hyukjinkwon <gurwls223@apache.org> Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 11 April 2018, 11:44:22 UTC |

| 0f2aabc | Imran Rashid | 09 April 2018, 18:31:21 UTC | [SPARK-23816][CORE] Killed tasks should ignore FetchFailures. SPARK-19276 ensured that FetchFailures do not get swallowed by other layers of exception handling, but it also meant that a killed task could look like a fetch failure. This is particularly a problem with speculative execution, where we expect to kill tasks as they are reading shuffle data. The fix is to ensure that we always check for killed tasks first. Added a new unit test which fails before the fix, ran it 1k times to check for flakiness. Full suite of tests on jenkins. Author: Imran Rashid <irashid@cloudera.com> Closes #20987 from squito/SPARK-23816. (cherry picked from commit 10f45bb8233e6ac838dd4f053052c8556f5b54bd) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 09 April 2018, 18:31:39 UTC |

| bf1dabe | Xingbo Jiang | 09 April 2018, 17:19:22 UTC | [SPARK-23881][CORE][TEST] Fix flaky test JobCancellationSuite."interruptible iterator of shuffle reader" ## What changes were proposed in this pull request? The test case JobCancellationSuite."interruptible iterator of shuffle reader" has been flaky because the `KillTask` event is handled asynchronously, so it can happen that the semaphore is released but the task is still running. Actually we only have to check that the total number of processed elements is less than the number of input elements, so we know the task got cancelled. ## How was this patch tested? The new test case still fails without the proposed patch, and succeeds in current master. Author: Xingbo Jiang <xingbo.jiang@databricks.com> Closes #20993 from jiangxb1987/JobCancellationSuite. (cherry picked from commit d81f29ecafe8fc9816e36087e3b8acdc93d6cc1b) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 09 April 2018, 17:19:33 UTC |

| 1a537a2 | Eric Liang | 08 April 2018, 04:18:50 UTC | [SPARK-23809][SQL][BACKPORT] Active SparkSession should be set by getOrCreate This backports https://github.com/apache/spark/pull/20927 to branch-2.3 ## What changes were proposed in this pull request? Currently, the active spark session is set inconsistently (e.g., in createDataFrame, prior to query execution). Many places in spark also incorrectly query active session when they should be calling activeSession.getOrElse(defaultSession) and so might get None even if a Spark session exists. The semantics here can be cleaned up if we also set the active session when the default session is set. Related: https://github.com/apache/spark/pull/20926/files ## How was this patch tested? Unit test, existing test. Note that if https://github.com/apache/spark/pull/20926 merges first we should also update the tests there. Author: Eric Liang <ekl@databricks.com> Closes #20971 from ericl/backport-23809. | 08 April 2018, 04:18:50 UTC |

| ccc4a20 | Yuchen Huo | 06 April 2018, 15:35:20 UTC | [SPARK-23822][SQL] Improve error message for Parquet schema mismatches ## What changes were proposed in this pull request? This pull request tries to improve the error message for spark while reading parquet files with different schemas, e.g. One with a STRING column and the other with a INT column. A new ParquetSchemaColumnConvertNotSupportedException is added to replace the old UnsupportedOperationException. The Exception is again wrapped in FileScanRdd.scala to throw a more a general QueryExecutionException with the actual parquet file name which trigger the exception. ## How was this patch tested? Unit tests added to check the new exception and verify the error messages. Also manually tested with two parquet with different schema to check the error message. <img width="1125" alt="screen shot 2018-03-30 at 4 03 04 pm" src="https://user-images.githubusercontent.com/37087310/38156580-dd58a140-3433-11e8-973a-b816d859fbe1.png"> Author: Yuchen Huo <yuchen.huo@databricks.com> Closes #20953 from yuchenhuo/SPARK-23822. (cherry picked from commit 94524019315ad463f9bc13c107131091d17c6af9) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 06 April 2018, 15:36:20 UTC |

| f93667f | JiahuiJiang | 06 April 2018, 03:06:08 UTC | [SPARK-23823][SQL] Keep origin in transformExpression Fixes https://issues.apache.org/jira/browse/SPARK-23823 Keep origin for all the methods using transformExpression ## What changes were proposed in this pull request? Keep origin in transformExpression ## How was this patch tested? Manually tested that this fixes https://issues.apache.org/jira/browse/SPARK-23823 and columns have correct origins after Analyzer.analyze Author: JiahuiJiang <jjiang@palantir.com> Author: Jiahui Jiang <jjiang@palantir.com> Closes #20961 from JiahuiJiang/jj/keep-origin. (cherry picked from commit d65e531b44a388fed25d3cbf28fdce5a2d0598e6) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 06 April 2018, 03:06:19 UTC |

| 0b7b8cc | jinxing | 04 April 2018, 22:51:27 UTC | [SPARK-23637][YARN] Yarn might allocate more resource if a same executor is killed multiple times. ## What changes were proposed in this pull request? `YarnAllocator` uses `numExecutorsRunning` to track the number of running executor. `numExecutorsRunning` is used to check if there're executors missing and need to allocate more. In current code, `numExecutorsRunning` can be negative when driver asks to kill a same idle executor multiple times. ## How was this patch tested? UT added Author: jinxing <jinxing6042@126.com> Closes #20781 from jinxing64/SPARK-23637. (cherry picked from commit d3bd0435ee4ff3d414f32cce3f58b6b9f67e68bc) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 04 April 2018, 22:51:38 UTC |

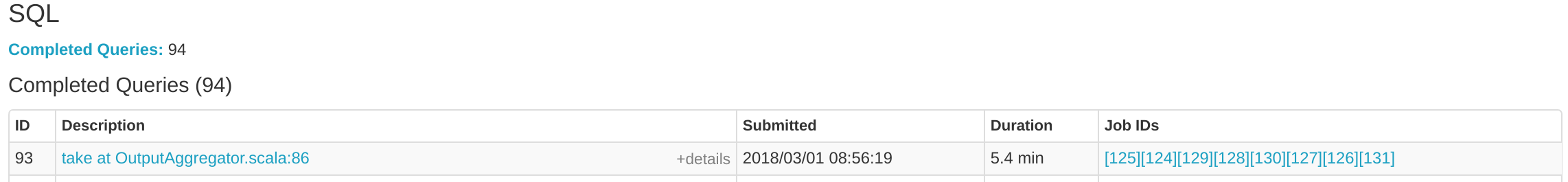

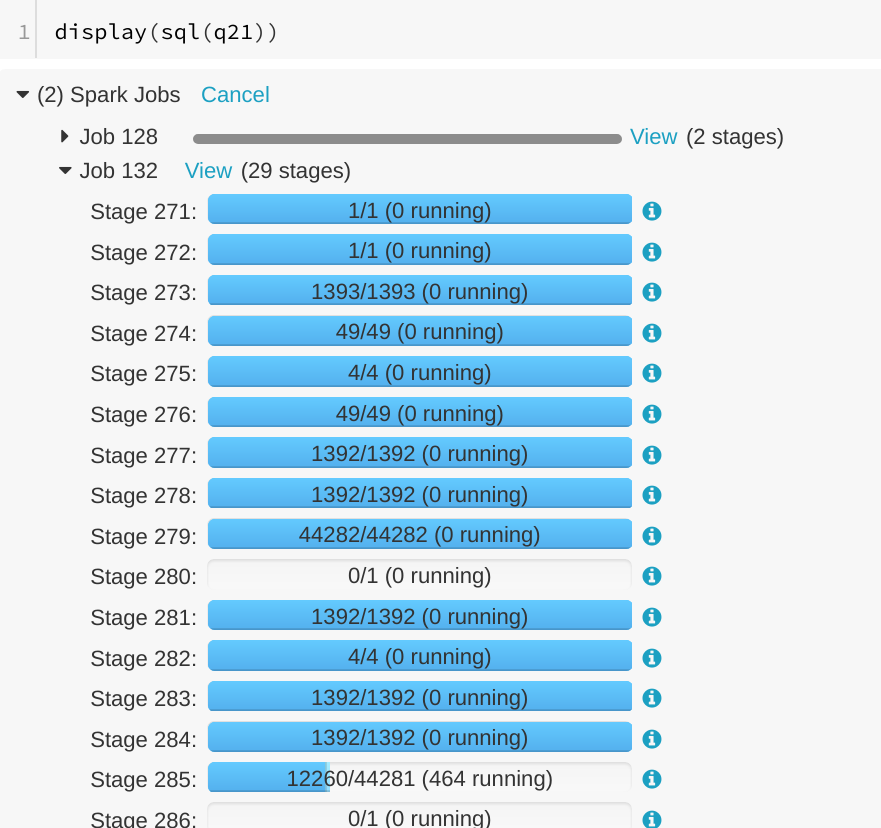

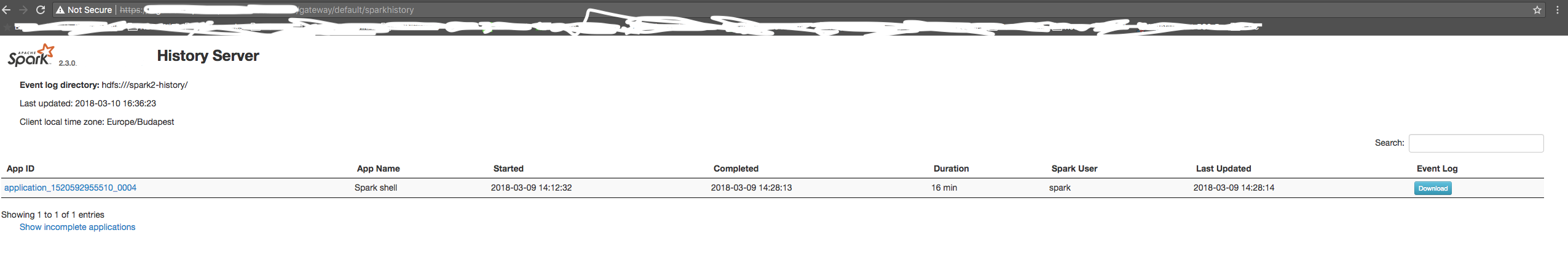

| a81e203 | Gengliang Wang | 04 April 2018, 22:43:58 UTC | [SPARK-23838][WEBUI] Running SQL query is displayed as "completed" in SQL tab ## What changes were proposed in this pull request? A running SQL query would appear as completed in the Spark UI. We can see the query in "Completed queries", while in the job page we see it's still running Job 132. After some time the query still appears in "Completed queries" (while it's still running), but the "Duration" gets increased. To reproduce, we can run a query with multiple jobs, e.g. TPCDS q6. The reason is that updates from executions are written into kvstore periodically, and the job start event may be missed. ## How was this patch tested? Manually ran the job again and checked the SQL tab. The fix is pretty simple. Author: Gengliang Wang <gengliang.wang@databricks.com> Closes #20955 from gengliangwang/jobCompleted. (cherry picked from commit d8379e5bc3629f4e8233ad42831bdaf68c24cfeb) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 04 April 2018, 22:44:11 UTC |

| 28c9adb | Robert Kruszewski | 04 April 2018, 00:25:54 UTC | [SPARK-23802][SQL] PropagateEmptyRelation can leave query plan in unresolved state ## What changes were proposed in this pull request? Add cast to nulls introduced by PropagateEmptyRelation so in cases they're part of coalesce they will not break its type checking rules ## How was this patch tested? Added unit test Author: Robert Kruszewski <robertk@palantir.com> Closes #20914 from robert3005/rk/propagate-empty-fix. (cherry picked from commit 5cfd5fabcdbd77a806b98a6dd59b02772d2f6dee) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 04 April 2018, 00:26:06 UTC |

| f36bdb4 | Xingbo Jiang | 03 April 2018, 13:26:49 UTC | [MINOR][CORE] Show block manager id when remove RDD/Broadcast fails. ## What changes were proposed in this pull request? Address https://github.com/apache/spark/pull/20924#discussion_r177987175, show block manager id when remove RDD/Broadcast fails. ## How was this patch tested? N/A Author: Xingbo Jiang <xingbo.jiang@databricks.com> Closes #20960 from jiangxb1987/bmid. (cherry picked from commit 7cf9fab33457ccc9b2d548f15dd5700d5e8d08ef) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 03 April 2018, 13:27:05 UTC |

| ce15651 | lemonjing | 03 April 2018, 01:36:44 UTC | [MINOR][DOC] Fix a few markdown typos ## What changes were proposed in this pull request? Easy fix in the markdown. ## How was this patch tested? jekyII build test manually. Please review http://spark.apache.org/contributing.html before opening a pull request. Author: lemonjing <932191671@qq.com> Closes #20897 from Lemonjing/master. (cherry picked from commit 8020f66fc47140a1b5f843fb18c34ec80541d5ca) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 03 April 2018, 01:36:59 UTC |

| 6ca6483 | Marcelo Vanzin | 03 April 2018, 01:31:47 UTC | [SPARK-19964][CORE] Avoid reading from remote repos in SparkSubmitSuite. These tests can fail with a timeout if the remote repos are not responding, or slow. The tests don't need anything from those repos, so use an empty ivy config file to avoid setting up the defaults. The tests are passing reliably for me locally now, and failing more often than not today without this change since http://dl.bintray.com/spark-packages/maven doesn't seem to be loading from my machine. Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #20916 from vanzin/SPARK-19964. (cherry picked from commit 441d0d0766e9a6ac4c6ff79680394999ff7191fd) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 03 April 2018, 01:32:03 UTC |

| f1f10da | Xianjin YE | 01 April 2018, 14:58:52 UTC | [SPARK-23040][BACKPORT][CORE] Returns interruptible iterator for shuffle reader Backport https://github.com/apache/spark/pull/20449 and https://github.com/apache/spark/pull/20920 to branch-2.3 --- ## What changes were proposed in this pull request? Before this commit, a non-interruptible iterator is returned if aggregator or ordering is specified. This commit also ensures that sorter is closed even when task is cancelled(killed) in the middle of sorting. ## How was this patch tested? Add a unit test in JobCancellationSuite Author: Xianjin YE <advancedxy@gmail.com> Author: Xingbo Jiang <xingbo.jiang@databricks.com> Closes #20954 from jiangxb1987/SPARK-23040-2.3. | 01 April 2018, 14:58:52 UTC |

| 507cff2 | Tathagata Das | 30 March 2018, 23:48:26 UTC | [SPARK-23827][SS] StreamingJoinExec should ensure that input data is partitioned into specific number of partitions ## What changes were proposed in this pull request? Currently, the requiredChildDistribution does not specify the partitions. This can cause the weird corner cases where the child's distribution is `SinglePartition` which satisfies the required distribution of `ClusterDistribution(no-num-partition-requirement)`, thus eliminating the shuffle needed to repartition input data into the required number of partitions (i.e. same as state stores). That can lead to "file not found" errors on the state store delta files as the micro-batch-with-no-shuffle will not run certain tasks and therefore not generate the expected state store delta files. This PR adds the required constraint on the number of partitions. ## How was this patch tested? Modified test harness to always check that ANY stateful operator should have a constraint on the number of partitions. As part of that, the existing opt-in checks on child output partitioning were removed, as they are redundant. Author: Tathagata Das <tathagata.das1565@gmail.com> Closes #20941 from tdas/SPARK-23827. (cherry picked from commit 15298b99ac8944e781328423289586176cf824d7) Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com> | 30 March 2018, 23:48:55 UTC |

| 3f5955a | Marcelo Vanzin | 30 March 2018, 17:25:17 UTC | Revert "[SPARK-23785][LAUNCHER] LauncherBackend doesn't check state of connection before setting state" This reverts commit 0bfbcaf6696570b74923047266b00ba4dc2ba97c. | 30 March 2018, 17:25:17 UTC |

| 1365d73 | Jose Torres | 30 March 2018, 04:36:56 UTC | [SPARK-23808][SQL] Set default Spark session in test-only spark sessions. ## What changes were proposed in this pull request? Set default Spark session in the TestSparkSession and TestHiveSparkSession constructors. ## How was this patch tested? new unit tests Author: Jose Torres <torres.joseph.f+github@gmail.com> Closes #20926 from jose-torres/test3. (cherry picked from commit b348901192b231153b58fe5720253168c87963d4) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 30 March 2018, 04:37:07 UTC |

| 5163045 | Kent Yao | 29 March 2018, 17:46:28 UTC | [SPARK-23639][SQL] Obtain token before init metastore client in SparkSQL CLI ## What changes were proposed in this pull request? In SparkSQLCLI, SessionState is created before SparkContext is instantiated. When we use --proxy-user to impersonate, it is unable to initialize a metastore client to talk to the secured metastore because there is no Kerberos ticket. This PR uses the real user's UGI to obtain a token for the owner before talking to the kerberized metastore. ## How was this patch tested? Manually verified with kerberized Hive metastore / HDFS. Author: Kent Yao <yaooqinn@hotmail.com> Closes #20784 from yaooqinn/SPARK-23639. (cherry picked from commit a7755fd8ce2f022118b9827aaac7d5d59f0f297a) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 29 March 2018, 17:46:39 UTC |

| 0bfbcaf | Sahil Takiar | 29 March 2018, 17:23:23 UTC | [SPARK-23785][LAUNCHER] LauncherBackend doesn't check state of connection before setting state ## What changes were proposed in this pull request? Changed `LauncherBackend` `set` method so that it checks if the connection is open or not before writing to it (uses `isConnected`). ## How was this patch tested? None Author: Sahil Takiar <stakiar@cloudera.com> Closes #20893 from sahilTakiar/master. (cherry picked from commit 491ec114fd3886ebd9fa29a482e3d112fb5a088c) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 29 March 2018, 17:23:35 UTC |

| 38c0bd7 | Thomas Graves | 29 March 2018, 08:37:46 UTC | [SPARK-23806] Broadcast.unpersist can cause fatal exception when used… … with dynamic allocation ## What changes were proposed in this pull request? ignore errors when you are waiting for a broadcast.unpersist. This is handling it the same way as doing rdd.unpersist in https://issues.apache.org/jira/browse/SPARK-22618 ## How was this patch tested? Patch was tested manually against a couple jobs that exhibit this behavior, with the change the application no longer dies due to this and just prints the warning. Please review http://spark.apache.org/contributing.html before opening a pull request. Author: Thomas Graves <tgraves@unharmedunarmed.corp.ne1.yahoo.com> Closes #20924 from tgravescs/SPARK-23806. (cherry picked from commit 641aec68e8167546dbb922874c086c9b90198f08) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 29 March 2018, 08:38:07 UTC |