https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2

Take a new snapshot of a software origin

If the archived software origin currently browsed is not synchronized with its upstream version (for instance when new commits have been issued), you can explicitly request Software Heritage to take a new snapshot of it.

Use the form below to proceed. Once a request has been submitted and accepted, it will be processed as soon as possible. You can then check its processing state by visiting this dedicated page.

Processing "take a new snapshot" request ...

Permalinks

To reference or cite the objects present in the Software Heritage archive, permalinks based on SoftWare Hash IDentifiers (SWHIDs) must be used.

Select below a type of object currently browsed in order to display its associated SWHID and permalink.

| Revision | Author | Date | Message | Commit Date |

|---|---|---|---|---|

| 4df06b4 | Saisai Shao | 08 July 2018, 01:24:42 UTC | Preparing Spark release v2.3.2-rc1 | 08 July 2018, 01:24:42 UTC |

| 64c72b4 | hyukjinkwon | 07 July 2018, 03:37:41 UTC | [SPARK-24739][PYTHON] Make PySpark compatible with Python 3.7 ## What changes were proposed in this pull request? This PR proposes to make PySpark compatible with Python 3.7. There are rather radical change in semantic of `StopIteration` within a generator. It now throws it as a `RuntimeError`. To make it compatible, we should fix it: ```python try: next(...) except StopIteration return ``` See [release note](https://docs.python.org/3/whatsnew/3.7.html#porting-to-python-3-7) and [PEP 479](https://www.python.org/dev/peps/pep-0479/). ## How was this patch tested? Manually tested: ``` $ ./run-tests --python-executables=python3.7 Running PySpark tests. Output is in /.../spark/python/unit-tests.log Will test against the following Python executables: ['python3.7'] Will test the following Python modules: ['pyspark-core', 'pyspark-ml', 'pyspark-mllib', 'pyspark-sql', 'pyspark-streaming'] Starting test(python3.7): pyspark.mllib.tests Starting test(python3.7): pyspark.sql.tests Starting test(python3.7): pyspark.streaming.tests Starting test(python3.7): pyspark.tests Finished test(python3.7): pyspark.streaming.tests (130s) Starting test(python3.7): pyspark.accumulators Finished test(python3.7): pyspark.accumulators (8s) Starting test(python3.7): pyspark.broadcast Finished test(python3.7): pyspark.broadcast (9s) Starting test(python3.7): pyspark.conf Finished test(python3.7): pyspark.conf (6s) Starting test(python3.7): pyspark.context Finished test(python3.7): pyspark.context (27s) Starting test(python3.7): pyspark.ml.classification Finished test(python3.7): pyspark.tests (200s) ... 3 tests were skipped Starting test(python3.7): pyspark.ml.clustering Finished test(python3.7): pyspark.mllib.tests (244s) Starting test(python3.7): pyspark.ml.evaluation Finished test(python3.7): pyspark.ml.classification (63s) Starting test(python3.7): pyspark.ml.feature Finished test(python3.7): pyspark.ml.clustering (48s) Starting test(python3.7): pyspark.ml.fpm Finished test(python3.7): pyspark.ml.fpm (0s) Starting test(python3.7): pyspark.ml.image Finished test(python3.7): pyspark.ml.evaluation (23s) Starting test(python3.7): pyspark.ml.linalg.__init__ Finished test(python3.7): pyspark.ml.linalg.__init__ (0s) Starting test(python3.7): pyspark.ml.recommendation Finished test(python3.7): pyspark.ml.image (20s) Starting test(python3.7): pyspark.ml.regression Finished test(python3.7): pyspark.ml.regression (58s) Starting test(python3.7): pyspark.ml.stat Finished test(python3.7): pyspark.ml.feature (90s) Starting test(python3.7): pyspark.ml.tests Finished test(python3.7): pyspark.ml.recommendation (82s) Starting test(python3.7): pyspark.ml.tuning Finished test(python3.7): pyspark.ml.stat (27s) Starting test(python3.7): pyspark.mllib.classification Finished test(python3.7): pyspark.sql.tests (362s) ... 102 tests were skipped Starting test(python3.7): pyspark.mllib.clustering Finished test(python3.7): pyspark.ml.tuning (29s) Starting test(python3.7): pyspark.mllib.evaluation Finished test(python3.7): pyspark.mllib.classification (39s) Starting test(python3.7): pyspark.mllib.feature Finished test(python3.7): pyspark.mllib.evaluation (30s) Starting test(python3.7): pyspark.mllib.fpm Finished test(python3.7): pyspark.mllib.feature (44s) Starting test(python3.7): pyspark.mllib.linalg.__init__ Finished test(python3.7): pyspark.mllib.linalg.__init__ (0s) Starting test(python3.7): pyspark.mllib.linalg.distributed Finished test(python3.7): pyspark.mllib.clustering (78s) Starting test(python3.7): pyspark.mllib.random Finished test(python3.7): pyspark.mllib.fpm (33s) Starting test(python3.7): pyspark.mllib.recommendation Finished test(python3.7): pyspark.mllib.random (12s) Starting test(python3.7): pyspark.mllib.regression Finished test(python3.7): pyspark.mllib.linalg.distributed (45s) Starting test(python3.7): pyspark.mllib.stat.KernelDensity Finished test(python3.7): pyspark.mllib.stat.KernelDensity (0s) Starting test(python3.7): pyspark.mllib.stat._statistics Finished test(python3.7): pyspark.mllib.recommendation (41s) Starting test(python3.7): pyspark.mllib.tree Finished test(python3.7): pyspark.mllib.regression (44s) Starting test(python3.7): pyspark.mllib.util Finished test(python3.7): pyspark.mllib.stat._statistics (20s) Starting test(python3.7): pyspark.profiler Finished test(python3.7): pyspark.mllib.tree (26s) Starting test(python3.7): pyspark.rdd Finished test(python3.7): pyspark.profiler (11s) Starting test(python3.7): pyspark.serializers Finished test(python3.7): pyspark.mllib.util (24s) Starting test(python3.7): pyspark.shuffle Finished test(python3.7): pyspark.shuffle (0s) Starting test(python3.7): pyspark.sql.catalog Finished test(python3.7): pyspark.serializers (15s) Starting test(python3.7): pyspark.sql.column Finished test(python3.7): pyspark.rdd (27s) Starting test(python3.7): pyspark.sql.conf Finished test(python3.7): pyspark.sql.catalog (24s) Starting test(python3.7): pyspark.sql.context Finished test(python3.7): pyspark.sql.conf (8s) Starting test(python3.7): pyspark.sql.dataframe Finished test(python3.7): pyspark.sql.column (29s) Starting test(python3.7): pyspark.sql.functions Finished test(python3.7): pyspark.sql.context (26s) Starting test(python3.7): pyspark.sql.group Finished test(python3.7): pyspark.sql.dataframe (51s) Starting test(python3.7): pyspark.sql.readwriter Finished test(python3.7): pyspark.ml.tests (266s) Starting test(python3.7): pyspark.sql.session Finished test(python3.7): pyspark.sql.group (36s) Starting test(python3.7): pyspark.sql.streaming Finished test(python3.7): pyspark.sql.functions (57s) Starting test(python3.7): pyspark.sql.types Finished test(python3.7): pyspark.sql.session (25s) Starting test(python3.7): pyspark.sql.udf Finished test(python3.7): pyspark.sql.types (10s) Starting test(python3.7): pyspark.sql.window Finished test(python3.7): pyspark.sql.readwriter (31s) Starting test(python3.7): pyspark.streaming.util Finished test(python3.7): pyspark.sql.streaming (22s) Starting test(python3.7): pyspark.util Finished test(python3.7): pyspark.util (0s) Finished test(python3.7): pyspark.streaming.util (0s) Finished test(python3.7): pyspark.sql.udf (16s) Finished test(python3.7): pyspark.sql.window (12s) ``` In my local (I have two Macs but both have the same issues), I currently faced some issues for now to install both extra dependencies PyArrow and Pandas same as Jenkins's, against Python 3.7. Author: hyukjinkwon <gurwls223@apache.org> Closes #21714 from HyukjinKwon/SPARK-24739. (cherry picked from commit 74f6a92fcea9196d62c2d531c11ec7efd580b760) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 07 July 2018, 03:37:58 UTC |

| e5cc5f6 | Felix Cheung | 06 July 2018, 07:08:03 UTC | [SPARK-24535][SPARKR] fix tests on java check error ## What changes were proposed in this pull request? change to skip tests if - couldn't determine java version fix problem on windows ## How was this patch tested? unit test, manual, win-builder Author: Felix Cheung <felixcheung_m@hotmail.com> Closes #21666 from felixcheung/rjavaskip. (cherry picked from commit 141953f4c44dbad1c2a7059e92bec5fe770af932) Signed-off-by: Felix Cheung <felixcheung@apache.org> | 06 July 2018, 07:08:20 UTC |

| bc7ee75 | Marco Gaido | 03 July 2018, 04:20:03 UTC | [SPARK-24385][SQL] Resolve self-join condition ambiguity for EqualNullSafe ## What changes were proposed in this pull request? In Dataset.join we have a small hack for resolving ambiguity in the column name for self-joins. The current code supports only `EqualTo`. The PR extends the fix to `EqualNullSafe`. Credit for this PR should be given to daniel-shields. ## How was this patch tested? added UT Author: Marco Gaido <marcogaido91@gmail.com> Closes #21605 from mgaido91/SPARK-24385_2. (cherry picked from commit a7c8f0c8cb144a026ea21e8780107e363ceacb8d) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 03 July 2018, 04:28:58 UTC |

| 1cba050 | Rekha Joshi | 02 July 2018, 14:39:00 UTC | [SPARK-24507][DOCUMENTATION] Update streaming guide ## What changes were proposed in this pull request? Updated streaming guide for direct stream and link to integration guide. ## How was this patch tested? jekyll build Author: Rekha Joshi <rekhajoshm@gmail.com> Closes #21683 from rekhajoshm/SPARK-24507. (cherry picked from commit f599cde69506a5aedeeec449cba9a8b5ab128282) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 02 July 2018, 14:39:22 UTC |

| 3c0af79 | maryannxue | 30 June 2018, 06:46:12 UTC | [SPARK-24696][SQL] ColumnPruning rule fails to remove extra Project The ColumnPruning rule tries adding an extra Project if an input node produces fields more than needed, but as a post-processing step, it needs to remove the lower Project in the form of "Project - Filter - Project" otherwise it would conflict with PushPredicatesThroughProject and would thus cause a infinite optimization loop. The current post-processing method is defined as: ``` private def removeProjectBeforeFilter(plan: LogicalPlan): LogicalPlan = plan transform { case p1 Project(_, f Filter(_, p2 Project(_, child))) if p2.outputSet.subsetOf(child.outputSet) => p1.copy(child = f.copy(child = child)) } ``` This method works well when there is only one Filter but would not if there's two or more Filters. In this case, there is a deterministic filter and a non-deterministic filter so they stay as separate filter nodes and cannot be combined together. An simplified illustration of the optimization process that forms the infinite loop is shown below (F1 stands for the 1st filter, F2 for the 2nd filter, P for project, S for scan of relation, PredicatePushDown as abbrev. of PushPredicatesThroughProject): ``` F1 - F2 - P - S PredicatePushDown => F1 - P - F2 - S ColumnPruning => F1 - P - F2 - P - S => F1 - P - F2 - S (Project removed) PredicatePushDown => P - F1 - F2 - S ColumnPruning => P - F1 - P - F2 - S => P - F1 - P - F2 - P - S => P - F1 - F2 - P - S (only one Project removed) RemoveRedundantProject => F1 - F2 - P - S (goes back to the loop start) ``` So the problem is the ColumnPruning rule adds a Project under a Filter (and fails to remove it in the end), and that new Project triggers PushPredicateThroughProject. Once the filters have been push through the Project, a new Project will be added by the ColumnPruning rule and this goes on and on. The fix should be when adding Projects, the rule applies top-down, but later when removing extra Projects, the process should go bottom-up to ensure all extra Projects can be matched. Added a optimization rule test in ColumnPruningSuite; and a end-to-end test in SQLQuerySuite. Author: maryannxue <maryannxue@apache.org> Closes #21674 from maryannxue/spark-24696. | 30 June 2018, 06:57:09 UTC |

| 8ff4b97 | Xiao Li | 30 June 2018, 06:51:13 UTC | simplify rand in dsl/package.scala (cherry picked from commit d54d8b86301581142293341af25fd78b3278a2e8) Signed-off-by: Xiao Li <gatorsmile@gmail.com> | 30 June 2018, 06:53:00 UTC |

| 0f534d3 | Fokko Driesprong | 28 June 2018, 01:59:00 UTC | [SPARK-24603][SQL] Fix findTightestCommonType reference in comments findTightestCommonTypeOfTwo has been renamed to findTightestCommonType ## What changes were proposed in this pull request? (Please fill in changes proposed in this fix) ## How was this patch tested? (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. Author: Fokko Driesprong <fokkodriesprong@godatadriven.com> Closes #21597 from Fokko/fd-typo. (cherry picked from commit 6a97e8eb31da76fe5af912a6304c07b63735062f) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 28 June 2018, 02:00:38 UTC |

| 6e1f5e0 | Maryann Xue | 21 June 2018, 18:45:30 UTC | [SPARK-24613][SQL] Cache with UDF could not be matched with subsequent dependent caches Wrap the logical plan with a `AnalysisBarrier` for execution plan compilation in CacheManager, in order to avoid the plan being analyzed again. Add one test in `DatasetCacheSuite` Author: Maryann Xue <maryannxue@apache.org> Closes #21602 from maryannxue/cache-mismatch. | 27 June 2018, 20:20:57 UTC |

| db538b2 | Marcelo Vanzin | 25 June 2018, 23:55:41 UTC | [SPARK-24552][CORE][SQL][BRANCH-2.3] Use unique id instead of attempt number for writes . This passes a unique attempt id instead of attempt number to v2 data sources and hadoop APIs, because attempt number is reused when stages are retried. When attempt numbers are reused, sources that track data by partition id and attempt number may incorrectly clean up data because the same attempt number can be both committed and aborted. Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #21615 from vanzin/SPARK-24552-2.3. | 25 June 2018, 23:55:41 UTC |

| a1e9640 | Wenchen Fan | 21 June 2018, 22:38:46 UTC | [SPARK-24588][SS] streaming join should require HashClusteredPartitioning from children ## What changes were proposed in this pull request? In https://github.com/apache/spark/pull/19080 we simplified the distribution/partitioning framework, and make all the join-like operators require `HashClusteredDistribution` from children. Unfortunately streaming join operator was missed. This can cause wrong result. Think about ``` val input1 = MemoryStream[Int] val input2 = MemoryStream[Int] val df1 = input1.toDF.select('value as 'a, 'value * 2 as 'b) val df2 = input2.toDF.select('value as 'a, 'value * 2 as 'b).repartition('b) val joined = df1.join(df2, Seq("a", "b")).select('a) ``` The physical plan is ``` *(3) Project [a#5] +- StreamingSymmetricHashJoin [a#5, b#6], [a#10, b#11], Inner, condition = [ leftOnly = null, rightOnly = null, both = null, full = null ], state info [ checkpoint = <unknown>, runId = 54e31fce-f055-4686-b75d-fcd2b076f8d8, opId = 0, ver = 0, numPartitions = 5], 0, state cleanup [ left = null, right = null ] :- Exchange hashpartitioning(a#5, b#6, 5) : +- *(1) Project [value#1 AS a#5, (value#1 * 2) AS b#6] : +- StreamingRelation MemoryStream[value#1], [value#1] +- Exchange hashpartitioning(b#11, 5) +- *(2) Project [value#3 AS a#10, (value#3 * 2) AS b#11] +- StreamingRelation MemoryStream[value#3], [value#3] ``` The left table is hash partitioned by `a, b`, while the right table is hash partitioned by `b`. This means, we may have a matching record that is in different partitions, which should be in the output but not. ## How was this patch tested? N/A Author: Wenchen Fan <wenchen@databricks.com> Closes #21587 from cloud-fan/join. (cherry picked from commit dc8a6befa5dad861a731b4d7865f3ccf37482ae0) Signed-off-by: Xiao Li <gatorsmile@gmail.com> | 21 June 2018, 22:39:07 UTC |

| 3a4b6f3 | Marcelo Vanzin | 21 June 2018, 18:25:15 UTC | [SPARK-24589][CORE] Correctly identify tasks in output commit coordinator. When an output stage is retried, it's possible that tasks from the previous attempt are still running. In that case, there would be a new task for the same partition in the new attempt, and the coordinator would allow both tasks to commit their output since it did not keep track of stage attempts. The change adds more information to the stage state tracked by the coordinator, so that only one task is allowed to commit the output in the above case. The stage state in the coordinator is also maintained across stage retries, so that a stray speculative task from a previous stage attempt is not allowed to commit. This also removes some code added in SPARK-18113 that allowed for duplicate commit requests; with the RPC code used in Spark 2, that situation cannot happen, so there is no need to handle it. Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #21577 from vanzin/SPARK-24552. (cherry picked from commit c8e909cd498b67b121fa920ceee7631c652dac38) Signed-off-by: Thomas Graves <tgraves@apache.org> | 21 June 2018, 19:04:21 UTC |

| 8928de3 | Wenbo Zhao | 20 June 2018, 21:26:04 UTC | [SPARK-24578][CORE] Cap sub-region's size of returned nio buffer ## What changes were proposed in this pull request? This PR tries to fix the performance regression introduced by SPARK-21517. In our production job, we performed many parallel computations, with high possibility, some task could be scheduled to a host-2 where it needs to read the cache block data from host-1. Often, this big transfer makes the cluster suffer time out issue (it will retry 3 times, each with 120s timeout, and then do recompute to put the cache block into the local MemoryStore). The root cause is that we don't do `consolidateIfNeeded` anymore as we are using ``` Unpooled.wrappedBuffer(chunks.length, getChunks(): _*) ``` in ChunkedByteBuffer. If we have many small chunks, it could cause the `buf.notBuffer(...)` have very bad performance in the case that we have to call `copyByteBuf(...)` many times. ## How was this patch tested? Existing unit tests and also test in production Author: Wenbo Zhao <wzhao@twosigma.com> Closes #21593 from WenboZhao/spark-24578. (cherry picked from commit 3f4bda7289f1bfbbe8b9bc4b516007f569c44d2e) Signed-off-by: Shixiong Zhu <zsxwing@gmail.com> | 20 June 2018, 21:26:32 UTC |

| d687d97 | Maryann Xue | 19 June 2018, 22:27:20 UTC | [SPARK-24583][SQL] Wrong schema type in InsertIntoDataSourceCommand ## What changes were proposed in this pull request? Change insert input schema type: "insertRelationType" -> "insertRelationType.asNullable", in order to avoid nullable being overridden. ## How was this patch tested? Added one test in InsertSuite. Author: Maryann Xue <maryannxue@apache.org> Closes #21585 from maryannxue/spark-24583. (cherry picked from commit bc0498d5820ded2b428277e396502e74ef0ce36d) Signed-off-by: Xiao Li <gatorsmile@gmail.com> | 19 June 2018, 22:27:30 UTC |

| 50cdb41 | Xiao Li | 19 June 2018, 03:17:04 UTC | [SPARK-24542][SQL] UDF series UDFXPathXXXX allow users to pass carefully crafted XML to access arbitrary files ## What changes were proposed in this pull request? UDF series UDFXPathXXXX allow users to pass carefully crafted XML to access arbitrary files. Spark does not have built-in access control. When users use the external access control library, users might bypass them and access the file contents. This PR basically patches the Hive fix to Apache Spark. https://issues.apache.org/jira/browse/HIVE-18879 ## How was this patch tested? A unit test case Author: Xiao Li <gatorsmile@gmail.com> Closes #21549 from gatorsmile/xpathSecurity. (cherry picked from commit 9a75c18290fff7d116cf88a44f9120bf67d8bd27) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 19 June 2018, 03:17:32 UTC |

| b8dbfcc | Fabrizio Cucci | 18 June 2018, 21:40:24 UTC | Fix issue in 'docker-image-tool.sh' Because of the missing assignment of the variable `BUILD_ARGS` the command `./bin/docker-image-tool.sh -r docker.io/myrepo -t v2.3.1 build` fails: ``` docker build" requires exactly 1 argument. See 'docker build --help'. Usage: docker build [OPTIONS] PATH | URL | - [flags] Build an image from a Dockerfile ``` This has been fixed on the `master` already but, apparently, it has not been ported back to the branch `2.3`, leading to the same error even on the latest `2.3.1` release (dated 8 June 2018). Author: Fabrizio Cucci <fabrizio.cucci@gmail.com> Closes #21551 from fabriziocucci/patch-1. | 18 June 2018, 21:40:24 UTC |

| 9d63e54 | Fangshi Li | 12 June 2018, 19:10:08 UTC | [SPARK-24216][SQL] Spark TypedAggregateExpression uses getSimpleName that is not safe in scala When user create a aggregator object in scala and pass the aggregator to Spark Dataset's agg() method, Spark's will initialize TypedAggregateExpression with the nodeName field as aggregator.getClass.getSimpleName. However, getSimpleName is not safe in scala environment, depending on how user creates the aggregator object. For example, if the aggregator class full qualified name is "com.my.company.MyUtils$myAgg$2$", the getSimpleName will throw java.lang.InternalError "Malformed class name". This has been reported in scalatest https://github.com/scalatest/scalatest/pull/1044 and discussed in many scala upstream jiras such as SI-8110, SI-5425. To fix this issue, we follow the solution in https://github.com/scalatest/scalatest/pull/1044 to add safer version of getSimpleName as a util method, and TypedAggregateExpression will invoke this util method rather than getClass.getSimpleName. added unit test Author: Fangshi Li <fli@linkedin.com> Closes #21276 from fangshil/SPARK-24216. (cherry picked from commit cc88d7fad16e8b5cbf7b6b9bfe412908782b4a45) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 16 June 2018, 03:23:05 UTC |

| d426104 | Kazuaki Ishizaki | 15 June 2018, 20:47:48 UTC | [SPARK-24452][SQL][CORE] Avoid possible overflow in int add or multiple This PR fixes possible overflow in int add or multiply. In particular, their overflows in multiply are detected by [Spotbugs](https://spotbugs.github.io/) The following assignments may cause overflow in right hand side. As a result, the result may be negative. ``` long = int * int long = int + int ``` To avoid this problem, this PR performs cast from int to long in right hand side. Existing UTs. Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com> Closes #21481 from kiszk/SPARK-24452. (cherry picked from commit 90da7dc241f8eec2348c0434312c97c116330bc4) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 15 June 2018, 20:49:04 UTC |

| a7d378e | Marco Gaido | 13 June 2018, 22:18:19 UTC | [SPARK-24531][TESTS] Replace 2.3.0 version with 2.3.1 The PR updates the 2.3 version tested to the new release 2.3.1. existing UTs Author: Marco Gaido <marcogaido91@gmail.com> Closes #21543 from mgaido91/patch-1. (cherry picked from commit 3bf76918fb67fb3ee9aed254d4fb3b87a7e66117) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 15 June 2018, 16:42:24 UTC |

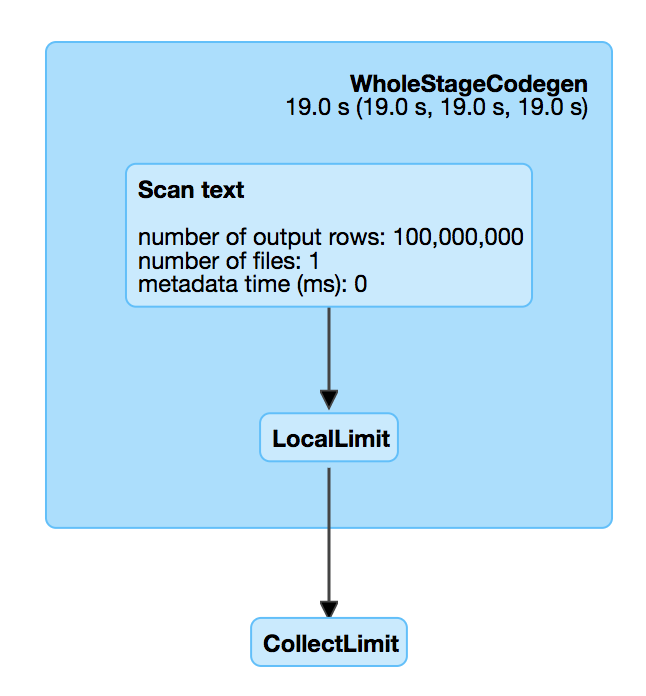

| d3255a5 | Wenchen Fan | 15 June 2018, 12:33:17 UTC | revert [SPARK-21743][SQL] top-most limit should not cause memory leak ## What changes were proposed in this pull request? There is a performance regression in Spark 2.3. When we read a big compressed text file which is un-splittable(e.g. gz), and then take the first record, Spark will scan all the data in the text file which is very slow. For example, `spark.read.text("/tmp/test.csv.gz").head(1)`, we can check out the SQL UI and see that the file is fully scanned.  This is introduced by #18955 , which adds a LocalLimit to the query when executing `Dataset.head`. The foundamental problem is, `Limit` is not well whole-stage-codegened. It keeps consuming the input even if we have already hit the limitation. However, if we just fix LIMIT whole-stage-codegen, the memory leak test will fail, as we don't fully consume the inputs to trigger the resource cleanup. To fix it completely, we should do the following 1. fix LIMIT whole-stage-codegen, stop consuming inputs after hitting the limitation. 2. in whole-stage-codegen, provide a way to release resource of the parant operator, and apply it in LIMIT 3. automatically release resource when task ends. Howere this is a non-trivial change, and is risky to backport to Spark 2.3. This PR proposes to revert #18955 in Spark 2.3. The memory leak is not a big issue. When task ends, Spark will release all the pages allocated by this task, which is kind of releasing most of the resources. I'll submit a exhaustive fix to master later. ## How was this patch tested? N/A Author: Wenchen Fan <wenchen@databricks.com> Closes #21573 from cloud-fan/limit. | 15 June 2018, 12:33:17 UTC |

| 7f1708a | Ruben Berenguel Montoro | 15 June 2018, 08:59:00 UTC | [PYTHON] Fix typo in serializer exception ## What changes were proposed in this pull request? Fix typo in exception raised in Python serializer ## How was this patch tested? No code changes Please review http://spark.apache.org/contributing.html before opening a pull request. Author: Ruben Berenguel Montoro <ruben@mostlymaths.net> Closes #21566 from rberenguel/fix_typo_pyspark_serializers. (cherry picked from commit 6567fc43aca75b41900cde976594e21c8b0ca98a) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 15 June 2018, 08:59:21 UTC |

| e6bf325 | Marco Gaido | 14 June 2018, 16:20:41 UTC | [SPARK-24495][SQL] EnsureRequirement returns wrong plan when reordering equal keys `EnsureRequirement` in its `reorder` method currently assumes that the same key appears only once in the join condition. This of course might not be the case, and when it is not satisfied, it returns a wrong plan which produces a wrong result of the query. added UT Author: Marco Gaido <marcogaido91@gmail.com> Closes #21529 from mgaido91/SPARK-24495. (cherry picked from commit fdadc4be08dcf1a06383bbb05e53540da2092c63) Signed-off-by: Xiao Li <gatorsmile@gmail.com> | 14 June 2018, 16:22:16 UTC |

| a2f65eb | Xingbo Jiang | 14 June 2018, 06:20:48 UTC | [MINOR][CORE][TEST] Remove unnecessary sort in UnsafeInMemorySorterSuite ## What changes were proposed in this pull request? We don't require specific ordering of the input data, the sort action is not necessary and misleading. ## How was this patch tested? Existing test suite. Author: Xingbo Jiang <xingbo.jiang@databricks.com> Closes #21536 from jiangxb1987/sorterSuite. (cherry picked from commit 534065efeb51ff0d308fa6cc9dea0715f8ce25ad) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 14 June 2018, 06:22:11 UTC |

| 470cacd | edorigatti | 13 June 2018, 01:06:06 UTC | [SPARK-23754][PYTHON][FOLLOWUP][BACKPORT-2.3] Move UDF stop iteration wrapping from driver to executor SPARK-23754 was fixed in #21383 by changing the UDF code to wrap the user function, but this required a hack to save its argspec. This PR reverts this change and fixes the `StopIteration` bug in the worker. The root of the problem is that when an user-supplied function raises a `StopIteration`, pyspark might stop processing data, if this function is used in a for-loop. The solution is to catch `StopIteration`s exceptions and re-raise them as `RuntimeError`s, so that the execution fails and the error is reported to the user. This is done using the `fail_on_stopiteration` wrapper, in different ways depending on where the function is used: - In RDDs, the user function is wrapped in the driver, because this function is also called in the driver itself. - In SQL UDFs, the function is wrapped in the worker, since all processing happens there. Moreover, the worker needs the signature of the user function, which is lost when wrapping it, but passing this signature to the worker requires a not so nice hack. HyukjinKwon Author: edorigatti <emilio.dorigatti@gmail.com> Author: e-dorigatti <emilio.dorigatti@gmail.com> Closes #21538 from e-dorigatti/branch-2.3. | 13 June 2018, 01:06:06 UTC |

| a55de38 | Marco Gaido | 12 June 2018, 23:42:44 UTC | [SPARK-24506][UI] Add UI filters to tabs added after binding ## What changes were proposed in this pull request? Currently, `spark.ui.filters` are not applied to the handlers added after binding the server. This means that every page which is added after starting the UI will not have the filters configured on it. This can allow unauthorized access to the pages. The PR adds the filters also to the handlers added after the UI starts. ## How was this patch tested? manual tests (without the patch, starting the thriftserver with `--conf spark.ui.filters=org.apache.hadoop.security.authentication.server.AuthenticationFilter --conf spark.org.apache.hadoop.security.authentication.server.AuthenticationFilter.params="type=simple"` you can access `http://localhost:4040/sqlserver`; with the patch, 401 is the response as for the other pages). Author: Marco Gaido <marcogaido91@gmail.com> Closes #21523 from mgaido91/SPARK-24506. (cherry picked from commit f53818d35bdef5d20a2718b14a2fed4c468545c6) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 12 June 2018, 23:42:56 UTC |

| 63e1da1 | Marco Gaido | 12 June 2018, 16:56:35 UTC | [SPARK-24531][TESTS] Remove version 2.2.0 from testing versions in HiveExternalCatalogVersionsSuite ## What changes were proposed in this pull request? Removing version 2.2.0 from testing versions in HiveExternalCatalogVersionsSuite as it is not present anymore in the mirrors and this is blocking all the open PRs. ## How was this patch tested? running UTs Author: Marco Gaido <marcogaido91@gmail.com> Closes #21540 from mgaido91/SPARK-24531. (cherry picked from commit 2824f1436bb0371b7216730455f02456ef8479ce) Signed-off-by: Xiao Li <gatorsmile@gmail.com> | 12 June 2018, 16:56:48 UTC |

| bf58687 | Wenchen Fan | 12 June 2018, 05:08:44 UTC | [SPARK-24502][SQL] flaky test: UnsafeRowSerializerSuite ## What changes were proposed in this pull request? `UnsafeRowSerializerSuite` calls `UnsafeProjection.create` which accesses `SQLConf.get`, while the current active SparkSession may already be stopped, and we may hit exception like this ``` sbt.ForkMain$ForkError: java.lang.IllegalStateException: LiveListenerBus is stopped. at org.apache.spark.scheduler.LiveListenerBus.addToQueue(LiveListenerBus.scala:97) at org.apache.spark.scheduler.LiveListenerBus.addToStatusQueue(LiveListenerBus.scala:80) at org.apache.spark.sql.internal.SharedState.<init>(SharedState.scala:93) at org.apache.spark.sql.SparkSession$$anonfun$sharedState$1.apply(SparkSession.scala:120) at org.apache.spark.sql.SparkSession$$anonfun$sharedState$1.apply(SparkSession.scala:120) at scala.Option.getOrElse(Option.scala:121) at org.apache.spark.sql.SparkSession.sharedState$lzycompute(SparkSession.scala:120) at org.apache.spark.sql.SparkSession.sharedState(SparkSession.scala:119) at org.apache.spark.sql.internal.BaseSessionStateBuilder.build(BaseSessionStateBuilder.scala:286) at org.apache.spark.sql.test.TestSparkSession.sessionState$lzycompute(TestSQLContext.scala:42) at org.apache.spark.sql.test.TestSparkSession.sessionState(TestSQLContext.scala:41) at org.apache.spark.sql.SparkSession$$anonfun$1$$anonfun$apply$1.apply(SparkSession.scala:95) at org.apache.spark.sql.SparkSession$$anonfun$1$$anonfun$apply$1.apply(SparkSession.scala:95) at scala.Option.map(Option.scala:146) at org.apache.spark.sql.SparkSession$$anonfun$1.apply(SparkSession.scala:95) at org.apache.spark.sql.SparkSession$$anonfun$1.apply(SparkSession.scala:94) at org.apache.spark.sql.internal.SQLConf$.get(SQLConf.scala:126) at org.apache.spark.sql.catalyst.expressions.CodeGeneratorWithInterpretedFallback.createObject(CodeGeneratorWithInterpretedFallback.scala:54) at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.create(Projection.scala:157) at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.create(Projection.scala:150) at org.apache.spark.sql.execution.UnsafeRowSerializerSuite.org$apache$spark$sql$execution$UnsafeRowSerializerSuite$$unsafeRowConverter(UnsafeRowSerializerSuite.scala:54) at org.apache.spark.sql.execution.UnsafeRowSerializerSuite.org$apache$spark$sql$execution$UnsafeRowSerializerSuite$$toUnsafeRow(UnsafeRowSerializerSuite.scala:49) at org.apache.spark.sql.execution.UnsafeRowSerializerSuite$$anonfun$2.apply(UnsafeRowSerializerSuite.scala:63) at org.apache.spark.sql.execution.UnsafeRowSerializerSuite$$anonfun$2.apply(UnsafeRowSerializerSuite.scala:60) ... ``` ## How was this patch tested? N/A Author: Wenchen Fan <wenchen@databricks.com> Closes #21518 from cloud-fan/test. (cherry picked from commit 01452ea9c75ff027ceeb8314368c6bbedefdb2bf) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 12 June 2018, 05:09:18 UTC |

| 4d4548a | Marcelo Vanzin | 12 June 2018, 01:32:14 UTC | [SPARK-23732][DOCS] Fix source links in generated scaladoc. Apply the suggestion on the bug to fix source links. Tested with the 2.3.1 release docs. Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #21521 from vanzin/SPARK-23732. | 12 June 2018, 01:33:30 UTC |

| 1582945 | Marco Gaido | 09 June 2018, 01:51:56 UTC | [SPARK-24468][SQL] Handle negative scale when adjusting precision for decimal operations ## What changes were proposed in this pull request? In SPARK-22036 we introduced the possibility to allow precision loss in arithmetic operations (according to the SQL standard). The implementation was drawn from Hive's one, where Decimals with a negative scale are not allowed in the operations. The PR handles the case when the scale is negative, removing the assertion that it is not. ## How was this patch tested? added UTs Author: Marco Gaido <marcogaido91@gmail.com> Closes #21499 from mgaido91/SPARK-24468. (cherry picked from commit f07c5064a3967cdddf57c2469635ee50a26d864c) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 09 June 2018, 01:52:13 UTC |

| 36f1d5e | Wenchen Fan | 04 June 2018, 04:57:42 UTC | [SPARK-24369][SQL] Correct handling for multiple distinct aggregations having the same argument set ## What changes were proposed in this pull request? bring back https://github.com/apache/spark/pull/21443 This is a different approach: just change the check to count distinct columns with `toSet` ## How was this patch tested? a new test to verify the planner behavior. Author: Wenchen Fan <wenchen@databricks.com> Author: Takeshi Yamamuro <yamamuro@apache.org> Closes #21487 from cloud-fan/back. (cherry picked from commit 416cd1fd96c0db9194e32ba877b1396b6dc13c8e) Signed-off-by: Xiao Li <gatorsmile@gmail.com> | 04 June 2018, 04:57:58 UTC |

| 1819454 | xueyu | 04 June 2018, 01:10:49 UTC | [SPARK-24455][CORE] fix typo in TaskSchedulerImpl comment change runTasks to submitTasks in the TaskSchedulerImpl.scala 's comment Author: xueyu <xueyu@yidian-inc.com> Author: Xue Yu <278006819@qq.com> Closes #21485 from xueyumusic/fixtypo1. (cherry picked from commit a2166ecddaec030f78acaa66ce660d979a35079c) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 04 June 2018, 01:11:09 UTC |

| 21800b8 | Marcelo Vanzin | 01 June 2018, 20:34:24 UTC | Preparing development version 2.3.2-SNAPSHOT | 01 June 2018, 20:34:24 UTC |

| 30aaa5a | Marcelo Vanzin | 01 June 2018, 20:34:19 UTC | Preparing Spark release v2.3.1-rc4 | 01 June 2018, 20:34:19 UTC |

| e4e96f9 | Xiao Li | 01 June 2018, 18:52:20 UTC | Revert "[SPARK-24369][SQL] Correct handling for multiple distinct aggregations having the same argument set" This reverts commit 66289a3e067769cb8ed35953187f6363463791e1. | 01 June 2018, 18:52:20 UTC |

| 2e0c346 | Marcelo Vanzin | 01 June 2018, 17:56:29 UTC | Preparing development version 2.3.2-SNAPSHOT | 01 June 2018, 17:56:29 UTC |

| 1cc5f68 | Marcelo Vanzin | 01 June 2018, 17:56:26 UTC | Preparing Spark release v2.3.1-rc3 | 01 June 2018, 17:56:26 UTC |

| e56266a | Bryan Cutler | 01 June 2018, 06:27:10 UTC | [SPARK-24444][DOCS][PYTHON][BRANCH-2.3] Improve Pandas UDF docs to explain column assignment ## What changes were proposed in this pull request? Added sections to pandas_udf docs, in the grouped map section, to indicate columns are assigned by position. Backported to branch-2.3. ## How was this patch tested? NA Author: Bryan Cutler <cutlerb@gmail.com> Closes #21478 from BryanCutler/arrow-doc-pandas_udf-column_by_pos-2_3_1-SPARK-21427. | 01 June 2018, 06:27:10 UTC |

| b37e76f | Marcelo Vanzin | 31 May 2018, 17:05:20 UTC | [SPARK-24414][UI] Calculate the correct number of tasks for a stage. This change takes into account all non-pending tasks when calculating the number of tasks to be shown. This also means that when the stage is pending, the task table (or, in fact, most of the data in the stage page) will not be rendered. I also fixed the label when the known number of tasks is larger than the recorded number of tasks (it was inverted). Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #21457 from vanzin/SPARK-24414. (cherry picked from commit 7a82e93b349b4f414f2075dd5add8e4ed72fe357) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 31 May 2018, 17:05:33 UTC |

| dc24da2 | Sean Owen | 31 May 2018, 16:34:39 UTC | [WEBUI] Avoid possibility of script in query param keys As discussed separately, this avoids the possibility of XSS on certain request param keys. CC vanzin Author: Sean Owen <srowen@gmail.com> Closes #21464 from srowen/XSS2. (cherry picked from commit 698b9a0981f0ec322e15d6ac89cc38c8f49ed33d) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 31 May 2018, 16:35:04 UTC |

| 3a024a4 | hyukjinkwon | 30 May 2018, 17:33:34 UTC | [SPARK-24384][PYTHON][SPARK SUBMIT] Add .py files correctly into PythonRunner in submit with client mode in spark-submit ## What changes were proposed in this pull request? In client side before context initialization specifically, .py file doesn't work in client side before context initialization when the application is a Python file. See below: ``` $ cat /home/spark/tmp.py def testtest(): return 1 ``` This works: ``` $ cat app.py import pyspark pyspark.sql.SparkSession.builder.getOrCreate() import tmp print("************************%s" % tmp.testtest()) $ ./bin/spark-submit --master yarn --deploy-mode client --py-files /home/spark/tmp.py app.py ... ************************1 ``` but this doesn't: ``` $ cat app.py import pyspark import tmp pyspark.sql.SparkSession.builder.getOrCreate() print("************************%s" % tmp.testtest()) $ ./bin/spark-submit --master yarn --deploy-mode client --py-files /home/spark/tmp.py app.py Traceback (most recent call last): File "/home/spark/spark/app.py", line 2, in <module> import tmp ImportError: No module named tmp ``` ### How did it happen? In client mode specifically, the paths are being added into PythonRunner as are: https://github.com/apache/spark/blob/628c7b517969c4a7ccb26ea67ab3dd61266073ca/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala#L430 https://github.com/apache/spark/blob/628c7b517969c4a7ccb26ea67ab3dd61266073ca/core/src/main/scala/org/apache/spark/deploy/PythonRunner.scala#L49-L88 The problem here is, .py file shouldn't be added as are since `PYTHONPATH` expects a directory or an archive like zip or egg. ### How does this PR fix? We shouldn't simply just add its parent directory because other files in the parent directory could also be added into the `PYTHONPATH` in client mode before context initialization. Therefore, we copy .py files into a temp directory for .py files and add it to `PYTHONPATH`. ## How was this patch tested? Unit tests are added and manually tested in both standalond and yarn client modes with submit. Author: hyukjinkwon <gurwls223@apache.org> Closes #21426 from HyukjinKwon/SPARK-24384. (cherry picked from commit b142157dcc7f595eea93d66dda8b1d169a38d95c) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 30 May 2018, 17:33:45 UTC |

| e1c0ab1 | e-dorigatti | 30 May 2018, 16:46:09 UTC | [SPARK-23754][BRANCH-2.3][PYTHON] Re-raising StopIteration in client code ## What changes are proposed Make sure that `StopIteration`s raised in users' code do not silently interrupt processing by spark, but are raised as exceptions to the users. The users' functions are wrapped in `safe_iter` (in `shuffle.py`), which re-raises `StopIteration`s as `RuntimeError`s ## How were the changes tested Unit tests, making sure that the exceptions are indeed raised. I am not sure how to check whether a `Py4JJavaError` contains my exception, so I simply looked for the exception message in the java exception's `toString`. Can you propose a better way? This is my original work, licensed in the same way as spark --- Author: e-dorigatti <emilio.dorigattigmail.com> Closes #21383 from e-dorigatti/fix_spark_23754. (cherry picked from commit 0ebb0c0d4dd3e192464dc5e0e6f01efa55b945ed) Author: e-dorigatti <emilio.dorigatti@gmail.com> Closes #21463 from e-dorigatti/branch-2.3. | 30 May 2018, 16:46:09 UTC |

| 66289a3 | Takeshi Yamamuro | 30 May 2018, 16:23:25 UTC | [SPARK-24369][SQL] Correct handling for multiple distinct aggregations having the same argument set ## What changes were proposed in this pull request? This pr fixed an issue when having multiple distinct aggregations having the same argument set, e.g., ``` scala>: paste val df = sql( s"""SELECT corr(DISTINCT x, y), corr(DISTINCT y, x), count(*) | FROM (VALUES (1, 1), (2, 2), (2, 2)) t(x, y) """.stripMargin) java.lang.RuntimeException You hit a query analyzer bug. Please report your query to Spark user mailing list. ``` The root cause is that `RewriteDistinctAggregates` can't detect multiple distinct aggregations if they have the same argument set. This pr modified code so that `RewriteDistinctAggregates` could count the number of aggregate expressions with `isDistinct=true`. ## How was this patch tested? Added tests in `DataFrameAggregateSuite`. Author: Takeshi Yamamuro <yamamuro@apache.org> Closes #21443 from maropu/SPARK-24369. (cherry picked from commit 1e46f92f956a00d04d47340489b6125d44dbd47b) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 30 May 2018, 16:24:14 UTC |

| 49a6c2b | Gabor Somogyi | 29 May 2018, 12:10:59 UTC | [SPARK-23991][DSTREAMS] Fix data loss when WAL write fails in allocateBlocksToBatch When blocks tried to get allocated to a batch and WAL write fails then the blocks will be removed from the received block queue. This fact simply produces data loss because the next allocation will not find the mentioned blocks in the queue. In this PR blocks will be removed from the received queue only if WAL write succeded. Additional unit test. Author: Gabor Somogyi <gabor.g.somogyi@gmail.com> Closes #21430 from gaborgsomogyi/SPARK-23991. Change-Id: I5ead84f0233f0c95e6d9f2854ac2ff6906f6b341 (cherry picked from commit aca65c63cb12073eb193fe08998994c60acb8b58) Signed-off-by: jerryshao <sshao@hortonworks.com> | 29 May 2018, 12:11:14 UTC |

| fec43fe | Dongjoon Hyun | 29 May 2018, 02:35:30 UTC | [SPARK-19613][SS][TEST] Random.nextString is not safe for directory namePrefix ## What changes were proposed in this pull request? `Random.nextString` is good for generating random string data, but it's not proper for directory name prefix in `Utils.createDirectory(tempDir, Random.nextString(10))`. This PR uses more safe directory namePrefix. ```scala scala> scala.util.Random.nextString(10) res0: String = 馨쭔ᎰႻ穚䃈兩㻞藑並 ``` ```scala StateStoreRDDSuite: - versioning and immutability - recovering from files - usage with iterators - only gets and only puts - preferred locations using StateStoreCoordinator *** FAILED *** java.io.IOException: Failed to create a temp directory (under /.../spark/sql/core/target/tmp/StateStoreRDDSuite8712796397908632676) after 10 attempts! at org.apache.spark.util.Utils$.createDirectory(Utils.scala:295) at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13$$anonfun$apply$6.apply(StateStoreRDDSuite.scala:152) at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13$$anonfun$apply$6.apply(StateStoreRDDSuite.scala:149) at org.apache.spark.sql.catalyst.util.package$.quietly(package.scala:42) at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13.apply(StateStoreRDDSuite.scala:149) at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13.apply(StateStoreRDDSuite.scala:149) ... - distributed test *** FAILED *** java.io.IOException: Failed to create a temp directory (under /.../spark/sql/core/target/tmp/StateStoreRDDSuite8712796397908632676) after 10 attempts! at org.apache.spark.util.Utils$.createDirectory(Utils.scala:295) ``` ## How was this patch tested? Pass the existing tests.StateStoreRDDSuite: Author: Dongjoon Hyun <dongjoon@apache.org> Closes #21446 from dongjoon-hyun/SPARK-19613. (cherry picked from commit b31b587cd091010337378cf448fd598c37757053) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 29 May 2018, 02:35:46 UTC |

| a9700cb | Marco Gaido | 28 May 2018, 04:09:44 UTC | [SPARK-24373][SQL] Add AnalysisBarrier to RelationalGroupedDataset's and KeyValueGroupedDataset's child When we create a `RelationalGroupedDataset` or a `KeyValueGroupedDataset` we set its child to the `logicalPlan` of the `DataFrame` we need to aggregate. Since the `logicalPlan` is already analyzed, we should not analyze it again. But this happens when the new plan of the aggregate is analyzed. The current behavior in most of the cases is likely to produce no harm, but in other cases re-analyzing an analyzed plan can change it, since the analysis is not idempotent. This can cause issues like the one described in the JIRA (missing to find a cached plan). The PR adds an `AnalysisBarrier` to the `logicalPlan` which is used as child of `RelationalGroupedDataset` or a `KeyValueGroupedDataset`. added UT Author: Marco Gaido <marcogaido91@gmail.com> Closes #21432 from mgaido91/SPARK-24373. (cherry picked from commit de01a8d50c9c3e196591db057d544f5d7b24d95f) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 28 May 2018, 05:55:04 UTC |

| 8bb6c22 | Bryan Cutler | 28 May 2018, 04:56:05 UTC | [SPARK-24392][PYTHON] Label pandas_udf as Experimental The pandas_udf functionality was introduced in 2.3.0, but is not completely stable and still evolving. This adds a label to indicate it is still an experimental API. NA Author: Bryan Cutler <cutlerb@gmail.com> Closes #21435 from BryanCutler/arrow-pandas_udf-experimental-SPARK-24392. (cherry picked from commit fa2ae9d2019f839647d17932d8fea769e7622777) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 28 May 2018, 04:57:18 UTC |

| 9b0f6f5 | Li Jin | 28 May 2018, 02:50:17 UTC | [SPARK-24334] Fix race condition in ArrowPythonRunner causes unclean shutdown of Arrow memory allocator ## What changes were proposed in this pull request? There is a race condition of closing Arrow VectorSchemaRoot and Allocator in the writer thread of ArrowPythonRunner. The race results in memory leak exception when closing the allocator. This patch removes the closing routine from the TaskCompletionListener and make the writer thread responsible for cleaning up the Arrow memory. This issue be reproduced by this test: ``` def test_memory_leak(self): from pyspark.sql.functions import pandas_udf, col, PandasUDFType, array, lit, explode # Have all data in a single executor thread so it can trigger the race condition easier with self.sql_conf({'spark.sql.shuffle.partitions': 1}): df = self.spark.range(0, 1000) df = df.withColumn('id', array([lit(i) for i in range(0, 300)])) \ .withColumn('id', explode(col('id'))) \ .withColumn('v', array([lit(i) for i in range(0, 1000)])) pandas_udf(df.schema, PandasUDFType.GROUPED_MAP) def foo(pdf): xxx return pdf result = df.groupby('id').apply(foo) with QuietTest(self.sc): with self.assertRaises(py4j.protocol.Py4JJavaError) as context: result.count() self.assertTrue('Memory leaked' not in str(context.exception)) ``` Note: Because of the race condition, the test case cannot reproduce the issue reliably so it's not added to test cases. ## How was this patch tested? Because of the race condition, the bug cannot be unit test easily. So far it has only happens on large amount of data. This is currently tested manually. Author: Li Jin <ice.xelloss@gmail.com> Closes #21397 from icexelloss/SPARK-24334-arrow-memory-leak. (cherry picked from commit 672209f2909a95e891f3c779bfb2f0e534239851) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 28 May 2018, 02:50:42 UTC |

| a06fc45 | Yuming Wang | 26 May 2018, 12:26:00 UTC | [SPARK-19112][CORE][FOLLOW-UP] Add missing shortCompressionCodecNames to configuration. ## What changes were proposed in this pull request? Spark provides four codecs: `lz4`, `lzf`, `snappy`, and `zstd`. This pr add missing shortCompressionCodecNames to configuration. ## How was this patch tested? manually tested Author: Yuming Wang <yumwang@ebay.com> Closes #21431 from wangyum/SPARK-19112. (cherry picked from commit ed1a65448f228776afe2e5c6b1ac4228d2ed2854) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 26 May 2018, 12:26:24 UTC |

| 54aeae7 | Marco Gaido | 25 May 2018, 19:49:06 UTC | [MINOR] Add port SSL config in toString and scaladoc ## What changes were proposed in this pull request? SPARK-17874 introduced a new configuration to set the port where SSL services bind to. We missed to update the scaladoc and the `toString` method, though. The PR adds it in the missing places ## How was this patch tested? checked the `toString` output in the logs Author: Marco Gaido <marcogaido91@gmail.com> Closes #21429 from mgaido91/minor_ssl. (cherry picked from commit fd315f5884c03c6dd21abca178897584dee83f1a) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 25 May 2018, 19:49:19 UTC |

| d0f30e3 | Yuming Wang | 24 May 2018, 15:38:50 UTC | [SPARK-24378][SQL] Fix date_trunc function incorrect examples ## What changes were proposed in this pull request? Fix `date_trunc` function incorrect examples. ## How was this patch tested? N/A Author: Yuming Wang <yumwang@ebay.com> Closes #21423 from wangyum/SPARK-24378. | 24 May 2018, 15:42:20 UTC |

| f48d624 | Ryan Blue | 24 May 2018, 12:55:26 UTC | [SPARK-24230][SQL] Fix SpecificParquetRecordReaderBase with dictionary filters. ## What changes were proposed in this pull request? I missed this commit when preparing #21070. When Parquet is able to filter blocks with dictionary filtering, the expected total value count to be too high in Spark, leading to an error when there were fewer than expected row groups to process. Spark should get the row groups from Parquet to pick up new filter schemes in Parquet like dictionary filtering. ## How was this patch tested? Using in production at Netflix. Added test case for dictionary-filtered blocks. Author: Ryan Blue <blue@apache.org> Closes #21295 from rdblue/SPARK-24230-fix-parquet-block-tracking. (cherry picked from commit 3469f5c989e686866051382a3a28b2265619cab9) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 24 May 2018, 13:00:44 UTC |

| 068c4ae | hyukjinkwon | 24 May 2018, 05:21:02 UTC | [SPARK-24364][SS] Prevent InMemoryFileIndex from failing if file path doesn't exist ## What changes were proposed in this pull request? This PR proposes to follow up https://github.com/apache/spark/pull/15153 and complete SPARK-17599. `FileSystem` operation (`fs.getFileBlockLocations`) can still fail if the file path does not exist. For example see the exception message below: ``` Error occurred while processing: File does not exist: /rel/00171151/input/PJ/part-00136-b6403bac-a240-44f8-a792-fc2e174682b7-c000.csv ... java.io.FileNotFoundException: File does not exist: /rel/00171151/input/PJ/part-00136-b6403bac-a240-44f8-a792-fc2e174682b7-c000.csv ... org.apache.hadoop.hdfs.DistributedFileSystem.getFileBlockLocations(DistributedFileSystem.java:249) at org.apache.hadoop.hdfs.DistributedFileSystem.getFileBlockLocations(DistributedFileSystem.java:229) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$$anonfun$org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$listLeafFiles$3.apply(InMemoryFileIndex.scala:314) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$$anonfun$org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$listLeafFiles$3.apply(InMemoryFileIndex.scala:297) at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234) at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234) at scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33) at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186) at scala.collection.TraversableLike$class.map(TraversableLike.scala:234) at scala.collection.mutable.ArrayOps$ofRef.map(ArrayOps.scala:186) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$.org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$listLeafFiles(InMemoryFileIndex.scala:297) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$$anonfun$org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$bulkListLeafFiles$1.apply(InMemoryFileIndex.scala:174) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$$anonfun$org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$bulkListLeafFiles$1.apply(InMemoryFileIndex.scala:173) at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234) at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234) at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59) at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48) at scala.collection.TraversableLike$class.map(TraversableLike.scala:234) at scala.collection.AbstractTraversable.map(Traversable.scala:104) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$.org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$bulkListLeafFiles(InMemoryFileIndex.scala:173) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex.listLeafFiles(InMemoryFileIndex.scala:126) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex.refresh0(InMemoryFileIndex.scala:91) at org.apache.spark.sql.execution.datasources.InMemoryFileIndex.<init>(InMemoryFileIndex.scala:67) at org.apache.spark.sql.execution.datasources.DataSource.tempFileIndex$lzycompute$1(DataSource.scala:161) at org.apache.spark.sql.execution.datasources.DataSource.org$apache$spark$sql$execution$datasources$DataSource$$tempFileIndex$1(DataSource.scala:152) at org.apache.spark.sql.execution.datasources.DataSource.getOrInferFileFormatSchema(DataSource.scala:166) at org.apache.spark.sql.execution.datasources.DataSource.sourceSchema(DataSource.scala:261) at org.apache.spark.sql.execution.datasources.DataSource.sourceInfo$lzycompute(DataSource.scala:94) at org.apache.spark.sql.execution.datasources.DataSource.sourceInfo(DataSource.scala:94) at org.apache.spark.sql.execution.streaming.StreamingRelation$.apply(StreamingRelation.scala:33) at org.apache.spark.sql.streaming.DataStreamReader.load(DataStreamReader.scala:196) at org.apache.spark.sql.streaming.DataStreamReader.load(DataStreamReader.scala:206) at com.hwx.StreamTest$.main(StreamTest.scala:97) at com.hwx.StreamTest.main(StreamTest.scala) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52) at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:906) at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:197) at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:227) at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:136) at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala) Caused by: org.apache.hadoop.ipc.RemoteException(java.io.FileNotFoundException): File does not exist: /rel/00171151/input/PJ/part-00136-b6403bac-a240-44f8-a792-fc2e174682b7-c000.csv ... ``` So, it fixes it to make a warning instead. ## How was this patch tested? It's hard to write a test. Manually tested multiple times. Author: hyukjinkwon <gurwls223@apache.org> Closes #21408 from HyukjinKwon/missing-files. (cherry picked from commit 8a545822d0cc3a866ef91a94e58ea5c8b1014007) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 24 May 2018, 05:21:25 UTC |

| 75e2cd1 | Dongjoon Hyun | 24 May 2018, 03:34:13 UTC | [SPARK-24322][BUILD] Upgrade Apache ORC to 1.4.4 ORC 1.4.4 includes [nine fixes](https://issues.apache.org/jira/issues/?filter=12342568&jql=project%20%3D%20ORC%20AND%20resolution%20%3D%20Fixed%20AND%20fixVersion%20%3D%201.4.4). One of the issues is about `Timestamp` bug (ORC-306) which occurs when `native` ORC vectorized reader reads ORC column vector's sub-vector `times` and `nanos`. ORC-306 fixes this according to the [original definition](https://github.com/apache/hive/blob/master/storage-api/src/java/org/apache/hadoop/hive/ql/exec/vector/TimestampColumnVector.java#L45-L46) and this PR includes the updated interpretation on ORC column vectors. Note that `hive` ORC reader and ORC MR reader is not affected. ```scala scala> spark.version res0: String = 2.3.0 scala> spark.sql("set spark.sql.orc.impl=native") scala> Seq(java.sql.Timestamp.valueOf("1900-05-05 12:34:56.000789")).toDF().write.orc("/tmp/orc") scala> spark.read.orc("/tmp/orc").show(false) +--------------------------+ |value | +--------------------------+ |1900-05-05 12:34:55.000789| +--------------------------+ ``` This PR aims to update Apache Spark to use it. **FULL LIST** ID | TITLE -- | -- ORC-281 | Fix compiler warnings from clang 5.0 ORC-301 | `extractFileTail` should open a file in `try` statement ORC-304 | Fix TestRecordReaderImpl to not fail with new storage-api ORC-306 | Fix incorrect workaround for bug in java.sql.Timestamp ORC-324 | Add support for ARM and PPC arch ORC-330 | Remove unnecessary Hive artifacts from root pom ORC-332 | Add syntax version to orc_proto.proto ORC-336 | Remove avro and parquet dependency management entries ORC-360 | Implement error checking on subtype fields in Java Pass the Jenkins. Author: Dongjoon Hyun <dongjoon@apache.org> Closes #21372 from dongjoon-hyun/SPARK_ORC144. (cherry picked from commit 486ecc680e9a0e7b6b3c3a45fb883a61072096fc) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 24 May 2018, 03:36:25 UTC |

| 3d2ae0b | sychen | 24 May 2018, 03:02:09 UTC | [SPARK-24257][SQL] LongToUnsafeRowMap calculate the new size may be wrong LongToUnsafeRowMap has a mistake when growing its page array: it blindly grows to `oldSize * 2`, while the new record may be larger than `oldSize * 2`. Then we may have a malformed UnsafeRow when querying this map, whose actual data is smaller than its declared size, and the data is corrupted. Author: sychen <sychen@ctrip.com> Closes #21311 from cxzl25/fix_LongToUnsafeRowMap_page_size. (cherry picked from commit 888340151f737bb68d0e419b1e949f11469881f9) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 24 May 2018, 03:18:34 UTC |

| ded6709 | Marco Gaido | 23 May 2018, 14:26:39 UTC | [SPARK-24313][SQL][BACKPORT-2.3] Fix collection operations' interpreted evaluation for complex types ## What changes were proposed in this pull request? The interpreted evaluation of several collection operations works only for simple datatypes. For complex data types, for instance, `array_contains` it returns always `false`. The list of the affected functions is `array_contains` and `GetMapValue`. The PR fixes the behavior for all the datatypes. ## How was this patch tested? added UT Author: Marco Gaido <marcogaido91@gmail.com> Closes #21407 from mgaido91/SPARK-24313_2.3. | 23 May 2018, 14:26:39 UTC |

| ed0060c | Seth Fitzsimmons | 23 May 2018, 01:14:03 UTC | Correct reference to Offset class This is a documentation-only correction; `org.apache.spark.sql.sources.v2.reader.Offset` is actually `org.apache.spark.sql.sources.v2.reader.streaming.Offset`. Author: Seth Fitzsimmons <seth@mojodna.net> Closes #21387 from mojodna/patch-1. (cherry picked from commit 00c13cfad78607fde0787c9d494f0df8ab7051ba) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 23 May 2018, 01:14:34 UTC |

| efe183f | Marcelo Vanzin | 22 May 2018, 16:37:08 UTC | Preparing development version 2.3.2-SNAPSHOT | 22 May 2018, 16:37:08 UTC |

| 93258d8 | Marcelo Vanzin | 22 May 2018, 16:37:04 UTC | Preparing Spark release v2.3.1-rc2 | 22 May 2018, 16:37:04 UTC |

| 70b8665 | Imran Rashid | 21 May 2018, 23:26:39 UTC | [SPARK-24309][CORE] AsyncEventQueue should stop on interrupt. EventListeners can interrupt the event queue thread. In particular, when the EventLoggingListener writes to hdfs, hdfs can interrupt the thread. When there is an interrupt, the queue should be removed and stop accepting any more events. Before this change, the queue would continue to take more events (till it was full), and then would not stop when the application was complete because the PoisonPill couldn't be added. Added a unit test which failed before this change. Author: Imran Rashid <irashid@cloudera.com> Closes #21356 from squito/SPARK-24309. (cherry picked from commit 32447079e9d0fa9f7e180b94ecac19091b6af1ab) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 21 May 2018, 23:27:45 UTC |

| d88f3e4 | Marcelo Vanzin | 18 May 2018, 18:14:22 UTC | [SPARK-23850][SQL] Add separate config for SQL options redaction. The old code was relying on a core configuration and extended its default value to include things that redact desired things in the app's environment. Instead, add a SQL-specific option for which options to redact, and apply both the core and SQL-specific rules when redacting the options in the save command. This is a little sub-optimal since it adds another config, but it retains the current default behavior. While there I also fixed a typo and a couple of minor config API usage issues in the related redaction option that SQL already had. Tested with existing unit tests, plus checking the env page on a shell UI. Author: Marcelo Vanzin <vanzin@cloudera.com> Closes #21158 from vanzin/SPARK-23850. (cherry picked from commit ed7ba7db8fa344ff182b72d23ae458e711f63432) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 18 May 2018, 18:14:36 UTC |

| 895c95e | Artem Rudoy | 17 May 2018, 10:49:46 UTC | [SPARK-22371][CORE] Return None instead of throwing an exception when an accumulator is garbage collected. ## What changes were proposed in this pull request? There's a period of time when an accumulator has been garbage collected, but hasn't been removed from AccumulatorContext.originals by ContextCleaner. When an update is received for such accumulator it will throw an exception and kill the whole job. This can happen when a stage completes, but there're still running tasks from other attempts, speculation etc. Since AccumulatorContext.get() returns an option we can just return None in such case. ## How was this patch tested? Unit test. Author: Artem Rudoy <artem.rudoy@gmail.com> Closes #21114 from artemrd/SPARK-22371. (cherry picked from commit 6c35865d949a8b46f654cd53c7e5f3288def18d0) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 18 May 2018, 03:09:47 UTC |

| 28973e1 | hyukjinkwon | 17 May 2018, 04:07:58 UTC | [SPARK-21945][YARN][PYTHON] Make --py-files work with PySpark shell in Yarn client mode When we run _PySpark shell with Yarn client mode_, specified `--py-files` are not recognised in _driver side_. Here are the steps I took to check: ```bash $ cat /home/spark/tmp.py def testtest(): return 1 ``` ```bash $ ./bin/pyspark --master yarn --deploy-mode client --py-files /home/spark/tmp.py ``` ```python >>> def test(): ... import tmp ... return tmp.testtest() ... >>> spark.range(1).rdd.map(lambda _: test()).collect() # executor side [1] >>> test() # driver side Traceback (most recent call last): File "<stdin>", line 1, in <module> File "<stdin>", line 2, in test ImportError: No module named tmp ``` Unlike Yarn cluster and client mode with Spark submit, when Yarn client mode with PySpark shell specifically, 1. It first runs Python shell via: https://github.com/apache/spark/blob/3cb82047f2f51af553df09b9323796af507d36f8/launcher/src/main/java/org/apache/spark/launcher/SparkSubmitCommandBuilder.java#L158 as pointed out by tgravescs in the JIRA. 2. this triggers shell.py and submit another application to launch a py4j gateway: https://github.com/apache/spark/blob/209b9361ac8a4410ff797cff1115e1888e2f7e66/python/pyspark/java_gateway.py#L45-L60 3. it runs a Py4J gateway: https://github.com/apache/spark/blob/3cb82047f2f51af553df09b9323796af507d36f8/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala#L425 4. it copies (or downloads) --py-files into local temp directory: https://github.com/apache/spark/blob/3cb82047f2f51af553df09b9323796af507d36f8/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala#L365-L376 and then these files are set up to `spark.submit.pyFiles` 5. Py4J JVM is launched and then the Python paths are set via: https://github.com/apache/spark/blob/7013eea11cb32b1e0038dc751c485da5c94a484b/python/pyspark/context.py#L209-L216 However, these are not actually set because those files were copied into a tmp directory in 4. whereas this code path looks for `SparkFiles.getRootDirectory` where the files are stored only when `SparkContext.addFile()` is called. In other cluster mode, `spark.files` are set via: https://github.com/apache/spark/blob/3cb82047f2f51af553df09b9323796af507d36f8/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala#L554-L555 and those files are explicitly added via: https://github.com/apache/spark/blob/ecb8b383af1cf1b67f3111c148229e00c9c17c40/core/src/main/scala/org/apache/spark/SparkContext.scala#L395 So we are fine in other modes. In case of Yarn client and cluster with _submit_, these are manually being handled. In particular https://github.com/apache/spark/pull/6360 added most of the logics. In this case, the Python path looks manually set via, for example, `deploy.PythonRunner`. We don't use `spark.files` here. I tried to make an isolated approach as possible as I can: simply copy py file or zip files into `SparkFiles.getRootDirectory()` in driver side if not existing. Another possible way is to set `spark.files` but it does unnecessary stuff together and sounds a bit invasive. **Before** ```python >>> def test(): ... import tmp ... return tmp.testtest() ... >>> spark.range(1).rdd.map(lambda _: test()).collect() [1] >>> test() Traceback (most recent call last): File "<stdin>", line 1, in <module> File "<stdin>", line 2, in test ImportError: No module named tmp ``` **After** ```python >>> def test(): ... import tmp ... return tmp.testtest() ... >>> spark.range(1).rdd.map(lambda _: test()).collect() [1] >>> test() 1 ``` I manually tested in standalone and yarn cluster with PySpark shell. .zip and .py files were also tested with the similar steps above. It's difficult to add a test. Author: hyukjinkwon <gurwls223@apache.org> Closes #21267 from HyukjinKwon/SPARK-21945. (cherry picked from commit 9a641e7f721d01d283afb09dccefaf32972d3c04) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 17 May 2018, 19:46:39 UTC |