https://github.com/apache/spark

| Revision | Author | Date | Message | Commit Date |

|---|---|---|---|---|

| b5ea933 | Takeshi Yamamuro | 16 January 2019, 13:21:25 UTC | Preparing Spark release v2.3.3-rc1 | 16 January 2019, 13:21:25 UTC |

| 18c138b | Takeshi Yamamuro | 16 January 2019, 12:56:39 UTC | Revert "[SPARK-26576][SQL] Broadcast hint not applied to partitioned table" This reverts commit 87c2c11e742a8b35699f68ec2002f817c56bef87. | 16 January 2019, 12:56:39 UTC |

| 2a82295 | Shixiong Zhu | 21 November 2018, 01:31:12 UTC | [SPARK-26120][TESTS][SS][SPARKR] Fix a streaming query leak in Structured Streaming R tests ## What changes were proposed in this pull request? Stop the streaming query in `Specify a schema by using a DDL-formatted string when reading` to avoid outputting annoying logs. ## How was this patch tested? Jenkins Closes #23089 from zsxwing/SPARK-26120. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: hyukjinkwon <gurwls223@apache.org> (cherry picked from commit 4b7f7ef5007c2c8a5090f22c6e08927e9f9a407b) Signed-off-by: Felix Cheung <felixcheung@apache.org> | 16 January 2019, 12:56:15 UTC |

| 01511e4 | Felix Cheung | 29 September 2018, 21:48:32 UTC | [SPARK-25572][SPARKR] test only if not cran ## What changes were proposed in this pull request? CRAN doesn't seem to respect the system requirements as running tests - we have seen cases where SparkR is run on Java 10, which unfortunately Spark does not start on. For 2.4, lets attempt skipping all tests ## How was this patch tested? manual, jenkins, appveyor Author: Felix Cheung <felixcheung_m@hotmail.com> Closes #22589 from felixcheung/ralltests. (cherry picked from commit f4b138082ff91be74b0f5bbe19cdb90dd9e5f131) Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org> | 13 January 2019, 01:45:36 UTC |

| 20b7490 | Felix Cheung | 13 November 2018, 03:03:30 UTC | [SPARK-26010][R] fix vignette eval with Java 11 ## What changes were proposed in this pull request? changes in vignette only to disable eval ## How was this patch tested? Jenkins Author: Felix Cheung <felixcheung_m@hotmail.com> Closes #23007 from felixcheung/rjavavervig. (cherry picked from commit 88c82627267a9731b2438f0cc28dd656eb3dc834) Signed-off-by: Felix Cheung <felixcheung@apache.org> | 13 January 2019, 01:45:36 UTC |

| 6d063ee | Oleksii Shkarupin | 12 January 2019, 19:06:39 UTC | [SPARK-26538][SQL] Set default precision and scale for elements of postgres numeric array ## What changes were proposed in this pull request? When determining CatalystType for postgres columns with type `numeric[]` set the type of array element to `DecimalType(38, 18)` instead of `DecimalType(0,0)`. ## How was this patch tested? Tested with modified `org.apache.spark.sql.jdbc.JDBCSuite`. Ran the `PostgresIntegrationSuite` manually. Closes #23456 from a-shkarupin/postgres_numeric_array. Lead-authored-by: Oleksii Shkarupin <a.shkarupin@gmail.com> Co-authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 5b37092311bfc1255f1d4d81127ae4242ba1d1aa) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 12 January 2019, 19:07:12 UTC |

| b6c4649 | Dongjoon Hyun | 12 January 2019, 06:53:58 UTC | [SPARK-26607][SQL][TEST] Remove Spark 2.2.x testing from HiveExternalCatalogVersionsSuite The vote of final release of `branch-2.2` passed and the branch goes EOL. This PR removes Spark 2.2.x from the testing coverage. Pass the Jenkins. Closes #23526 from dongjoon-hyun/SPARK-26607. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 3587a9a2275615b82492b89204b141636542ce52) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 12 January 2019, 06:56:26 UTC |

| 87c2c11 | John Zhuge | 11 January 2019, 17:21:13 UTC | [SPARK-26576][SQL] Broadcast hint not applied to partitioned table ## What changes were proposed in this pull request? Make sure broadcast hint is applied to partitioned tables. Since the issue exists in branch 2.0 to 2.4, but not in master, I created this PR for branch-2.4. ## How was this patch tested? - A new unit test in PruneFileSourcePartitionsSuite - Unit test suites touched by SPARK-14581: JoinOptimizationSuite, FilterPushdownSuite, ColumnPruningSuite, and PruneFiltersSuite cloud-fan davies rxin Closes #23507 from jzhuge/SPARK-26576. Authored-by: John Zhuge <jzhuge@apache.org> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit b9eb0e85de3317a7f4c89a90082f7793b645c6ea) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 11 January 2019, 17:22:00 UTC |

| 9052a5e | Dongjoon Hyun | 07 January 2019, 06:45:18 UTC | [MINOR][BUILD] Fix script name in `release-tag.sh` usage message ## What changes were proposed in this pull request? This PR fixes the old script name in `release-tag.sh`. $ ./release-tag.sh --help | head -n1 usage: tag-release.sh ## How was this patch tested? Manual. $ ./release-tag.sh --help | head -n1 usage: release-tag.sh Closes #23477 from dongjoon-hyun/SPARK-RELEASE-TAG. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 468d25ec7419b4c55955ead877232aae5654260e) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 07 January 2019, 06:46:16 UTC |

| 38fe12b | cclauss | 30 August 2018, 00:13:11 UTC | [SPARK-25253][PYSPARK][FOLLOWUP] Undefined name: from pyspark.util import _exception_message HyukjinKwon ## What changes were proposed in this pull request? add __from pyspark.util import \_exception_message__ to python/pyspark/java_gateway.py ## How was this patch tested? [flake8](http://flake8.pycqa.org) testing of https://github.com/apache/spark on Python 3.7.0 $ __flake8 . --count --select=E901,E999,F821,F822,F823 --show-source --statistics__ ``` ./python/pyspark/java_gateway.py:172:20: F821 undefined name '_exception_message' emsg = _exception_message(e) ^ 1 F821 undefined name '_exception_message' 1 ``` Please review http://spark.apache.org/contributing.html before opening a pull request. Closes #22265 from cclauss/patch-2. Authored-by: cclauss <cclauss@bluewin.ch> Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 07 January 2019, 03:58:27 UTC |

| bb52170 | shane knapp | 06 January 2019, 02:31:02 UTC | [SPARK-26537][BUILD][BRANCH-2.3] change git-wip-us to gitbox ## What changes were proposed in this pull request? This is a backport of https://github.com/apache/spark/pull/23454 due to apache recently moving from git-wip-us.apache.org to gitbox.apache.org, we need to update the packaging scripts to point to the new repo location. this will also need to be backported to 2.4, 2.3, 2.1, 2.0 and 1.6. ## How was this patch tested? the build system will test this. Closes #23472 from dongjoon-hyun/SPARK-26537. Authored-by: shane knapp <incomplete@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 06 January 2019, 02:31:02 UTC |

| 64fce5c | Kris Mok | 05 January 2019, 22:37:04 UTC | [SPARK-26545] Fix typo in EqualNullSafe's truth table comment ## What changes were proposed in this pull request? The truth table comment in EqualNullSafe incorrectly marked FALSE results as UNKNOWN. ## How was this patch tested? N/A Closes #23461 from rednaxelafx/fix-typo. Authored-by: Kris Mok <kris.mok@databricks.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit 4ab5b5b9185f60f671d90d94732d0d784afa5f84) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 05 January 2019, 22:38:29 UTC |

| d618d27 | Marco Gaido | 05 January 2019, 12:43:38 UTC | [SPARK-26078][SQL][BACKPORT-2.3] Dedup self-join attributes on IN subqueries ## What changes were proposed in this pull request? When there is a self-join as result of a IN subquery, the join condition may be invalid, resulting in trivially true predicates and return wrong results. The PR deduplicates the subquery output in order to avoid the issue. ## How was this patch tested? added UT Closes #23450 from mgaido91/SPARK-26078_2.3. Authored-by: Marco Gaido <marcogaido91@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 05 January 2019, 12:43:38 UTC |

| 30b82a3 | Dongjoon Hyun | 04 January 2019, 04:01:19 UTC | [MINOR][NETWORK][TEST] Fix TransportFrameDecoderSuite to use ByteBuf instead of ByteBuffer ## What changes were proposed in this pull request? `fireChannelRead` expects `io.netty.buffer.ByteBuf`.I checked that this is the only place which misuse `java.nio.ByteBuffer` in `network` module. ## How was this patch tested? Pass the Jenkins with the existing tests. Closes #23442 from dongjoon-hyun/SPARK-NETWORK-COMMON. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 27e42c1de502da80fa3e22bb69de47fb00158174) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 04 January 2019, 04:02:54 UTC |

| 30a811b | Imran Rashid | 03 January 2019, 03:10:55 UTC | [SPARK-26019][PYSPARK] Allow insecure py4j gateways Spark always creates secure py4j connections between java and python, but it also allows users to pass in their own connection. This restores the ability for users to pass in an _insecure_ connection, though it forces them to set the env variable 'PYSPARK_ALLOW_INSECURE_GATEWAY=1', and still issues a warning. Added test cases verifying the failure without the extra configuration, and verifying things still work with an insecure configuration (in particular, accumulators, as those were broken with an insecure py4j gateway before). For the tests, I added ways to create insecure gateways, but I tried to put in protections to make sure that wouldn't get used incorrectly. Closes #23337 from squito/SPARK-26019. Authored-by: Imran Rashid <irashid@cloudera.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 1e99f4ec5d030b80971603f090afa4e51079c5e7) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 03 January 2019, 03:11:54 UTC |
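The opt-in behaviour described for SPARK-26019 can be sketched in plain Python. This is a minimal illustration, not PySpark's code: `check_gateway` and its return values are hypothetical, the environment is passed in as a plain dict, and accepting only the value `"1"` is an assumption. Only the variable name `PYSPARK_ALLOW_INSECURE_GATEWAY` comes from the commit message.

```python
import warnings


def check_gateway(auth_token, env):
    """Hypothetical check: accept an unauthenticated gateway only on opt-in."""
    if auth_token is not None:
        return "secure"
    # The variable name comes from the commit message; accepting only "1"
    # is an assumption of this sketch.
    if env.get("PYSPARK_ALLOW_INSECURE_GATEWAY") == "1":
        warnings.warn("Using an insecure py4j gateway.")
        return "insecure"
    raise ValueError("Insecure gateway rejected; opt-in variable not set.")


# Opting in still succeeds, but a warning is recorded.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    allowed = check_gateway(None, {"PYSPARK_ALLOW_INSECURE_GATEWAY": "1"})
```

Without the variable set, the same call raises instead of silently continuing, which mirrors the "failure without the extra configuration" case the commit says it tests.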

| 70a99ba | Liang-Chi Hsieh | 03 January 2019, 02:58:43 UTC | [SPARK-25591][PYSPARK][SQL][BRANCH-2.3] Avoid overwriting deserialized accumulator ## What changes were proposed in this pull request? If we use accumulators in more than one UDFs, it is possible to overwrite deserialized accumulators and its values. We should check if an accumulator was deserialized before overwriting it in accumulator registry. ## How was this patch tested? Added test. Closes #23432 from viirya/SPARK-25591-2.3. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 03 January 2019, 02:58:43 UTC |
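The guard described for SPARK-25591 can be sketched as follows. This is a self-contained illustration under stated assumptions rather than the patch itself: the `Accumulator` class, the `registry` dict, and `deserialize_accumulator` are hypothetical stand-ins for the accumulator registry the commit message mentions.

```python
class Accumulator:
    """Hypothetical stand-in for an accumulator; not the PySpark class."""

    def __init__(self, aid, value):
        self.aid = aid
        self.value = value


# Hypothetical per-process registry, keyed by accumulator id.
registry = {}


def deserialize_accumulator(aid, zero_value):
    """Return the registered accumulator for `aid`, creating it only once."""
    # If this accumulator was already deserialized, reuse it so that the
    # value accumulated so far is not reset to `zero_value`.
    if aid in registry:
        return registry[aid]
    accum = Accumulator(aid, zero_value)
    registry[aid] = accum
    return accum


# Two UDFs referencing the same accumulator each trigger deserialization.
first = deserialize_accumulator(7, 0)
first.value += 5
second = deserialize_accumulator(7, 0)
```

Because the second call returns the existing object, the update made through `first` survives; without the membership check it would be replaced by a fresh accumulator holding the zero value.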

| c3d759f | Hyukjin Kwon | 29 December 2018, 20:11:45 UTC | [SPARK-26496][SS][TEST] Avoid to use Random.nextString in StreamingInnerJoinSuite ## What changes were proposed in this pull request? Similar with https://github.com/apache/spark/pull/21446. Looks random string is not quite safe as a directory name. ```scala scala> val prefix = Random.nextString(10); val dir = new File("/tmp", "del_" + prefix + "-" + UUID.randomUUID.toString); dir.mkdirs() prefix: String = 窽텘⒘駖ⵚ駢⡞Ρ닋 dir: java.io.File = /tmp/del_窽텘⒘駖ⵚ駢⡞Ρ닋-a3f99855-c429-47a0-a108-47bca6905745 res40: Boolean = false // nope, didn't like this one ``` ## How was this patch tested? Unit test was added, and manually. Closes #23405 from HyukjinKwon/SPARK-26496. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit e63243df8aca9f44255879e931e0c372beef9fc2) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 29 December 2018, 20:12:42 UTC |

| acbfb31 | seancxmao | 28 December 2018, 13:40:59 UTC | [SPARK-26444][WEBUI] Stage color doesn't change with it's status ## What changes were proposed in this pull request? On job page, in event timeline section, stage color doesn't change according to its status. Below are some screenshots. ACTIVE: <img width="550" alt="active" src="https://user-images.githubusercontent.com/12194089/50438844-c763e580-092a-11e9-84f6-6fc30e08d69b.png"> COMPLETE: <img width="516" alt="complete" src="https://user-images.githubusercontent.com/12194089/50438847-ca5ed600-092a-11e9-9d2e-5d79807bc1ce.png"> FAILED: <img width="325" alt="failed" src="https://user-images.githubusercontent.com/12194089/50438852-ccc13000-092a-11e9-9b6b-782b96b283b1.png"> This PR lets stage color change with it's status. The main idea is to make css style class name match the corresponding stage status. ## How was this patch tested? Manually tested locally. ``` // active/complete stage sc.parallelize(1 to 3, 3).map { n => Thread.sleep(10* 1000); n }.count // failed stage sc.parallelize(1 to 3, 3).map { n => Thread.sleep(10* 1000); throw new Exception() }.count ``` Note we need to clear browser cache to let new `timeline-view.css` take effect. Below are screenshots after this PR. ACTIVE: <img width="569" alt="active-after" src="https://user-images.githubusercontent.com/12194089/50439986-08f68f80-092f-11e9-85d9-be1c31aed13b.png"> COMPLETE: <img width="567" alt="complete-after" src="https://user-images.githubusercontent.com/12194089/50439990-0bf18000-092f-11e9-8624-723958906e90.png"> FAILED: <img width="352" alt="failed-after" src="https://user-images.githubusercontent.com/12194089/50439993-101d9d80-092f-11e9-8dfd-3e20536f2fa5.png"> Closes #23385 from seancxmao/timeline-stage-color. 
Authored-by: seancxmao <seancxmao@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 5bef4fedfe1916320223b1245bacb58f151cee66) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 28 December 2018, 13:42:10 UTC |

| acf20d2 | Marco Gaido | 23 December 2018, 00:09:14 UTC | [SPARK-26366][SQL][BACKPORT-2.3] ReplaceExceptWithFilter should consider NULL as False ## What changes were proposed in this pull request? In `ReplaceExceptWithFilter` we do not consider properly the case in which the condition returns NULL. Indeed, in that case, since negating NULL still returns NULL, so it is not true the assumption that negating the condition returns all the rows which didn't satisfy it, rows returning NULL may not be returned. This happens when constraints inferred by `InferFiltersFromConstraints` are not enough, as it happens with `OR` conditions. The rule had also problems with non-deterministic conditions: in such a scenario, this rule would change the probability of the output. The PR fixes these problem by: - returning False for the condition when it is Null (in this way we do return all the rows which didn't satisfy it); - avoiding any transformation when the condition is non-deterministic. ## How was this patch tested? added UTs Closes #23372 from mgaido91/SPARK-26366_2.3_2. Authored-by: Marco Gaido <marcogaido91@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 December 2018, 00:09:14 UTC |
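The NULL-handling problem behind SPARK-26366 can be illustrated outside Spark. The sketch below models SQL three-valued logic in plain Python, with `None` standing in for NULL. The helpers `sql_not`, `sql_filter`, and `condition`, as well as the sample rows, are assumptions made for illustration and are not Spark APIs.

```python
def sql_not(value):
    """Three-valued NOT: negating NULL (None) yields NULL."""
    return None if value is None else (not value)


def sql_filter(rows, predicate):
    """Keep a row only when the predicate is exactly True, as a SQL filter does."""
    return [row for row in rows if predicate(row) is True]


def condition(row):
    """Sample condition `a > 1`; evaluates to NULL when `a` is NULL."""
    return None if row["a"] is None else row["a"] > 1


rows = [{"a": 0}, {"a": 2}, {"a": None}]

# Rows that satisfy the condition (the side being subtracted by EXCEPT).
matched = sql_filter(rows, condition)

# Naive rewrite: Filter(NOT cond) silently drops the row where cond is NULL.
naive = sql_filter(rows, lambda r: sql_not(condition(r)))

# Fixed rewrite: treat a NULL condition as False before negating.
fixed = sql_filter(rows, lambda r: sql_not(condition(r) is True))
```

Here `naive` loses the row with `a` set to NULL even though that row never matched the condition, while `fixed` returns every row that did not satisfy it.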

| d9d3bea | Dongjoon Hyun | 22 December 2018, 03:57:07 UTC | Revert "[SPARK-26366][SQL][BACKPORT-2.3] ReplaceExceptWithFilter should consider NULL as False" This reverts commit a7d50ae24a5f92e8d9b6622436f0bb4c2e06cbe1. | 22 December 2018, 03:57:07 UTC |

| a7d50ae | Marco Gaido | 21 December 2018, 22:52:29 UTC | [SPARK-26366][SQL][BACKPORT-2.3] ReplaceExceptWithFilter should consider NULL as False ## What changes were proposed in this pull request? In `ReplaceExceptWithFilter` we do not consider properly the case in which the condition returns NULL. Indeed, in that case, since negating NULL still returns NULL, so it is not true the assumption that negating the condition returns all the rows which didn't satisfy it, rows returning NULL may not be returned. This happens when constraints inferred by `InferFiltersFromConstraints` are not enough, as it happens with `OR` conditions. The rule had also problems with non-deterministic conditions: in such a scenario, this rule would change the probability of the output. The PR fixes these problem by: - returning False for the condition when it is Null (in this way we do return all the rows which didn't satisfy it); - avoiding any transformation when the condition is non-deterministic. ## How was this patch tested? added UTs Closes #23350 from mgaido91/SPARK-26366_2.3. Authored-by: Marco Gaido <marcogaido91@gmail.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 21 December 2018, 22:52:29 UTC |

| b4aeb81 | Hyukjin Kwon | 21 December 2018, 08:09:30 UTC | [SPARK-26422][R] Support to disable Hive support in SparkR even for Hadoop versions unsupported by Hive fork ## What changes were proposed in this pull request? Currently, even if I explicitly disable Hive support in SparkR session as below: ```r sparkSession <- sparkR.session("local[4]", "SparkR", Sys.getenv("SPARK_HOME"), enableHiveSupport = FALSE) ``` produces when the Hadoop version is not supported by our Hive fork: ``` java.lang.reflect.InvocationTargetException ... Caused by: java.lang.IllegalArgumentException: Unrecognized Hadoop major version number: 3.1.1.3.1.0.0-78 at org.apache.hadoop.hive.shims.ShimLoader.getMajorVersion(ShimLoader.java:174) at org.apache.hadoop.hive.shims.ShimLoader.loadShims(ShimLoader.java:139) at org.apache.hadoop.hive.shims.ShimLoader.getHadoopShims(ShimLoader.java:100) at org.apache.hadoop.hive.conf.HiveConf$ConfVars.<clinit>(HiveConf.java:368) ... 43 more Error in handleErrors(returnStatus, conn) : java.lang.ExceptionInInitializerError at org.apache.hadoop.hive.conf.HiveConf.<clinit>(HiveConf.java:105) at java.lang.Class.forName0(Native Method) at java.lang.Class.forName(Class.java:348) at org.apache.spark.util.Utils$.classForName(Utils.scala:193) at org.apache.spark.sql.SparkSession$.hiveClassesArePresent(SparkSession.scala:1116) at org.apache.spark.sql.api.r.SQLUtils$.getOrCreateSparkSession(SQLUtils.scala:52) at org.apache.spark.sql.api.r.SQLUtils.getOrCreateSparkSession(SQLUtils.scala) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ``` The root cause is that: ``` SparkSession.hiveClassesArePresent ``` check if the class is loadable or not to check if that's in classpath but `org.apache.hadoop.hive.conf.HiveConf` has a check for Hadoop version as static logic which is executed right away. 
This throws an `IllegalArgumentException` and that's not caught: https://github.com/apache/spark/blob/36edbac1c8337a4719f90e4abd58d38738b2e1fb/sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala#L1113-L1121 So, currently, if users have a Hive built-in Spark with unsupported Hadoop version by our fork (namely 3+), there's no way to use SparkR even though it could work. This PR just propose to change the order of bool comparison so that we can don't execute `SparkSession.hiveClassesArePresent` when: 1. `enableHiveSupport` is explicitly disabled 2. `spark.sql.catalogImplementation` is `in-memory` so that we **only** check `SparkSession.hiveClassesArePresent` when Hive support is explicitly enabled by short circuiting. ## How was this patch tested? It's difficult to write a test since we don't run tests against Hadoop 3 yet. See https://github.com/apache/spark/pull/21588. Manually tested. Closes #23356 from HyukjinKwon/SPARK-26422. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 305e9b5ad22b428501fd42d3730d73d2e09ad4c5) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 21 December 2018, 08:11:00 UTC |
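The reordering of the boolean comparison in SPARK-26422 relies on short-circuit evaluation. A minimal Python sketch of the idea, assuming a hypothetical `hive_classes_are_present` probe that always fails and a hypothetical `use_hive` helper (neither is a Spark function):

```python
calls = []


def hive_classes_are_present():
    """Hypothetical stand-in for a class-loading check that may fail."""
    calls.append("checked")
    raise RuntimeError("Unrecognized Hadoop major version number")


def use_hive(enable_hive_support, catalog_implementation):
    """Decide whether to use Hive, probing the classpath only when needed."""
    # `and` short-circuits left to right, so the probe runs only when
    # Hive support is requested and the catalog is not in-memory.
    return (
        enable_hive_support
        and catalog_implementation != "in-memory"
        and hive_classes_are_present()
    )


# Hive disabled: the failing probe is never reached.
disabled = use_hive(False, "hive")
# In-memory catalog: the probe is skipped as well.
in_memory = use_hive(True, "in-memory")
```

Placing the cheap, explicit settings first means the failing check is evaluated only when Hive support is actually requested.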

| a22a11b | zhoukang | 20 December 2018, 14:26:25 UTC | [SPARK-24687][CORE] Avoid job hanging when generate task binary causes fatal error ## What changes were proposed in this pull request? When NoClassDefFoundError thrown,it will cause job hang. `Exception in thread "dag-scheduler-event-loop" java.lang.NoClassDefFoundError: Lcom/xxx/data/recommend/aggregator/queue/QueueName; at java.lang.Class.getDeclaredFields0(Native Method) at java.lang.Class.privateGetDeclaredFields(Class.java:2436) at java.lang.Class.getDeclaredField(Class.java:1946) at java.io.ObjectStreamClass.getDeclaredSUID(ObjectStreamClass.java:1659) at java.io.ObjectStreamClass.access$700(ObjectStreamClass.java:72) at java.io.ObjectStreamClass$2.run(ObjectStreamClass.java:480) at java.io.ObjectStreamClass$2.run(ObjectStreamClass.java:468) at java.security.AccessController.doPrivileged(Native Method) at java.io.ObjectStreamClass.<init>(ObjectStreamClass.java:468) at java.io.ObjectStreamClass.lookup(ObjectStreamClass.java:365) at java.io.ObjectOutputStream.writeClass(ObjectOutputStream.java:1212) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1119) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) 
at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.writeArray(ObjectOutputStream.java:1377) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1173) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.writeArray(ObjectOutputStream.java:1377)` It is caused by NoClassDefFoundError will not catch up during task seriazation. `var taskBinary: Broadcast[Array[Byte]] = null try { // For ShuffleMapTask, serialize and broadcast (rdd, shuffleDep). // For ResultTask, serialize and broadcast (rdd, func). val taskBinaryBytes: Array[Byte] = stage match { case stage: ShuffleMapStage => JavaUtils.bufferToArray( closureSerializer.serialize((stage.rdd, stage.shuffleDep): AnyRef)) case stage: ResultStage => JavaUtils.bufferToArray(closureSerializer.serialize((stage.rdd, stage.func): AnyRef)) } taskBinary = sc.broadcast(taskBinaryBytes) } catch { // In the case of a failure during serialization, abort the stage. 
case e: NotSerializableException => abortStage(stage, "Task not serializable: " + e.toString, Some(e)) runningStages -= stage // Abort execution return case NonFatal(e) => abortStage(stage, s"Task serialization failed: $e\n${Utils.exceptionString(e)}", Some(e)) runningStages -= stage return }` image below shows that stage 33 blocked and never be scheduled. <img width="1273" alt="2018-06-28 4 28 42" src="https://user-images.githubusercontent.com/26762018/42621188-b87becca-85ef-11e8-9a0b-0ddf07504c96.png"> <img width="569" alt="2018-06-28 4 28 49" src="https://user-images.githubusercontent.com/26762018/42621191-b8b260e8-85ef-11e8-9d10-e97a5918baa6.png"> ## How was this patch tested? UT Closes #21664 from caneGuy/zhoukang/fix-noclassdeferror. Authored-by: zhoukang <zhoukang199191@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 7c8f4756c34a0b00931c2987c827a18d989e6c08) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 20 December 2018, 14:27:46 UTC |

| 832812e | Jackey Lee | 18 December 2018, 18:15:36 UTC | [SPARK-26394][CORE] Fix annotation error for Utils.timeStringAsMs ## What changes were proposed in this pull request? Change microseconds to milliseconds in annotation of Utils.timeStringAsMs. Closes #23346 from stczwd/stczwd. Authored-by: Jackey Lee <qcsd2011@163.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 428eb2ad0ad8a141427120b13de3287962258c2d) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 18 December 2018, 18:16:38 UTC |

| 35c4235 | jiake | 18 December 2018, 16:56:53 UTC | [SPARK-26316][SPARK-21052][BRANCH-2.3] Revert hash join metrics in that causes performance degradation ## What changes were proposed in this pull request? Revert spark 21052 in spark 2.3 because of the discussion in [PR23269](https://github.com/apache/spark/pull/23269) ## How was this patch tested? N/A Closes #23319 from JkSelf/branch-2.3-revert21052. Authored-by: jiake <ke.a.jia@intel.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 18 December 2018, 16:56:53 UTC |

| bccefa5 | Kris Mok | 17 December 2018, 14:58:27 UTC | [SPARK-26352][SQL][FOLLOWUP-2.3] Fix missing sameOutput in branch-2.3 ## What changes were proposed in this pull request? This is the branch-2.3 equivalent of https://github.com/apache/spark/pull/23330. After https://github.com/apache/spark/pull/23303 was merged to branch-2.3/2.4, the builds on those branches were broken due to missing a `LogicalPlan.sameOutput` function which came from https://github.com/apache/spark/pull/22713 only available on master. This PR is to follow-up with the broken 2.3/2.4 branches and make a copy of the new `LogicalPlan.sameOutput` into `ReorderJoin` to make it locally available. ## How was this patch tested? Fix the build of 2.3/2.4. Closes #23333 from rednaxelafx/branch-2.3. Authored-by: Kris Mok <rednaxelafx@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 17 December 2018, 14:58:27 UTC |

| 1576bd7 | Kris Mok | 17 December 2018, 05:41:20 UTC | [SPARK-26352][SQL] join reorder should not change the order of output attributes ## What changes were proposed in this pull request? The optimizer rule `org.apache.spark.sql.catalyst.optimizer.ReorderJoin` performs join reordering on inner joins. This was introduced from SPARK-12032 (https://github.com/apache/spark/pull/10073) in 2015-12. After it had reordered the joins, though, it didn't check whether or not the output attribute order is still the same as before. Thus, it's possible to have a mismatch between the reordered output attributes order vs the schema that a DataFrame thinks it has. The same problem exists in the CBO version of join reordering (`CostBasedJoinReorder`) too. This can be demonstrated with the example: ```scala spark.sql("create table table_a (x int, y int) using parquet") spark.sql("create table table_b (i int, j int) using parquet") spark.sql("create table table_c (a int, b int) using parquet") val df = spark.sql(""" with df1 as (select * from table_a cross join table_b) select * from df1 join table_c on a = x and b = i """) ``` here's what the DataFrame thinks: ``` scala> df.printSchema root |-- x: integer (nullable = true) |-- y: integer (nullable = true) |-- i: integer (nullable = true) |-- j: integer (nullable = true) |-- a: integer (nullable = true) |-- b: integer (nullable = true) ``` here's what the optimized plan thinks, after join reordering: ``` scala> df.queryExecution.optimizedPlan.output.foreach(a => println(s"|-- ${a.name}: ${a.dataType.typeName}")) |-- x: integer |-- y: integer |-- a: integer |-- b: integer |-- i: integer |-- j: integer ``` If we exclude the `ReorderJoin` rule (using Spark 2.4's optimizer rule exclusion feature), it's back to normal: ``` scala> spark.conf.set("spark.sql.optimizer.excludedRules", "org.apache.spark.sql.catalyst.optimizer.ReorderJoin") scala> val df = spark.sql("with df1 as (select * from table_a cross join table_b) select * from df1 join 
table_c on a = x and b = i") df: org.apache.spark.sql.DataFrame = [x: int, y: int ... 4 more fields] scala> df.queryExecution.optimizedPlan.output.foreach(a => println(s"|-- ${a.name}: ${a.dataType.typeName}")) |-- x: integer |-- y: integer |-- i: integer |-- j: integer |-- a: integer |-- b: integer ``` Note that this output attribute ordering problem leads to data corruption, and can manifest itself in various symptoms: * Silently corrupting data, if the reordered columns happen to either have matching types or have sufficiently-compatible types (e.g. all fixed length primitive types are considered as "sufficiently compatible" in an `UnsafeRow`), then only the resulting data is going to be wrong but it might not trigger any alarms immediately. Or * Weird Java-level exceptions like `java.lang.NegativeArraySizeException`, or even SIGSEGVs. ## How was this patch tested? Added new unit test in `JoinReorderSuite` and new end-to-end test in `JoinSuite`. Also made `JoinReorderSuite` and `StarJoinReorderSuite` assert more strongly on maintaining output attribute order. Closes #23303 from rednaxelafx/fix-join-reorder. Authored-by: Kris Mok <rednaxelafx@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 56448c662398f4c5319a337e6601450270a6a27c) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 17 December 2018, 05:46:10 UTC |
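
The mismatch described in the commit above can be illustrated with a minimal Python sketch. This is not Spark's Scala implementation; the function name `restore_output_order` is hypothetical, and the column names are taken from the example in the commit message. It rearranges a row laid out in the reordered attribute order back into the order the DataFrame schema reports.

```python
def restore_output_order(original_names, reordered_names, row):
    """Return `row` (laid out in `reordered_names` order) rearranged
    into `original_names` order. Hypothetical helper for illustration."""
    if sorted(original_names) != sorted(reordered_names):
        raise ValueError("both orders must contain the same attributes")
    # Map each attribute name to its position in the reordered output.
    position = {name: i for i, name in enumerate(reordered_names)}
    return tuple(row[position[name]] for name in original_names)


schema_order = ["x", "y", "i", "j", "a", "b"]     # order reported by df.printSchema
optimized_order = ["x", "y", "a", "b", "i", "j"]  # order after join reordering
row = (1, 2, 5, 6, 3, 4)                          # values in optimized order
print(restore_output_order(schema_order, optimized_order, row))
```

Reading the row positionally without such a mapping would hand the values of `a` and `b` to the columns named `i` and `j`, which is the silent corruption the commit message warns about.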

| 20558f7 | Jing Chen He | 15 December 2018, 14:41:16 UTC | [SPARK-26315][PYSPARK] auto cast threshold from Integer to Float in approxSimilarityJoin of BucketedRandomProjectionLSHModel ## What changes were proposed in this pull request? If the input parameter 'threshold' to the function approxSimilarityJoin is not a float, we would get an exception. The fix is to convert the 'threshold' into a float before calling the java implementation method. ## How was this patch tested? Added a new test case. Without this fix, the test will throw an exception as reported in the JIRA. With the fix, the test passes. Please review http://spark.apache.org/contributing.html before opening a pull request. Closes #23313 from jerryjch/SPARK-26315. Authored-by: Jing Chen He <jinghe@us.ibm.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 860f4497f2a59b21d455ec8bfad9ae15d2fd4d2e) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 15 December 2018, 14:42:27 UTC |
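
As a rough sketch of the fix described above, the coercion can be expressed as a small helper that converts the caller's value to `float` before it is handed to the JVM side. The helper name `normalize_threshold` and its error handling are assumptions for illustration, not the code in PySpark.

```python
def normalize_threshold(threshold):
    """Coerce a numeric threshold to float; reject non-numeric input.

    Hypothetical helper: the commit only states that the threshold is
    converted to a float before the Java method is called.
    """
    if isinstance(threshold, bool) or not isinstance(threshold, (int, float)):
        raise TypeError("threshold must be a number, got %r" % (threshold,))
    return float(threshold)


print(normalize_threshold(3))    # an int is accepted and converted
print(normalize_threshold(2.5))  # a float passes through unchanged
```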

| 7930fbd | Yuanjian Li | 14 December 2018, 21:05:14 UTC | [SPARK-26327][SQL][BACKPORT-2.3] Bug fix for `FileSourceScanExec` metrics update ## What changes were proposed in this pull request? Backport #23277 to branch 2.3 without the metrics renaming. ## How was this patch tested? New test case in `SQLMetricsSuite`. Closes #23299 from xuanyuanking/SPARK-26327-2.3. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 14 December 2018, 21:05:14 UTC |

| 3772d93 | gatorsmile | 10 December 2018, 06:57:20 UTC | [SPARK-26307][SQL] Fix CTAS when INSERT a partitioned table using Hive serde This is a Spark 2.3 regression introduced in https://github.com/apache/spark/pull/20521. We should add the partition info for InsertIntoHiveTable in CreateHiveTableAsSelectCommand. Otherwise, we will hit the following error by running the newly added test case: ``` [info] - CTAS: INSERT a partitioned table using Hive serde *** FAILED *** (829 milliseconds) [info] org.apache.spark.SparkException: Requested partitioning does not match the tab1 table: [info] Requested partitions: [info] Table partitions: part [info] at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.processInsert(InsertIntoHiveTable.scala:179) [info] at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.run(InsertIntoHiveTable.scala:107) ``` Added a test case. Closes #23255 from gatorsmile/fixCTAS. Authored-by: gatorsmile <gatorsmile@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 3bc83de3cce86a06c275c86b547a99afd781761f) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 10 December 2018, 06:59:35 UTC |

| 1899dd2 | Marco Gaido | 05 December 2018, 17:12:29 UTC | [SPARK-26233][SQL][BACKPORT-2.3] CheckOverflow when encoding a decimal value ## What changes were proposed in this pull request? When we encode a Decimal from external source we don't check for overflow. That method is useful not only in order to enforce that we can represent the correct value in the specified range, but it also changes the underlying data to the right precision/scale. Since in our code generation we assume that a decimal has exactly the same precision and scale of its data type, missing to enforce it can lead to corrupted output/results when there are subsequent transformations. ## How was this patch tested? added UT Closes #23233 from mgaido91/SPARK-26233_2.3. Authored-by: Marco Gaido <marcogaido91@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 05 December 2018, 17:12:29 UTC |
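
The idea of enforcing a declared precision and scale when a decimal is encoded can be sketched with Python's `decimal` module. This is an illustration under assumptions, not Spark's implementation: the function name `change_precision`, the choice of `ROUND_HALF_UP`, and returning `None` on overflow are all hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def change_precision(value, precision, scale):
    """Round `value` to `scale` fractional digits and check that the result
    fits in `precision` total digits. Returns None on overflow."""
    quantum = Decimal(1).scaleb(-scale)  # scale=2 gives Decimal("0.01")
    rounded = value.quantize(quantum, rounding=ROUND_HALF_UP)
    if len(rounded.as_tuple().digits) > precision:
        return None  # too many digits for the declared type
    return rounded


print(change_precision(Decimal("123.456"), 5, 2))  # fits after rounding
print(change_precision(Decimal("12345.6"), 5, 2))  # overflows decimal(5, 2)
```

Besides rejecting values that do not fit, the check also normalizes the stored value to the declared scale, which is the property the commit says later transformations rely on.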

| 8236f64 | Yuming Wang | 02 December 2018, 14:52:01 UTC | [SPARK-26198][SQL] Fix Metadata serialize null values throw NPE How to reproduce this issue: ```scala scala> val meta = new org.apache.spark.sql.types.MetadataBuilder().putNull("key").build().json java.lang.NullPointerException at org.apache.spark.sql.types.Metadata$.org$apache$spark$sql$types$Metadata$$toJsonValue(Metadata.scala:196) at org.apache.spark.sql.types.Metadata$$anonfun$1.apply(Metadata.scala:180) ``` This PR fixes the `NullPointerException` thrown when `Metadata` serializes `null` values. Tested with unit tests. Closes #23164 from wangyum/SPARK-26198. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 676bbb2446af1f281b8f76a5428b7ba75b7588b3) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 03 December 2018, 05:44:00 UTC |

| 0058986 | liuxian | 01 December 2018, 13:11:31 UTC | [MINOR][DOC] Correct some document description errors Correct some document description errors. N/A Closes #23162 from 10110346/docerror. Authored-by: liuxian <liu.xian3@zte.com.cn> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 60e4239a1e3506d342099981b6e3b3b8431a203e) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 01 December 2018, 13:17:48 UTC |

| 4ee463a | schintap | 30 November 2018, 18:48:56 UTC | [SPARK-26201] Fix python broadcast with encryption ## What changes were proposed in this pull request? With RPC and disk encryption enabled in Python, creating a Python broadcast variable and just reading its value back on the driver side made the job fail with: Traceback (most recent call last): File "broadcast.py", line 37, in <module> words_new.value File "/pyspark.zip/pyspark/broadcast.py", line 137, in value File "pyspark.zip/pyspark/broadcast.py", line 122, in load_from_path File "pyspark.zip/pyspark/broadcast.py", line 128, in load EOFError: Ran out of input To reproduce use configs: --conf spark.network.crypto.enabled=true --conf spark.io.encryption.enabled=true Code: words_new = sc.broadcast(["scala", "java", "hadoop", "spark", "akka"]) words_new.value print(words_new.value) ## How was this patch tested? words_new = sc.broadcast(["scala", "java", "hadoop", "spark", "akka"]) textFile = sc.textFile("README.md") wordCounts = textFile.flatMap(lambda line: line.split()).map(lambda word: (word + words_new.value[1], 1)).reduceByKey(lambda a, b: a+b) count = wordCounts.count() print(count) words_new.value print(words_new.value) Closes #23166 from redsanket/SPARK-26201. Authored-by: schintap <schintap@oath.com> Signed-off-by: Thomas Graves <tgraves@apache.org> (cherry picked from commit 9b23be2e95fec756066ca0ed3188c3db2602b757) Signed-off-by: Thomas Graves <tgraves@apache.org> | 30 November 2018, 18:49:30 UTC |

| e96ba84 | Takuya UESHIN | 29 November 2018, 14:37:02 UTC | [SPARK-26211][SQL] Fix InSet for binary, and struct and array with null. Currently `InSet` doesn't work properly for binary type, or struct and array type with null value in the set. Because, as for binary type, the `HashSet` doesn't work properly for `Array[Byte]`, and as for struct and array type with null value in the set, the `ordering` will throw a `NPE`. Added a few tests. Closes #23176 from ueshin/issues/SPARK-26211/inset. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit b9b68a6dc7d0f735163e980392ea957f2d589923) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 29 November 2018, 14:41:51 UTC |

| 96a5a12 | Mark Pavey | 28 November 2018, 15:19:47 UTC | [SPARK-26137][CORE] Use Java system property "file.separator" inste… … of hard coded "/" in DependencyUtils ## What changes were proposed in this pull request? Use Java system property "file.separator" instead of hard coded "/" in DependencyUtils. ## How was this patch tested? Manual test: Submit Spark application via REST API that reads data from Elasticsearch using spark-elasticsearch library. Without fix application fails with error: 18/11/22 10:36:20 ERROR Version: Multiple ES-Hadoop versions detected in the classpath; please use only one jar:file:/C:/<...>/spark-2.4.0-bin-hadoop2.6/work/driver-20181122103610-0001/myApp-assembly-1.0.jar jar:file:/C:/<...>/myApp-assembly-1.0.jar 18/11/22 10:36:20 ERROR Main: Application [MyApp] failed: java.lang.Error: Multiple ES-Hadoop versions detected in the classpath; please use only one jar:file:/C:/<...>/spark-2.4.0-bin-hadoop2.6/work/driver-20181122103610-0001/myApp-assembly-1.0.jar jar:file:/C:/<...>/myApp-assembly-1.0.jar at org.elasticsearch.hadoop.util.Version.<clinit>(Version.java:73) at org.elasticsearch.hadoop.rest.RestService.findPartitions(RestService.java:214) at org.elasticsearch.spark.rdd.AbstractEsRDD.esPartitions$lzycompute(AbstractEsRDD.scala:73) at org.elasticsearch.spark.rdd.AbstractEsRDD.esPartitions(AbstractEsRDD.scala:72) at org.elasticsearch.spark.rdd.AbstractEsRDD.getPartitions(AbstractEsRDD.scala:44) at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253) at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251) at scala.Option.getOrElse(Option.scala:121) at org.apache.spark.rdd.RDD.partitions(RDD.scala:251) at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:49) at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253) at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251) at scala.Option.getOrElse(Option.scala:121) at 
org.apache.spark.rdd.RDD.partitions(RDD.scala:251) at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126) at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945) at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151) at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112) at org.apache.spark.rdd.RDD.withScope(RDD.scala:363) at org.apache.spark.rdd.RDD.collect(RDD.scala:944) ... at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.spark.deploy.worker.DriverWrapper$.main(DriverWrapper.scala:65) at org.apache.spark.deploy.worker.DriverWrapper.main(DriverWrapper.scala) With fix application runs successfully. Closes #23102 from markpavey/JIRA_SPARK-26137_DependencyUtilsFileSeparatorFix. Authored-by: Mark Pavey <markpavey@exabre.co.uk> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit ce61bac1d84f8577b180400e44bd9bf22292e0b6) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 28 November 2018, 15:20:27 UTC |

| de5f489 | liuxian | 24 November 2018, 15:10:15 UTC | [SPARK-25786][CORE] If the ByteBuffer.hasArray is false , it will throw UnsupportedOperationException for Kryo For Kryo's `deserialize`, the input parameter is a `ByteBuffer`; if it is not backed by an accessible byte array, `deserialize` throws `UnsupportedOperationException`. Exception info: ``` java.lang.UnsupportedOperationException was thrown. java.lang.UnsupportedOperationException at java.nio.ByteBuffer.array(ByteBuffer.java:994) at org.apache.spark.serializer.KryoSerializerInstance.deserialize(KryoSerializer.scala:362) ``` Added a unit test. Closes #22779 from 10110346/InputStreamKryo. Authored-by: liuxian <liu.xian3@zte.com.cn> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 7f5f7a967d36d78f73d8fa1e178dfdb324d73bf1) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 24 November 2018, 15:25:06 UTC |

| 62010d6 | “attilapiros” | 22 November 2018, 06:58:30 UTC | [SPARK-26118][BACKPORT-2.3][WEB UI] Introducing spark.ui.requestHeaderSize for setting HTTP requestHeaderSize ## What changes were proposed in this pull request? Introducing spark.ui.requestHeaderSize for configuring Jetty's HTTP requestHeaderSize. This way long authorization field does not lead to HTTP 413. ## How was this patch tested? Manually with curl (which version must be at least 7.55). With the original default value (8k limit): ```bash $ ./sbin/start-history-server.sh starting org.apache.spark.deploy.history.HistoryServer, logging to /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out $ echo -n "X-Custom-Header: " > cookie $ printf 'A%.0s' {1..9500} >> cookie $ curl -H cookie http://458apiros-MBP.lan:18080/ <h1>Bad Message 431</h1><pre>reason: Request Header Fields Too Large</pre> $ tail -1 /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out 18/11/19 21:24:28 WARN HttpParser: Header is too large 8193>8192 ``` After: ```bash $ echo spark.ui.requestHeaderSize=10000 > history.properties $ ./sbin/start-history-server.sh --properties-file history.properties starting org.apache.spark.deploy.history.HistoryServer, logging to /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out $ curl -H cookie http://458apiros-MBP.lan:18080/ <!DOCTYPE html><html> <head>... <link rel="shortcut icon" href="/static/spark-logo-77x50px-hd.png"></link> <title>History Server</title> </head> <body> ... ``` (cherry picked from commit ab61ddb34d58ab5701191c8fd3a24a62f6ebf37b) Closes #23114 from attilapiros/julianOffByDays-2.3. Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 22 November 2018, 06:58:30 UTC |

| 8b6504e | Shahid | 21 November 2018, 15:31:35 UTC | [SPARK-26109][WEBUI] Duration in the task summary metrics table and the task table are different ## What changes were proposed in this pull request? The task summary table on the stage page summarizes the task table. However, the 'Duration' metric in the task summary table does not match the one in the task table: the task summary displays 'executorRunTime' as the duration, while the task table displays the actual duration of the task. All other metrics are displayed consistently in the task summary. Spark 2.2 showed 'executorRunTime' as the duration in the task table, which is why the summary metrics also show 'executorRunTime' as the duration. So the task table should show 'executorRunTime' as the duration, matching the behaviour of previous Spark versions. ## How was this patch tested? Before patch: (screenshot not preserved) After patch: (screenshot not preserved) Closes #23081 from shahidki31/duratinSummary. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 540afc2b18ef61cceb50b9a5b327e6fcdbe1e7e4) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 21 November 2018, 15:32:19 UTC |

| 0fb830c | Simeon Simeonov | 20 November 2018, 20:29:56 UTC | [SPARK-26084][SQL] Fixes unresolved AggregateExpression.references exception ## What changes were proposed in this pull request? This PR fixes an exception in `AggregateExpression.references` called on unresolved expressions. It implements the solution proposed in [SPARK-26084](https://issues.apache.org/jira/browse/SPARK-26084), a minor refactoring that removes the unnecessary dependence on `AttributeSet.toSeq`, which requires expression IDs and, therefore, can only execute successfully for resolved expressions. The refactored implementation is both simpler and faster, eliminating the conversion of a `Set` to a `Seq` and back to `Set`. ## How was this patch tested? Added a new test based on the failing case in [SPARK-26084](https://issues.apache.org/jira/browse/SPARK-26084). hvanhovell Closes #23075 from ssimeonov/ss_SPARK-26084. Authored-by: Simeon Simeonov <sim@fastignite.com> Signed-off-by: Herman van Hovell <hvanhovell@databricks.com> (cherry picked from commit db136d360e54e13f1d7071a0428964a202cf7e31) Signed-off-by: Herman van Hovell <hvanhovell@databricks.com> | 20 November 2018, 20:31:39 UTC |

| 90e4dd1 | Dongjoon Hyun | 17 November 2018, 10:18:41 UTC | [MINOR][SQL] Fix typo in CTAS plan database string ## What changes were proposed in this pull request? Since [Spark 1.6.0](https://github.com/apache/spark/commit/56d7da14ab8f89bf4f303b27f51fd22d23967ffb#diff-6f38a103058a6e233b7ad80718452387R96), there was a redundant '}' character in CTAS string plan's database argument string; `default}`. This PR aims to fix it. **BEFORE** ```scala scala> sc.version res1: String = 1.6.0 scala> sql("create table t as select 1").explain == Physical Plan == ExecutedCommand CreateTableAsSelect [Database:default}, TableName: t, InsertIntoHiveTable] +- Project [1 AS _c0#3] +- OneRowRelation$ ``` **AFTER** ```scala scala> sql("create table t as select 1").explain == Physical Plan == Execute CreateHiveTableAsSelectCommand CreateHiveTableAsSelectCommand [Database:default, TableName: t, InsertIntoHiveTable] +- *(1) Project [1 AS 1#4] +- Scan OneRowRelation[] ``` ## How was this patch tested? Manual. Closes #23064 from dongjoon-hyun/SPARK-FIX. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: hyukjinkwon <gurwls223@apache.org> (cherry picked from commit b538c442cb3982cc4c3aac812a7d4764209dfbb7) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 17 November 2018, 10:19:19 UTC |

| 550408e | Matt Molek | 16 November 2018, 16:00:21 UTC | [SPARK-25934][MESOS] Don't propagate SPARK_CONF_DIR from spark submit ## What changes were proposed in this pull request? Don't propagate SPARK_CONF_DIR to the driver in mesos cluster mode. ## How was this patch tested? I built the 2.3.2 tag with this patch added and deployed a test job to a mesos cluster to confirm that the incorrect SPARK_CONF_DIR was no longer passed from the submit command. Closes #22937 from mpmolek/fix-conf-dir. Authored-by: Matt Molek <mpmolek@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 696b75a81013ad61d25e0552df2b019c7531f983) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 16 November 2018, 16:01:00 UTC |

| 7a59618 | Shanyu Zhao | 15 November 2018, 16:30:16 UTC | [SPARK-26011][SPARK-SUBMIT] Yarn mode pyspark app without python main resource does not honor "spark.jars.packages" SparkSubmit determines pyspark app by the suffix of primary resource but Livy uses "spark-internal" as the primary resource when calling spark-submit, therefore args.isPython is set to false in SparkSubmit.scala. In Yarn mode, SparkSubmit module is responsible for resolving maven coordinates and adding them to "spark.submit.pyFiles" so that python's system path can be set correctly. The fix is to resolve maven coordinates not only when args.isPython is true, but also when primary resource is spark-internal. Tested the patch with Livy submitting pyspark app, spark-submit, pyspark with or without packages config. Signed-off-by: Shanyu Zhao <shzhaomicrosoft.com> Closes #23009 from shanyu/shanyu-26011. Authored-by: Shanyu Zhao <shzhao@microsoft.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 9a5fda60e532dc7203d21d5fbe385cd561906ccb) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 15 November 2018, 16:31:03 UTC |
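
The decision described above can be sketched as a small predicate. This is a simplified Python illustration, not the Scala code in `SparkSubmit`: the function names are hypothetical, and the suffix check is reduced to `.py` only, which is an assumption.

```python
SPARK_INTERNAL = "spark-internal"  # primary resource name used by Livy, per the commit


def is_python_resource(primary_resource):
    """Simplified stand-in for the suffix-based check (assumes '.py' only)."""
    return primary_resource.endswith(".py")


def should_resolve_packages_for_python(primary_resource):
    """Resolve maven coordinates into the Python path when the app is known
    to be Python, or when the primary resource is 'spark-internal' and the
    suffix check alone cannot tell."""
    return is_python_resource(primary_resource) or primary_resource == SPARK_INTERNAL


for resource in ["app.py", "spark-internal", "app.jar"]:
    print(resource, should_resolve_packages_for_python(resource))
```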

| 0c7d82b | Alex Hagerman | 03 November 2018, 17:56:59 UTC | [SPARK-25933][DOCUMENTATION] Fix pstats.Stats() reference in configuration.md ## What changes were proposed in this pull request? Change ptats.Stats() to pstats.Stats() for `spark.python.profile.dump` in configuration.md. ## How was this patch tested? Doc test Closes #22933 from AlexHagerman/doc_fix. Authored-by: Alex Hagerman <alex@unexpectedeof.net> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 1a7abf3f453f7d6012d7e842cf05f29f3afbb3bc) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 03 November 2018, 17:57:34 UTC |

| 49e1eb8 | Patrick Brown | 01 November 2018, 16:34:29 UTC | [SPARK-25837][CORE] Fix potential slowdown in AppStatusListener when cleaning up stages ## What changes were proposed in this pull request? * Update `AppStatusListener` `cleanupStages` method to remove tasks for those stages in a single pass instead of 1 for each stage. * This fixes an issue where the cleanupStages method would get backed up, causing a backup in the executor in ElementTrackingStore, resulting in stages and jobs not getting cleaned up properly. Tasks seem most susceptible to this as there are a lot of them, however a similar issue could arise in other locations the `KVStore` `view` method is used. A broader fix might involve updates to `KVStoreView` and `InMemoryView` as it appears this interface and implementation can lead to multiple and inefficient traversals of the stored data. ## How was this patch tested? Using existing tests in AppStatusListenerSuite This is my original work and I license the work to the project under the project’s open source license. Closes #22883 from patrickbrownsync/cleanup-stages-fix. Authored-by: Patrick Brown <patrick.brown@blyncsy.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit e9d3ca0b7993995f24f5c555a570bc2521119e12) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 01 November 2018, 16:38:21 UTC |
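
The difference between removing tasks once per stage and removing them in a single pass can be sketched in Python. The data layout (tasks as dictionaries keyed by a `(stage_id, attempt_id)` tuple) and both function names are assumptions made for illustration; the real code works against the `KVStore` API.

```python
def remove_tasks_per_stage(tasks, stage_keys):
    """One full traversal of the task list for every stage being cleaned:
    roughly len(stage_keys) * len(tasks) comparisons."""
    remaining = list(tasks)
    for key in stage_keys:
        remaining = [t for t in remaining if t["stage"] != key]
    return remaining


def remove_tasks_single_pass(tasks, stage_keys):
    """A single traversal with a set lookup per task: about len(tasks) steps."""
    keys = set(stage_keys)
    return [t for t in tasks if t["stage"] not in keys]


tasks = [{"id": i, "stage": (i % 4, 0)} for i in range(12)]
to_clean = [(0, 0), (1, 0)]
print(remove_tasks_single_pass(tasks, to_clean) == remove_tasks_per_stage(tasks, to_clean))
```

Both functions return the same surviving tasks; the single-pass version simply avoids re-walking the stored data once per stage, which matters most when there are many tasks.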

| 632c0d9 | Bruce Robbins | 29 October 2018, 05:44:58 UTC | [DOC] Fix doc for spark.sql.parquet.recordLevelFilter.enabled ## What changes were proposed in this pull request? Updated the doc string value for spark.sql.parquet.recordLevelFilter.enabled to indicate that spark.sql.parquet.enableVectorizedReader must be disabled. The code in ParquetFileFormat uses spark.sql.parquet.recordLevelFilter.enabled only after falling back to parquet-mr (see else for this if statement): https://github.com/apache/spark/blob/d5573c578a1eea9ee04886d9df37c7178e67bb30/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFileFormat.scala#L412 https://github.com/apache/spark/blob/d5573c578a1eea9ee04886d9df37c7178e67bb30/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFileFormat.scala#L427-L430 Tests also bear this out. ## How was this patch tested? This is just a doc string fix: I built Spark and ran a single test. Closes #22865 from bersprockets/confdocfix. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 4e990d9dd2407dc257712c4b12b507f0990ca4e9) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 29 October 2018, 05:46:09 UTC |

| 3e0160b | seancxmao | 29 October 2018, 04:27:22 UTC | [SPARK-25797][SQL][DOCS][BACKPORT-2.3] Add migration doc for solving issues caused by view canonicalization approach change ## What changes were proposed in this pull request? Since Spark 2.2, view definitions are stored in a different way from prior versions. This may cause Spark unable to read views created by prior versions. See [SPARK-25797](https://issues.apache.org/jira/browse/SPARK-25797) for more details. Basically, we have 2 options. 1) Make Spark 2.2+ able to get older view definitions back. Since the expanded text is buggy and unusable, we have to use original text (this is possible with [SPARK-25459](https://issues.apache.org/jira/browse/SPARK-25459)). However, because older Spark versions don't save the context for the database, we cannot always get correct view definitions without view default database. 2) Recreate the views by `ALTER VIEW AS` or `CREATE OR REPLACE VIEW AS`. This PR aims to add migration doc to help users troubleshoot this issue by above option 2. ## How was this patch tested? N/A. Docs are generated and checked locally ``` cd docs SKIP_API=1 jekyll serve --watch ``` Closes #22851 from seancxmao/SPARK-25797-2.3. Authored-by: seancxmao <seancxmao@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 29 October 2018, 04:27:22 UTC |

| 53aeb3d | Peter Toth | 29 October 2018, 00:51:35 UTC | [SPARK-25816][SQL] Fix attribute resolution in nested extractors Extractors are made of 2 expressions: one defines the value to extract from (called `child`) and the other defines the way of extraction (called `extraction`). In this sense extractors have 2 children, so they shouldn't be `UnaryExpression`s. `ResolveReferences` was changed in this commit: https://github.com/apache/spark/commit/36b826f5d17ae7be89135cb2c43ff797f9e7fe48 which resulted in a regression with nested extractors. An extractor needs to define its children as the set of both `child` and `extraction`, and should try to resolve both in `ResolveReferences`. This PR changes `UnresolvedExtractValue` to a `BinaryExpression`. Added UT. Closes #22817 from peter-toth/SPARK-25816. Authored-by: Peter Toth <peter.toth@gmail.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit ca2fca143277deaff58a69b7f1e0360cfc70561f) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 29 October 2018, 00:53:35 UTC |

| 3afb3a2 | shane knapp | 26 October 2018, 21:37:36 UTC | [SPARK-25854][BUILD] fix `build/mvn` not to fail during Zinc server shutdown the final line in the mvn helper script in build/ attempts to shut down the zinc server. due to the zinc server being set up w/a 30min timeout, by the time the mvn test instantiation finishes, the server times out. this means that when the mvn script tries to shut down zinc, it returns w/an exit code of 1. this will then automatically fail the entire build (even if the build passes). i set up a test build: https://amplab.cs.berkeley.edu/jenkins/job/sknapp-testing-spark-branch-2.4-test-maven-hadoop-2.7/ Closes #22854 from shaneknapp/fix-mvn-helper-script. Authored-by: shane knapp <incomplete@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 6aa506394958bfb30cd2a9085a5e8e8be927de51) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 26 October 2018, 21:40:56 UTC |

| 0a05cf9 | Shixiong Zhu | 26 October 2018, 04:53:51 UTC | [SPARK-25822][PYSPARK] Fix a race condition when releasing a Python worker ## What changes were proposed in this pull request? There is a race condition when releasing a Python worker. If `ReaderIterator.handleEndOfDataSection` is not running in the task thread, when a task is early terminated (such as `take(N)`), the task completion listener may close the worker but "handleEndOfDataSection" can still put the worker into the worker pool to reuse. https://github.com/zsxwing/spark/commit/0e07b483d2e7c68f3b5c3c118d0bf58c501041b7 is a patch to reproduce this issue. I also found a user reported this in the mail list: http://mail-archives.apache.org/mod_mbox/spark-user/201610.mbox/%3CCAAUq=H+YLUEpd23nwvq13Ms5hOStkhX3ao4f4zQV6sgO5zM-xAmail.gmail.com%3E This PR fixes the issue by using `compareAndSet` to make sure we will never return a closed worker to the work pool. ## How was this patch tested? Jenkins. Closes #22816 from zsxwing/fix-socket-closed. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: Takuya UESHIN <ueshin@databricks.com> (cherry picked from commit 86d469aeaa492c0642db09b27bb0879ead5d7166) Signed-off-by: Takuya UESHIN <ueshin@databricks.com> | 26 October 2018, 04:54:55 UTC |
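
The compare-and-set guard mentioned above can be sketched in Python. The commit names `compareAndSet` but does not show the code, so everything here is an assumption for illustration: the lock-based `AtomicBoolean`, the `WorkerHandle` class, and the `release_worker` and `close_worker` functions are hypothetical stand-ins.

```python
import threading


class AtomicBoolean:
    """Minimal lock-based stand-in for an atomic boolean (hypothetical)."""

    def __init__(self, value=False):
        self._value = value
        self._lock = threading.Lock()

    def compare_and_set(self, expected, new):
        # Atomically switch to `new` only if the current value is `expected`.
        with self._lock:
            if self._value == expected:
                self._value = new
                return True
            return False


class WorkerHandle:
    def __init__(self, name):
        self.name = name
        self.released_or_closed = AtomicBoolean(False)


def release_worker(handle, pool):
    """Return the worker to the pool only if nobody closed it first."""
    if handle.released_or_closed.compare_and_set(False, True):
        pool.append(handle)
        return True
    return False


def close_worker(handle, closed):
    """Close the worker only if it has not already been released."""
    if handle.released_or_closed.compare_and_set(False, True):
        closed.append(handle)
        return True
    return False


pool, closed = [], []
worker = WorkerHandle("w1")
print(close_worker(worker, closed))  # the completion listener wins the race
print(release_worker(worker, pool))  # the late release is rejected
```

Whichever of the two paths flips the flag first takes ownership of the worker, so a closed worker cannot end up back in the pool.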

| 8fbf3ee | Dongjoon Hyun | 22 October 2018, 23:34:33 UTC | [SPARK-25795][R][EXAMPLE] Fix CSV SparkR SQL Example ## What changes were proposed in this pull request? This PR aims to fix the following SparkR example in Spark 2.3.0 ~ 2.4.0. ```r > df <- read.df("examples/src/main/resources/people.csv", "csv") > namesAndAges <- select(df, "name", "age") ... Caused by: org.apache.spark.sql.AnalysisException: cannot resolve '`name`' given input columns: [_c0];; 'Project ['name, 'age] +- AnalysisBarrier +- Relation[_c0#97] csv ``` - https://dist.apache.org/repos/dist/dev/spark/v2.4.0-rc3-docs/_site/sql-programming-guide.html#manually-specifying-options - http://spark.apache.org/docs/2.3.2/sql-programming-guide.html#manually-specifying-options - http://spark.apache.org/docs/2.3.1/sql-programming-guide.html#manually-specifying-options - http://spark.apache.org/docs/2.3.0/sql-programming-guide.html#manually-specifying-options ## How was this patch tested? Manual test in SparkR. (Please note that `RSparkSQLExample.R` fails at the last JDBC example) ```r > df <- read.df("examples/src/main/resources/people.csv", "csv", sep=";", inferSchema=T, header=T) > namesAndAges <- select(df, "name", "age") ``` Closes #22791 from dongjoon-hyun/SPARK-25795. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 3b4556745e90a13f4ae7ebae4ab682617de25c38) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 22 October 2018, 23:35:05 UTC |

| d7a3587 | Wenchen Fan | 19 October 2018, 15:54:15 UTC | fix security issue of zinc (simpler version) | 22 October 2018, 04:22:10 UTC |

| 719ff7a | WeichenXu | 20 October 2018, 17:32:09 UTC | [DOC][MINOR] Fix minor error in the code of graphx guide ## What changes were proposed in this pull request? Fix minor error in the code "sketch of pregel implementation" of GraphX guide. This fixed error relates to `[SPARK-12995][GraphX] Remove deprecate APIs from Pregel` ## How was this patch tested? N/A Closes #22780 from WeichenXu123/minor_doc_update1. Authored-by: WeichenXu <weichen.xu@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 3b4f35f568eb3844d2a789c8a409bc705477df6b) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 20 October 2018, 17:32:45 UTC |

| 5cef11a | Wenchen Fan | 19 October 2018, 13:39:58 UTC | fix security issue of zinc | 19 October 2018, 13:39:58 UTC |

| 353d328 | Peter Toth | 19 October 2018, 13:17:14 UTC | [SPARK-25768][SQL] fix constant argument expecting UDAFs ## What changes were proposed in this pull request? Without this PR, some UDAFs like `GenericUDAFPercentileApprox` can throw an exception because they expect a constant parameter (object inspector) as a particular argument. The exception is thrown because the `toPrettySQL` call in the `ResolveAliases` analyzer rule transforms a `Literal` parameter to a `PrettyAttribute`, which is then transformed to an `ObjectInspector` instead of a `ConstantObjectInspector`. The exception comes from the `getEvaluator` method of `GenericUDAFPercentileApprox`, which shouldn't actually be called during the `toPrettySQL` transformation. It is called because of the non-lazy fields in `HiveUDAFFunction`. This PR makes all fields of `HiveUDAFFunction` lazy. ## How was this patch tested? Added a new UT. Closes #22766 from peter-toth/SPARK-25768. Authored-by: Peter Toth <peter.toth@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit f38594fc561208e17af80d17acf8da362b91fca4) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 19 October 2018, 13:18:36 UTC |

| 61b301c | Vladimir Kuriatkov | 18 October 2018, 21:46:03 UTC | [SPARK-21402][SQL][BACKPORT-2.3] Fix java array of structs deserialization This PR is to backport #22708 to branch 2.3. ## What changes were proposed in this pull request? The MapObjects expression is used to map array elements to Java beans. The struct type of the elements is inferred from the Java bean structure and ends up with a mixed-up field order. I used UnresolvedMapObjects instead of MapObjects, which allows the element type for MapObjects to be provided during analysis based on the resolved input data, not on the Java bean. ## How was this patch tested? Added a test case. Built complete project on travis. dongjoon-hyun cloud-fan Closes #22767 from vofque/SPARK-21402-2.3. Authored-by: Vladimir Kuriatkov <Vladimir_Kuriatkov@epam.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 18 October 2018, 21:46:03 UTC |

| 0726bc5 | gatorsmile | 16 October 2018, 00:58:29 UTC | [SPARK-25674][FOLLOW-UP] Update the stats for each ColumnarBatch This PR is a follow-up of https://github.com/apache/spark/pull/22594 . This alternative can avoid the unneeded computation in the hot code path. - For row-based scan, we keep the original way. - For the columnar scan, we just need to update the stats after each batch. N/A Closes #22731 from gatorsmile/udpateStatsFileScanRDD. Authored-by: gatorsmile <gatorsmile@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 4cee191c04f14d7272347e4b29201763c6cfb6bf) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 16 October 2018, 02:27:42 UTC |

| d87896b | gatorsmile | 16 October 2018, 01:46:17 UTC | [SPARK-25714][BACKPORT-2.3] Fix Null Handling in the Optimizer rule BooleanSimplification This PR is to backport https://github.com/apache/spark/pull/22702 to branch 2.3. --- ## What changes were proposed in this pull request? ```Scala val df1 = Seq(("abc", 1), (null, 3)).toDF("col1", "col2") df1.write.mode(SaveMode.Overwrite).parquet("/tmp/test1") val df2 = spark.read.parquet("/tmp/test1") df2.filter("col1 = 'abc' OR (col1 != 'abc' AND col2 == 3)").show() ``` Before the PR, it returns both rows. After the fix, it returns only `Row("abc", 1)`. This fixes a bug in NULL handling in BooleanSimplification that was introduced in the Spark 1.6 release. ## How was this patch tested? Added test cases Closes #22718 from gatorsmile/cherrypickSPARK-25714. Authored-by: gatorsmile <gatorsmile@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 16 October 2018, 01:46:17 UTC |
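
The NULL-handling problem described in this commit can be illustrated with a small three-valued-logic model. This is a sketch in plain Python, not Spark's optimizer code: `None` stands in for SQL NULL, and the rewrite `a OR (NOT a AND b)` to `a OR b` is an assumption about the kind of simplification that is unsafe when `a` can be NULL. All function names are hypothetical.

```python
# None models SQL NULL; True/False model the ordinary boolean values.

def sql_eq(x, y):
    """SQL equality: NULL if either operand is NULL."""
    return None if x is None or y is None else x == y

def sql_not(a):
    """SQL NOT: NULL stays NULL."""
    return None if a is None else not a

def sql_and(a, b):
    """SQL AND: False dominates, then NULL, then True."""
    if a is False or b is False:
        return False
    if a is None or b is None:
        return None
    return True

def sql_or(a, b):
    """SQL OR: True dominates, then NULL, then False."""
    if a is True or b is True:
        return True
    if a is None or b is None:
        return None
    return False

def original_predicate(col1, col2):
    # col1 = 'abc' OR (col1 != 'abc' AND col2 == 3)
    a = sql_eq(col1, "abc")
    return sql_or(a, sql_and(sql_not(a), sql_eq(col2, 3)))

def unsafe_rewrite(col1, col2):
    # Assumed simplification: a OR (NOT a AND b)  ->  a OR b
    return sql_or(sql_eq(col1, "abc"), sql_eq(col2, 3))

def keep(rows, predicate):
    """A filter keeps a row only when the predicate is exactly True."""
    return [row for row in rows if predicate(*row) is True]

ROWS = [("abc", 1), (None, 3)]
print(keep(ROWS, original_predicate))  # only the ("abc", 1) row survives
print(keep(ROWS, unsafe_rewrite))      # the NULL row is wrongly kept as well
```

For the row `(NULL, 3)` the original predicate evaluates to NULL, so the filter drops it, while the rewritten form evaluates to `NULL OR TRUE`, which is TRUE.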

| 1e15998 | Dongjoon Hyun | 14 October 2018, 01:01:28 UTC | [SPARK-25726][SQL][TEST] Fix flaky test in SaveIntoDataSourceCommandSuite ## What changes were proposed in this pull request? [SPARK-22479](https://github.com/apache/spark/pull/19708/files#diff-5c22ac5160d3c9d81225c5dd86265d27R31) adds a test case which sometimes fails because the used password string `123` matches `41230802`. This PR aims to fix the flakiness. - https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97343/consoleFull ```scala SaveIntoDataSourceCommandSuite: - simpleString is redacted *** FAILED *** "SaveIntoDataSourceCommand .org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider41230802, Map(password -> *********(redacted), url -> *********(redacted), driver -> mydriver), ErrorIfExists +- Range (0, 1, step=1, splits=Some(2)) " contained "123" (SaveIntoDataSourceCommandSuite.scala:42) ``` ## How was this patch tested? Pass the Jenkins with the updated test case Closes #22716 from dongjoon-hyun/SPARK-25726. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 6bbceb9fefe815d18001c6dd84f9ea2883d17a88) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 14 October 2018, 01:01:55 UTC |
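
The flakiness described above comes from asserting that a very short secret is absent from a string that also contains an unrelated, varying identifier. The sketch below reproduces that effect in plain Python. The `render_command` helper, its output format, and the example URL are hypothetical and only loosely modeled on the failure message quoted in the commit.

```python
REDACTED = "*********(redacted)"

def render_command(provider_id: str, options: dict) -> str:
    """Render a command string with sensitive options redacted (hypothetical format)."""
    shown = {
        key: (REDACTED if key in ("password", "url") else value)
        for key, value in options.items()
    }
    body = ", ".join(f"{key} -> {value}" for key, value in shown.items())
    return f"SaveIntoDataSourceCommand Provider@{provider_id}, Map({body})"

def leaks(rendered: str, secret: str) -> bool:
    """Naive check used by the test: does the secret appear anywhere in the text?"""
    return secret in rendered

OPTIONS = {"password": "123", "url": "jdbc:example", "driver": "mydriver"}

# The password is redacted in both cases, but an identifier that happens to
# contain "123" makes the naive check report a leak.
print(leaks(render_command("41230802", OPTIONS), "123"))
print(leaks(render_command("7a9f00c4", OPTIONS), "123"))
```

A secret that is long and distinctive enough not to collide with generated identifiers avoids this kind of false failure.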

| b3d1b1b | gatorsmile | 13 October 2018, 04:26:39 UTC | Revert "[SPARK-25714] Fix Null Handling in the Optimizer rule BooleanSimplification" This reverts commit 182bc85f2db0b3268b9b93ff91210811b00e1636. | 13 October 2018, 04:26:39 UTC |

| 182bc85 | gatorsmile | 13 October 2018, 04:02:38 UTC | [SPARK-25714] Fix Null Handling in the Optimizer rule BooleanSimplification ## What changes were proposed in this pull request? ```Scala val df1 = Seq(("abc", 1), (null, 3)).toDF("col1", "col2") df1.write.mode(SaveMode.Overwrite).parquet("/tmp/test1") val df2 = spark.read.parquet("/tmp/test1") df2.filter("col1 = 'abc' OR (col1 != 'abc' AND col2 == 3)").show() ``` Before the PR, it returns both rows. After the fix, it returns only `Row("abc", 1)`. This fixes a bug in NULL handling in BooleanSimplification that was introduced in the Spark 1.6 release. ## How was this patch tested? Added test cases Closes #22702 from gatorsmile/fixBooleanSimplify2. Authored-by: gatorsmile <gatorsmile@gmail.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit c9ba59d38e2be17b802156b49d374a726e66c6b9) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 13 October 2018, 04:03:20 UTC |

| 5324a85 | liuxian | 11 October 2018, 21:24:15 UTC | [SPARK-25674][SQL] If the records are incremented by more than 1 at a time, the number of bytes might rarely ever get updated ## What changes were proposed in this pull request? If the records are incremented by more than 1 at a time, the number of bytes might rarely ever get updated, because the count might skip over values that are an exact multiple of UPDATE_INPUT_METRICS_INTERVAL_RECORDS. This PR just checks whether the increment causes the value to exceed a higher multiple of UPDATE_INPUT_METRICS_INTERVAL_RECORDS. ## How was this patch tested? Existing unit tests. Closes #22594 from 10110346/inputMetrics. Authored-by: liuxian <liu.xian3@zte.com.cn> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 69f5e9cce14632a1f912c3632243a4e20b275365) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 11 October 2018, 21:24:58 UTC |
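
The difference between the two checks described above can be shown with a small simulation. This is a plain-Python sketch rather than Spark's implementation: the interval value of 1000, the batch size of 4096, and all function names are assumptions chosen for illustration.

```python
UPDATE_INTERVAL = 1000  # illustrative stand-in for UPDATE_INPUT_METRICS_INTERVAL_RECORDS

def exact_multiple_check(prev: int, new: int, interval: int = UPDATE_INTERVAL) -> bool:
    """Old-style check: fire only when the count lands exactly on a multiple."""
    return new % interval == 0

def crossed_multiple_check(prev: int, new: int, interval: int = UPDATE_INTERVAL) -> bool:
    """Fixed-style check: fire when the increment moves past a higher multiple."""
    return new // interval > prev // interval

def count_updates(batch_size: int, num_batches: int, check) -> int:
    """Count how often the byte metric would be refreshed while reading batches."""
    records = 0
    updates = 0
    for _ in range(num_batches):
        prev = records
        records += batch_size
        if check(prev, records):
            updates += 1
    return updates

# Row-at-a-time reading: both checks fire at 1000 and 2000 records.
print(count_updates(1, 2500, exact_multiple_check), count_updates(1, 2500, crossed_multiple_check))

# Batch reading in steps of 4096: the exact-multiple check never fires in ten
# batches, while the crossing check fires on every batch.
print(count_updates(4096, 10, exact_multiple_check), count_updates(4096, 10, crossed_multiple_check))
```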

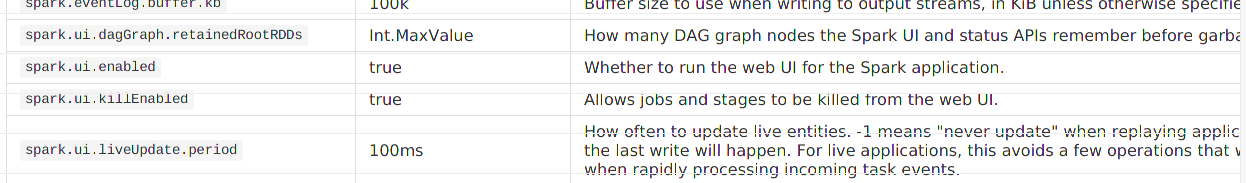

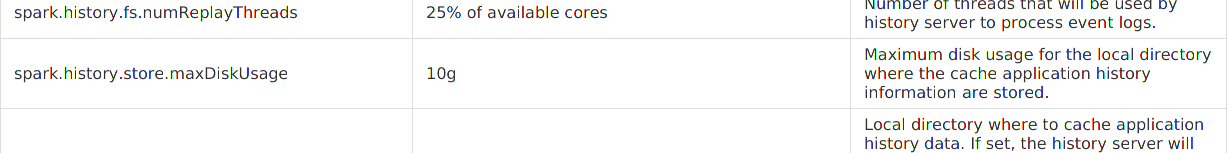

| 7102aee | Shahid | 03 October 2018, 11:10:59 UTC | [SPARK-25583][DOC][BRANCH-2.3] Add history-server related configuration in the documentation. ## What changes were proposed in this pull request? This is a follow-up PR to https://github.com/apache/spark/pull/22601. It adds history-server related configuration to the documentation for Spark 2.3. Some of the history-server related configurations were missing from the documentation, such as 'spark.history.store.maxDiskUsage' and 'spark.ui.liveUpdate.period'. ## How was this patch tested? Closes #22613 from shahidki31/SPARK-25583. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 03 October 2018, 11:10:59 UTC |

| 8d7723f | Darcy Shen | 30 September 2018, 14:00:23 UTC | [CORE][MINOR] Fix obvious error and compiling for Scala 2.12.7 ## What changes were proposed in this pull request? Fix an obvious error. ## How was this patch tested? Existing tests. Closes #22577 from sadhen/minor_fix. Authored-by: Darcy Shen <sadhen@zoho.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 40e6ed89405828ff312eca0abd43cfba4b9185b2) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 30 September 2018, 14:00:54 UTC |

| 73408f0 | Shixiong Zhu | 30 September 2018, 01:10:04 UTC | [SPARK-25568][CORE] Continue to update the remaining accumulators when failing to update one accumulator ## What changes were proposed in this pull request? Since we don't fail a job when `AccumulatorV2.merge` fails, we should try to update the remaining accumulators so that they can still report correct values. ## How was this patch tested? The new unit test. Closes #22586 from zsxwing/SPARK-25568. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit b6b8a6632e2b6e5482aaf4bfa093700752a9df80) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 30 September 2018, 01:10:58 UTC |
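
The behavior described above, continuing with the remaining accumulators when one merge fails, can be sketched as follows. This is a plain-Python illustration, not Spark's scheduler code: the classes, the `update_accumulators` helper, and the dictionary-based bookkeeping are all hypothetical.

```python
class SumAccumulator:
    """Minimal accumulator that adds merged values."""
    def __init__(self):
        self.value = 0

    def merge(self, update: int) -> None:
        self.value += update

class BrokenAccumulator:
    """Accumulator whose merge always fails, to simulate a faulty user implementation."""
    def __init__(self):
        self.value = 0

    def merge(self, update: int) -> None:
        raise RuntimeError("merge failed")

def update_accumulators(accumulators: dict, updates: dict) -> list:
    """Apply every update; record failures instead of aborting the whole loop."""
    failed = []
    for name, update in updates.items():
        try:
            accumulators[name].merge(update)
        except Exception:
            # One bad accumulator must not prevent the others from being updated.
            failed.append(name)
    return failed

accs = {"a": SumAccumulator(), "bad": BrokenAccumulator(), "c": SumAccumulator()}
failed = update_accumulators(accs, {"a": 1, "bad": 2, "c": 3})
print(failed, accs["a"].value, accs["c"].value)
```

Placing the `try` inside the loop, rather than around it, is what lets the accumulator after the failing one still report its value.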

| eb78380 | Dongjoon Hyun | 29 September 2018, 03:43:58 UTC | [SPARK-25570][SQL][TEST] Replace 2.3.1 with 2.3.2 in HiveExternalCatalogVersionsSuite ## What changes were proposed in this pull request? This PR aims to prevent test slowdowns at `HiveExternalCatalogVersionsSuite` by using the latest Apache Spark 2.3.2 link because the Apache mirrors will remove the old Spark 2.3.1 binaries eventually. `HiveExternalCatalogVersionsSuite` will not fail because [SPARK-24813](https://issues.apache.org/jira/browse/SPARK-24813) implements a fallback logic. However, it will cause many trials and fallbacks in all builds over `branch-2.3/branch-2.4/master`. We had better fix this issue. ## How was this patch tested? Pass the Jenkins with the updated version. Closes #22587 from dongjoon-hyun/SPARK-25570. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: hyukjinkwon <gurwls223@apache.org> (cherry picked from commit 1e437835e96c4417117f44c29eba5ebc0112926f) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 29 September 2018, 03:44:27 UTC |

| f13565b | Shahid | 26 September 2018, 17:47:49 UTC | [SPARK-25533][CORE][WEBUI] AppSummary should hold the information about succeeded Jobs and completed stages only Currently, in the Spark UI, when there are failed jobs or failed stages, the display messages for completed jobs and completed stages are not consistent with previous versions of Spark. The reason is that AppSummary holds information about all jobs and stages, but the code linked below checks against completedJobs and completedStages. So AppSummary should hold only successful jobs and stages. https://github.com/apache/spark/blob/66d29870c09e6050dd846336e596faaa8b0d14ad/core/src/main/scala/org/apache/spark/ui/jobs/AllJobsPage.scala#L306 https://github.com/apache/spark/blob/66d29870c09e6050dd846336e596faaa8b0d14ad/core/src/main/scala/org/apache/spark/ui/jobs/AllStagesPage.scala#L119 So we should keep only completed job and stage information in AppSummary, to make it consistent with Spark 2.2. Test steps: bin/spark-shell ``` sc.parallelize(1 to 5, 5).collect() sc.parallelize(1 to 5, 2).map{ x => throw new RuntimeException("Fail")}.collect() ``` **Before fix:** **After fix:** Closes #22549 from shahidki31/SPARK-25533. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit 5ee21661834e837d414bc20591982a092c0aece3) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 27 September 2018, 17:34:21 UTC |

| f40e4c7 | Shahid | 27 September 2018, 04:10:39 UTC | [SPARK-25536][CORE] metric value for METRIC_OUTPUT_RECORDS_WRITTEN is incorrect ## What changes were proposed in this pull request? changed metric value of METRIC_OUTPUT_RECORDS_WRITTEN from 'task.metrics.inputMetrics.recordsRead' to 'task.metrics.outputMetrics.recordsWritten'. This bug was introduced in SPARK-22190. https://github.com/apache/spark/pull/19426 ## How was this patch tested? Existing tests Closes #22555 from shahidki31/SPARK-25536. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 5def10e61e49dba85f4d8b39c92bda15137990a2) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 September 2018, 04:15:37 UTC |

| 26d893a | Wenchen Fan | 27 September 2018, 00:47:05 UTC | [SPARK-25454][SQL] add a new config for picking minimum precision for integral literals ## What changes were proposed in this pull request? https://github.com/apache/spark/pull/20023 proposed to allow precision loss during decimal operations, to reduce the possibility of overflow. This is a behavior change and is protected by the DECIMAL_OPERATIONS_ALLOW_PREC_LOSS config. However, that PR introduced another behavior change: picking a minimum precision for integral literals, which is not protected by a config. This PR adds a new config for it: `spark.sql.literal.pickMinimumPrecision`. This allows users to work around the issue in SPARK-25454, which is caused by a long-standing bug of negative scale. ## How was this patch tested? A new test. Closes #22494 from cloud-fan/decimal. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit d0990e3dfee752a6460a6360e1a773138364d774) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 27 September 2018, 00:47:47 UTC |