https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.4.3

- refs/tags/v3.4.3-rc1

- refs/tags/v3.4.3-rc2

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2

- refs/tags/v4.0.0-preview1

- refs/tags/v4.0.0-preview1-rc1

- refs/tags/v4.0.0-preview1-rc2

- refs/tags/v4.0.0-preview1-rc3


| Revision | Author | Date | Message | Commit Date |

|---|---|---|---|---|

| 7955b39 | Dongjoon Hyun | 27 August 2019, 19:51:56 UTC | Preparing Spark release v2.4.4-rc3 | 27 August 2019, 19:51:56 UTC |

| c4bb486 | HyukjinKwon | 27 August 2019, 19:06:05 UTC | [SPARK-27992][SPARK-28881][PYTHON][2.4] Allow Python to join with connection thread to propagate errors ### What changes were proposed in this pull request? This PR proposes to backport https://github.com/apache/spark/pull/24834 with minimised changes, and the tests added at https://github.com/apache/spark/pull/25594. https://github.com/apache/spark/pull/24834 was not backported before because basically it targeted a better exception by propagating the exception from JVM. However, actually this PR fixed another problem accidentally (see https://github.com/apache/spark/pull/25594 and [SPARK-28881](https://issues.apache.org/jira/browse/SPARK-28881)). This regression seems introduced by https://github.com/apache/spark/pull/21546. Root cause is that, seems https://github.com/apache/spark/blob/23bed0d3c08e03085d3f0c3a7d457eedd30bd67f/sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala#L3370-L3384 `runJob` with `resultHandler` seems able to write partial output. JVM throws an exception but, since the JVM exception is not propagated into Python process, Python process doesn't know if the exception is thrown or not from JVM (it just closes the socket), which results as below: ``` ./bin/pyspark --conf spark.driver.maxResultSize=1m ``` ```python spark.conf.set("spark.sql.execution.arrow.enabled",True) spark.range(10000000).toPandas() ``` ``` Empty DataFrame Columns: [id] Index: [] ``` With this change, it lets Python process catches exceptions from JVM. ### Why are the changes needed? It returns incorrect data. And potentially it returns partial results when an exception happens in JVM sides. This is a regression. The codes work fine in Spark 2.3.3. ### Does this PR introduce any user-facing change? Yes. ``` ./bin/pyspark --conf spark.driver.maxResultSize=1m ``` ```python spark.conf.set("spark.sql.execution.arrow.enabled",True) spark.range(10000000).toPandas() ``` ``` Traceback (most recent call last): File "<stdin>", line 1, in <module> File "/.../pyspark/sql/dataframe.py", line 2122, in toPandas batches = self._collectAsArrow() File "/.../pyspark/sql/dataframe.py", line 2184, in _collectAsArrow jsocket_auth_server.getResult() # Join serving thread and raise any exceptions File "/.../lib/py4j-0.10.7-src.zip/py4j/java_gateway.py", line 1257, in __call__ File "/.../pyspark/sql/utils.py", line 63, in deco return f(*a, **kw) File "/.../lib/py4j-0.10.7-src.zip/py4j/protocol.py", line 328, in get_return_value py4j.protocol.Py4JJavaError: An error occurred while calling o42.getResult. : org.apache.spark.SparkException: Exception thrown in awaitResult: ... Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Total size of serialized results of 1 tasks (6.5 MB) is bigger than spark.driver.maxResultSize (1024.0 KB) ``` now throws an exception as expected. ### How was this patch tested? Manually as described above. unittest added. Closes #25593 from HyukjinKwon/SPARK-27992. Lead-authored-by: HyukjinKwon <gurwls223@apache.org> Co-authored-by: Bryan Cutler <cutlerb@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 27 August 2019, 19:06:05 UTC |

| 0d0686e | Yuming Wang | 26 August 2019, 06:12:16 UTC | [SPARK-28642][SQL][TEST][FOLLOW-UP] Test spark.sql.redaction.options.regex with and without default values ### What changes were proposed in this pull request? Test `spark.sql.redaction.options.regex` with and without default values. ### Why are the changes needed? Normally, we do not rely on the default value of `spark.sql.redaction.options.regex`. ### Does this PR introduce any user-facing change? No ### How was this patch tested? N/A Closes #25579 from wangyum/SPARK-28642-f1. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Xiao Li <gatorsmile@gmail.com> (cherry picked from commit c353a84d1a991797f255ec312e5935438727536c) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 27 August 2019, 17:21:58 UTC |

| 2c13dc9 | cyq89051127 | 27 August 2019, 13:13:39 UTC | [SPARK-28871][MINOR][DOCS] WaterMark doc fix ### What changes were proposed in this pull request? The code style in the 'Policy for handling multiple watermarks' in structured-streaming-programming-guide.md ### Why are the changes needed? Making it look friendly to user. ### Does this PR introduce any user-facing change? NO ### How was this patch tested? cd docs SKIP_API=1 jekyll build Closes #25580 from cyq89051127/master. Authored-by: cyq89051127 <chaiyq@asiainfo.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 4cf81285dac0a4e901179a44bfa62cb51e33bee6) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 27 August 2019, 17:14:49 UTC |

| 3f2eea3 | Dongjoon Hyun | 25 August 2019, 23:05:03 UTC | Preparing development version 2.4.5-SNAPSHOT | 25 August 2019, 23:05:03 UTC |

| b7a15b6 | Dongjoon Hyun | 25 August 2019, 23:04:49 UTC | Preparing Spark release v2.4.4-rc2 | 25 August 2019, 23:04:49 UTC |

| e66f9d5 | Dongjoon Hyun | 25 August 2019, 22:38:41 UTC | [SPARK-28868][INFRA] Specify Jekyll version to 3.8.6 in release docker image ### What changes were proposed in this pull request? This PR aims to specify Jekyll Version explicitly in our release docker image. ### Why are the changes needed? Recently, Jekyll 4.0 is released and it dropped Ruby 2.3 support. This breaks our release docker image build. ``` Building native extensions. This could take a while... ERROR: Error installing jekyll: jekyll-sass-converter requires Ruby version >= 2.4.0. ``` ### Does this PR introduce any user-facing change? No. ### How was this patch tested? The following should succeed. ``` $ docker build -t spark-rm:test --build-arg UID=501 dev/create-release/spark-rm ... Successfully tagged spark-rm:test ``` Closes #25578 from dongjoon-hyun/SPARK-28868. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 6214b6a5410f1080aebed8b869498a689e978785) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 25 August 2019, 22:38:58 UTC |

| 0a5efc3 | Jungtaek Lim (HeartSaVioR) | 23 August 2019, 14:40:07 UTC | [SPARK-28025][SS][2.4] Fix FileContextBasedCheckpointFileManager leaking crc files ### What changes were proposed in this pull request? This PR fixes the leak of crc files from CheckpointFileManager when FileContextBasedCheckpointFileManager is being used. Spark hits the Hadoop bug, [HADOOP-16255](https://issues.apache.org/jira/browse/HADOOP-16255) which seems to be a long-standing issue. This is there're two `renameInternal` methods: ``` public void renameInternal(Path src, Path dst) public void renameInternal(final Path src, final Path dst, boolean overwrite) ``` which should be overridden to handle all cases but ChecksumFs only overrides method with 2 params, so when latter is called FilterFs.renameInternal(...) is called instead, and it will do rename with RawLocalFs as underlying filesystem. The bug is related to FileContext, so FileSystemBasedCheckpointFileManager is not affected. [SPARK-17475](https://issues.apache.org/jira/browse/SPARK-17475) took a workaround for this bug, but [SPARK-23966](https://issues.apache.org/jira/browse/SPARK-23966) seemed to bring regression. This PR deletes crc file as "best-effort" when renaming, as failing to delete crc file is not that critical to fail the task. ### Why are the changes needed? This PR prevents crc files not being cleaned up even purging batches. Too many files in same directory often hurts performance, as well as each crc file occupies more space than its own size so possible to occupy nontrivial amount of space when batches go up to 100000+. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Some unit tests are modified to check leakage of crc files. Closes #25565 from HeartSaVioR/SPARK-28025-branch-2.4. Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 23 August 2019, 14:40:07 UTC |

| b913abd | Eyal Zituny | 23 August 2019, 14:37:48 UTC | [SPARK-27330][SS][2.4] support task abort in foreach writer ### What changes were proposed in this pull request? in order to address cases where foreach writer task is failing without calling the close() method, (for example when a task is interrupted) abort() method will be called when the task is aborted. the abort will call writer.close() ### How was this patch tested? update existing unit tests. Closes #25562 from eyalzit/SPARK-27330-foreach-writer-abort-2.4. Authored-by: Eyal Zituny <eyal.zituny@equalum.io> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 23 August 2019, 14:37:48 UTC |

| 0415d9d | Yuming Wang | 23 August 2019, 07:25:47 UTC | [SPARK-28642][SQL][2.4] Hide credentials in show create table This is a Spark branch-2.4 backport of #25375. Original description follows below: ## What changes were proposed in this pull request? [SPARK-17783](https://issues.apache.org/jira/browse/SPARK-17783) hided Credentials in `CREATE` and `DESC FORMATTED/EXTENDED` a PERSISTENT/TEMP Table for JDBC. But `SHOW CREATE TABLE` exposed the credentials: ```sql spark-sql> show create table mysql_federated_sample; CREATE TABLE `mysql_federated_sample` (`TBL_ID` BIGINT, `CREATE_TIME` INT, `DB_ID` BIGINT, `LAST_ACCESS_TIME` INT, `OWNER` STRING, `RETENTION` INT, `SD_ID` BIGINT, `TBL_NAME` STRING, `TBL_TYPE` STRING, `VIEW_EXPANDED_TEXT` STRING, `VIEW_ORIGINAL_TEXT` STRING, `IS_REWRITE_ENABLED` BOOLEAN) USING org.apache.spark.sql.jdbc OPTIONS ( `url` 'jdbc:mysql://localhost/hive?user=root&password=mypasswd', `driver` 'com.mysql.jdbc.Driver', `dbtable` 'TBLS' ) ``` This pr fix this issue. ## How was this patch tested? unit tests and manual tests: ```sql spark-sql> show create table mysql_federated_sample; CREATE TABLE `mysql_federated_sample` (`TBL_ID` BIGINT, `CREATE_TIME` INT, `DB_ID` BIGINT, `LAST_ACCESS_TIME` INT, `OWNER` STRING, `RETENTION` INT, `SD_ID` BIGINT, `TBL_NAME` STRING, `TBL_TYPE` STRING, `VIEW_EXPANDED_TEXT` STRING, `VIEW_ORIGINAL_TEXT` STRING, `IS_REWRITE_ENABLED` BOOLEAN) USING org.apache.spark.sql.jdbc OPTIONS ( `url` '*********(redacted)', `driver` 'com.mysql.jdbc.Driver', `dbtable` 'TBLS' ) ``` Closes #25560 from wangyum/SPARK-28642-branch-2.4. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 23 August 2019, 07:25:47 UTC |

| e468576 | Yuanjian Li | 22 August 2019, 07:47:31 UTC | [SPARK-28699][CORE][2.4] Fix a corner case for aborting indeterminate stage ### What changes were proposed in this pull request? Change the logic of collecting the indeterminate stage, we should look at stages from mapStage, not failedStage during handle FetchFailed. ### Why are the changes needed? In the fetch failed error handle logic, the original logic of collecting indeterminate stage from the fetch failed stage. And in the scenario of the fetch failed happened in the first task of this stage, this logic will cause the indeterminate stage to resubmit partially. Eventually, we are capable of getting correctness bug. ### Does this PR introduce any user-facing change? It makes the corner case of indeterminate stage abort as expected. ### How was this patch tested? New UT in DAGSchedulerSuite. Run below integrated test with `local-cluster[5, 2, 5120]`, and set `spark.sql.execution.sortBeforeRepartition`=false, it will abort the indeterminate stage as expected: ```scala import scala.sys.process._ import org.apache.spark.TaskContext val res = spark.range(0, 10000 * 10000, 1).map{ x => (x % 1000, x)} // kill an executor in the stage that performs repartition(239) val df = res.repartition(113).map{ x => (x._1 + 1, x._2)}.repartition(239).map { x => if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 1 && TaskContext.get.stageAttemptNumber == 0) { throw new Exception("pkill -f -n java".!!) } x } val r2 = df.distinct.count() ``` Closes #25509 from xuanyuanking/SPARK-28699-backport-2.4. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 22 August 2019, 07:47:31 UTC |

| 001e32a | zhengruifeng | 22 August 2019, 07:15:46 UTC | [SPARK-28780][ML][2.4] deprecate LinearSVCModel.setWeightCol ### What changes were proposed in this pull request? deprecate `LinearSVCModel.setWeightCol`, and make it a no-op ### Why are the changes needed? `LinearSVCModel` should not provide this setter, moreover, this method is wrongly defined. ### Does this PR introduce any user-facing change? no, this method is only deprecated ### How was this patch tested? existing suites Closes #25547 from zhengruifeng/svc_model_deprecate_setWeight_2.4. Authored-by: zhengruifeng <ruifengz@foxmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 22 August 2019, 07:15:46 UTC |

| ff9339d | triplesheep | 22 August 2019, 07:07:40 UTC | [SPARK-28844][SQL] Fix typo in SQLConf FILE_COMRESSION_FACTOR Fix minor typo in SQLConf. `FILE_COMRESSION_FACTOR` -> `FILE_COMPRESSION_FACTOR` Make conf more understandable. No. (`spark.sql.sources.fileCompressionFactor` is unchanged.) Pass the Jenkins with the existing tests. Closes #25538 from triplesheep/TYPO-FIX. Authored-by: triplesheep <triplesheep0419@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 48578a41b50308185c7eefd6e562bd0f6575a921) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 22 August 2019, 07:09:11 UTC |

| 21bba9c | Yuanjian Li | 21 August 2019, 17:56:50 UTC | [SPARK-28699][SQL] Disable using radix sort for ShuffleExchangeExec in repartition case ## What changes were proposed in this pull request? Disable using radix sort in ShuffleExchangeExec when we do repartition. In #20393, we fixed the indeterministic result in the shuffle repartition case by performing a local sort before repartitioning. But for the newly added sort operation, we use radix sort which is wrong because binary data can't be compared by only the prefix. This makes the sort unstable and fails to solve the indeterminate shuffle output problem. ### Why are the changes needed? Fix the correctness bug caused by repartition after a shuffle. ### Does this PR introduce any user-facing change? Yes, user will get the right result in the case of repartition stage rerun. ## How was this patch tested? Test with `local-cluster[5, 2, 5120]`, use the integrated test below, it can return a right answer 100000000. ``` import scala.sys.process._ import org.apache.spark.TaskContext val res = spark.range(0, 10000 * 10000, 1).map{ x => (x % 1000, x)} // kill an executor in the stage that performs repartition(239) val df = res.repartition(113).map{ x => (x._1 + 1, x._2)}.repartition(239).map { x => if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 1 && TaskContext.get.stageAttemptNumber == 0) { throw new Exception("pkill -f -n java".!!) } x } val r2 = df.distinct.count() ``` Closes #25491 from xuanyuanking/SPARK-28699-fix. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 2d9cc42aa83beb5952bb44d3cd0327d4432d385e) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 21 August 2019, 17:57:05 UTC |

| fd2fe15 | Alessandro Bellina | 21 August 2019, 15:21:55 UTC | [SPARK-26895][CORE][2.4] prepareSubmitEnvironment should be called within doAs for proxy users This also includes the follow-up fix by Hyukjin Kwon: [SPARK-26895][CORE][FOLLOW-UP] Uninitializing log after `prepareSubmitEnvironment` in SparkSubmit `prepareSubmitEnvironment` performs globbing that will fail in the case where a proxy user (`--proxy-user`) doesn't have permission to the file. This is a bug also with 2.3, so we should backport, as currently you can't launch an application that for instance is passing a file under `--archives`, and that file is owned by the target user. The solution is to call `prepareSubmitEnvironment` within a doAs context if proxying. Manual tests running with `--proxy-user` and `--archives`, before and after, showing that the globbing is successful when the resource is owned by the target user. I've looked at writing unit tests, but I am not sure I can do that cleanly (perhaps with a custom FileSystem). Open to ideas. Closes #25516 from vanzin/SPARK-26895-2.4. Authored-by: Alessandro Bellina <abellina@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 21 August 2019, 15:21:55 UTC |

| aff5e2b | Jungtaek Lim (HeartSaVioR) | 20 August 2019, 07:56:53 UTC | [SPARK-28650][SS][DOC] Correct explanation of guarantee for ForeachWriter # What changes were proposed in this pull request? This patch modifies the explanation of guarantee for ForeachWriter as it doesn't guarantee same output for `(partitionId, epochId)`. Refer the description of [SPARK-28650](https://issues.apache.org/jira/browse/SPARK-28650) for more details. Spark itself still guarantees same output for same epochId (batch) if the preconditions are met, 1) source is always providing the same input records for same offset request. 2) the query is idempotent in overall (indeterministic calculation like now(), random() can break this). Assuming breaking preconditions as an exceptional case (the preconditions are implicitly required even before), we still can describe the guarantee with `epochId`, though it will be harder to leverage the guarantee: 1) ForeachWriter should implement a feature to track whether all the partitions are written successfully for given `epochId` 2) There's pretty less chance to leverage the fact, as the chance for Spark to successfully write all partitions and fail to checkpoint the batch is small. Credit to zsxwing on discovering the broken guarantee. ## How was this patch tested? This is just a documentation change, both on javadoc and guide doc. Closes #25407 from HeartSaVioR/SPARK-28650. Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com> Signed-off-by: Shixiong Zhu <zsxwing@gmail.com> (cherry picked from commit b37c8d5cea2e31e7821d848e42277f8fb7b68f30) Signed-off-by: Shixiong Zhu <zsxwing@gmail.com> | 20 August 2019, 07:58:08 UTC |

| 75076ff | darrentirto | 20 August 2019, 03:44:46 UTC | [SPARK-28777][PYTHON][DOCS] Fix format_string doc string with the correct parameters ### What changes were proposed in this pull request? The parameters doc string of the function format_string was changed from _col_, _d_ to _format_, _cols_ which is what the actual function declaration states ### Why are the changes needed? The parameters stated by the documentation was inaccurate ### Does this PR introduce any user-facing change? Yes. **BEFORE**  **AFTER**  ### How was this patch tested? N/A: documentation only <!-- If tests were added, say they were added here. Please make sure to add some test cases that check the changes thoroughly including negative and positive cases if possible. If it was tested in a way different from regular unit tests, please clarify how you tested step by step, ideally copy and paste-able, so that other reviewers can test and check, and descendants can verify in the future. If tests were not added, please describe why they were not added and/or why it was difficult to add. --> Closes #25506 from darrentirto/SPARK-28777. Authored-by: darrentirto <darrentirto@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit a787bc28840eafae53a08137a53ea56500bfd675) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 20 August 2019, 03:45:08 UTC |

| 7e4825c | Sean Owen | 20 August 2019, 00:54:25 UTC | [SPARK-28775][CORE][TESTS] Skip date 8633 in Kwajalein due to changes in tzdata2018i that only some JDK 8s use ### What changes were proposed in this pull request? Some newer JDKs use the tzdata2018i database, which changes how certain (obscure) historical dates and timezones are handled. As previously, we can pretty much safely ignore these in tests, as the value may vary by JDK. ### Why are the changes needed? Test otherwise fails using, for example, JDK 1.8.0_222. https://bugs.openjdk.java.net/browse/JDK-8215982 has a full list of JDKs which has this. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Existing tests Closes #25504 from srowen/SPARK-28775. Authored-by: Sean Owen <sean.owen@databricks.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 3b4e345fa1afa0d4004988f8800b63150c305fd4) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 20 August 2019, 00:54:49 UTC |

| 154b325 | Matt Foley | 19 August 2019, 23:45:30 UTC | [SPARK-28749][TEST][BRANCH-2.4] Fix PySpark tests not to require kafka-0-8 ### What changes were proposed in this pull request? Simple fix of https://issues.apache.org/jira/browse/SPARK-28749 ### Why are the changes needed? As discussed in the referenced Jira, currently, the PySpark tests invoked by `python/run-tests` demand the presence of kafka-0-8 libraries. If not present, a failure message will be generated regardless of whether the tests are enabled by env variables. Since Kafka-0-8 libraries are not compatible with Scala-2.12, this means we can’t successfully run pyspark tests on a Scala-2.12 build. These proposed changes fix the problem. ### Does this PR introduce any user-facing change? No. It only changes a test behavior. ### How was this patch tested? This is a fix of a test bug. The current behavior is demonstrably wrong under Scala-2.12, as stated above. The corrected behavior allows the tests to run to completion, with results that are consistent with the expected results, similar to the successful Scala-2.11 results. We performed the following: - Full mvn build under Scala-2.11, with kafka-0-8 profile - Full mvn build under Scala-2.12, without kafka-0-8 profile - Full maven Unit Test of both, with no change (as expected) - PySpark tests via `python/run-tests` with both. Both complete successfully. Former behavior before this patch was that the last step would post a Failure on the Scala-2.12 build. ### Notes for reviewers There are of course many ways to fix the problem. I chose to follow the pattern established by the Kinesis testing routines that were right “next to” the Kafka-0-8 test routines in the same file. They showed a presumably acceptable way of dealing with missing jars. I ignored the env variable, because the presence or absence of the jar seemed a sufficient and more important determinant. But if you want me to use the env variable exactly the same way as the Kinesis testing code, I’ll be happy to do so. Closes #25482 from mattf-apache/branch-2.4. Lead-authored-by: Matt Foley <mattf@apache.org> Co-authored-by: Matt Foley <matthew_foley@apple.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> | 19 August 2019, 23:45:30 UTC |

| 5a558a4 | Dongjoon Hyun | 19 August 2019, 07:11:44 UTC | Preparing development version 2.4.5-SNAPSHOT | 19 August 2019, 07:11:44 UTC |

| 13f2465 | Dongjoon Hyun | 19 August 2019, 07:11:32 UTC | Preparing Spark release v2.4.4-rc1 | 19 August 2019, 07:11:32 UTC |

| 73032a0 | Dongjoon Hyun | 18 August 2019, 14:35:06 UTC | Revert "[SPARK-25474][SQL][2.4] Support `spark.sql.statistics.fallBackToHdfs` in data source tables" This reverts commit 5f4feeb0ae236cc2b4cff80889bf23c8e017d9d4. | 18 August 2019, 14:35:06 UTC |

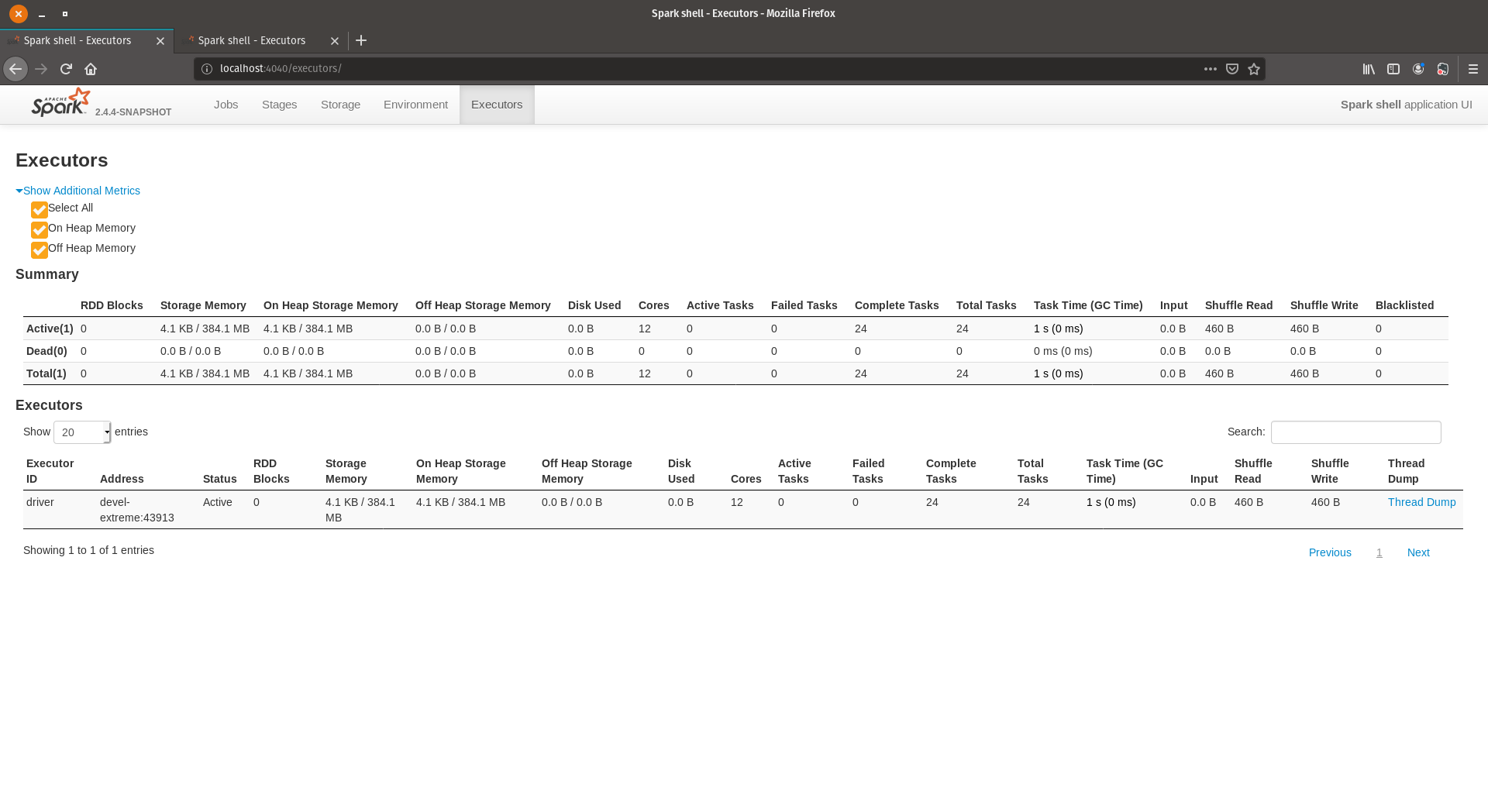

| b98a372 | Kousuke Saruta | 18 August 2019, 14:11:18 UTC | [SPARK-28647][WEBUI][2.4] Recover additional metric feature This PR is for backporting SPARK-28647(#25374) to branch-2.4. The original PR removed `additional-metrics.js` but branch-2.4 still uses it so I don't remove it and related things for branch-2.4. ### What changes were proposed in this pull request? Added checkboxes to enable users to select which optional metrics (`On Heap Memory`, `Off Heap Memory` and `Select All` in this case) to be shown in `ExecuorPage`. ### Why are the changes needed? By SPARK-17019, `On Heap Memory` and `Off Heap Memory` are introduced as optional metrics. But they are not displayed because they are made `display: none` in css and there are no way to appear them. ### Does this PR introduce any user-facing change? The previous `ExecutorPage` doesn't show optional metrics. This change adds checkboxes to `ExecutorPage` for optional metrics. We can choose which metrics should be shown by checking corresponding checkboxes.  ### How was this patch tested? Manual test. Closes #25484 from sarutak/backport-SPARK-28647-branch-2.4. Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 18 August 2019, 14:11:18 UTC |

| 0246f48 | Dongjoon Hyun | 17 August 2019, 18:11:36 UTC | [SPARK-28766][R][DOC] Fix CRAN incoming feasibility warning on invalid URL ### What changes were proposed in this pull request? This updates an URL in R doc to fix `Had CRAN check errors; see logs`. ### Why are the changes needed? Currently, this invalid link causes a warning during CRAN incoming feasibility. We had better fix this before submitting `3.0.0/2.4.4/2.3.4`. **BEFORE** ``` * checking CRAN incoming feasibility ... NOTE Maintainer: ‘Shivaram Venkataraman <shivaramcs.berkeley.edu>’ Found the following (possibly) invalid URLs: URL: https://wiki.apache.org/hadoop/HCFS (moved to https://cwiki.apache.org/confluence/display/hadoop/HCFS) From: man/spark.addFile.Rd Status: 404 Message: Not Found ``` **AFTER** ``` * checking CRAN incoming feasibility ... Note_to_CRAN_maintainers Maintainer: ‘Shivaram Venkataraman <shivaramcs.berkeley.edu>’ ``` ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Check the warning message during R testing. ``` $ R/install-dev.sh $ R/run-tests.sh ``` Closes #25483 from dongjoon-hyun/SPARK-28766. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 5756a47a9fafca2d0b31de2b2374429f73b6e5e2) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 17 August 2019, 18:11:51 UTC |

| 97471bd | Dongjoon Hyun | 15 August 2019, 18:22:57 UTC | [MINOR][DOC] Use `Java 8` instead of `Java 8+` as a running environment After Apache Spark 3.0.0 supports JDK11 officially, people will try JDK11 on old Spark releases (especially 2.4.4/2.3.4) in the same way because our document says `Java 8+`. We had better avoid that misleading situation. This PR aims to remove `+` from `Java 8+` in the documentation (master/2.4/2.3). Especially, 2.4.4 release and 2.3.4 release (cc kiszk ) On master branch, we will add JDK11 after [SPARK-24417.](https://issues.apache.org/jira/browse/SPARK-24417) This is a documentation only change. <img width="923" alt="java8" src="https://user-images.githubusercontent.com/9700541/63116589-e1504800-bf4e-11e9-8904-b160ec7a42c0.png"> Closes #25466 from dongjoon-hyun/SPARK-DOC-JDK8. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 123eb58d61ad7c7ebe540f1634088696a3cc85bc) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 15 August 2019, 18:24:02 UTC |

| 5a06584 | HyukjinKwon | 15 August 2019, 04:36:20 UTC | [SPARK-27234][SS][PYTHON][BRANCH-2.4] Use InheritableThreadLocal for current epoch in EpochTracker (to support Python UDFs) ## What changes were proposed in this pull request? This PR proposes to use `InheritableThreadLocal` instead of `ThreadLocal` for current epoch in `EpochTracker`. Python UDF needs threads to write out to and read it from Python processes and when there are new threads, previously set epoch is lost. After this PR, Python UDFs can be used at Structured Streaming with the continuous mode. ## How was this patch tested? Manually tested: ```python from pyspark.sql.functions import col, udf fooUDF = udf(lambda p: "foo") spark \ .readStream \ .format("rate") \ .load()\ .withColumn("foo", fooUDF(col("value")))\ .writeStream\ .format("console")\ .trigger(continuous="1 second").start() ``` Note that test was not ported because: 1. `IntegratedUDFTestUtils` only exists in master. 2. Missing SS testing utils in PySpark code base. 3. Writing new test for branch-2.4 specifically might bring even more overhead due to mismatch against master. Closes #25457 from HyukjinKwon/SPARK-27234-backport. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 15 August 2019, 04:36:20 UTC |

| dfcebca | Fokko Driesprong | 13 August 2019, 23:03:23 UTC | [SPARK-28713][BUILD][2.4] Bump checkstyle from 8.2 to 8.23 ## What changes were proposed in this pull request? Backport to `branch-2.4` of https://github.com/apache/spark/pull/25432 Fixes a vulnerability from the GitHub Security Advisory Database: _Moderate severity vulnerability that affects com.puppycrawl.tools:checkstyle_ Checkstyle prior to 8.18 loads external DTDs by default, which can potentially lead to denial of service attacks or the leaking of confidential information. https://github.com/checkstyle/checkstyle/issues/6474 Affected versions: < 8.18 ## How was this patch tested? Ran checkstyle locally. Closes #25437 from Fokko/branch-2.4. Authored-by: Fokko Driesprong <fokko@apache.org> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 13 August 2019, 23:03:23 UTC |

| c37abba | Gengliang Wang | 12 August 2019, 18:47:29 UTC | [SPARK-28638][WEBUI] Task summary should only contain successful tasks' metrics ## What changes were proposed in this pull request? Currently, on requesting summary metrics, cached data are returned if the current number of "SUCCESS" tasks is the same as the value in cached data. However, the number of "SUCCESS" tasks is wrong when there are running tasks. In `AppStatusStore`, the KVStore is `ElementTrackingStore`, instead of `InMemoryStore`. The value count is always the number of "SUCCESS" tasks + "RUNNING" tasks. Thus, even when the running tasks are finished, the out-of-date cached data is returned. This PR fixes the code that gets the number of "SUCCESS" tasks. ## How was this patch tested? Test manually, run ``` sc.parallelize(1 to 160, 40).map(i => Thread.sleep(i*100)).collect() ``` and keep refreshing the stage page; we can see that the task summary metrics are wrong. ### Before fix: ### After fix: Closes #25369 from gengliangwang/fixStagePage. Authored-by: Gengliang Wang <gengliang.wang@databricks.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit 48d04f74ca895497b9d8bab18c7708f76f55c520) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 12 August 2019, 18:48:00 UTC |

| a2bbbf8 | Rob Vesse | 07 August 2019, 23:55:11 UTC | [SPARK-28649][INFRA] Add Python .eggs to .gitignore ## What changes were proposed in this pull request? If you build Spark distributions you potentially end up with a `python/.eggs` directory in your working copy which is not currently ignored by Spark's `.gitignore` file. Since these are transient build artifacts there is no reason to ever commit these to Git so this should be placed in the `.gitignore` list ## How was this patch tested? Verified the offending artifacts were no longer reported as untracked content by Git Closes #25380 from rvesse/patch-1. Authored-by: Rob Vesse <rvesse@dotnetrdf.org> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 6ea4a737eaf6de41deb3ca9595c84a19b1b35554) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 07 August 2019, 23:55:29 UTC |

| 04d9f8f | Dongjoon Hyun | 04 August 2019, 16:42:47 UTC | [SPARK-28609][DOC] Fix broken styles/links and make up-to-date This PR aims to fix the broken styles/links and make the doc up-to-date for Apache Spark 2.4.4 and 3.0.0 release. - `building-spark.md`  - `configuration.md`  - `sql-pyspark-pandas-with-arrow.md`  - `streaming-programming-guide.md`  - `structured-streaming-programming-guide.md` (1/2)  - `structured-streaming-programming-guide.md` (2/2)  - `submitting-applications.md`  Manual. Build the doc. ``` SKIP_API=1 jekyll build ``` Closes #25345 from dongjoon-hyun/SPARK-28609. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 4856c0e33abd1666f606d57102c198adf5cb5fc2) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 04 August 2019, 16:45:04 UTC |

| 6c61321 | WeichenXu | 03 August 2019, 01:31:15 UTC | [SPARK-28582][PYTHON] Fix flaky test DaemonTests.do_termination_test which fail on Python 3.7 This PR picks up https://github.com/apache/spark/pull/25315 back after removing `Popen.wait` usage which exists in Python 3 only. I saw the last test results wrongly and thought it was passed. Fix flaky test DaemonTests.do_termination_test which fail on Python 3.7. I add a sleep after the test connection to daemon. Run test ``` python/run-tests --python-executables=python3.7 --testname "pyspark.tests.test_daemon DaemonTests" ``` **Before** Fail on test "test_termination_sigterm". And we can see daemon process do not exit. **After** Test passed Closes #25343 from HyukjinKwon/SPARK-28582. Authored-by: WeichenXu <weichen.xu@databricks.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit b3394db1930b3c9f55438cb27bb2c584bf041f8e) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 03 August 2019, 01:33:13 UTC |

| be52903 | Dongjoon Hyun | 02 August 2019, 23:41:00 UTC | [SPARK-28606][INFRA] Update CRAN key to recover docker image generation CRAN repo changed the key and it causes our release script failure. This is a release blocker for Apache Spark 2.4.4 and 3.0.0. - https://cran.r-project.org/bin/linux/ubuntu/README.html ``` Err:1 https://cloud.r-project.org/bin/linux/ubuntu bionic-cran35/ InRelease The following signatures couldn't be verified because the public key is not available: NO_PUBKEY 51716619E084DAB9 ... W: GPG error: https://cloud.r-project.org/bin/linux/ubuntu bionic-cran35/ InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY 51716619E084DAB9 E: The repository 'https://cloud.r-project.org/bin/linux/ubuntu bionic-cran35/ InRelease' is not signed. ``` Note that they are reusing `cran35` for R 3.6 although they changed the key. ``` Even though R has moved to version 3.6, for compatibility the sources.list entry still uses the cran3.5 designation. ``` This PR aims to recover the docker image generation first. We will verify the R doc generation in a separate JIRA and PR. Manual. After `docker-build.log`, it should continue to the next stage, `Building v3.0.0-rc1`. ``` $ dev/create-release/do-release-docker.sh -d /tmp/spark-3.0.0 -n -s docs ... Log file: docker-build.log Building v3.0.0-rc1; output will be at /tmp/spark-3.0.0/output ``` Closes #25339 from dongjoon-hyun/SPARK-28606. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: DB Tsai <d_tsai@apple.com> (cherry picked from commit 0c6874fb37f97c36a5265455066de9e516845df2) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 03 August 2019, 00:04:15 UTC |

| fe0f53a | Dongjoon Hyun | 02 August 2019, 17:08:29 UTC | Revert "[SPARK-28582][PYSPARK] Fix flaky test DaemonTests.do_termination_test which fail on Python 3.7" This reverts commit 20e46ef6e3e49e754062717c2cb249c6eb99e86a. | 02 August 2019, 17:08:29 UTC |

| dad1cd6 | Jungtaek Lim (HeartSaVioR) | 02 August 2019, 16:12:54 UTC | [MINOR][DOC][SS] Correct description of minPartitions in Kafka option ## What changes were proposed in this pull request? `minPartitions` has been used as a hint and relevant method (KafkaOffsetRangeCalculator.getRanges) doesn't guarantee the behavior that partitions will be equal or more than given value. https://github.com/apache/spark/blob/d67b98ea016e9b714bef68feaac108edd08159c9/external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaOffsetRangeCalculator.scala#L32-L46 This patch makes clear the configuration is a hint, and actual partitions could be less or more. ## How was this patch tested? Just a documentation change. Closes #25332 from HeartSaVioR/MINOR-correct-kafka-structured-streaming-doc-minpartition. Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 7ffc00ccc37fc94a45b7241bb3c6a17736b55ba3) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 02 August 2019, 16:13:08 UTC |

| 20e46ef | WeichenXu | 02 August 2019, 13:07:06 UTC | [SPARK-28582][PYSPARK] Fix flaky test DaemonTests.do_termination_test which fail on Python 3.7 Fix flaky test DaemonTests.do_termination_test which fail on Python 3.7. I add a sleep after the test connection to daemon. Run test ``` python/run-tests --python-executables=python3.7 --testname "pyspark.tests.test_daemon DaemonTests" ``` **Before** Fail on test "test_termination_sigterm". And we can see daemon process do not exit. **After** Test passed Closes #25315 from WeichenXu123/fix_py37_daemon. Authored-by: WeichenXu <weichen.xu@databricks.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit fbeee0c5bcea32346b2279c5b67044f12e5faf7d) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 02 August 2019, 13:13:27 UTC |

| a065a50 | HyukjinKwon | 02 August 2019, 13:12:04 UTC | Revert "[SPARK-28582][PYSPARK] Fix flaky test DaemonTests.do_termination_test which fail on Python 3.7" This reverts commit dc09a02c142d3787e728c8b25eb8417649d98e9f. | 02 August 2019, 13:12:04 UTC |

| dc09a02 | WeichenXu | 02 August 2019, 13:07:06 UTC | [SPARK-28582][PYSPARK] Fix flaky test DaemonTests.do_termination_test which fail on Python 3.7 Fix flaky test DaemonTests.do_termination_test which fail on Python 3.7. I add a sleep after the test connection to daemon. Run test ``` python/run-tests --python-executables=python3.7 --testname "pyspark.tests.test_daemon DaemonTests" ``` **Before** Fail on test "test_termination_sigterm". And we can see daemon process do not exit. **After** Test passed Closes #25315 from WeichenXu123/fix_py37_daemon. Authored-by: WeichenXu <weichen.xu@databricks.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit fbeee0c5bcea32346b2279c5b67044f12e5faf7d) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 02 August 2019, 13:10:56 UTC |

| 9c8c8ba | HyukjinKwon | 01 August 2019, 09:27:22 UTC | [SPARK-28153][PYTHON][BRANCH-2.4] Use AtomicReference at InputFileBlockHolder (to support input_file_name with Python UDF) ## What changes were proposed in this pull request? This PR backports https://github.com/apache/spark/pull/24958 to branch-2.4. This PR proposes to use `AtomicReference` so that parent and child threads can access to the same file block holder. Python UDF expressions are turned to a plan and then it launches a separate thread to consume the input iterator. In the separate child thread, the iterator sets `InputFileBlockHolder.set` before the parent does which the parent thread is unable to read later. 1. In this separate child thread, if it happens to call `InputFileBlockHolder.set` first without initialization of the parent's thread local (which is done when the `ThreadLocal.get()` is first called), the child thread seems calling its own `initialValue` to initialize. 2. After that, the parent calls its own `initialValue` to initializes at the first call of `ThreadLocal.get()`. 3. Both now have two different references. Updating at child isn't reflected to parent. This PR fixes it via initializing parent's thread local with `AtomicReference` for file status so that they can be used in each task, and children thread's update is reflected. I also tried to explain this a bit more at https://github.com/apache/spark/pull/24958#discussion_r297203041. ## How was this patch tested? Manually tested and unittest was added. Closes #25321 from HyukjinKwon/backport-SPARK-28153. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 01 August 2019, 09:27:22 UTC |
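
The parent/child visibility problem described in the SPARK-28153 entry above can be illustrated outside the JVM. The following is a minimal Python sketch, not Spark code: `Holder` and `run_demo` are hypothetical names, `threading.local` stands in for Java's `ThreadLocal`, and a plain shared object stands in for `AtomicReference`. It only shows that a per-thread slot hides the child's update from the parent, while a shared holder reference does not.

```python
import threading

# Per-thread storage: each thread sees only its own attributes.
local = threading.local()


class Holder:
    """Hypothetical stand-in for a shared reference holder."""

    def __init__(self, value):
        self.value = value


def run_demo():
    results = {}
    local.name = "parent-file"       # set in the parent thread only
    shared = Holder("parent-file")   # one object visible to both threads

    def child():
        # The child thread has its own, empty thread-local slot.
        results["child_sees_local"] = getattr(local, "name", None)
        local.name = "child-file"    # invisible to the parent
        shared.value = "child-file"  # visible to the parent

    t = threading.Thread(target=child)
    t.start()
    t.join()

    results["parent_local"] = local.name
    results["parent_shared"] = shared.value
    return results


if __name__ == "__main__":
    print(run_demo())
```

In this sketch the parent's thread-local value is unchanged after the child runs, while the value behind the shared holder reflects the child's update.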

| 93f5fb8 | Marcelo Vanzin | 01 August 2019, 00:44:20 UTC | [SPARK-24352][CORE][TESTS] De-flake StandaloneDynamicAllocationSuite blacklist test The issue is that the test tried to stop an existing scheduler and replace it with a new one set up for the test. That can cause issues because both were sharing the same RpcEnv underneath, and unregistering RpcEndpoints is actually asynchronous (see comment in Dispatcher.unregisterRpcEndpoint). So that could lead to races where the new scheduler tried to register before the old one was fully unregistered. The updated test avoids the issue by using a separate RpcEnv / scheduler instance altogether, and also avoids a misleading NPE in the test logs. Closes #25318 from vanzin/SPARK-24352. Authored-by: Marcelo Vanzin <vanzin@cloudera.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit b3ffd8be14779cbb824d14b409f0a6eab93444ba) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 01 August 2019, 00:45:32 UTC |

| 6a361d4 | sychen | 31 July 2019, 20:24:36 UTC | [SPARK-28564][CORE] Access history application defaults to the last attempt id ## What changes were proposed in this pull request? When we set ```spark.history.ui.maxApplications``` to a small value, we can't get some apps from the page search. If the url is spliced (http://localhost:18080/history/local-xxx), it can be accessed if the app has no attempt. But in the case of multiple attempted apps, such a url cannot be accessed, and the page displays Not Found. ## How was this patch tested? Add UT Closes #25301 from cxzl25/hs_app_last_attempt_id. Authored-by: sychen <sychen@ctrip.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit 70ef9064a8aa605b09e639d5a40528b063af25b7) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 31 July 2019, 20:24:52 UTC |

| 992b1bb | gengjiaan | 31 July 2019, 03:17:44 UTC | [MINOR][CORE][DOCS] Fix inconsistent description of showConsoleProgress ## What changes were proposed in this pull request? The latest docs at http://spark.apache.org/docs/latest/configuration.html contain the following description: spark.ui.showConsoleProgress | true | Show the progress bar in the console. The progress bar shows the progress of stages that run for longer than 500ms. If multiple stages run at the same time, multiple progress bars will be displayed on the same line. -- | -- | -- But the class `org.apache.spark.internal.config.UI` defines the config `spark.ui.showConsoleProgress` as below: ``` val UI_SHOW_CONSOLE_PROGRESS = ConfigBuilder("spark.ui.showConsoleProgress") .doc("When true, show the progress bar in the console.") .booleanConf .createWithDefault(false) ``` So the two descriptions are inconsistent, which can confuse readers. ## How was this patch tested? No UT needed. Closes #25297 from beliefer/inconsistent-desc-showConsoleProgress. Authored-by: gengjiaan <gengjiaan@360.cn> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit dba4375359a2dfed1f009edc3b1bcf6b3253fe02) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 31 July 2019, 03:18:07 UTC |

| 9d9c5a5 | Ajith | 30 July 2019, 22:43:44 UTC | [SPARK-26152][CORE][2.4] Synchronize Worker Cleanup with Worker Shutdown ## What changes were proposed in this pull request? There is a race between the org.apache.spark.deploy.DeployMessages.WorkDirCleanup event and org.apache.spark.deploy.worker.Worker#onStop. It is possible that, while the WorkDirCleanup event is being processed, org.apache.spark.deploy.worker.Worker#cleanupThreadExecutor has already been shut down. Hence any submission to the ThreadPoolExecutor after that point results in java.util.concurrent.RejectedExecutionException. ## How was this patch tested? Manually Closes #25302 from dbtsai/branch-2.4-fix. Authored-by: Ajith <ajith2489@gmail.com> Signed-off-by: DB Tsai <d_tsai@apple.com> | 30 July 2019, 22:43:44 UTC |

| 5f4feeb | shahid | 29 July 2019, 16:20:09 UTC | [SPARK-25474][SQL][2.4] Support `spark.sql.statistics.fallBackToHdfs` in data source tables ## What changes were proposed in this pull request? Backport the commit https://github.com/apache/spark/commit/485ae6d1818e8756a86da38d6aefc8f1dbde49c2 into SPARK-2.4 branch. ## How was this patch tested? Added tests. Closes #25284 from shahidki31/branch-2.4. Authored-by: shahid <shahidki31@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 29 July 2019, 16:20:09 UTC |

| 8934560 | Dongjoon Hyun | 28 July 2019, 01:55:36 UTC | [SPARK-28545][SQL] Add the hash map size to the directional log of ObjectAggregationIterator ## What changes were proposed in this pull request? `ObjectAggregationIterator` shows a directional info message to increase `spark.sql.objectHashAggregate.sortBased.fallbackThreshold` when the size of the in-memory hash map grows too large and it falls back to sort-based aggregation. However, we don't know how much we need to increase. This PR adds the size of the current in-memory hash map size to the log message. **BEFORE** ``` 15:21:41.669 Executor task launch worker for task 0 INFO ObjectAggregationIterator: Aggregation hash map reaches threshold capacity (2 entries), ... ``` **AFTER** ``` 15:20:05.742 Executor task launch worker for task 0 INFO ObjectAggregationIterator: Aggregation hash map size 2 reaches threshold capacity (2 entries), ... ``` ## How was this patch tested? Manual. For example, run `ObjectHashAggregateSuite.scala`'s `typed_count fallback to sort-based aggregation` and search the above message in `target/unit-tests.log`. Closes #25276 from dongjoon-hyun/SPARK-28545. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit d943ee0a881540aa356cdce533b693baaf7c644f) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 28 July 2019, 01:55:50 UTC |

| 2e0763b | Marcelo Vanzin | 27 July 2019, 18:06:35 UTC | [SPARK-28535][CORE][TEST] Slow down tasks to de-flake JobCancellationSuite This test tries to detect correct behavior in racy code, where the event thread is racing with the executor thread that's trying to kill the running task. If the event that signals the stage end arrives first, any delay in the delivery of the message to kill the task causes the code to rapidly process elements, and may cause the test to assert. Adding a 10ms delay in LocalSchedulerBackend before the task kill makes the test run through ~1000 elements. A longer delay can easily cause the 10000 elements to be processed. Instead, by adding a small delay (10ms) in the test code that processes elements, there's a much lower probability that the kill event will not arrive before the end; that leaves a window of 100s for the event to be delivered to the executor. And because each element only sleeps for 10ms, the test is not really slowed down at all. Closes #25270 from vanzin/SPARK-28535. Authored-by: Marcelo Vanzin <vanzin@cloudera.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 7f84104b3981dc69238730e0bed7c8c5bd113d76) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 27 July 2019, 18:06:52 UTC |

| afb7492 | Shixiong Zhu | 26 July 2019, 07:10:56 UTC | [SPARK-28489][SS] Fix a bug that KafkaOffsetRangeCalculator.getRanges may drop offsets ## What changes were proposed in this pull request? `KafkaOffsetRangeCalculator.getRanges` may drop offsets due to round off errors. The test added in this PR is one example. This PR rewrites the logic in `KafkaOffsetRangeCalculator.getRanges` to ensure it never drops offsets. ## How was this patch tested? The regression test. Closes #25237 from zsxwing/fix-range. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit b9c2521de2bb6281356be55685df94f2d51bcc02) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 26 July 2019, 07:11:13 UTC |
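
To make the round-off problem in the SPARK-28489 entry above concrete, here is a minimal Python sketch. It is not the logic of `KafkaOffsetRangeCalculator.getRanges`; `naive_split` and `split_range` are hypothetical helpers. The first computes a fixed step with truncating division and can lose trailing offsets; the second derives each boundary from the total size, so adjacent ranges always meet and the last one ends exactly at `end`.

```python
def naive_split(start, end, parts):
    """Split [start, end) using a truncated fixed step (may drop offsets)."""
    step = (end - start) // parts
    return [(start + i * step, start + (i + 1) * step) for i in range(parts)]


def split_range(start, end, parts):
    """Split [start, end) into contiguous sub-ranges covering every offset."""
    size = end - start
    # Never create more parts than there are offsets, and at least one part.
    parts = max(1, min(parts, size)) if size > 0 else 1
    ranges = []
    for i in range(parts):
        # Boundaries come from the total size, so the rounding error never
        # accumulates and the final boundary is exactly `end`.
        lo = start + (size * i) // parts
        hi = start + (size * (i + 1)) // parts
        ranges.append((lo, hi))
    return ranges


if __name__ == "__main__":
    print(naive_split(0, 10, 3))  # last range stops at 9, offset 9 is lost
    print(split_range(0, 10, 3))  # last range stops at 10
```

A regression test for this kind of code would typically assert that the sub-range sizes add up to `end - start` and that each range starts where the previous one ended.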

| 2c2b102 | Dongjoon Hyun | 26 July 2019, 00:25:13 UTC | [MINOR][SQL] Fix log messages of DataWritingSparkTask ## What changes were proposed in this pull request? This PR fixes the log messages like `attempt 0stage 9.0` by adding a comma followed by space. These are all instances in `DataWritingSparkTask` which was introduced at https://github.com/apache/spark/commit/6d16b9885d6ad01e1cc56d5241b7ebad99487a0c. This should be fixed in `branch-2.4`, too. ``` 19/07/25 18:35:01 INFO DataWritingSparkTask: Commit authorized for partition 65 (task 153, attempt 0stage 9.0) 19/07/25 18:35:01 INFO DataWritingSparkTask: Committed partition 65 (task 153, attempt 0stage 9.0) ``` ## How was this patch tested? This only changes log messages. Pass the Jenkins with the existing tests. Closes #25257 from dongjoon-hyun/DataWritingSparkTask. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit cefce21acc64ab88d1286fa5486be489bc707a89) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 26 July 2019, 00:25:37 UTC |

| a285c0d | zhengruifeng | 24 July 2019, 01:20:22 UTC | [SPARK-28421][ML] SparseVector.apply performance optimization ## What changes were proposed in this pull request? optimize the `SparseVector.apply` by avoiding internal conversion Since the speed up is significant (2.5X ~ 5X), and this method is widely used in ml, I suggest back porting. | size| nnz | apply(old) | apply2(new impl) | apply3(new impl with extra range check)| |------|----------|------------|----------|----------| |10000000|100|75294|12208|18682| |10000000|10000|75616|23132|32932| |10000000|1000000|92949|42529|48821| ## How was this patch tested? existing tests using following code to test performance (here the new impl is named `apply2`, and another impl with extra range check is named `apply3`): ``` import scala.util.Random import org.apache.spark.ml.linalg._ val size = 10000000 for (nnz <- Seq(100, 10000, 1000000)) { val rng = new Random(123) val indices = Array.fill(nnz + nnz)(rng.nextInt.abs % size).distinct.take(nnz).sorted val values = Array.fill(nnz)(rng.nextDouble) val vec = Vectors.sparse(size, indices, values).toSparse val tic1 = System.currentTimeMillis; (0 until 100).foreach{ round => var i = 0; var sum = 0.0; while(i < size) {sum+=vec(i); i+=1} }; val toc1 = System.currentTimeMillis; val tic2 = System.currentTimeMillis; (0 until 100).foreach{ round => var i = 0; var sum = 0.0; while(i < size) {sum+=vec.apply2(i); i+=1} }; val toc2 = System.currentTimeMillis; val tic3 = System.currentTimeMillis; (0 until 100).foreach{ round => var i = 0; var sum = 0.0; while(i < size) {sum+=vec.apply3(i); i+=1} }; val toc3 = System.currentTimeMillis; println((size, nnz, toc1 - tic1, toc2 - tic2, toc3 - tic3)) } ``` Closes #25178 from zhengruifeng/sparse_vec_apply. Authored-by: zhengruifeng <ruifengz@foxmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> | 25 July 2019, 12:57:02 UTC |
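
For readers unfamiliar with sparse element access, the following Python sketch shows one common way to look up a single element without converting the vector to another representation: a binary search over the sorted index array. This is an illustration under that assumption, not the code from the SPARK-28421 change; `sparse_get` is a hypothetical helper.

```python
from bisect import bisect_left


def sparse_get(size, indices, values, i):
    """Return element i of a sparse vector given sorted indices and values."""
    if not 0 <= i < size:
        raise IndexError(f"index {i} out of range [0, {size})")
    # Binary search for i among the stored (non-zero) positions.
    pos = bisect_left(indices, i)
    if pos < len(indices) and indices[pos] == i:
        return values[pos]
    # Positions that are not stored are implicitly zero.
    return 0.0


if __name__ == "__main__":
    idx = [1, 4, 7]
    val = [10.0, 40.0, 70.0]
    print([sparse_get(8, idx, val, i) for i in range(8)])
```

Each lookup costs O(log nnz) and allocates nothing.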

| 59137e2 | Luca Canali | 25 July 2019, 08:51:51 UTC | [SPARK-26995][K8S][2.4] Make ld-linux-x86-64.so.2 visible to snappy native library under /lib in docker image with Alpine Linux ## What changes were proposed in this pull request? This is a back port of #23898. Running Spark in a Docker image with Alpine Linux 3.9.0 throws errors when using snappy. The issue can be reproduced, for example, as follows: `Seq(1,2).toDF("id").write.format("parquet").save("DELETEME1")` The key part of the error stack is as follows: `SparkException: Task failed while writing rows. .... Caused by: java.lang.UnsatisfiedLinkError: /tmp/snappy-1.1.7-2b4872f1-7c41-4b84-bda1-dbcb8dd0ce4c-libsnappyjava.so: Error loading shared library ld-linux-x86-64.so.2: No such file or directory (needed by /tmp/snappy-1.1.7-2b4872f1-7c41-4b84-bda1-dbcb8dd0ce4c-libsnappyjava.so)` The source of the error appears to be that libsnappyjava.so needs ld-linux-x86-64.so.2 and looks for it in /lib, while in Alpine Linux 3.9.0 with libc6-compat version 1.1.20-r3 ld-linux-x86-64.so.2 is located in /lib64. Note: this issue is not present with Alpine Linux 3.8 and libc6-compat version 1.1.19-r10. A possible workaround proposed with this PR is to modify the Dockerfile by adding a symbolic link between /lib and /lib64 so that ld-linux-x86-64.so.2 can be found in /lib. This is probably not the cleanest solution, but I have observed that this is what already happens when using Alpine Linux 3.8.1 (a version of Alpine Linux which was not affected by the issue reported here). ## How was this patch tested? Manually tested by running a simple workload with spark-shell, using docker on a client machine and using Spark on a Kubernetes cluster. The test workload is: `Seq(1,2).toDF("id").write.format("parquet").save("DELETEME1")` Added a test to the KubernetesSuite / BasicTestsSuite Closes #25255 from dongjoon-hyun/SPARK-26995. Authored-by: Luca Canali <luca.canali@cern.ch> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 25 July 2019, 08:51:51 UTC |

| 771db3b | Bruce Robbins | 25 July 2019, 08:36:26 UTC | [SPARK-28156][SQL][BACKPORT-2.4] Self-join should not miss cached view Back-port of #24960 to branch-2.4. The issue is that when self-joining a cached view, only one side of the join uses the cached relation. The cause is that in `ResolveReferences` we deduplicate a view so that it has new output attributes. Then in `AliasViewChild`, the rule adds an extra project under the view, which breaks cache matching. The fix is that when deduplicating, we only dedup a view whose output differs from its child plan. Otherwise, we dedup on the view's child plan. ```scala val df = Seq.tabulate(5) { x => (x, x + 1, x + 2, x + 3) }.toDF("a", "b", "c", "d") df.write.mode("overwrite").format("orc").saveAsTable("table1") sql("drop view if exists table1_vw") sql("create view table1_vw as select * from table1") val cachedView = sql("select a, b, c, d from table1_vw") cachedView.createOrReplaceTempView("cachedview") cachedView.persist() val queryDf = sql( s"""select leftside.a, leftside.b |from cachedview leftside |join cachedview rightside |on leftside.a = rightside.a """.stripMargin) ``` Query plan before this PR: ```scala == Physical Plan == *(2) Project [a#12664, b#12665] +- *(2) BroadcastHashJoin [a#12664], [a#12660], Inner, BuildRight :- *(2) Filter isnotnull(a#12664) : +- *(2) InMemoryTableScan [a#12664, b#12665], [isnotnull(a#12664)] : +- InMemoryRelation [a#12664, b#12665, c#12666, d#12667], StorageLevel(disk, memory, deserialized, 1 replicas) : +- *(1) FileScan orc default.table1[a#12660,b#12661,c#12662,d#12663] Batched: true, DataFilters: [], Format: ORC, Location: InMemoryFileIndex[file:/Users/viirya/repos/spark-1/sql/core/spark-warehouse/org.apache.spark.sql...., PartitionFilters: [], PushedFilters: [], ReadSchema: struct<a:int,b:int,c:int,d:int> +- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, true] as bigint))) +- *(1) Project [a#12660] +- *(1) Filter isnotnull(a#12660) +- *(1) FileScan orc default.table1[a#12660] Batched: true, DataFilters: [isnotnull(a#12660)], Format: ORC, Location: InMemoryFileIndex[file:/Users/viirya/repos/spark-1/sql/core/spark-warehouse/org.apache.spark.sql...., PartitionFilters: [], PushedFilters: [IsNotNull(a)], ReadSchema: struct<a:int> ``` Query plan after this PR: ```scala == Physical Plan == *(2) Project [a#12664, b#12665] +- *(2) BroadcastHashJoin [a#12664], [a#12692], Inner, BuildRight :- *(2) Filter isnotnull(a#12664) : +- *(2) InMemoryTableScan [a#12664, b#12665], [isnotnull(a#12664)] : +- InMemoryRelation [a#12664, b#12665, c#12666, d#12667], StorageLevel(disk, memory, deserialized, 1 replicas) : +- *(1) FileScan orc default.table1[a#12660,b#12661,c#12662,d#12663] Batched: true, DataFilters: [], Format: ORC, Location: InMemoryFileIndex[file:/Users/viirya/repos/spark-1/sql/core/spark-warehouse/org.apache.spark.sql...., PartitionFilters: [], PushedFilters: [], ReadSchema: struct<a:int,b:int,c:int,d:int> +- BroadcastExchange HashedRelationBroadcastMode(List(cast(input[0, int, false] as bigint))) +- *(1) Filter isnotnull(a#12692) +- *(1) InMemoryTableScan [a#12692], [isnotnull(a#12692)] +- InMemoryRelation [a#12692, b#12693, c#12694, d#12695], StorageLevel(disk, memory, deserialized, 1 replicas) +- *(1) FileScan orc default.table1[a#12660,b#12661,c#12662,d#12663] Batched: true, DataFilters: [], Format: ORC, Location: InMemoryFileIndex[file:/Users/viirya/repos/spark-1/sql/core/spark-warehouse/org.apache.spark.sql...., PartitionFilters: [], PushedFilters: [], ReadSchema: struct<a:int,b:int,c:int,d:int> ``` Added test. Closes #25068 from bersprockets/selfjoin_24. Lead-authored-by: Bruce Robbins <bersprockets@gmail.com> Co-authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 25 July 2019, 08:36:26 UTC |

| 98ba2f6 | shivsood | 25 July 2019, 02:35:13 UTC | [SPARK-28152][SQL][2.4] Mapped ShortType to SMALLINT and FloatType to REAL for MsSqlServerDialect ## What changes were proposed in this pull request? This is a backport of SPARK-28152 to Spark 2.4. The SPARK-28152 PR aims to correct mappings in `MsSqlServerDialect`. `ShortType` is mapped to `SMALLINT` and `FloatType` is mapped to `REAL` per [JDBC mapping](https://docs.microsoft.com/en-us/sql/connect/jdbc/using-basic-data-types?view=sql-server-2017) respectively. ShortType and FloatType are not correctly mapped to the right JDBC types when using the JDBC connector. This results in tables and Spark data frames being created with unintended types. The issue was observed when validating against SQLServer. Refer to [JDBC mapping](https://docs.microsoft.com/en-us/sql/connect/jdbc/using-basic-data-types?view=sql-server-2017) for guidance on mappings between SQLServer, JDBC and Java. Note that the Java "Short" type should be mapped to JDBC "SMALLINT" and Java "Float" should be mapped to JDBC "REAL". Some example issues that can happen because of wrong mappings - A write from a df with a ShortType column results in a SQL table with column type INTEGER as opposed to SMALLINT, and thus a larger table than expected. - A read results in a dataframe with type INTEGER as opposed to ShortType - ShortType has a problem in both the write and read paths - FloatType only has an issue in the read path. In the write path, the Spark data type 'FloatType' is correctly mapped to the JDBC equivalent data type 'Real'. But in the read path, when JDBC data types need to be converted to Catalyst data types (getCatalystType), 'Real' incorrectly gets mapped to 'DoubleType' rather than 'FloatType'. Refer #28151 which contained this fix as one part of a larger PR. Following the PR #28151 discussion it was decided to file separate PRs for each of the fixes. ## How was this patch tested? Unit tests were added in JDBCSuite.scala and they pass. Integration test updated and passed in MsSqlServerDialect.scala. E2E test done with SQLServer. Closes #25248 from shivsood/PR_28152_2.4. Authored-by: shivsood <shivsood@microsoft.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 25 July 2019, 02:35:13 UTC |

| 366519d | Dongjoon Hyun | 24 July 2019, 21:20:25 UTC | [SPARK-28496][INFRA] Use branch name instead of tag during dry-run ## What changes were proposed in this pull request? There are two cases when we use `dry run`. First, when the tag already exists, we can ask `confirmation` on the existing tag name. ``` $ dev/create-release/do-release-docker.sh -d /tmp/spark-2.4.4 -n -s docs Output directory already exists. Overwrite and continue? [y/n] y Branch [branch-2.4]: Current branch version is 2.4.4-SNAPSHOT. Release [2.4.4]: 2.4.3 RC # [1]: v2.4.3-rc1 already exists. Continue anyway [y/n]? y This is a dry run. Please confirm the ref that will be built for testing. Ref [v2.4.3-rc1]: ``` Second, when the tag doesn't exist, we had better ask `confirmation` on the branch name. If we do not change the default value, it will fail eventually. ``` $ dev/create-release/do-release-docker.sh -d /tmp/spark-2.4.4 -n -s docs Branch [branch-2.4]: Current branch version is 2.4.4-SNAPSHOT. Release [2.4.4]: RC # [1]: This is a dry run. Please confirm the ref that will be built for testing. Ref [v2.4.4-rc1]: ``` This PR improves the second case by providing the branch name instead. This helps the release testing before tagging. ## How was this patch tested? Manually do the following and check the default value of `Ref` field. ``` $ dev/create-release/do-release-docker.sh -d /tmp/spark-2.4.4 -n -s docs Branch [branch-2.4]: Current branch version is 2.4.4-SNAPSHOT. Release [2.4.4]: RC # [1]: This is a dry run. Please confirm the ref that will be built for testing. Ref [branch-2.4]: ... ``` Closes #25240 from dongjoon-hyun/SPARK-28496. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit cfca26e97384246f21ecb9d70eb0f7792607fc47) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 24 July 2019, 22:07:58 UTC |

| 73bb605 | Zhu, Lipeng | 19 March 2019, 15:43:23 UTC | [SPARK-27168][SQL][TEST] Add docker integration test for MsSql server ## What changes were proposed in this pull request? This PR aims to add a JDBC integration test for MsSql server. ## How was this patch tested? ``` ./build/mvn clean install -DskipTests ./build/mvn test -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 \ -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.MsSqlServerIntegrationSuite ``` Closes #24099 from lipzhu/SPARK-27168. Lead-authored-by: Zhu, Lipeng <lipzhu@ebay.com> Co-authored-by: Dongjoon Hyun <dhyun@apple.com> Co-authored-by: Lipeng Zhu <lipzhu@icloud.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 24 July 2019, 02:13:53 UTC |

| 4336d1c | Zhu, Lipeng | 16 March 2019, 01:21:59 UTC | [SPARK-27159][SQL] update mssql server dialect to support binary type ## What changes were proposed in this pull request? Change the binary type mapping from default blob to varbinary(max) for mssql server. https://docs.microsoft.com/en-us/sql/t-sql/data-types/binary-and-varbinary-transact-sql?view=sql-server-2017  ## How was this patch tested? Unit test. Closes #24091 from lipzhu/SPARK-27159. Authored-by: Zhu, Lipeng <lipzhu@ebay.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> | 24 July 2019, 02:08:01 UTC |

| c01c294 | Dongjoon Hyun | 22 July 2019, 17:45:18 UTC | [SPARK-28468][INFRA][2.4] Upgrade pip to fix `sphinx` install error ## What changes were proposed in this pull request? Spark 2.4.x should be an LTS version and we should use the release script in `branch-2.4` to avoid the previous mistakes. Currently, `do-release-docker.sh` fails at `sphinx` installation to `Python 2.7` at `branch-2.4` only. This PR aims to upgrade `pip` to handle this. ``` $ dev/create-release/do-release-docker.sh -d /tmp/spark-2.4.4 -n ... = Building spark-rm image with tag latest... Command: docker build -t spark-rm:latest --build-arg UID=501 /Users/dhyun/APACHE/spark-2.4/dev/create-release/spark-rm Log file: docker-build.log // Terminated. ``` ``` $ tail /tmp/spark-2.4.4/docker-build.log Collecting sphinx Downloading https://files.pythonhosted.org/packages/89/1e/64c77163706556b647f99d67b42fced9d39ae6b1b86673965a2cd28037b5/Sphinx-2.1.2.tar.gz (6.3MB) Complete output from command python setup.py egg_info: ERROR: Sphinx requires at least Python 3.5 to run. ---------------------------------------- Command "python setup.py egg_info" failed with error code 1 in /tmp/pip-build-2tylGA/sphinx/ You are using pip version 8.1.1, however version 19.1.1 is available. You should consider upgrading via the 'pip install --upgrade pip' command. ``` The following is the short reproducible step. ``` $ docker build -t spark-rm-test2 --build-arg UID=501 dev/create-release/spark-rm ``` ## How was this patch tested? Manual. ``` $ docker build -t spark-rm-test2 --build-arg UID=501 dev/create-release/spark-rm ``` Closes #25226 from dongjoon-hyun/SPARK-28468. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 22 July 2019, 17:45:18 UTC |

| b26e82f | pengbo | 22 July 2019, 05:12:49 UTC | [SPARK-27416][SQL][BRANCH-2.4] UnsafeMapData & UnsafeArrayData Kryo serialization … This is a Spark 2.4.x backport of #24357 by pengbo. Original description follows below: --- ## What changes were proposed in this pull request? Finish the remaining work of https://github.com/apache/spark/pull/24317, https://github.com/apache/spark/pull/9030 a. Implement Kryo serialization for UnsafeArrayData b. Fix the UnsafeMapData Java/Kryo serialization issue when two machines have different Oops sizes c. Move the duplicate code "getBytes()" to Utils. ## How was this patch tested? Corresponding unit tests have been added and run. Closes #25223 from JoshRosen/SPARK-27416-2.4. Authored-by: pengbo <bo.peng1019@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 22 July 2019, 05:12:49 UTC |

| a7e2de8 | Arun Pandian | 21 July 2019, 20:07:22 UTC | [SPARK-28464][DOC][SS] Document Kafka source minPartitions option Adding doc for the kafka source minPartitions option to "Structured Streaming + Kafka Integration Guide" The text is based on the content in https://docs.databricks.com/spark/latest/structured-streaming/kafka.html#configuration Closes #25219 from arunpandianp/SPARK-28464. Authored-by: Arun Pandian <apandian@groupon.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit a0a58cf2effc4f4fb17ef3b1ca3def2d4022c970) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 21 July 2019, 20:23:49 UTC |

| 5b8b9fb | Josh Rosen | 18 July 2019, 20:15:39 UTC | [SPARK-28430][UI] Fix stage table rendering when some tasks' metrics are missing ## What changes were proposed in this pull request? The Spark UI's stages table misrenders the input/output metrics columns when some tasks are missing input metrics. This is because those columns are defined as ```scala {if (hasInput(stage)) { metricInfo(task) { m => ... <td>....</td> } } ``` where `metricInfo` renders the node returned by the closure in case metrics are defined or returns `Nil` in case metrics are not defined. If metrics are undefined then we'll fail to render the empty `<td></td>` tag, causing columns to become misaligned. To fix this, this patch changes this to ```scala {if (hasInput(stage)) { <td>{ metricInfo(task) { m => ... Unparsed(...) } }</td> } ``` which is an idiom that's already in use for the shuffle read / write columns. ## How was this patch tested? It isn't. I'm arguing for correctness because the modifications are consistent with rendering methods that work correctly for other columns. Closes #25183 from JoshRosen/joshrosen/fix-task-table-with-partial-io-metrics. Authored-by: Josh Rosen <rosenville@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 3776fbdfdeac07d191f231b29cf906cabdc6de3f) Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 18 July 2019, 20:15:54 UTC |

| 76251c3 | HyukjinKwon | 17 July 2019, 09:44:11 UTC | [SPARK-28418][PYTHON][SQL] Wait for event process in 'test_query_execution_listener_on_collect' It fixes a flaky test: ``` ERROR [0.164s]: test_query_execution_listener_on_collect (pyspark.sql.tests.test_dataframe.QueryExecutionListenerTests) ---------------------------------------------------------------------- Traceback (most recent call last): File "/home/jenkins/python/pyspark/sql/tests/test_dataframe.py", line 758, in test_query_execution_listener_on_collect "The callback from the query execution listener should be called after 'collect'") AssertionError: The callback from the query execution listener should be called after 'collect' ``` It seems the test can fail because the event was somehow delayed while the assertion was checked first. Tested manually. Closes #25177 from HyukjinKwon/SPARK-28418. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 66179fa8426324e11819e04af4cf3eabf9f2627f) Signed-off-by: HyukjinKwon <gurwls223@apache.org> | 17 July 2019, 09:47:16 UTC |

| 198f2f3 | herman | 17 July 2019, 01:01:15 UTC | [SPARK-27485][BRANCH-2.4] EnsureRequirements.reorder should handle duplicate expressions gracefully Backport of 421d9d56efd447d31787e77316ce0eafb5fe45a5 ## What changes were proposed in this pull request? When reordering joins, EnsureRequirements only checks if all the join keys are present in the partitioning expression seq. This is problematic when the join keys and partitioning expressions both contain duplicates but not the same number of duplicates for each expression, e.g. `Seq(a, a, b)` vs `Seq(a, b, b)`. This fails with an index lookup failure in the `reorder` function. This PR fixes this by removing the equality checking logic from the `reorderJoinKeys` function, and by doing the multiset equality check in the `reorder` function while building the reordered key sequences. ## How was this patch tested? Added a unit test to the `PlannerSuite` and added an integration test to `JoinSuite` Closes #25174 from hvanhovell/SPARK-27485-2.4. Authored-by: herman <herman@databricks.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> | 17 July 2019, 01:01:15 UTC |

| 63898cb | gatorsmile | 16 July 2019, 13:57:14 UTC | Revert "[SPARK-27485] EnsureRequirements.reorder should handle duplicate expressions gracefully" This reverts commit 72f547d4a960ba0ba9cace53a0a5553eca1b4dd6. | 16 July 2019, 13:57:14 UTC |

| 3f5a114 | Jungtaek Lim (HeartSaVioR) | 16 July 2019, 13:27:53 UTC | [SPARK-28247][SS][BRANCH-2.4] Fix flaky test "query without test harness" on ContinuousSuite ## What changes were proposed in this pull request? This patch fixes the flaky test "query without test harness" on ContinuousSuite, via adding some more gaps on waiting query to commit the epoch which writes output rows. The observation of this issue is below (injected some debug logs to get them): ``` reader creation time 1562225320210 epoch 1 launched 1562225320593 (+380ms from reader creation time) epoch 13 launched 1562225321702 (+1.5s from reader creation time) partition reader creation time 1562225321715 (+1.5s from reader creation time) next read time for first next call 1562225321210 (+1s from reader creation time) first next called in partition reader 1562225321746 (immediately after creation of partition reader) wait finished in next called in partition reader 1562225321746 (no wait) second next called in partition reader 1562225321747 (immediately after first next()) epoch 0 commit started 1562225321861 writing rows (0, 1) (belong to epoch 13) 1562225321866 (+100ms after first next()) wait start in waitForRateSourceTriggers(2) 1562225322059 next read time for second next call 1562225322210 (+1s from previous "next read time") wait finished in next called in partition reader 1562225322211 (+450ms wait) writing rows (2, 3) (belong to epoch 13) 1562225322211 (immediately after next()) epoch 14 launched 1562225322246 desired wait time in waitForRateSourceTriggers(2) 1562225322510 (+2.3s from reader creation time) epoch 12 committed 1562225323034 ``` These rows were written within desired wait time, but the epoch 13 couldn't be committed within it. 
Interestingly, epoch 12 was lucky to be committed within a gap between finishing the wait in waitForRateSourceTriggers and query.stop() - but even if the rows had been written in epoch 12, that would be pure luck; the epoch should be committed within the desired wait time. This patch modifies the Rate continuous stream to track the highest committed value, so that the test can wait until the desired value is reported to the stream as committed. This patch also modifies the Rate continuous stream to track the timestamp at which the stream gets the first committed offset, and lets `waitForRateSourceTriggers` use that timestamp. This also relies on waiting for a specific period, but it is a safer approach than the current one, based on the observation above. Based on the change, this patch saves a couple of seconds in test time. ## How was this patch tested? 3 sequential test runs succeeded locally. Closes #25154 from HeartSaVioR/SPARK-28247-branch-2.4. Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> | 16 July 2019, 13:27:53 UTC |