https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.4.3

- refs/tags/v3.4.3-rc1

- refs/tags/v3.4.3-rc2

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2

- refs/tags/v3.5.2-rc1

- refs/tags/v3.5.2-rc2

- refs/tags/v3.5.2-rc3

- refs/tags/v3.5.2-rc4

- refs/tags/v4.0.0-preview1

- refs/tags/v4.0.0-preview1-rc1

- refs/tags/v4.0.0-preview1-rc2

- refs/tags/v4.0.0-preview1-rc3


| Revision | Author | Date | Message | Commit Date |

|---|---|---|---|---|

| 5103e00 | Liang-Chi Hsieh | 10 February 2023, 17:25:33 UTC | Preparing Spark release v3.3.2-rc1 | 10 February 2023, 17:25:33 UTC |

| 307ec98 | Liang-Chi Hsieh | 10 February 2023, 02:17:27 UTC | [MINOR][SS] Fix setTimeoutTimestamp doc ### What changes were proposed in this pull request? This patch updates the API doc of `setTimeoutTimestamp` of `GroupState`. ### Why are the changes needed? Update incorrect API doc. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Doc change only. Closes #39958 from viirya/fix_group_state. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> (cherry picked from commit a180e67d3859a4e145beaf671c1221fb4d6cbda7) Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com> | 10 February 2023, 02:17:51 UTC |

| 7205567 | awdavidson | 09 February 2023, 00:02:12 UTC | [SPARK-40819][SQL][FOLLOWUP] Update SqlConf version for nanosAsLong configuration As requested by HyukjinKwon in https://github.com/apache/spark/pull/38312 NB: This change needs to be backported ### What changes were proposed in this pull request? Update version set for "spark.sql.legacy.parquet.nanosAsLong" configuration in SqlConf. This update is required because the previous PR set version to `3.2.3` which has already been released. Updating to version `3.2.4` will correctly reflect when this configuration element was added ### Why are the changes needed? Correctness and to complete SPARK-40819 ### Does this PR introduce _any_ user-facing change? No, this is merely so this configuration element has the correct version ### How was this patch tested? N/A Closes #39943 from awdavidson/SPARK-40819_sql-conf. Authored-by: awdavidson <54780428+awdavidson@users.noreply.github.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 409c661542c4b966876f0af4119803de25670649) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 09 February 2023, 00:02:36 UTC |

| 3ec9b05 | alfreddavidson | 08 February 2023, 02:07:25 UTC | [SPARK-40819][SQL][3.3] Timestamp nanos behaviour regression As per HyukjinKwon request on https://github.com/apache/spark/pull/38312 to backport fix into 3.3 ### What changes were proposed in this pull request? Handle `TimeUnit.NANOS` for parquet `Timestamps` addressing a regression in behaviour since 3.2 ### Why are the changes needed? Since version 3.2 reading parquet files that contain attributes with type `TIMESTAMP(NANOS,true)` is not possible as ParquetSchemaConverter returns ``` Caused by: org.apache.spark.sql.AnalysisException: Illegal Parquet type: INT64 (TIMESTAMP(NANOS,true)) ``` https://issues.apache.org/jira/browse/SPARK-34661 introduced a change matching on the `LogicalTypeAnnotation` which only covers Timestamp cases for `TimeUnit.MILLIS` and `TimeUnit.MICROS`, meaning `TimeUnit.NANOS` would return `illegalType()`. Prior to 3.2 the matching used the `originalType`, which for `TIMESTAMP(NANOS,true)` returns `null` and therefore resulted in a `LongType`. The proposed change is to consider `TimeUnit.NANOS` and return `LongType`, making the behaviour the same as before. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Added unit test covering this scenario. Internally deployed to read parquet files that contain `TIMESTAMP(NANOS,true)` Closes #39904 from awdavidson/ts-nanos-fix-3.3. Lead-authored-by: alfreddavidson <alfie.davidson9@gmail.com> Co-authored-by: awdavidson <54780428+awdavidson@users.noreply.github.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 08 February 2023, 02:07:25 UTC |

| 51ed6ba | wayneguow | 07 February 2023, 07:11:09 UTC | [SPARK-41962][MINOR][SQL] Update the order of imports in class SpecificParquetRecordReaderBase ### What changes were proposed in this pull request? Update the order of imports in class SpecificParquetRecordReaderBase. ### Why are the changes needed? Follow the code style. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Passed GA. Closes #39906 from wayneguow/import. Authored-by: wayneguow <guow93@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit d6134f78d3d448a990af53beb8850ff91b71aef6) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 07 February 2023, 07:11:29 UTC |

| 17b7123 | Peter Toth | 06 February 2023, 12:36:57 UTC | [SPARK-42346][SQL] Rewrite distinct aggregates after subquery merge ### What changes were proposed in this pull request? Unfortunately https://github.com/apache/spark/pull/32298 introduced a regression from Spark 3.2 to 3.3 as after that change a merged subquery can contain multiple distinct type aggregates. Those aggregates need to be rewritten by the `RewriteDistinctAggregates` rule to get the correct results. This PR fixes that. ### Why are the changes needed? The following query: ``` SELECT (SELECT count(distinct c1) FROM t1), (SELECT count(distinct c2) FROM t1) ``` currently fails with: ``` java.lang.IllegalStateException: You hit a query analyzer bug. Please report your query to Spark user mailing list. at org.apache.spark.sql.execution.SparkStrategies$Aggregation$.apply(SparkStrategies.scala:538) ``` but works again after this PR. ### Does this PR introduce _any_ user-facing change? Yes, the above query works again. ### How was this patch tested? Added new UT. Closes #39887 from peter-toth/SPARK-42346-rewrite-distinct-aggregates-after-subquery-merge. Authored-by: Peter Toth <peter.toth@gmail.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 5940b9884b4b172f65220da7857d2952b137bc51) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 06 February 2023, 13:22:58 UTC |

| cdb494b | Yan Wei | 05 February 2023, 11:08:01 UTC | [SPARK-42344][K8S] Change the default size of the CONFIG_MAP_MAXSIZE The default size of the CONFIG_MAP_MAXSIZE should not be greater than 1048576 ### What changes were proposed in this pull request? This PR changed the default size of the CONFIG_MAP_MAXSIZE from 1572864 (1.5 MiB) to 1048576 (1.0 MiB) ### Why are the changes needed? When Spark submits a job with a configmap to K8S, spark-submit calls the K8S POST API "api/v1/namespaces/default/configmaps". The size of the configmap is validated by this K8S API, and the max value should not be greater than 1048576. In the previous comment, the explanation in https://etcd.io/docs/v3.4/dev-guide/limit/ is: "etcd is designed to handle small key value pairs typical for metadata. Larger requests will work, but may increase the latency of other requests. By default, the maximum size of any request is 1.5 MiB. This limit is configurable through --max-request-bytes flag for etcd server." This explanation is from the perspective of etcd, not K8S. So I think the default value of the configmap in Spark should not be greater than 1048576. ### Does this PR introduce _any_ user-facing change? Yes. Generally, the size of the configmap will not exceed 1572864 or even 1048576. So the problem solved here may not be perceived by users. ### How was this patch tested? local test Closes #39884 from ninebigbig/master. Authored-by: Yan Wei <ninebigbig@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 9ac46408ec943d5121bbc14f2ce0d8b2ff453de5) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit d07a0e9b476e1d846e7be13394c8251244cc832e) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 05 February 2023, 11:12:19 UTC |

| 2d539c5 | oleksii.diagiliev | 03 February 2023, 16:48:56 UTC | [SPARK-41554] fix changing of Decimal scale when scale decreased by m… …ore than 18 This is a backport PR for https://github.com/apache/spark/pull/39099 Closes #39813 from fe2s/branch-3.3-fix-decimal-scaling. Authored-by: oleksii.diagiliev <oleksii.diagiliev@workday.com> Signed-off-by: Sean Owen <srowen@gmail.com> | 03 February 2023, 16:48:56 UTC |

| 6e0dfa9 | Deepyaman Datta | 03 February 2023, 06:50:32 UTC | [MINOR][DOCS][PYTHON][PS] Fix the `.groupby()` method docstring ### What changes were proposed in this pull request? Update the docstring for the `.groupby()` method. ### Why are the changes needed? The `.groupby()` method accept a list of columns (or a single column), and a column is defined by a `Series` or name (`Label`). It's a bit confusing to say "using a Series of columns", because `Series` (capitalized) is a specific object that isn't actually used/reasonable to use here. ### Does this PR introduce _any_ user-facing change? Yes (documentation) ### How was this patch tested? N/A Closes #38625 from deepyaman/patch-3. Authored-by: Deepyaman Datta <deepyaman.datta@utexas.edu> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 71154dc1b35c7227ef9033fe5abc2a8b3f2d0990) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 03 February 2023, 06:51:15 UTC |

| 80e8df1 | Wenchen Fan | 01 February 2023, 09:36:14 UTC | [SPARK-42259][SQL] ResolveGroupingAnalytics should take care of Python UDAF This is a long-standing correctness issue with Python UDAF and grouping analytics. The rule `ResolveGroupingAnalytics` should take care of Python UDAF when matching aggregate expressions. bug fix Yes, the query result was wrong before existing tests Closes #39824 from cloud-fan/python. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 1219c8492376e038894111cd5d922229260482e7) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 01 February 2023, 09:40:56 UTC |

| 0bb8f22 | Dongjoon Hyun | 30 January 2023, 19:59:27 UTC | [SPARK-42230][INFRA][FOLLOWUP] Add `GITHUB_PREV_SHA` and `APACHE_SPARK_REF` to lint job ### What changes were proposed in this pull request? Like the other jobs, this PR aims to add `GITHUB_PREV_SHA` and `APACHE_SPARK_REF` environment variables to `lint` job. ### Why are the changes needed? This is required to detect the changed module accurately. ### Does this PR introduce _any_ user-facing change? No, this is a infra-only bug fix. ### How was this patch tested? Manual review. Closes #39809 from dongjoon-hyun/SPARK-42230-2. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 1304a3329d7feb1bd6f9a9dba09f37494c9bb4a2) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 30 January 2023, 19:59:45 UTC |

| 9d7373a | Dongjoon Hyun | 30 January 2023, 06:15:52 UTC | [SPARK-42230][INFRA] Improve `lint` job by skipping PySpark and SparkR docs if unchanged ### What changes were proposed in this pull request? This PR aims to improve `GitHub Action lint` job by skipping `PySpark` and `SparkR` documentation generations if PySpark and R module is unchanged. ### Why are the changes needed? `Documentation Generation` took over 53 minutes because it generates all Java/Scala/SQL/PySpark/R documentation always. However, `R` module is not changed frequently so that the documentation is always identical. `PySpark` module is more frequently changed, but still we can skip in many cases. This PR shows the reduction from `53` minutes to `18` minutes. **BEFORE**  **AFTER**  ### Does this PR introduce _any_ user-facing change? No, this is an infra only change. ### How was this patch tested? Manual review. Closes #39792 from dongjoon-hyun/SPARK-42230. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 1d3c2681d26bf6034d15ee261e5395e9f45d67f8) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 30 January 2023, 07:38:48 UTC |

| 6cd4ff6 | Enrico Minack | 29 January 2023, 07:25:13 UTC | [SPARK-42168][3.3][SQL][PYTHON][FOLLOW-UP] Test FlatMapCoGroupsInPandas with Window function ### What changes were proposed in this pull request? This ports tests from #39717 in branch-3.2 to branch-3.3. See https://github.com/apache/spark/pull/39752#issuecomment-1407157253. ### Why are the changes needed? To make sure this use case is tested. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? E2E test in `test_pandas_cogrouped_map.py` and analysis test in `EnsureRequirementsSuite.scala`. Closes #39781 from EnricoMi/branch-3.3-cogroup-window-bug-test. Authored-by: Enrico Minack <github@enrico.minack.dev> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 29 January 2023, 07:25:13 UTC |

| 289e650 | Dongjoon Hyun | 27 January 2023, 00:39:17 UTC | [SPARK-42201][BUILD] `build/sbt` should allow `SBT_OPTS` to override JVM memory setting ### What changes were proposed in this pull request? This PR aims to fix a bug which `build/sbt` doesn't allow JVM memory setting via `SBT_OPTS`. ### Why are the changes needed? `SBT_OPTS` is supposed to be used in this way in the community. https://github.com/apache/spark/blob/e30bb538e480940b1963eb14c3267662912d8584/appveyor.yml#L54 However, `SBT_OPTS` memory setting like the following is ignored because ` -Xms4096m -Xmx4096m -XX:ReservedCodeCacheSize=512m` is injected by default after `SBT_OPTS`. We should switch the order. ``` $ SBT_OPTS="-Xmx6g" build/sbt package ``` https://github.com/apache/spark/blob/e30bb538e480940b1963eb14c3267662912d8584/build/sbt-launch-lib.bash#L124 ### Does this PR introduce _any_ user-facing change? No. This is a dev-only change. ### How was this patch tested? Manually run the following. ``` $ SBT_OPTS="-Xmx6g" build/sbt package ``` While running the above command, check the JVM options. ``` $ ps aux | grep java dongjoon 36683 434.3 3.1 418465456 1031888 s001 R+ 1:11PM 0:19.86 /Users/dongjoon/.jenv/versions/temurin17/bin/java -Xms4096m -Xmx4096m -XX:ReservedCodeCacheSize=512m -Xmx6g -jar build/sbt-launch-1.8.2.jar package ``` Closes #39758 from dongjoon-hyun/SPARK-42201. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 66ec1eb630a4682f5ad2ed2ee989ffcce9031608) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 January 2023, 00:39:35 UTC |

| 518a24c | Steve Vaughan Jr | 26 January 2023, 02:17:39 UTC | [SPARK-42188][BUILD][3.3] Force SBT protobuf version to match Maven ### What changes were proposed in this pull request? Update `SparkBuild.scala` to force SBT use of `protobuf-java` to match the Maven version. The Maven dependencyManagement section forces `protobuf-java` to use `2.5.0`, but SBT is using `3.14.0`. ### Why are the changes needed? Define `protoVersion` in `SparkBuild.scala` and use it in `DependencyOverrides` to force the SBT version of `protobuf-java` to match the setting defined in the Maven top-level `pom.xml`. Add comments to both `pom.xml` and `SparkBuild.scala` to ensure that the values are kept in sync. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Before the update, SBT reported using `3.14.0`: ``` % build/sbt dependencyTree | grep proto | sed 's/^.*-com/com/' | sort | uniq -c 8 com.google.protobuf:protobuf-java:2.5.0 (evicted by: 3.14.0) 70 com.google.protobuf:protobuf-java:3.14.0 ``` After the patch is applied, SBT reports using `2.5.0`: ``` % build/sbt dependencyTree | grep proto | sed 's/^.*-com/com/' | sort | uniq -c 70 com.google.protobuf:protobuf-java:2.5.0 ``` Closes #39746 from snmvaughan/feature/SPARK-42188-3.3. Authored-by: Steve Vaughan Jr <s_vaughan@apple.com> Signed-off-by: huaxingao <huaxin_gao@apple.com> | 26 January 2023, 02:17:39 UTC |

| d69e7b6 | Dongjoon Hyun | 25 January 2023, 11:24:22 UTC | [SPARK-42179][BUILD][SQL][3.3] Upgrade ORC to 1.7.8 ### What changes were proposed in this pull request? This PR aims to upgrade ORC to 1.7.8 for Apache Spark 3.3.2. ### Why are the changes needed? Apache ORC 1.7.8 is a maintenance release with important bug fixes. - https://orc.apache.org/news/2023/01/21/ORC-1.7.8/ - [ORC-1332](https://issues.apache.org/jira/browse/ORC-1332) Avoid NegativeArraySizeException when using searchArgument - [ORC-1343](https://issues.apache.org/jira/browse/ORC-1343) Ignore orc.create.index ### Does this PR introduce _any_ user-facing change? The ORC dependency is going to be changed from 1.7.6 (Apache Spark 3.3.1) to 1.7.8 (Apache Spark 3.3.2) ### How was this patch tested? Pass the CIs. Closes #39735 from dongjoon-hyun/SPARK-42179. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 25 January 2023, 11:24:22 UTC |

| 04edc7e | Ivan Sadikov | 25 January 2023, 03:32:25 UTC | [SPARK-42176][SQL] Fix cast of a boolean value to timestamp The PR fixes an issue when casting a boolean to timestamp. While `select cast(true as timestamp)` works and returns `1970-01-01 00:00:00.000001`, casting `false` to timestamp fails with the following error: > IllegalArgumentException: requirement failed: Literal must have a corresponding value to timestamp, but class Integer found. SBT test also fails with this error: ``` [info] java.lang.ClassCastException: java.lang.Integer cannot be cast to java.lang.Long [info] at scala.runtime.BoxesRunTime.unboxToLong(BoxesRunTime.java:107) [info] at org.apache.spark.sql.catalyst.InternalRow$.$anonfun$getWriter$5(InternalRow.scala:178) [info] at org.apache.spark.sql.catalyst.InternalRow$.$anonfun$getWriter$5$adapted(InternalRow.scala:178) ``` The issue was that we need to return `0L` instead of `0` when converting `false` to a long. Fixes a small bug in cast. No. I added a unit test to verify the fix. Closes #39729 from sadikovi/fix_spark_boolean_to_timestamp. Authored-by: Ivan Sadikov <ivan.sadikov@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 866343c7be47d71b88ae9a6b4dda26f8c4f5964b) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 25 January 2023, 03:33:15 UTC |

| b5cbee8 | Ted Yu | 24 January 2023, 18:23:25 UTC | [SPARK-42090][3.3] Introduce sasl retry count in RetryingBlockTransferor ### What changes were proposed in this pull request? This PR introduces sasl retry count in RetryingBlockTransferor. ### Why are the changes needed? Previously a boolean variable, saslTimeoutSeen, was used. However, the boolean variable wouldn't cover the following scenario: 1. SaslTimeoutException 2. IOException 3. SaslTimeoutException 4. IOException Even though IOException at #2 is retried (resulting in increment of retryCount), the retryCount would be cleared at step #4. Since the intention of saslTimeoutSeen is to undo the increment due to retrying SaslTimeoutException, we should keep a counter for SaslTimeoutException retries and subtract the value of this counter from retryCount. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? New test is added, courtesy of Mridul. Closes #39611 from tedyu/sasl-cnt. Authored-by: Ted Yu <yuzhihonggmail.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> Closes #39709 from akpatnam25/SPARK-42090-backport-3.3. Authored-by: Ted Yu <yuzhihong@gmail.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> | 24 January 2023, 18:23:25 UTC |

| 0fff27d | Dongjoon Hyun | 24 January 2023, 08:06:25 UTC | [MINOR][K8S][DOCS] Add all resource managers in `Scheduling Within an Application` section ### What changes were proposed in this pull request? `Job Scheduling` document doesn't mention `K8s resource manager` so far because `Scheduling Across Applications` section only mentions all resource managers except K8s. This PR aims to add all supported resource managers in `Scheduling Within an Application section` section. ### Why are the changes needed? K8s also supports `FAIR` schedule within an application. ### Does this PR introduce _any_ user-facing change? No. This is a doc-only update. ### How was this patch tested? N/A Closes #39704 from dongjoon-hyun/minor_job_scheduling. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 45dbc44410f9bf74c7fb4431aad458db32960461) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 24 January 2023, 08:06:32 UTC |

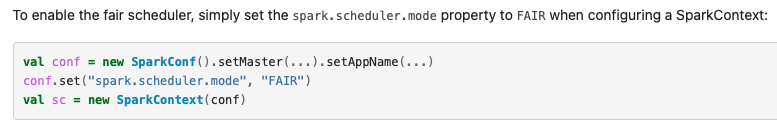

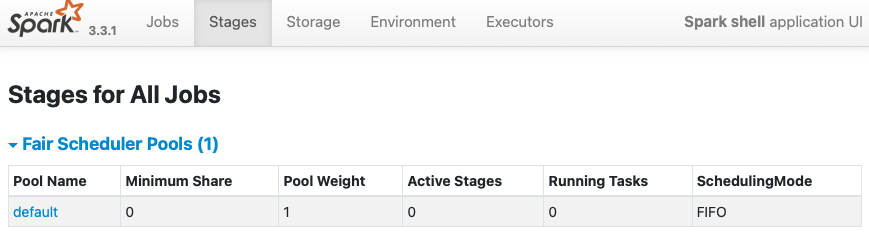

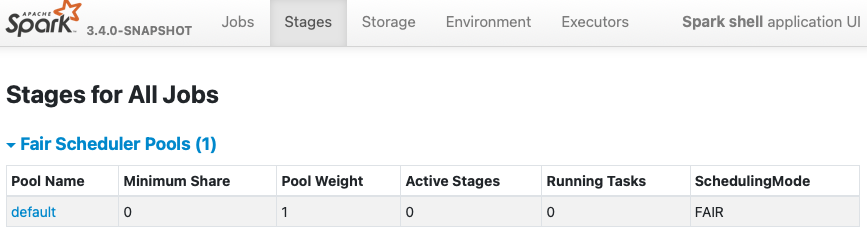

| 41e6875 | Dongjoon Hyun | 24 January 2023, 07:47:26 UTC | [SPARK-42157][CORE] `spark.scheduler.mode=FAIR` should provide FAIR scheduler ### What changes were proposed in this pull request? Like our documentation, `spark.scheduler.mode=FAIR` should provide a `FAIR Scheduling Within an Application`. https://spark.apache.org/docs/latest/job-scheduling.html#scheduling-within-an-application This bug is hidden in our CI because we have `fairscheduler.xml` always as one of test resources. - https://github.com/apache/spark/blob/master/core/src/test/resources/fairscheduler.xml ### Why are the changes needed? Currently, when `spark.scheduler.mode=FAIR` is given without a scheduler allocation file, Spark creates `Fair Scheduler Pools` with `FIFO` scheduler which is wrong. We need to switch the mode to `FAIR` from `FIFO`. **BEFORE** ``` $ bin/spark-shell -c spark.scheduler.mode=FAIR Setting default log level to "WARN". To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). 23/01/22 14:47:37 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable 23/01/22 14:47:38 WARN FairSchedulableBuilder: Fair Scheduler configuration file not found so jobs will be scheduled in FIFO order. To use fair scheduling, configure pools in fairscheduler.xml or set spark.scheduler.allocation.file to a file that contains the configuration. Spark context Web UI available at http://localhost:4040 ``` **AFTER** ``` $ bin/spark-shell -c spark.scheduler.mode=FAIR Setting default log level to "WARN". To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). 23/01/22 14:48:18 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable Spark context Web UI available at http://localhost:4040 ``` ### Does this PR introduce _any_ user-facing change? Yes, but this is a bug fix to match with Apache Spark official documentation. ### How was this patch tested? Pass the CIs. Closes #39703 from dongjoon-hyun/SPARK-42157. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 4d51bfa725c26996641f566e42ae392195d639c5) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 24 January 2023, 07:48:27 UTC |

| b7ababf | Aravind Patnam | 21 January 2023, 03:30:51 UTC | [SPARK-41415][3.3] SASL Request Retries ### What changes were proposed in this pull request? Add the ability to retry SASL requests. Will add it as a metric too soon to track SASL retries. ### Why are the changes needed? We are seeing increased SASL timeouts internally, and this issue would mitigate the issue. We already have this feature enabled for our 2.3 jobs, and we have seen failures significantly decrease. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Added unit tests, and tested on cluster to ensure the retries are being triggered correctly. Closes #38959 from akpatnam25/SPARK-41415. Authored-by: Aravind Patnam <apatnamlinkedin.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> Closes #39644 from akpatnam25/SPARK-41415-backport-3.3. Authored-by: Aravind Patnam <apatnam@linkedin.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> | 21 January 2023, 03:30:51 UTC |

| 8f09a69 | Peter Toth | 21 January 2023, 02:35:33 UTC | [SPARK-42134][SQL] Fix getPartitionFiltersAndDataFilters() to handle filters without referenced attributes ### What changes were proposed in this pull request? This is a small correctness fix to `DataSourceUtils.getPartitionFiltersAndDataFilters()` to handle filters without any referenced attributes correctly. E.g. without the fix the following query on ParquetV2 source: ``` spark.conf.set("spark.sql.sources.useV1SourceList", "") spark.range(1).write.mode("overwrite").format("parquet").save(path) df = spark.read.parquet(path).toDF("i") f = udf(lambda x: False, "boolean")(lit(1)) val r = df.filter(f) r.show() ``` returns ``` +---+ | i| +---+ | 0| +---+ ``` but it should return with empty results. The root cause of the issue is that during `V2ScanRelationPushDown` a filter that doesn't reference any column is incorrectly identified as partition filter. ### Why are the changes needed? To fix a correctness issue. ### Does this PR introduce _any_ user-facing change? Yes, fixes a correctness issue. ### How was this patch tested? Added new UT. Closes #39676 from peter-toth/SPARK-42134-fix-getpartitionfiltersanddatafilters. Authored-by: Peter Toth <peter.toth@gmail.com> Signed-off-by: huaxingao <huaxin_gao@apple.com> (cherry picked from commit dcdcb80c53681d1daff416c007cf8a2810155625) Signed-off-by: huaxingao <huaxin_gao@apple.com> | 21 January 2023, 02:35:57 UTC |

| ffa6cbf | Anton Ippolitov | 20 January 2023, 22:39:56 UTC | [SPARK-40817][K8S][3.3] `spark.files` should preserve remote files ### What changes were proposed in this pull request? Backport https://github.com/apache/spark/pull/38376 to `branch-3.3` You can find a detailed description of the issue and an example reproduction on the Jira card: https://issues.apache.org/jira/browse/SPARK-40817 The idea for this fix is to update the logic which uploads user-specified files (via `spark.jars`, `spark.files`, etc) to `spark.kubernetes.file.upload.path`. After uploading local files, it used to overwrite the initial list of URIs passed by the user and it would thus erase all remote URIs which were specified there. Small example of this behaviour: 1. User set the value of `spark.jars` to `s3a://some-bucket/my-application.jar,/tmp/some-local-jar.jar` when running `spark-submit` in cluster mode 2. `BasicDriverFeatureStep.getAdditionalPodSystemProperties()` gets called at one point while running `spark-submit` 3. This function would set `spark.jars` to a new value of `${SPARK_KUBERNETES_UPLOAD_PATH}/spark-upload-${RANDOM_STRING}/some-local-jar.jar`. Note that `s3a://some-bucket/my-application.jar` has been discarded. With the logic proposed in this PR, the new value of `spark.jars` would be `s3a://some-bucket/my-application.jar,${SPARK_KUBERNETES_UPLOAD_PATH}/spark-upload-${RANDOM_STRING}/some-local-jar.jar`, so in other words we are making sure that remote URIs are no longer discarded. ### Why are the changes needed? We encountered this issue in production when trying to launch Spark on Kubernetes jobs in cluster mode with a fix of local and remote dependencies. ### Does this PR introduce _any_ user-facing change? Yes, see description of the new behaviour above. ### How was this patch tested? 
- Added a unit test for the new behaviour - Added an integration test for the new behaviour - Tried this patch in our Kubernetes environment with `SparkPi`: ``` spark-submit \ --master k8s://https://$KUBERNETES_API_SERVER_URL:443 \ --deploy-mode cluster \ --name=spark-submit-test \ --class org.apache.spark.examples.SparkPi \ --conf spark.jars=/opt/my-local-jar.jar,s3a://$BUCKET_NAME/my-remote-jar.jar \ --conf spark.kubernetes.file.upload.path=s3a://$BUCKET_NAME/my-upload-path/ \ [...] /opt/spark/examples/jars/spark-examples_2.12-3.1.3.jar ``` Before applying the patch, `s3a://$BUCKET_NAME/my-remote-jar.jar` was discarded from the final value of `spark.jars`. After applying the patch and launching the job again, I confirmed that `s3a://$BUCKET_NAME/my-remote-jar.jar` was no longer discarded by looking at the Spark config for the running job. Closes #39669 from antonipp/spark-40817-branch-3.3. Authored-by: Anton Ippolitov <anton.ippolitov@datadoghq.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 20 January 2023, 22:39:56 UTC |
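
The before/after behaviour of `spark.jars` described in this commit can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the Kubernetes feature step itself: `is_local`, `resolve_uris`, and the `upload` callback are hypothetical names, and the sketch assumes that only scheme-less paths and `file:` URIs count as local.

```python
from typing import Callable, List
from urllib.parse import urlparse


def is_local(uri: str) -> bool:
    """Treat scheme-less paths and file: URIs as local (an assumption of this sketch)."""
    return urlparse(uri).scheme in ("", "file")


def resolve_uris(uris: List[str], upload: Callable[[str], str]) -> List[str]:
    """Replace each local entry with its uploaded location; keep remote entries as-is."""
    return [upload(u) if is_local(u) else u for u in uris]
```

The old behaviour corresponds to returning only the uploaded entries, which is how the remote `s3a://` URI was lost. Mapping over the full list keeps every remote URI in place.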

| 0d37682 | Dongjoon Hyun | 18 January 2023, 22:51:29 UTC | [SPARK-42110][SQL][TESTS] Reduce the number of repetitions in ParquetDeltaEncodingSuite.`random data test` ### What changes were proposed in this pull request? `random data test` takes about 4 minutes in GitHub Actions and longer in some other environments. ### Why are the changes needed? - https://github.com/apache/spark/actions/runs/3948081724/jobs/6757667891 ``` ParquetDeltaEncodingInt - random data test (1 minute, 51 seconds) ... ParquetDeltaEncodingLong ... - random data test (1 minute, 54 seconds) ``` ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass the CIs. Closes #39648 from dongjoon-hyun/SPARK-42110. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit d4f757baca64b4b66dd8f4e0b09bf085cce34af5) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 19 January 2023, 00:36:05 UTC |

| 9a8b652 | Wenchen Fan | 18 January 2023, 10:43:55 UTC | [SPARK-42084][SQL] Avoid leaking the qualified-access-only restriction This is a better fix than https://github.com/apache/spark/pull/39077 and https://github.com/apache/spark/pull/38862 The special attribute metadata `__qualified_access_only` is very risky, as it breaks normal column resolution. The aforementioned 2 PRs remove the restriction in `SubqueryAlias` and `Alias`, but it's not good enough as we may forget to do the same thing for new logical plans/expressions in the future. It's also problematic if advanced users manipulate logical plans and expressions directly, when there is no `SubqueryAlias` and `Alias` to remove the restriction. To be safe, we should only apply this restriction when resolving join hidden columns, which means the plan node right above `Project(Join(using or natural join))`. This PR simply removes the restriction when a column is resolved from a sequence of `Attributes`, or from star expansion, and also when adding the `Project` hidden columns to its output. This makes sure that the qualified-access-only restriction will not be leaked to normal column resolution, but only metadata column resolution. To make the join hidden column feature more robust No existing tests Closes #39596 from cloud-fan/join. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 18 January 2023, 10:58:56 UTC |

| 408b583 | Dongjoon Hyun | 15 January 2023, 09:09:54 UTC | [SPARK-42071][CORE] Register `scala.math.Ordering$Reverse` to KryoSerializer ### What changes were proposed in this pull request? This PR aims to register `scala.math.Ordering$Reverse` to KryoSerializer. ### Why are the changes needed? Scala 2.12.12 added a new class 'Reverse' via https://github.com/scala/scala/pull/8965. This affects Apache Spark 3.2.0+. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs with the newly added test case. Closes #39578 from dongjoon-hyun/SPARK-42071. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit e3c0fbeadfe5242fa6265cb0646d72d3b5f6ef35) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 15 January 2023, 09:10:03 UTC |

| ff30903 | Stefaan Lippens | 12 January 2023, 09:24:30 UTC | [SPARK-41989][PYTHON] Avoid breaking logging config from pyspark.pandas See https://issues.apache.org/jira/browse/SPARK-41989 for in depth explanation Short summary: `pyspark/pandas/__init__.py` uses, at import time, `logging.warning()` which might silently call `logging.basicConfig()`. So by importing `pyspark.pandas` (directly or indirectly) a user might unknowingly break their own logging setup (e.g. when based on `logging.basicConfig()` or related). `logging.getLogger(...).warning()` does not trigger this behavior. User-defined logging setups will be more predictable. Manual testing so far. I'm not sure it's worthwhile to cover this with a unit test Closes #39516 from soxofaan/SPARK-41989-pyspark-pandas-logging-setup. Authored-by: Stefaan Lippens <stefaan.lippens@vito.be> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 04836babb7a1a2aafa7c65393c53c42937ef75a4) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 12 January 2023, 09:25:30 UTC |
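
The difference between the two logging calls named in this commit can be checked with the standard library alone. The sketch below is illustrative: `root_handler_counts` and the logger name `demo.pandas_like` are hypothetical, and the function temporarily clears the root logger's handlers so that the result does not depend on any logging setup already in place.

```python
import logging
from typing import Tuple


def root_handler_counts() -> Tuple[int, int]:
    """Count root-logger handlers after a named-logger warning, then after logging.warning()."""
    root = logging.getLogger()
    saved = root.handlers[:]
    root.handlers.clear()  # start from an unconfigured root logger
    try:
        # A named logger emits via the last-resort handler and adds no handler to root.
        logging.getLogger("demo.pandas_like").warning("warning via a named logger")
        after_named = len(root.handlers)
        # The module-level function calls logging.basicConfig() when root has no handlers.
        logging.warning("warning via the module-level function")
        after_module = len(root.handlers)
        return after_named, after_module
    finally:
        root.handlers[:] = saved  # restore the caller's logging setup
```

Once the root logger has a handler, a later `logging.basicConfig(...)` call in user code does nothing unless `force=True` is passed, which is how an import-time `logging.warning()` can silently override a user's intended configuration.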

| b97f79d | Enrico Minack | 06 January 2023, 03:32:45 UTC | [SPARK-41162][SQL][3.3] Fix anti- and semi-join for self-join with aggregations ### What changes were proposed in this pull request? Backport #39131 to branch-3.3. Rule `PushDownLeftSemiAntiJoin` should not push an anti-join below an `Aggregate` when the join condition references an attribute that exists in its right plan and its left plan's child. This usually happens when the anti-join / semi-join is a self-join while `DeduplicateRelations` cannot deduplicate those attributes (in this example due to the projection of `value` to `id`). This behaviour already exists for `Project` and `Union`, but `Aggregate` lacks this safety guard. ### Why are the changes needed? Without this change, the optimizer creates an incorrect plan. This example fails with `distinct()` (an aggregation), and succeeds without `distinct()`, but both queries are identical: ```scala val ids = Seq(1, 2, 3).toDF("id").distinct() val result = ids.withColumn("id", $"id" + 1).join(ids, Seq("id"), "left_anti").collect() assert(result.length == 1) ``` With `distinct()`, rule `PushDownLeftSemiAntiJoin` creates a join condition `(value#907 + 1) = value#907`, which can never be true. This effectively removes the anti-join. **Before this PR:** The anti-join is fully removed from the plan. 
``` == Physical Plan == AdaptiveSparkPlan (16) +- == Final Plan == LocalTableScan (1) (16) AdaptiveSparkPlan Output [1]: [id#900] Arguments: isFinalPlan=true ``` This is caused by `PushDownLeftSemiAntiJoin` adding join condition `(value#907 + 1) = value#907`, which is wrong because `id#910` in `(id#910 + 1) AS id#912` exists in the right child of the join as well as in the left grandchild: ``` === Applying Rule org.apache.spark.sql.catalyst.optimizer.PushDownLeftSemiAntiJoin === !Join LeftAnti, (id#912 = id#910) Aggregate [id#910], [(id#910 + 1) AS id#912] !:- Aggregate [id#910], [(id#910 + 1) AS id#912] +- Project [value#907 AS id#910] !: +- Project [value#907 AS id#910] +- Join LeftAnti, ((value#907 + 1) = value#907) !: +- LocalRelation [value#907] :- LocalRelation [value#907] !+- Aggregate [id#910], [id#910] +- Aggregate [id#910], [id#910] ! +- Project [value#914 AS id#910] +- Project [value#914 AS id#910] ! +- LocalRelation [value#914] +- LocalRelation [value#914] ``` The right child of the join and the left grandchild would become the children of the pushed-down join, which creates an invalid join condition. **After this PR:** Join condition `(id#910 + 1) AS id#912` is understood to become ambiguous as both sides of the prospective join contain `id#910`. Hence, the join is not pushed down. The rule is then not applied any more. 
The final plan contains the anti-join: ``` == Physical Plan == AdaptiveSparkPlan (24) +- == Final Plan == * BroadcastHashJoin LeftSemi BuildRight (14) :- * HashAggregate (7) : +- AQEShuffleRead (6) : +- ShuffleQueryStage (5), Statistics(sizeInBytes=48.0 B, rowCount=3) : +- Exchange (4) : +- * HashAggregate (3) : +- * Project (2) : +- * LocalTableScan (1) +- BroadcastQueryStage (13), Statistics(sizeInBytes=1024.0 KiB, rowCount=3) +- BroadcastExchange (12) +- * HashAggregate (11) +- AQEShuffleRead (10) +- ShuffleQueryStage (9), Statistics(sizeInBytes=48.0 B, rowCount=3) +- ReusedExchange (8) (8) ReusedExchange [Reuses operator id: 4] Output [1]: [id#898] (24) AdaptiveSparkPlan Output [1]: [id#900] Arguments: isFinalPlan=true ``` ### Does this PR introduce _any_ user-facing change? It fixes correctness. ### How was this patch tested? Unit tests in `DataFrameJoinSuite` and `LeftSemiAntiJoinPushDownSuite`. Closes #39409 from EnricoMi/branch-antijoin-selfjoin-fix-3.3. Authored-by: Enrico Minack <github@enrico.minack.dev> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 06 January 2023, 03:32:45 UTC |

| 977e445 | Dongjoon Hyun | 03 January 2023, 23:00:50 UTC | [SPARK-41864][INFRA][PYTHON] Fix mypy linter errors Currently, the GitHub Actions Python linter job is broken. This PR fixes the Python linter failure. There are two kinds of failures. 1. https://github.com/apache/spark/actions/runs/3829330032/jobs/6524170799 ``` python/pyspark/pandas/sql_processor.py:221: error: unused "type: ignore" comment Found 1 error in 1 file (checked 380 source files) ``` 2. After fixing (1), we hit the following. ``` ModuleNotFoundError: No module named 'py._path'; 'py' is not a package ``` No. Pass the GitHub CI on this PR. Or, manually run the following. ``` $ dev/lint-python starting python compilation test... python compilation succeeded. starting black test... black checks passed. starting flake8 test... flake8 checks passed. starting mypy annotations test... annotations passed mypy checks. starting mypy examples test... examples passed mypy checks. starting mypy data test... annotations passed data checks. all lint-python tests passed! ``` Closes #39373 from dongjoon-hyun/SPARK-41864. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 13b2856e6e77392a417d2bb2ce804f873ee72b28) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 04 January 2023, 03:10:04 UTC |

| 2da30ad | Dongjoon Hyun | 03 January 2023, 23:01:43 UTC | [SPARK-41863][INFRA][PYTHON][TESTS] Skip `flake8` tests if the command is not available ### What changes were proposed in this pull request? This PR aims to skip `flake8` tests if the command is not available. ### Why are the changes needed? Linters are optional modules and can be skipped on some systems, as is already done for `mypy`. ``` $ dev/lint-python starting python compilation test... python compilation succeeded. The Python library providing 'black' module was not found. Skipping black checks for now. The flake8 command was not found. Skipping for now. The mypy command was not found. Skipping for now. all lint-python tests passed! ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual tests. Closes #39372 from dongjoon-hyun/SPARK-41863. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 1a5ef40a4d59b377b028b55ea3805caf5d55f28f) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 03 January 2023, 23:01:52 UTC |

| 02a7fda | Jungtaek Lim | 28 December 2022, 11:51:09 UTC | [SPARK-41732][SQL][SS][3.3] Apply tree-pattern based pruning for the rule SessionWindowing This PR ports back #39245 to branch-3.3. ### What changes were proposed in this pull request? This PR proposes to apply tree-pattern based pruning for the rule SessionWindowing, to minimize the evaluation of rule with SessionWindow node. ### Why are the changes needed? The rule SessionWindowing is unnecessarily evaluated multiple times without proper pruning. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing tests. Closes #39253 from HeartSaVioR/SPARK-41732-3.3. Authored-by: Jungtaek Lim <kabhwan.opensource@gmail.com> Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com> | 28 December 2022, 11:51:09 UTC |

| 0887a2f | Wenchen Fan | 28 December 2022, 08:57:18 UTC | Revert "[MINOR][TEST][SQL] Add a CTE subquery scope test case" This reverts commit aa39b06462a98f37be59e239d12edd9f09a25b88. | 28 December 2022, 08:57:18 UTC |

| aa39b06 | Reynold Xin | 23 December 2022, 22:55:14 UTC | [MINOR][TEST][SQL] Add a CTE subquery scope test case ### What changes were proposed in this pull request? I noticed we were missing a test case for this in SQL tests, so I added one. ### Why are the changes needed? To ensure we scope CTEs properly in subqueries. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? This is a test case change. Closes #39189 from rxin/cte_test. Authored-by: Reynold Xin <rxin@databricks.com> Signed-off-by: Reynold Xin <rxin@databricks.com> (cherry picked from commit 24edf8ecb5e47af294f89552dfd9957a2d9f193b) Signed-off-by: Reynold Xin <rxin@databricks.com> | 23 December 2022, 22:55:54 UTC |

| 19824cf | Tobias Stadler | 22 December 2022, 12:53:35 UTC | [SPARK-41686][SPARK-41030][BUILD][3.3] Upgrade Apache Ivy to 2.5.1 ### What changes were proposed in this pull request? Upgrade Apache Ivy from 2.5.0 to 2.5.1 ### Why are the changes needed? [CVE-2022-37865](https://www.cve.org/CVERecord?id=CVE-2022-37865) and [CVE-2022-37866](https://nvd.nist.gov/vuln/detail/CVE-2022-37866) ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass GA Closes #39176 from tobiasstadler/SPARK-41686. Authored-by: Tobias Stadler <ts.stadler@gmx.de> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 22 December 2022, 12:53:35 UTC |

| 9934b56 | Wenchen Fan | 22 December 2022, 03:26:19 UTC | [SPARK-41350][3.3][SQL][FOLLOWUP] Allow simple name access of join hidden columns after alias backport https://github.com/apache/spark/pull/39077 to 3.3 Closes #39121 from cloud-fan/backport. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 22 December 2022, 03:26:19 UTC |

| 7cd6907 | Gengliang Wang | 22 December 2022, 02:33:51 UTC | [SPARK-41668][SQL] DECODE function returns wrong results when passed NULL ### What changes were proposed in this pull request? The DECODE function was implemented for Oracle compatibility. It works similar to CASE expression, but it is supposed to have one major difference: NULL == NULL https://docs.oracle.com/database/121/SQLRF/functions057.htm#SQLRF00631 The Spark implementation does not observe this, however: ``` > select decode(null, 6, 'Spark', NULL, 'SQL', 4, 'rocks'); NULL ``` The result is supposed to be 'SQL'. This PR is to fix the issue. ### Why are the changes needed? Bug fix and Oracle compatibility. ### Does this PR introduce _any_ user-facing change? Yes, DECODE function will return matched value when passed null, instead of always returning null. ### How was this patch tested? New UT. Closes #39163 from gengliangwang/fixDecode. Authored-by: Gengliang Wang <gengliang@apache.org> Signed-off-by: Gengliang Wang <gengliang@apache.org> (cherry picked from commit e09fcebdfaed22b28abbe5c9336f3a6fc92bd046) Signed-off-by: Gengliang Wang <gengliang@apache.org> | 22 December 2022, 02:34:03 UTC |
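
The semantics this commit describes, where NULL matches NULL inside DECODE but nowhere else, can be modelled in plain Python with `None` standing in for NULL. This is a sketch of the intended behaviour, not Spark's expression code: `sql_equals`, `decode`, and the `null_safe` flag are hypothetical names introduced for illustration.

```python
from typing import Any, Optional


def sql_equals(a: Any, b: Any) -> Optional[bool]:
    """SQL-style equality: comparing with NULL (None) yields NULL, not True."""
    if a is None or b is None:
        return None
    return a == b


def decode(expr: Any, *args: Any, null_safe: bool = True) -> Any:
    """Return the result paired with the first search value that matches expr.

    args is search1, result1, search2, result2, ..., optionally followed by a
    default. With null_safe=True, a NULL expr matches a NULL search value.
    """
    pairs, default = (args[:-1], args[-1]) if len(args) % 2 else (args, None)
    for search, result in zip(pairs[0::2], pairs[1::2]):
        if null_safe and expr is None and search is None:
            return result
        if sql_equals(expr, search):
            return result
    return default
```

Calling `decode(None, 6, "Spark", None, "SQL", 4, "rocks")` models the fixed behaviour, and passing `null_safe=False` models the behaviour before the fix, where no search value can ever match a NULL input.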

| b0c9b73 | Bruce Robbins | 20 December 2022, 00:29:18 UTC | [SPARK-41535][SQL] Set null correctly for calendar interval fields in `InterpretedUnsafeProjection` and `InterpretedMutableProjection` In `InterpretedUnsafeProjection`, use `UnsafeWriter.write`, rather than `UnsafeWriter.setNullAt`, to set null for interval fields. Also, in `InterpretedMutableProjection`, use `InternalRow.setInterval`, rather than `InternalRow.setNullAt`, to set null for interval fields. This returns the wrong answer: ``` set spark.sql.codegen.wholeStage=false; set spark.sql.codegen.factoryMode=NO_CODEGEN; select first(col1), last(col2) from values (make_interval(0, 0, 0, 7, 0, 0, 0), make_interval(17, 0, 0, 2, 0, 0, 0)) as data(col1, col2); +---------------+---------------+ |first(col1) |last(col2) | +---------------+---------------+ |16 years 2 days|16 years 2 days| +---------------+---------------+ ``` In the above case, `TungstenAggregationIterator` uses `InterpretedUnsafeProjection` to create the aggregation buffer and to initialize all the fields to null. `InterpretedUnsafeProjection` incorrectly calls `UnsafeRowWriter#setNullAt`, rather than `unsafeRowWriter#write`, for the two calendar interval fields. As a result, the writer never allocates memory from the variable length region for the two intervals, and the pointers in the fixed region get left as zero. Later, when `InterpretedMutableProjection` attempts to update the first field, `UnsafeRow#setInterval` picks up the zero pointer and stores interval data on top of the null-tracking bit set. The call to UnsafeRow#setInterval for the second field also stomps the null-tracking bit set. Later updates to the null-tracking bit set (e.g., calls to `setNotNullAt`) further corrupt the interval data, turning `interval 7 years 2 days` into `interval 16 years 2 days`. 
Even after one fixes the above bug in `InterpretedUnsafeProjection` so that the buffer is created correctly, `InterpretedMutableProjection` has a similar bug to SPARK-41395, except this time for calendar interval data: ``` set spark.sql.codegen.wholeStage=false; set spark.sql.codegen.factoryMode=NO_CODEGEN; select first(col1), last(col2), max(col3) from values (null, null, 1), (make_interval(0, 0, 0, 7, 0, 0, 0), make_interval(17, 0, 0, 2, 0, 0, 0), 3) as data(col1, col2, col3); +---------------+---------------+---------+ |first(col1) |last(col2) |max(col3)| +---------------+---------------+---------+ |16 years 2 days|16 years 2 days|3 | +---------------+---------------+---------+ ``` These two bugs could get exercised during codegen fallback. No. New unit tests. Closes #39117 from bersprockets/unsafe_interval_issue. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 7f153842041d66e9cf0465262f4458cfffda4f43) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 20 December 2022, 00:30:25 UTC |

| 356b56d | chenliang.lu | 16 December 2022, 19:30:01 UTC | [SPARK-41365][UI][3.3] Stages UI page fails to load for proxy in specific yarn environment Backport of https://github.com/apache/spark/pull/38882 ### What changes were proposed in this pull request? The Stages UI page fails to load behind a proxy in some specific YARN environments. ### Why are the changes needed? In my environment (CDH 5.8), when entering the Spark UI from the YARN Resource Manager page and visiting the stage URI, the page fails to load. The URI is http://<yarn-url>:8088/proxy/application_1669877165233_0021/stages/stage/?id=0&attempt=0 The issue is similar to the following two, and the final symptom is the same, because the parameter is encoded twice: [SPARK-32467](https://issues.apache.org/jira/browse/SPARK-32467) [SPARK-33611](https://issues.apache.org/jira/browse/SPARK-33611) Those two issues cover two scenarios to avoid encoding twice: 1. https redirect proxy 2. set reverse proxy enabled (spark.ui.reverseProxy) in Nginx But if the parameter is encoded twice for other reasons, such as in this issue (YARN proxy), visiting the stage page also fails. It is better to decode the parameter twice here, just like the fix in [SPARK-12708](https://issues.apache.org/jira/browse/SPARK-12708) [codes](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/ui/UIUtils.scala#L626) ### Does this PR introduce any user-facing change? No ### How was this patch tested? Newly added UT. Closes #39087 from yabola/fixui-backport. Authored-by: chenliang.lu <marssss2929@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 16 December 2022, 19:30:01 UTC |
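
The double-encoding problem in this commit is easy to reproduce with Python's `urllib.parse`. The sketch below is an illustration, not the Spark UI code: `decode_url_parameter` and `max_rounds` are hypothetical names, and decoding until the value stops changing is one possible way to tolerate a parameter that a proxy has encoded more than once.

```python
from urllib.parse import unquote


def decode_url_parameter(param: str, max_rounds: int = 10) -> str:
    """Percent-decode repeatedly until the value stops changing."""
    for _ in range(max_rounds):
        decoded = unquote(param)
        if decoded == param:
            break  # nothing left to decode
        param = decoded
    return param
```

One trade-off of this approach is that a value which legitimately contains a percent-encoded sequence after one round of decoding gets decoded further, so it suits parameters such as numeric stage ids better than arbitrary user text.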

| 48a2110 | Josh Rosen | 16 December 2022, 10:16:25 UTC | [SPARK-41541][SQL] Fix call to wrong child method in SQLShuffleWriteMetricsReporter.decRecordsWritten() ### What changes were proposed in this pull request? This PR fixes a bug in `SQLShuffleWriteMetricsReporter.decRecordsWritten()`: this method is supposed to call the delegate `metricsReporter`'s `decRecordsWritten` method but due to a typo it calls the `decBytesWritten` method instead. ### Why are the changes needed? One of the situations where `decRecordsWritten(v)` is called while reverting shuffle writes from failed/canceled tasks. Due to the mixup in these calls, the _recordsWritten_ metric ends up being _v_ records too high (since it wasn't decremented) and the _bytesWritten_ metric ends up _v_ records too low, causing some failed tasks' write metrics to look like > {"Shuffle Bytes Written":-2109,"Shuffle Write Time":2923270,"Shuffle Records Written":2109} instead of > {"Shuffle Bytes Written":0,"Shuffle Write Time":2923270,"Shuffle Records Written":0} ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing tests / manual code review only. The existing SQLMetricsSuite contains end-to-end tests which exercise this class but they don't exercise the decrement path because they don't exercise the shuffle write failure paths. In theory I could add new unit tests but I don't think the ROI is worth it given that this class is intended to be a simple wrapper and it ~never changes (this PR is the first change to the file in 5 years). Closes #39086 from JoshRosen/SPARK-41541. Authored-by: Josh Rosen <joshrosen@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit ed27121607cf526e69420a1faff01383759c9134) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 16 December 2022, 10:16:37 UTC |
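
The effect of the mixed-up delegate call can be reproduced with a small Python model. The classes below are hypothetical stand-ins for the delegate and the wrapper, and the `buggy` flag exists only to show both behaviours side by side. The byte count of 50000 in the usage note is made up, while 2109 records is taken from the metrics quoted in the commit message.

```python
class WriteMetrics:
    """Hypothetical delegate holding the underlying shuffle write counters."""

    def __init__(self) -> None:
        self.bytes_written = 0
        self.records_written = 0


class MetricsReporter:
    """Hypothetical wrapper that forwards updates to a WriteMetrics delegate."""

    def __init__(self, delegate: WriteMetrics, buggy: bool) -> None:
        self.delegate = delegate
        self.buggy = buggy

    def inc_bytes_written(self, v: int) -> None:
        self.delegate.bytes_written += v

    def inc_records_written(self, v: int) -> None:
        self.delegate.records_written += v

    def dec_bytes_written(self, v: int) -> None:
        self.delegate.bytes_written -= v

    def dec_records_written(self, v: int) -> None:
        if self.buggy:
            # The typo: the record count is subtracted from the byte counter.
            self.delegate.bytes_written -= v
        else:
            self.delegate.records_written -= v


def revert_failed_write(buggy: bool, nbytes: int, nrecords: int) -> WriteMetrics:
    """Record a write, then revert it the way a failed task would."""
    metrics = WriteMetrics()
    reporter = MetricsReporter(metrics, buggy)
    reporter.inc_bytes_written(nbytes)
    reporter.inc_records_written(nrecords)
    reporter.dec_bytes_written(nbytes)
    reporter.dec_records_written(nrecords)
    return metrics
```

Reverting a write of 50000 bytes and 2109 records with `buggy=True` leaves `bytes_written` at -2109 and `records_written` at 2109, the same shape as the metrics quoted in the commit message. With `buggy=False` both counters return to 0.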

| b23198e | Gengliang Wang | 16 December 2022, 07:43:17 UTC | [SPARK-41538][SQL] Metadata column should be appended at the end of project list ### What changes were proposed in this pull request? For the following query: ``` CREATE TABLE table_1 ( a ARRAY<STRING>, s STRUCT<id: STRING>) USING parquet; CREATE VIEW view_1 (id) AS WITH source AS ( SELECT * FROM table_1 ), renamed AS ( SELECT s.id FROM source ) SELECT id FROM renamed; with foo AS ( SELECT 'a' as id ), bar AS ( SELECT 'a' as id ) SELECT 1 FROM foo FULL OUTER JOIN bar USING(id) FULL OUTER JOIN view_1 USING(id) WHERE foo.id IS NOT NULL ``` There will be the following error: ``` class org.apache.spark.sql.types.ArrayType cannot be cast to class org.apache.spark.sql.types.StructType (org.apache.spark.sql.types.ArrayType and org.apache.spark.sql.types.StructType are in unnamed module of loader 'app') java.lang.ClassCastException: class org.apache.spark.sql.types.ArrayType cannot be cast to class org.apache.spark.sql.types.StructType (org.apache.spark.sql.types.ArrayType and org.apache.spark.sql.types.StructType are in unnamed module of loader 'app') at org.apache.spark.sql.catalyst.expressions.GetStructField.childSchema$lzycompute(complexTypeExtractors.scala:108) at org.apache.spark.sql.catalyst.expressions.GetStructField.childSchema(complexTypeExtractors.scala:108) ``` This is caused by the inconsistent metadata column positions in the following two nodes: * Table relation: at the ending position * Project list: at the beginning position <img width="1442" alt="image" src="https://user-images.githubusercontent.com/1097932/207992343-438714bc-e1d1-46f7-9a79-84ab83dd299f.png"> When the InlineCTE rule executes, the metadata column in the project is wrongly combined with the table output. <img width="1438" alt="image" src="https://user-images.githubusercontent.com/1097932/207992431-f4cfc774-4cab-4728-b109-2ebff94e5fe2.png"> Thus the column `a ARRAY<STRING>` is casted as `s STRUCT<id: STRING>` and cause the error. 
This PR is to fix the issue by putting the Metadata column at the end of project list, so that it is consistent with the table relation. ### Why are the changes needed? Bug fix ### Does this PR introduce _any_ user-facing change? Yes, it fixes a bug in the analysis rule `AddMetadataColumns` ### How was this patch tested? New test case Closes #39081 from gengliangwang/fixMetadata. Authored-by: Gengliang Wang <gengliang@apache.org> Signed-off-by: Max Gekk <max.gekk@gmail.com> (cherry picked from commit 172f719fffa84a2528628e08627a02cf8d1fe8a8) Signed-off-by: Max Gekk <max.gekk@gmail.com> | 16 December 2022, 07:43:29 UTC |

| 8918cbb | yangjie01 | 15 December 2022, 08:39:44 UTC | [SPARK-41522][BUILD] Pin `versions-maven-plugin` to 2.13.0 to recover `test-dependencies.sh` ### What changes were proposed in this pull request? This pr aims to pin `versions-maven-plugin` to 2.13.0 to recover `test-dependencies.sh` and make GA pass , this pr should revert after we know how to use version 2.14.0. ### Why are the changes needed? `dev/test-dependencies.sh` always use latest `versions-maven-plugin` version, and `versions-maven-plugin` 2.14.0 has not set the version of the sub-module. Run: ``` build/mvn -q versions:set -DnewVersion=spark-928034 -DgenerateBackupPoms=false ``` **2.14.0** ``` + git status On branch test-ci Changes not staged for commit: (use "git add <file>..." to update what will be committed) (use "git restore <file>..." to discard changes in working directory) modified: assembly/pom.xml modified: core/pom.xml modified: examples/pom.xml modified: graphx/pom.xml modified: hadoop-cloud/pom.xml modified: launcher/pom.xml modified: mllib-local/pom.xml modified: mllib/pom.xml modified: pom.xml modified: repl/pom.xml modified: streaming/pom.xml modified: tools/pom.xml ``` **2.13.0** ``` + git status On branch test-ci Changes not staged for commit: (use "git add <file>..." to update what will be committed) (use "git restore <file>..." 
to discard changes in working directory) modified: assembly/pom.xml modified: common/kvstore/pom.xml modified: common/network-common/pom.xml modified: common/network-shuffle/pom.xml modified: common/network-yarn/pom.xml modified: common/sketch/pom.xml modified: common/tags/pom.xml modified: common/unsafe/pom.xml modified: connector/avro/pom.xml modified: connector/connect/common/pom.xml modified: connector/connect/server/pom.xml modified: connector/docker-integration-tests/pom.xml modified: connector/kafka-0-10-assembly/pom.xml modified: connector/kafka-0-10-sql/pom.xml modified: connector/kafka-0-10-token-provider/pom.xml modified: connector/kafka-0-10/pom.xml modified: connector/kinesis-asl-assembly/pom.xml modified: connector/kinesis-asl/pom.xml modified: connector/protobuf/pom.xml modified: connector/spark-ganglia-lgpl/pom.xml modified: core/pom.xml modified: dev/test-dependencies.sh modified: examples/pom.xml modified: graphx/pom.xml modified: hadoop-cloud/pom.xml modified: launcher/pom.xml modified: mllib-local/pom.xml modified: mllib/pom.xml modified: pom.xml modified: repl/pom.xml modified: resource-managers/kubernetes/core/pom.xml modified: resource-managers/kubernetes/integration-tests/pom.xml modified: resource-managers/mesos/pom.xml modified: resource-managers/yarn/pom.xml modified: sql/catalyst/pom.xml modified: sql/core/pom.xml modified: sql/hive-thriftserver/pom.xml modified: sql/hive/pom.xml modified: streaming/pom.xml modified: tools/pom.xml ``` Therefore, the following compilation error will occur when using 2.14.0. ``` 2022-12-15T02:37:35.5536924Z [ERROR] [ERROR] Some problems were encountered while processing the POMs: 2022-12-15T02:37:35.5538469Z [FATAL] Non-resolvable parent POM for org.apache.spark:spark-sketch_2.12:3.4.0-SNAPSHOT: Could not find artifact org.apache.spark:spark-parent_2.12:pom:3.4.0-SNAPSHOT and 'parent.relativePath' points at wrong local POM line 22, column 11 ``` ### Does this PR introduce _any_ user-facing change? 
No ### How was this patch tested? Pass GitHub Actions Closes #39067 from LuciferYang/test-ci. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit bfe1af9a720ed235937a0fdf665376ffff7cce54) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 15 December 2022, 08:40:14 UTC |

| 9f7baae | Hyukjin Kwon | 13 December 2022, 14:34:09 UTC | [SPARK-41360][CORE][BUILD][FOLLOW-UP] Exclude BlockManagerMessages.RegisterBlockManager in MiMa This PR is a followup of https://github.com/apache/spark/pull/38876 that excludes BlockManagerMessages.RegisterBlockManager in MiMa compatibility check. It fails in MiMa check presumably with Scala 2.13 in other branches. Should be safer to exclude them all in the affected branches. No, dev-only. Filters copied from error messages. Will monitor the build in other branches. Closes #39052 from HyukjinKwon/SPARK-41360-followup. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit a2ceff29f9d1c0133fa0c8274fa84c43106e90f0) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 13 December 2022, 14:36:23 UTC |

| b9a1b71 | Yi Wu | 12 December 2022, 19:29:37 UTC | [SPARK-41360][CORE] Avoid BlockManager re-registration if the executor has been lost ### What changes were proposed in this pull request? This PR mainly proposes to reject the block manager re-registration if the executor has already been considered lost/dead by the scheduler backend. Along with the main proposal, this PR also includes a few other changes: * Only post `SparkListenerBlockManagerAdded` event when the registration succeeds * Return an "invalid" executor id when the re-registration fails * Do not report all blocks when the re-registration fails ### Why are the changes needed? BlockManager re-registration from a lost executor (terminated/terminating executor or orphan executor) has led to some known issues, e.g., a false-active executor shows up in the UI (SPARK-35011), [block fetching to the dead executor](https://github.com/apache/spark/pull/32114#issuecomment-899979045). And since there's no re-registration from the lost executor itself, it's meaningless to have BlockManager re-registration when the executor is already lost. Regarding the corner case where the re-registration event comes before the lost executor is actually removed from the scheduler backend, I think it is not possible, because re-registration will only be required when the BlockManager doesn't see the block manager in `blockManagerInfo`, and the block manager will only be removed from `blockManagerInfo` either when the executor is already known to be lost or when it is removed by the driver proactively. So the executor should always be removed from the scheduler backend before the re-registration event comes. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Unit test Closes #38876 from Ngone51/fix-blockmanager-reregister. 
Authored-by: Yi Wu <yi.wu@databricks.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> (cherry picked from commit c3f46d5c6d69a9b21473dae6d86dee53833dfd52) Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> | 12 December 2022, 19:29:46 UTC |

| 70f3d2f | Hui An | 12 December 2022, 10:17:49 UTC | [SPARK-41448] Make consistent MR job IDs in FileBatchWriter and FileFormatWriter ### What changes were proposed in this pull request? Make consistent MR job IDs in FileBatchWriter and FileFormatWriter ### Why are the changes needed? [SPARK-26873](https://issues.apache.org/jira/browse/SPARK-26873) fixed the consistency issue for FileFormatWriter, but [SPARK-33402](https://issues.apache.org/jira/browse/SPARK-33402) broke this requirement by introducing a random long. We need to address this because correctness expects identical task IDs across attempts. FileBatchWriter doesn't follow this requirement either and needs to be fixed as well. ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? Closes #38980 from boneanxs/SPARK-41448. Authored-by: Hui An <hui.an@shopee.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 7801666f3b5ea3bfa0f95571c1d68147ce5240ec) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 12 December 2022, 10:18:16 UTC |

| 231c63a | Peter Toth | 12 December 2022, 10:14:24 UTC | [SPARK-41468][SQL] Fix PlanExpression handling in EquivalentExpressions ### What changes were proposed in this pull request? https://github.com/apache/spark/pull/36012 already added a check to avoid adding expressions containing `PlanExpression`s to `EquivalentExpressions` as those expressions might cause NPE on executors. But, for some reason, the check is still missing from `getExprState()` where we check the presence of an expression in the equivalence map. This PR: - adds the check to `getExprState()` - moves the check from `updateExprTree()` to `addExprTree()` so as to run it only once. ### Why are the changes needed? To avoid exceptions like: ``` org.apache.spark.SparkException: Task failed while writing rows. at org.apache.spark.sql.errors.QueryExecutionErrors$.taskFailedWhileWritingRowsError(QueryExecutionErrors.scala:642) at org.apache.spark.sql.execution.datasources.FileFormatWriter$.executeTask(FileFormatWriter.scala:348) at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$write$21(FileFormatWriter.scala:256) at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90) at org.apache.spark.scheduler.Task.run(Task.scala:136) at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:548) at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1504) at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:551) at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) at java.base/java.lang.Thread.run(Thread.java:834) Caused by: java.lang.NullPointerException at org.apache.spark.sql.execution.columnar.InMemoryTableScanExec.$anonfun$doCanonicalize$1(InMemoryTableScanExec.scala:51) at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:286) at 
scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62) at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55) at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49) at scala.collection.TraversableLike.map(TraversableLike.scala:286) at scala.collection.TraversableLike.map$(TraversableLike.scala:279) at scala.collection.AbstractTraversable.map(Traversable.scala:108) at org.apache.spark.sql.execution.columnar.InMemoryTableScanExec.doCanonicalize(InMemoryTableScanExec.scala:51) at org.apache.spark.sql.execution.columnar.InMemoryTableScanExec.doCanonicalize(InMemoryTableScanExec.scala:30) ... at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized(QueryPlan.scala:541) at org.apache.spark.sql.execution.SubqueryExec.doCanonicalize(basicPhysicalOperators.scala:850) at org.apache.spark.sql.execution.SubqueryExec.doCanonicalize(basicPhysicalOperators.scala:814) at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized$lzycompute(QueryPlan.scala:542) at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized(QueryPlan.scala:541) at org.apache.spark.sql.execution.ScalarSubquery.preCanonicalized$lzycompute(subquery.scala:72) at org.apache.spark.sql.execution.ScalarSubquery.preCanonicalized(subquery.scala:71) ... 
at org.apache.spark.sql.catalyst.expressions.Expression.canonicalized(Expression.scala:261) at org.apache.spark.sql.catalyst.expressions.Expression.semanticHash(Expression.scala:278) at org.apache.spark.sql.catalyst.expressions.ExpressionEquals.hashCode(EquivalentExpressions.scala:226) at scala.runtime.Statics.anyHash(Statics.java:122) at scala.collection.mutable.HashTable$HashUtils.elemHashCode(HashTable.scala:416) at scala.collection.mutable.HashTable$HashUtils.elemHashCode$(HashTable.scala:416) at scala.collection.mutable.HashMap.elemHashCode(HashMap.scala:44) at scala.collection.mutable.HashTable.findEntry(HashTable.scala:136) at scala.collection.mutable.HashTable.findEntry$(HashTable.scala:135) at scala.collection.mutable.HashMap.findEntry(HashMap.scala:44) at scala.collection.mutable.HashMap.get(HashMap.scala:74) at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.getExprState(EquivalentExpressions.scala:180) at org.apache.spark.sql.catalyst.expressions.SubExprEvaluationRuntime.replaceWithProxy(SubExprEvaluationRuntime.scala:78) at org.apache.spark.sql.catalyst.expressions.SubExprEvaluationRuntime.$anonfun$proxyExpressions$3(SubExprEvaluationRuntime.scala:109) at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:286) at scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:36) at scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:33) at scala.collection.mutable.WrappedArray.foreach(WrappedArray.scala:38) at scala.collection.TraversableLike.map(TraversableLike.scala:286) at scala.collection.TraversableLike.map$(TraversableLike.scala:279) at scala.collection.AbstractTraversable.map(Traversable.scala:108) at org.apache.spark.sql.catalyst.expressions.SubExprEvaluationRuntime.proxyExpressions(SubExprEvaluationRuntime.scala:109) at org.apache.spark.sql.catalyst.expressions.InterpretedUnsafeProjection.<init>(InterpretedUnsafeProjection.scala:40) at 
org.apache.spark.sql.catalyst.expressions.InterpretedUnsafeProjection$.createProjection(InterpretedUnsafeProjection.scala:112) at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.createInterpretedObject(Projection.scala:127) at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.createInterpretedObject(Projection.scala:119) at org.apache.spark.sql.catalyst.expressions.CodeGeneratorWithInterpretedFallback.createObject(CodeGeneratorWithInterpretedFallback.scala:56) at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.create(Projection.scala:150) at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.create(Projection.scala:160) at org.apache.spark.sql.execution.ProjectExec.$anonfun$doExecute$1(basicPhysicalOperators.scala:95) at org.apache.spark.sql.execution.ProjectExec.$anonfun$doExecute$1$adapted(basicPhysicalOperators.scala:94) at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsWithIndexInternal$2(RDD.scala:877) at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsWithIndexInternal$2$adapted(RDD.scala:877) at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52) at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:365) at org.apache.spark.rdd.RDD.iterator(RDD.scala:329) at org.apache.spark.rdd.UnionRDD.compute(UnionRDD.scala:106) at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:365) at org.apache.spark.rdd.RDD.iterator(RDD.scala:329) at org.apache.spark.rdd.CoalescedRDD.$anonfun$compute$1(CoalescedRDD.scala:99) at scala.collection.Iterator$$anon$11.nextCur(Iterator.scala:486) at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:492) at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:460) at org.apache.spark.sql.execution.datasources.FileFormatDataWriter.writeWithIterator(FileFormatDataWriter.scala:91) at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$executeTask$1(FileFormatWriter.scala:331) at 
org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1538) at org.apache.spark.sql.execution.datasources.FileFormatWriter$.executeTask(FileFormatWriter.scala:338) ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing UTs. Closes #39010 from peter-toth/SPARK-41468-fix-planexpressions-in-equivalentexpressions. Authored-by: Peter Toth <peter.toth@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 1b2d7001f2924738b61609a5399ebc152969b5c8) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 12 December 2022, 10:14:51 UTC |
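
The fix described in SPARK-41468 applies the same guard on both the write path and the lookup path of the equivalence map. The following is a rough sketch of that idea only, not Catalyst code: `Expr`, `EquivalenceMap`, `semantic_key`, and the `is_plan_expression` flag are hypothetical stand-ins, and the `RuntimeError` merely simulates the failure that hashing a plan expression can trigger on executors.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Expr:
    name: str
    children: Tuple["Expr", ...] = ()
    is_plan_expression: bool = False  # hypothetical stand-in for a PlanExpression

    def semantic_key(self) -> str:
        # Stand-in for semantic hashing; simulates the failure seen on executors.
        if self.is_plan_expression:
            raise RuntimeError("cannot canonicalize a plan expression here")
        return self.name + "(" + ",".join(c.semantic_key() for c in self.children) + ")"


def contains_plan_expression(e: Expr) -> bool:
    return e.is_plan_expression or any(contains_plan_expression(c) for c in e.children)


class EquivalenceMap:
    def __init__(self) -> None:
        self._counts: Dict[str, int] = {}

    def add_expr_tree(self, e: Expr) -> None:
        # The check runs once at the top of the tree, not on every recursive update.
        if contains_plan_expression(e):
            return
        self._update(e)

    def _update(self, e: Expr) -> None:
        key = e.semantic_key()
        self._counts[key] = self._counts.get(key, 0) + 1
        for child in e.children:
            self._update(child)

    def get_expr_state(self, e: Expr) -> Optional[int]:
        # The same guard on lookup avoids hashing an expression that was never added.
        if contains_plan_expression(e):
            return None
        return self._counts.get(e.semantic_key())
```

Without the guard in `get_expr_state`, the lookup in this sketch would call `semantic_key` on the plan expression and raise, which is analogous to the `hashCode` frame in the stack trace above.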

| 1c6cb35 | yuanyimeng | 12 December 2022, 04:49:07 UTC | [SPARK-41187][CORE] LiveExecutor MemoryLeak in AppStatusListener when ExecutorLost happen ### What changes were proposed in this pull request? Ignore the `SparkListenerTaskEnd` with reason `Resubmitted` in AppStatusListener to avoid a memory leak. ### Why are the changes needed? For a long-running Spark thriftserver, LiveExecutor instances accumulate in the deadExecutors HashMap and slow down event queue processing. For every task, a `SparkListenerTaskStart` event and a `SparkListenerTaskEnd` event are normally sent out as a pair. But when an executor is lost, events are sent in the following steps. a) A pair of task start and task end events is fired for the task (let us call it Tr). b) When the executor which ran Tr is lost while the stage is still running, a task end event with reason `Resubmitted` is fired for Tr. c) Subsequently, a new task start and task end are fired for the retry of Tr. The processing of the `Resubmitted` task end event in AppStatusListener can lead to a negative `LiveStage.activeTasks`, since there is no corresponding `SparkListenerTaskStart` event for it. The negative activeTasks makes the stage remain in the live stage list forever, as it can never meet the condition activeTasks == 0. This in turn causes the dead executor to never be cleaned up if that live stage's submissionTime is less than the dead executor's removeTime (see isExecutorActiveForLiveStages). Since this kind of `SparkListenerTaskEnd` is useless here, we simply ignore it. Check [SPARK-41187](https://issues.apache.org/jira/browse/SPARK-41187) for evidence. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? New UT added. Tested in a thriftserver env. ### The way to reproduce I tried to reproduce it in spark-shell, but it requires a few manual steps. 1. 
Start spark-shell, setting spark.dynamicAllocation.maxExecutors=2 for convenience: `bin/spark-shell --driver-java-options "-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=8006"` 2. Run a job with a shuffle: `sc.parallelize(1 to 1000, 10).map { x => Thread.sleep(1000) ; (x % 3, x) }.reduceByKey((a, b) => a + b).collect()` 3. After some ShuffleMapTasks have finished, kill one or two executors so that tasks are resubmitted. 4. Check with a heap dump, a debugger, or the logs. Closes #38702 from wineternity/SPARK-41187. Authored-by: yuanyimeng <yuanyimeng@youzan.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> (cherry picked from commit 7e7bc940dcbbf918c7d571e1d27c7654ad387817) Signed-off-by: Mridul Muralidharan <mridulatgmail.com> | 12 December 2022, 04:49:48 UTC |
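
The event sequence a) to c) in SPARK-41187 can be replayed against a simple counter to see why `activeTasks` goes negative. This is a minimal sketch under stated assumptions, not the AppStatusListener code: `LiveStage` here is a toy dataclass, and `replay` and its `ignore_resubmitted` flag are hypothetical names used to compare the behavior before and after the fix.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LiveStage:
    active_tasks: int = 0  # toy counterpart of LiveStage.activeTasks


def replay(events: List[Tuple[str, str]], ignore_resubmitted: bool) -> int:
    """Apply (kind, reason) events to a fresh stage and return the final counter."""
    stage = LiveStage()
    for kind, reason in events:
        if kind == "start":
            stage.active_tasks += 1
        elif kind == "end":
            # The fix: a Resubmitted end has no matching start, so skip it.
            if ignore_resubmitted and reason == "Resubmitted":
                continue
            stage.active_tasks -= 1
    return stage.active_tasks


# Steps a), b), c) from the commit message for a single task Tr.
EVENTS = [
    ("start", ""),            # a) Tr starts
    ("end", "Success"),       # a) Tr ends
    ("end", "Resubmitted"),   # b) executor lost, extra end event for Tr
    ("start", ""),            # c) retry of Tr starts
    ("end", "Success"),       # c) retry of Tr ends
]
```

With the extra end event counted, the final value is below zero and the condition `activeTasks == 0` is never met; with the event ignored, the counter returns to zero.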

| 0df0fd8 | Dongjoon Hyun | 10 December 2022, 08:09:12 UTC | [SPARK-41476][INFRA] Prevent `README.md` from triggering CIs ### What changes were proposed in this pull request? This PR prevents `README.md`-only changes from triggering CIs. ### Why are the changes needed? While CIs go slower and slower, we are also getting more and more `README.md` files. ``` $ find . -name 'README.md' ./resource-managers/kubernetes/integration-tests/README.md ./core/src/main/resources/error/README.md ./core/src/main/scala/org/apache/spark/deploy/security/README.md ./hadoop-cloud/README.md ./python/README.md ./R/pkg/README.md ./docs/README.md ./README.md ./common/network-common/src/main/java/org/apache/spark/network/crypto/README.md ./common/tags/README.md ./connector/docker/README.md ./connector/docker/spark-test/README.md ./connector/connect/README.md ./connector/protobuf/README.md ./dev/README.md ./dev/ansible-for-test-node/roles/common/README.md ./dev/ansible-for-test-node/roles/jenkins-worker/README.md ./dev/ansible-for-test-node/README.md ./sql/core/src/test/README.md ./sql/core/src/main/scala/org/apache/spark/sql/test/README.md ./sql/core/src/main/scala/org/apache/spark/sql/jdbc/README.md ./sql/README.md ``` We can exclude these files in order to save the community CI resources. https://github.com/apache/spark/blob/435f6b1b3588d8c3c719f0e23b91209dd5f7bdb9/.github/workflows/build_and_test.yml#L86-L89 ### Does this PR introduce _any_ user-facing change? No. This is an infra-only change. ### How was this patch tested? Pass the doctest. ``` python -m doctest sparktestsupport/utils.py ``` Closes #39015 from dongjoon-hyun/SPARK-41476. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit c4af4b0cca4503e2fa00cac11b2d59c7dacd40df) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 10 December 2022, 08:09:20 UTC |
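
As an illustration of the idea in SPARK-41476, a change set can be classified as `README.md`-only by checking the base name of every changed path. This is a hedged sketch, not the code in `sparktestsupport/utils.py`: the function name `is_readme_only` and its treatment of an empty change list are assumptions.

```python
import posixpath
from typing import Iterable


def is_readme_only(changed_files: Iterable[str]) -> bool:
    """Return True when every changed path is a README.md file (hypothetical helper)."""
    files = list(changed_files)
    # An empty change list is treated as "not README-only" so CI is not skipped by accident.
    return bool(files) and all(posixpath.basename(f) == "README.md" for f in files)
```

A CI entry point could call such a helper on the list of changed paths and skip the build when it returns `True`.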