https://github.com/apache/spark

| Revision | Author | Date | Message | Commit Date |

|---|---|---|---|---|

| 8c2b331 | Yuming Wang | 04 August 2023, 04:33:19 UTC | Preparing Spark release v3.3.3-rc1 | 04 August 2023, 04:33:19 UTC |

| 407bb57 | Wenchen Fan | 04 August 2023, 03:26:36 UTC | [SPARK-44653][SQL] Non-trivial DataFrame unions should not break caching We have a long-standing tricky optimization in `Dataset.union`, which invokes the optimizer rule `CombineUnions` to pre-optimize the analyzed plan. This is to avoid too large analyzed plan for a specific dataframe query pattern `df1.union(df2).union(df3).union...`. This tricky optimization is designed to break dataframe caching, but we thought it was fine as people usually won't cache the intermediate dataframe in a union chain. However, `CombineUnions` gets improved from time to time (e.g. https://github.com/apache/spark/pull/35214) and now it can optimize a wide range of Union patterns. Now it's possible that people union two dataframe, do something with `select`, and cache it. Then the dataframe is unioned again with other dataframes and people expect the df cache to work. However the cache won't work due to the tricky optimization in `Dataset.union`. This PR updates `Dataset.union` to only combine adjacent Unions to match the original purpose. Fix perf regression due to breaking df caching no new test Closes #42315 from cloud-fan/union. Lead-authored-by: Wenchen Fan <wenchen@databricks.com> Co-authored-by: Wenchen Fan <cloud0fan@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit ce1fe57cdd7004a891ef8b97c77ac96b3719efcd) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 04 August 2023, 03:32:30 UTC |

| 9e86aed | Henry Mai | 02 August 2023, 04:07:51 UTC | [SPARK-44588][CORE][3.3] Fix double encryption issue for migrated shuffle blocks ### What changes were proposed in this pull request? Fix double encryption issue for migrated shuffle blocks Shuffle blocks upon migration are sent without decryption when io.encryption is enabled. The code on the receiving side ends up using serializer.wrapStream on the OutputStream to the file which results in the already encrypted bytes being encrypted again when the bytes are written out. This patch removes the usage of serializerManager.wrapStream on the receiving side and also adds tests that validate that this works as expected. I have also validated that the added tests will fail if the fix is not in place. Jira ticket with more details: https://issues.apache.org/jira/browse/SPARK-44588 ### Why are the changes needed? Migrated shuffle blocks will be double encrypted when `spark.io.encryption = true` without this fix. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Unit tests were added to test shuffle block migration with spark.io.encryption enabled and also fixes a test helper method to properly construct the SerializerManager with the encryption key. Closes #42277 from henrymai/branch-3.3_backport_double_encryption. Authored-by: Henry Mai <henrymai@users.noreply.github.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 02 August 2023, 04:07:51 UTC |

| 073d0b6 | Bruce Robbins | 11 July 2023, 03:18:44 UTC | [SPARK-44251][SQL] Set nullable correctly on coalesced join key in full outer USING join ### What changes were proposed in this pull request? For full outer joins employing USING, set the nullability of the coalesced join columns to true. ### Why are the changes needed? The following query produces incorrect results: ``` create or replace temp view v1 as values (1, 2), (null, 7) as (c1, c2); create or replace temp view v2 as values (2, 3) as (c1, c2); select explode(array(c1)) as x from v1 full outer join v2 using (c1); -1 <== should be null 1 2 ``` The following query fails with a `NullPointerException`: ``` create or replace temp view v1 as values ('1', 2), (null, 7) as (c1, c2); create or replace temp view v2 as values ('2', 3) as (c1, c2); select explode(array(c1)) as x from v1 full outer join v2 using (c1); 23/06/25 17:06:39 ERROR Executor: Exception in task 0.0 in stage 14.0 (TID 11) java.lang.NullPointerException at org.apache.spark.sql.catalyst.expressions.codegen.UnsafeWriter.write(UnsafeWriter.java:110) at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage3.generate_doConsume_0$(Unknown Source) at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage3.smj_consumeFullOuterJoinRow_0$(Unknown Source) at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage3.wholestagecodegen_findNextJoinRows_0$(Unknown Source) at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage3.processNext(Unknown Source) at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43) at org.apache.spark.sql.execution.WholeStageCodegenEvaluatorFactory$WholeStageCodegenPartitionEvaluator$$anon$1.hasNext(WholeStageCodegenEvaluatorFactory.scala:43) ... ``` The above full outer joins implicitly add an aliased coalesce to the parent projection of the join: `coalesce(v1.c1, v2.c1) as c1`. In the case where only one side's key is nullable, the coalesce's nullability is false. As a result, the generator's output has nullable set as false. But this is incorrect: If one side has a row with explicit null key values, the other side's row will also have null key values (because the other side's row will be "made up"), and both the `coalesce` and the `explode` will return a null value. While `UpdateNullability` actually repairs the nullability of the `coalesce` before execution, it doesn't recreate the generator output, so the nullability remains incorrect in `Generate#output`. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? New unit test. Closes #41809 from bersprockets/using_oddity2. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 7a27bc68c849041837e521285e33227c3d1f9853) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 11 July 2023, 03:19:49 UTC |

| edc13fd | Chandni Singh | 06 July 2023, 01:48:05 UTC | [SPARK-44215][3.3][SHUFFLE] If num chunks are 0, then server should throw a RuntimeException ### What changes were proposed in this pull request? The executor expects `numChunks` to be > 0. If it is zero, then we see that the executor fails with ``` 23/06/20 19:07:37 ERROR task 2031.0 in stage 47.0 (TID 25018) Executor: Exception in task 2031.0 in stage 47.0 (TID 25018) java.lang.ArithmeticException: / by zero at org.apache.spark.storage.PushBasedFetchHelper.createChunkBlockInfosFromMetaResponse(PushBasedFetchHelper.scala:128) at org.apache.spark.storage.ShuffleBlockFetcherIterator.next(ShuffleBlockFetcherIterator.scala:1047) at org.apache.spark.storage.ShuffleBlockFetcherIterator.next(ShuffleBlockFetcherIterator.scala:90) at org.apache.spark.util.CompletionIterator.next(CompletionIterator.scala:29) at scala.collection.Iterator$$anon$11.nextCur(Iterator.scala:484) at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:490) at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458) at org.apache.spark.util.CompletionIterator.hasNext(CompletionIterator.scala:31) at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:37) at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458) ``` Because this is an `ArithmeticException`, the executor doesn't fallback. It's not a `FetchFailure` either, so the stage is not retried and the application fails. ### Why are the changes needed? The executor should fallback to fetch original blocks and not fail because this suggests that there is an issue with push-merged block. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Modified the existing UTs to validate that RuntimeException is thrown when numChunks are 0. Closes #41859 from otterc/SPARK-44215-branch-3.3. Authored-by: Chandni Singh <singh.chandni@gmail.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> | 06 July 2023, 01:48:05 UTC |

| e9b525e | meifencheng | 01 July 2023, 03:50:14 UTC | [SPARK-42784] should still create subDir when the number of subDir in merge dir is less than conf ### What changes were proposed in this pull request? Fixed a minor issue with diskBlockManager after push-based shuffle is enabled ### Why are the changes needed? this bug will affect the efficiency of push based shuffle ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Unit test Closes #40412 from Stove-hust/feature-42784. Authored-by: meifencheng <meifencheng@meituan.com> Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com> (cherry picked from commit 35d51571a803b8fa7d14542236276425b517d3af) Signed-off-by: Mridul Muralidharan <mridulatgmail.com> | 01 July 2023, 03:51:05 UTC |

| 84620f2 | Kent Yao | 30 June 2023, 10:33:16 UTC | [SPARK-44241][CORE] Mistakenly set io.connectionTimeout/connectionCreationTimeout to zero or negative will cause incessant executor cons/destructions ### What changes were proposed in this pull request? This PR makes zero when io.connectionTimeout/connectionCreationTimeout is negative. Zero here means - connectionCreationTimeout = 0,an unlimited CONNNETION_TIMEOUT for connection establishment - connectionTimeout=0, `IdleStateHandler` for triggering `IdleStateEvent` is disabled. ### Why are the changes needed? 1. This PR fixes a bug when connectionCreationTimeout is 0, which means unlimited to netty, but ChannelFuture.await(0) fails directly and inappropriately. 2. This PR fixes a bug when connectionCreationTimeout is less than 0, which causes meaningless transport client reconnections and endless executor reconstructions ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? new unit tests Closes #41785 from yaooqinn/SPARK-44241. Authored-by: Kent Yao <yao@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit 38645fa470b5af7c2e41efa4fb092bdf2463fbbd) Signed-off-by: Kent Yao <yao@apache.org> | 30 June 2023, 10:33:54 UTC |

| b7d8ddb | Dongjoon Hyun | 26 June 2023, 01:53:25 UTC | [SPARK-44184][PYTHON][DOCS] Remove a wrong doc about `ARROW_PRE_0_15_IPC_FORMAT` ### What changes were proposed in this pull request? This PR aims to remove a wrong documentation about `ARROW_PRE_0_15_IPC_FORMAT`. ### Why are the changes needed? Since Apache Spark 3.0.0, Spark doesn't allow `ARROW_PRE_0_15_IPC_FORMAT` environment variable at all. https://github.com/apache/spark/blob/2407183cb8637b6ac2d1b76320cae9cbde3411da/python/pyspark/sql/pandas/utils.py#L69-L73 ### Does this PR introduce _any_ user-facing change? No. This is a removal of outdated wrong documentation. ### How was this patch tested? Manual review. Closes #41730 from dongjoon-hyun/SPARK-44184. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 00e7c08606d0b6de22604d2a7350ea0711355300) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 26 June 2023, 01:53:43 UTC |

| d52aa4f | Dongjoon Hyun | 23 June 2023, 20:49:24 UTC | [SPARK-44158][K8S] Remove unused `spark.kubernetes.executor.lostCheckmaxAttempts` ### What changes were proposed in this pull request? This PR aims to remove `spark.kubernetes.executor.lostCheckmaxAttempts` because it was not used after SPARK-24248 (Apache Spark 2.4.0) ### Why are the changes needed? To clean up this from documentation and code. ### Does this PR introduce _any_ user-facing change? No because it was no-op already. ### How was this patch tested? Pass the CIs. Closes #41713 from dongjoon-hyun/SPARK-44158. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 6590e7db5212bb0dc90f22133a96e3d5e385af65) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 June 2023, 20:49:45 UTC |

| a7bbaca | Thomas Graves | 23 June 2023, 00:40:47 UTC | [SPARK-44134][CORE] Fix setting resources (GPU/FPGA) to 0 when they are set in spark-defaults.conf ### What changes were proposed in this pull request? https://issues.apache.org/jira/browse/SPARK-44134 With resource aware scheduling, if you specify a default value in the spark-defaults.conf, a user can't override that to set it to 0. Meaning spark-defaults.conf has something like: spark.executor.resource.{resourceName}.amount=1 spark.task.resource.{resourceName}.amount =1 If the user tries to override when submitting an application with spark.executor.resource.{resourceName}.amount=0 and spark.task.resource.{resourceName}.amount =0, the applicatoin fails to submit. it should submit and just not try to allocate those resources. This worked back in Spark 3.0 but was broken when the stage level scheduling feature was added. Here I fixed it by simply removing any task resources from the list if they are set to 0. Note I also fixed a typo in the exception message when no executor resources are specified but task resources are. Note, ideally this is backported to all of the maintenance releases ### Why are the changes needed? Fix a bug described above ### Does this PR introduce _any_ user-facing change? no api changes ### How was this patch tested? Added unit test and then ran manually on standalone and YARN clusters to verify overriding the configs now works. Closes #41703 from tgravescs/fixResource0. Authored-by: Thomas Graves <tgraves@nvidia.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit cf6e90c205bd76ff9e1fc2d88757d9d44ec93162) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 June 2023, 00:41:06 UTC |

| 6868d6c | Ted | 23 June 2023, 00:09:13 UTC | [SPARK-44142][PYTHON] Replace type with tpe in utility to convert python types to spark types ### What changes were proposed in this pull request? In the typehints utility to convert python types to spark types, use the variable `tpe` for comparison to the string representation of categorical types rather than `type`. ### Why are the changes needed? Currently, the Python keyword `type` is used in the comparison, which will always be false. The user's type is stored in variable `tpe`. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing `test_categorical.py` tests Closes #41697 from ted-jenks/patch-3. Authored-by: Ted <77486246+ted-jenks@users.noreply.github.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 5feab1072ba057da3811069c3eb8efec0de1044c) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 23 June 2023, 00:09:33 UTC |

| b969906 | Yuming Wang | 16 June 2023, 03:18:38 UTC | [SPARK-44040][SQL] Fix compute stats when AggregateExec node above QueryStageExec ### What changes were proposed in this pull request? This PR fixes compute stats when `BaseAggregateExec` nodes above `QueryStageExec`. For aggregation, when the number of shuffle output rows is 0, the final result may be 1. For example: ```sql SELECT count(*) FROM tbl WHERE false; ``` The number of shuffle output rows is 0, and the final result is 1. Please see the [UI](https://github.com/apache/spark/assets/5399861/9d9ad999-b3a9-433e-9caf-c0b931423891). ### Why are the changes needed? Fix data issue. `OptimizeOneRowPlan` will use stats to remove `Aggregate`: ``` === Applying Rule org.apache.spark.sql.catalyst.optimizer.OptimizeOneRowPlan === !Aggregate [id#5L], [id#5L] Project [id#5L] +- Union false, false +- Union false, false :- LogicalQueryStage Aggregate [sum(id#0L) AS id#5L], HashAggregate(keys=[], functions=[sum(id#0L)]) :- LogicalQueryStage Aggregate [sum(id#0L) AS id#5L], HashAggregate(keys=[], functions=[sum(id#0L)]) +- LogicalQueryStage Aggregate [sum(id#18L) AS id#12L], HashAggregate(keys=[], functions=[sum(id#18L)]) +- LogicalQueryStage Aggregate [sum(id#18L) AS id#12L], HashAggregate(keys=[], functions=[sum(id#18L)]) ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Unit test. Closes #41576 from wangyum/SPARK-44040. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 55ba63c257b6617ec3d2aca5bc1d0989d4f29de8) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 16 June 2023, 04:06:37 UTC |

| 3586ca3 | wangjunbo | 13 June 2023, 03:43:54 UTC | [SPARK-32559][SQL] Fix the trim logic did't handle ASCII control characters correctly ### What changes were proposed in this pull request? The trim logic in Cast expression introduced in https://github.com/apache/spark/pull/29375 trim ASCII control characters unexpectly. Before this patch  And hive  ### Why are the changes needed? The behavior described above doesn't consistent with the behavior of Hive ### Does this PR introduce _any_ user-facing change? Yes ### How was this patch tested? add ut Closes #41535 from Kwafoor/trim_bugfix. Lead-authored-by: wangjunbo <wangjunbo@qiyi.com> Co-authored-by: Junbo wang <1042815068@qq.com> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit 80588e4ddfac1d2e2fdf4f9a7783c56be6a97cdd) Signed-off-by: Kent Yao <yao@apache.org> | 13 June 2023, 03:44:40 UTC |

| 8840614 | Warren Zhu | 12 June 2023, 07:40:13 UTC | [SPARK-43398][CORE] Executor timeout should be max of idle shuffle and rdd timeout ### What changes were proposed in this pull request? Executor timeout should be max of idle, shuffle and rdd timeout ### Why are the changes needed? Wrong timeout value when combining idle, shuffle and rdd timeout ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Added test in `ExecutorMonitorSuite` Closes #41082 from warrenzhu25/max-timeout. Authored-by: Warren Zhu <warren.zhu25@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 7107742a381cde2e6de9425e3e436282a8c0d27c) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 12 June 2023, 07:40:57 UTC |

| ababc57 | Dongjoon Hyun | 06 June 2023, 16:34:40 UTC | [SPARK-43976][CORE] Handle the case where modifiedConfigs doesn't exist in event logs ### What changes were proposed in this pull request? This prevents NPE by handling the case where `modifiedConfigs` doesn't exist in event logs. ### Why are the changes needed? Basically, this is the same solution for that case. - https://github.com/apache/spark/pull/34907 The new code was added here, but we missed the corner case. - https://github.com/apache/spark/pull/35972 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. Closes #41472 from dongjoon-hyun/SPARK-43976. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit b4ab34bf9b22d0f0ca4ab13f9b6106f38ccfaebe) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 06 June 2023, 16:35:01 UTC |

| 0c509be | Degant Puri | 06 June 2023, 00:14:36 UTC | [SPARK-41958][CORE][3.3] Disallow arbitrary custom classpath with proxy user in cluster mode Backporting fix for SPARK-41958 to 3.3 branch from #39474 Below description from original PR. -------------------------- ### What changes were proposed in this pull request? This PR proposes to disallow arbitrary custom classpath with proxy user in cluster mode by default. ### Why are the changes needed? To avoid arbitrary classpath in spark cluster. ### Does this PR introduce _any_ user-facing change? Yes. User should reenable this feature by `spark.submit.proxyUser.allowCustomClasspathInClusterMode`. ### How was this patch tested? Manually tested. Closes #39474 from Ngone51/dev. Lead-authored-by: Peter Toth <peter.tothgmail.com> Co-authored-by: Yi Wu <yi.wudatabricks.com> Signed-off-by: Hyukjin Kwon <gurwls223apache.org> (cherry picked from commit 909da96e1471886a01a9e1def93630c4fd40e74a) ### What changes were proposed in this pull request? ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? Closes #41428 from degant/spark-41958-3.3. Lead-authored-by: Degant Puri <depuri@microsoft.com> Co-authored-by: Peter Toth <peter.toth@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 06 June 2023, 00:14:36 UTC |

| 86d22f4 | Jiaan Geng | 04 June 2023, 00:30:16 UTC | [SPARK-43956][SQL][3.3] Fix the bug doesn't display column's sql for Percentile[Cont|Disc] ### What changes were proposed in this pull request? This PR used to backport https://github.com/apache/spark/pull/41436 to 3.3 ### Why are the changes needed? Fix the bug doesn't display column's sql for Percentile[Cont|Disc]. ### Does this PR introduce _any_ user-facing change? 'Yes'. Users could see the correct sql information. ### How was this patch tested? Test cases updated. Closes #41446 from beliefer/SPARK-43956_followup2. Authored-by: Jiaan Geng <beliefer@163.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 04 June 2023, 00:30:16 UTC |

| 11d2f73 | Cheng Pan | 26 May 2023, 03:33:38 UTC | [SPARK-43751][SQL][DOC] Document `unbase64` behavior change ### What changes were proposed in this pull request? After SPARK-37820, `select unbase64("abcs==")`(malformed input) always throws an exception, this PR does not help in that case, it only improves the error message for `to_binary()`. So, `unbase64()`'s behavior for malformed input changed silently after SPARK-37820: - before: return a best-effort result, because it uses [LENIENT](https://github.com/apache/commons-codec/blob/rel/commons-codec-1.15/src/main/java/org/apache/commons/codec/binary/Base64InputStream.java#L46) policy: any trailing bits are composed into 8-bit bytes where possible. The remainder are discarded. - after: throw an exception And there is no way to restore the previous behavior. To tolerate the malformed input, the user should migrate `unbase64(<input>)` to `try_to_binary(<input>, 'base64')` to get NULL instead of interrupting by exception. ### Why are the changes needed? Add the behavior change to migration guide. ### Does this PR introduce _any_ user-facing change? Yes. ### How was this patch tested? Manuelly review. Closes #41280 from pan3793/SPARK-43751. Authored-by: Cheng Pan <chengpan@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit af6c1ec7c795584c28e15e4963eed83917e2f06a) Signed-off-by: Kent Yao <yao@apache.org> | 26 May 2023, 03:34:25 UTC |

| 457bb34 | itholic | 23 May 2023, 07:02:24 UTC | [MINOR][PS][TESTS] Fix `SeriesDateTimeTests.test_quarter` to work properly ### What changes were proposed in this pull request? This PR proposes to fix `SeriesDateTimeTests.test_quarter` to work properly. ### Why are the changes needed? Test has not been properly testing ### Does this PR introduce _any_ user-facing change? No, test-only. ### How was this patch tested? Manually tested, and the existing CI should pass Closes #41274 from itholic/minor_quarter_test. Authored-by: itholic <haejoon.lee@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit a5c53384def22b01b8ef28bee6f2d10648bce1a1) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 May 2023, 07:04:42 UTC |

| a3e79f4 | Dongjoon Hyun | 23 May 2023, 05:17:11 UTC | [SPARK-43719][WEBUI] Handle `missing row.excludedInStages` field ### What changes were proposed in this pull request? This PR aims to handle a corner case when `row.excludedInStages` field is missing. ### Why are the changes needed? To fix the following type error when Spark loads some very old 2.4.x or 3.0.x logs.  We have two places and this PR protects both places. ``` $ git grep row.excludedInStages core/src/main/resources/org/apache/spark/ui/static/executorspage.js: if (typeof row.excludedInStages === "undefined" || row.excludedInStages.length == 0) { core/src/main/resources/org/apache/spark/ui/static/executorspage.js: return "Active (Excluded in Stages: [" + row.excludedInStages.join(", ") + "])"; ``` ### Does this PR introduce _any_ user-facing change? No, this will remove the error case only. ### How was this patch tested? Manual review. Closes #41266 from dongjoon-hyun/SPARK-43719. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit eeab2e701330f7bc24e9b09ce48925c2c3265aa8) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 May 2023, 05:17:33 UTC |

| ebf1572 | Bruce Robbins | 23 May 2023, 04:46:59 UTC | [SPARK-43718][SQL] Set nullable correctly for keys in USING joins ### What changes were proposed in this pull request? In `Anaylzer#commonNaturalJoinProcessing`, set nullable correctly when adding the join keys to the Project's hidden_output tag. ### Why are the changes needed? The value of the hidden_output tag will be used for resolution of attributes in parent operators, so incorrect nullabilty can cause problems. For example, assume this data: ``` create or replace temp view t1 as values (1), (2), (3) as (c1); create or replace temp view t2 as values (2), (3), (4) as (c1); ``` The following query produces incorrect results: ``` spark-sql (default)> select explode(array(t1.c1, t2.c1)) as x1 from t1 full outer join t2 using (c1); 1 -1 <== should be null 2 2 3 3 -1 <== should be null 4 Time taken: 0.663 seconds, Fetched 8 row(s) spark-sql (default)> ``` Similar issues occur with right outer join and left outer join. `t1.c1` and `t2.c1` have the wrong nullability at the time the array is resolved, so the array's `containsNull` value is incorrect. `UpdateNullability` will update the nullability of `t1.c1` and `t2.c1` in the `CreateArray` arguments, but will not update `containsNull` in the function's data type. Queries that don't use arrays also can get wrong results. Assume this data: ``` create or replace temp view t1 as values (0), (1), (2) as (c1); create or replace temp view t2 as values (1), (2), (3) as (c1); create or replace temp view t3 as values (1, 2), (3, 4), (4, 5) as (a, b); ``` The following query produces incorrect results: ``` select t1.c1 as t1_c1, t2.c1 as t2_c1, b from t1 full outer join t2 using (c1), lateral ( select b from t3 where a = coalesce(t2.c1, 1) ) lt3; 1 1 2 NULL 3 4 Time taken: 2.395 seconds, Fetched 2 row(s) spark-sql (default)> ``` The result should be the following: ``` 0 NULL 2 1 1 2 NULL 3 4 ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? New tests. Closes #41267 from bersprockets/using_anomaly. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 217b30a4f7ca18ade19a9552c2b87dd4caeabe57) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 May 2023, 04:47:56 UTC |

| 99f10aa | Dongjoon Hyun | 19 May 2023, 15:34:07 UTC | [SPARK-43589][SQL][3.3] Fix `cannotBroadcastTableOverMaxTableBytesError` to use `bytesToString` ### What changes were proposed in this pull request? This is a logical backporting of #41232 This PR aims to fix `cannotBroadcastTableOverMaxTableBytesError` to use `bytesToString` instead of shift operations. ### Why are the changes needed? To avoid user confusion by giving more accurate values. For example, `maxBroadcastTableBytes` is 1GB and `dataSize` is `2GB - 1 byte`. **BEFORE** ``` Cannot broadcast the table that is larger than 1GB: 1 GB. ``` **AFTER** ``` Cannot broadcast the table that is larger than 1024.0 MiB: 2048.0 MiB. ``` ### Does this PR introduce _any_ user-facing change? Yes, but only error message. ### How was this patch tested? Pass the CIs with newly added test case. Closes #41234 from dongjoon-hyun/SPARK-43589-3.3. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 19 May 2023, 15:34:07 UTC |
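
The BEFORE/AFTER messages in the entry above come down to simple arithmetic, which can be sketched as follows. This is a minimal illustration only: `size_with_shift` and `size_in_mib` are hypothetical helpers written for this note, not Spark's `bytesToString`, and the one-decimal MiB formatting is an assumption chosen to match the AFTER message.

```python
GIB_SHIFT = 30  # 1 GiB == 2**30 bytes


def size_with_shift(num_bytes: int) -> str:
    """Truncating conversion: anything below a whole GiB is dropped."""
    return f"{num_bytes >> GIB_SHIFT} GB"


def size_in_mib(num_bytes: int) -> str:
    """Hypothetical stand-in for a bytesToString-style formatter."""
    return f"{num_bytes / 2**20:.1f} MiB"


max_broadcast_table_bytes = 1 << 30   # 1 GiB
data_size = (2 << 30) - 1             # 2 GiB minus one byte

# The shift makes both sizes look identical, which is the confusing message.
print(size_with_shift(max_broadcast_table_bytes), size_with_shift(data_size))
# A fractional unit keeps the two values apart.
print(size_in_mib(max_broadcast_table_bytes), size_in_mib(data_size))
```

With the shift, the limit and the table size both render as `1 GB`; with the MiB formatter they render as `1024.0 MiB` and `2048.0 MiB`.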

| b4d454f | Dongjoon Hyun | 19 May 2023, 06:31:14 UTC | [SPARK-43587][CORE][TESTS] Run `HealthTrackerIntegrationSuite` in a dedicated JVM ### What changes were proposed in this pull request? This PR aims to run `HealthTrackerIntegrationSuite` in a dedicated JVM to mitigate a flaky test. ### Why are the changes needed? `HealthTrackerIntegrationSuite` has been flaky and SPARK-25400 and SPARK-37384 increased the timeout `from 1s to 10s` and `10s to 20s`, respectively. The usual suspect of this flakiness is some unknown side-effect like GCs. In this PR, we aim to run this in a separate JVM instead of increasing the timeout further. https://github.com/apache/spark/blob/abc140263303c409f8d4b9632645c5c6cbc11d20/core/src/test/scala/org/apache/spark/scheduler/SchedulerIntegrationSuite.scala#L56-L58 This is the recent failure. - https://github.com/apache/spark/actions/runs/5020505360/jobs/9002039817 ``` [info] HealthTrackerIntegrationSuite: [info] - If preferred node is bad, without excludeOnFailure job will fail (92 milliseconds) [info] - With default settings, job can succeed despite multiple bad executors on node (3 seconds, 163 milliseconds) [info] - Bad node with multiple executors, job will still succeed with the right confs *** FAILED *** (20 seconds, 43 milliseconds) [info] java.util.concurrent.TimeoutException: Futures timed out after [20 seconds] [info] at scala.concurrent.impl.Promise$DefaultPromise.ready(Promise.scala:259) [info] at scala.concurrent.impl.Promise$DefaultPromise.ready(Promise.scala:187) [info] at org.apache.spark.util.ThreadUtils$.awaitReady(ThreadUtils.scala:355) [info] at org.apache.spark.scheduler.SchedulerIntegrationSuite.awaitJobTermination(SchedulerIntegrationSuite.scala:276) [info] at org.apache.spark.scheduler.HealthTrackerIntegrationSuite.$anonfun$new$9(HealthTrackerIntegrationSuite.scala:92) ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. 
Closes #41229 from dongjoon-hyun/SPARK-43587. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit eb2456bce2522779bf6b866a5fbb728472d35097) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 19 May 2023, 06:31:41 UTC |

| 132678f | Max Gekk | 19 May 2023, 00:24:50 UTC | [SPARK-43541][SQL][3.3] Propagate all `Project` tags in resolving of expressions and missing columns ### What changes were proposed in this pull request? In the PR, I propose to propagate all tags in a `Project` while resolving of expressions and missing columns in `ColumnResolutionHelper.resolveExprsAndAddMissingAttrs()`. This is a backport of https://github.com/apache/spark/pull/41204. ### Why are the changes needed? To fix the bug reproduced by the query below: ```sql spark-sql (default)> WITH > t1 AS (select key from values ('a') t(key)), > t2 AS (select key from values ('a') t(key)) > SELECT t1.key > FROM t1 FULL OUTER JOIN t2 USING (key) > WHERE t1.key NOT LIKE 'bb.%'; [UNRESOLVED_COLUMN.WITH_SUGGESTION] A column or function parameter with name `t1`.`key` cannot be resolved. Did you mean one of the following? [`key`].; line 4 pos 7; ``` ### Does this PR introduce _any_ user-facing change? No. It fixes a bug, and outputs the expected result: `a`. ### How was this patch tested? By new test added to `using-join.sql`: ``` $ PYSPARK_PYTHON=python3 build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z using-join.sql" ``` and the related test suites: ``` $ build/sbt -Phive-2.3 -Phive-thriftserver "test:testOnly org.apache.spark.sql.hive.HiveContextCompatibilitySuite" ``` Authored-by: Max Gekk <max.gekkgmail.com> Signed-off-by: Max Gekk <max.gekkgmail.com> (cherry picked from commit 09d5742a8679839d0846f50e708df98663a6d64c) Closes #41221 from MaxGekk/fix-using-join-3.3. Authored-by: Max Gekk <max.gekk@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 19 May 2023, 00:24:50 UTC |

| 9110c05 | --global | 07 May 2023, 21:14:30 UTC | [SPARK-37829][SQL][3.3] Dataframe.joinWith outer-join should return a null value for unmatched row ### What changes were proposed in this pull request? This is a pull request to port the fix from the master branch to version 3.3. [PR](https://github.com/apache/spark/pull/40755) When doing an outer join with joinWith on DataFrames, unmatched rows return Row objects with null fields instead of a single null value. This is not the expected behavior, and it's a regression introduced in [this commit](https://github.com/apache/spark/commit/cd92f25be5a221e0d4618925f7bc9dfd3bb8cb59). This pull request aims to fix the regression. Note that this is not a full rollback of the commit; it does not add back the "schema" variable. ``` case class ClassData(a: String, b: Int) val left = Seq(ClassData("a", 1), ClassData("b", 2)).toDF val right = Seq(ClassData("x", 2), ClassData("y", 3)).toDF left.joinWith(right, left("b") === right("b"), "left_outer").collect ``` ``` Wrong results (current behavior): Array(([a,1],[null,null]), ([b,2],[x,2])) Correct results: Array(([a,1],null), ([b,2],[x,2])) ``` ### Why are the changes needed? We need to address the regression mentioned above. It results in unexpected behavior changes in the Dataframe joinWith API between versions 2.4.8 and 3.0.0+. This could potentially cause data correctness issues for users who expect the old behavior when using Spark 3.0.0+. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Added unit test (use the same test in previous [closed pull request](https://github.com/apache/spark/pull/35140), credit to Clément de Groc) Run sql-core and sql-catalyst submodules locally with ./build/mvn clean package -pl sql/core,sql/catalyst Closes #40755 from kings129/encoder_bug_fix. Authored-by: --global <xuqiang129gmail.com> Closes #40858 from kings129/fix_encoder_branch_33. 
Authored-by: --global <xuqiang129@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 07 May 2023, 21:14:30 UTC |

| 85ff71f | Cheng Pan | 06 May 2023, 14:37:44 UTC | [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh ### What changes were proposed in this pull request? Add args `--no-mac-metadata --no-xattrs --no-fflags` to `tar` on macOS in `dev/make-distribution.sh` to exclude macOS-specific extended metadata. ### Why are the changes needed? The binary tarball created on macOS includes extended macOS-specific metadata and xattrs, which causes warnings when unarchiving it on Linux. Step to reproduce 1. create tarball on macOS (13.3.1) ``` ➜ apache-spark git:(master) tar --version bsdtar 3.5.3 - libarchive 3.5.3 zlib/1.2.11 liblzma/5.0.5 bz2lib/1.0.8 ``` ``` ➜ apache-spark git:(master) dev/make-distribution.sh --tgz ``` 2. unarchive the binary tarball on Linux (CentOS-7) ``` ➜ ~ tar --version tar (GNU tar) 1.26 Copyright (C) 2011 Free Software Foundation, Inc. License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>. This is free software: you are free to change and redistribute it. There is NO WARRANTY, to the extent permitted by law. Written by John Gilmore and Jay Fenlason. ``` ``` ➜ ~ tar -xzf spark-3.5.0-SNAPSHOT-bin-3.3.5.tgz tar: Ignoring unknown extended header keyword `SCHILY.fflags' tar: Ignoring unknown extended header keyword `LIBARCHIVE.xattr.com.apple.FinderInfo' ``` ### Does this PR introduce _any_ user-facing change? No, dev only. ### How was this patch tested? Create binary tarball on macOS then unarchive on Linux, warnings disappear after this change. Closes #41074 from pan3793/SPARK-43395. Authored-by: Cheng Pan <chengpan@apache.org> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 2d0240df3c474902e263f67b93fb497ca13da00f) Signed-off-by: Sean Owen <srowen@gmail.com> | 06 May 2023, 14:38:19 UTC |

| bc2c955 | Maytas Monsereenusorn | 05 May 2023, 19:07:59 UTC | [SPARK-43337][UI][3.3] Asc/desc arrow icons for sorting column does not get displayed in the table column ### What changes were proposed in this pull request? Remove css `!important` tag for asc/desc arrow icons in jquery.dataTables.1.10.25.min.css ### Why are the changes needed? Upgrading to DataTables 1.10.25 broke asc/desc arrow icons for sorting column. The sorting icon is not displayed when the column is clicked to sort by asc/desc. This is because the new DataTables 1.10.25's jquery.dataTables.1.10.25.min.css file added `!important` rule preventing the override set in webui-dataTables.css ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual test.   Closes #41060 from maytasm/fix-arrow-3. Authored-by: Maytas Monsereenusorn <maytasm@apache.org> Signed-off-by: Sean Owen <srowen@gmail.com> | 05 May 2023, 19:07:59 UTC |

| e9aab41 | Wenchen Fan | 27 April 2023, 02:52:03 UTC | [SPARK-43293][SQL] `__qualified_access_only` should be ignored in normal columns This is a followup of https://github.com/apache/spark/pull/39596 to fix more corner cases. It ignores the special column flag that requires qualified access for normal output attributes, as the flag should be effective only to metadata columns. It's very hard to make sure that we don't leak the special column flag. Since the bug has been in the Spark release for a while, there may be tables created with CTAS and the table schema contains the special flag. No new analysis test Closes #40961 from cloud-fan/col. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 021f02e02fb88bbbccd810ae000e14e0c854e2e6) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 27 April 2023, 02:55:21 UTC |

| 058dcbf | Jia Ke | 26 April 2023, 09:24:46 UTC | [SPARK-43240][SQL][3.3] Fix the wrong result issue when calling df.describe() method ### What changes were proposed in this pull request? The df.describe() method caches the RDD. If the cached RDD is an RDD[UnsafeRow], whose rows may be released after they are used, the result will be wrong. Here we need to copy the RDD before caching, as the [TakeOrderedAndProjectExec](https://github.com/apache/spark/blob/d68d46c9e2cec04541e2457f4778117b570d8cdb/sql/core/src/main/scala/org/apache/spark/sql/execution/limit.scala#L204) operator does. ### Why are the changes needed? bug fix ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? Closes #40914 from JkSelf/describe. Authored-by: Jia Ke <ke.a.jia@intel.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 26 April 2023, 09:24:46 UTC |

| 8f0c75c | Bruce Robbins | 24 April 2023, 00:58:15 UTC | [SPARK-43113][SQL][3.3] Evaluate stream-side variables when generating code for a bound condition ### What changes were proposed in this pull request? This is a back-port of #40766 and #40881. In `JoinCodegenSupport#getJoinCondition`, evaluate any referenced stream-side variables before using them in the generated code. This patch doesn't evaluate the passed stream-side variables directly, but instead evaluates a copy (`streamVars2`). This is because `SortMergeJoin#codegenFullOuter` will want to evaluate the stream-side vars within a different scope than the condition check, so we mustn't delete the initialization code from the original `ExprCode` instances. ### Why are the changes needed? When a bound condition of a full outer join references the same stream-side column more than once, wholestage codegen generates bad code. For example, the following query fails with a compilation error: ``` create or replace temp view v1 as select * from values (1, 1), (2, 2), (3, 1) as v1(key, value); create or replace temp view v2 as select * from values (1, 22, 22), (3, -1, -1), (7, null, null) as v2(a, b, c); select * from v1 full outer join v2 on key = a and value > b and value > c; ``` The error is: ``` org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 277, Column 9: Redefinition of local variable "smj_isNull_7" ``` The same error occurs with code generated from ShuffleHashJoinExec: ``` select /*+ SHUFFLE_HASH(v2) */ * from v1 full outer join v2 on key = a and value > b and value > c; ``` In this case, the error is: ``` org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 174, Column 5: Redefinition of local variable "shj_value_1" ``` Neither `SortMergeJoin#codegenFullOuter` nor `ShuffledHashJoinExec#doProduce` evaluate the stream-side variables before calling `consumeFullOuterJoinRow#getJoinCondition`. 
As a result, `getJoinCondition` generates definition/initialization code for each referenced stream-side variable at the point of use. If a stream-side variable is used more than once in the bound condition, the definition/initialization code is generated more than once, resulting in the "Redefinition of local variable" error. In the end, the query succeeds, since Spark disables wholestage codegen and tries again. (In the case of other join-type/strategy pairs, either the implementations don't call `JoinCodegenSupport#getJoinCondition`, or the stream-side variables are pre-evaluated before the call is made, so no error happens in those cases). ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? New unit tests. Closes #40917 from bersprockets/full_join_codegen_issue_br33. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 24 April 2023, 00:58:15 UTC |

| 6d0a271 | huaxingao | 21 April 2023, 14:46:00 UTC | [SPARK-41660][SQL][3.3] Only propagate metadata columns if they are used ### What changes were proposed in this pull request? backporting https://github.com/apache/spark/pull/39152 to 3.3 ### Why are the changes needed? bug fixing ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? UT Closes #40889 from huaxingao/metadata. Authored-by: huaxingao <huaxin_gao@apple.com> Signed-off-by: huaxingao <huaxin_gao@apple.com> | 21 April 2023, 14:46:00 UTC |

| cd16624 | Hyukjin Kwon | 17 April 2023, 01:11:55 UTC | [SPARK-43158][DOCS] Set upperbound of pandas version for Binder integration This PR proposes to set the upper bound for pandas in Binder integration. We don't currently support pandas 2.0.0 properly, see also https://issues.apache.org/jira/browse/SPARK-42618 To make the quickstarts work. Yes, it fixes the quickstart. Tested in: - https://mybinder.org/v2/gh/HyukjinKwon/spark/set-lower-bound-pandas?labpath=python%2Fdocs%2Fsource%2Fgetting_started%2Fquickstart_connect.ipynb - https://mybinder.org/v2/gh/HyukjinKwon/spark/set-lower-bound-pandas?labpath=python%2Fdocs%2Fsource%2Fgetting_started%2Fquickstart_df.ipynb - https://mybinder.org/v2/gh/HyukjinKwon/spark/set-lower-bound-pandas?labpath=python%2Fdocs%2Fsource%2Fgetting_started%2Fquickstart_ps.ipynb Closes #40814 from HyukjinKwon/set-lower-bound-pandas. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit a2592e6d46caf82c642f4a01a3fd5c282bbe174e) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 17 April 2023, 03:59:18 UTC |

| cc6d3f9 | Yuming Wang | 15 April 2023, 01:27:22 UTC | [SPARK-43050][SQL] Fix construct aggregate expressions by replacing grouping functions This PR fixes the construction of aggregate expressions by replacing grouping functions if an expression is part of an aggregation. In the example below, the second `b` should also be replaced. The bug being fixed: ``` spark-sql (default)> SELECT CASE WHEN a IS NULL THEN count(b) WHEN b IS NULL THEN count(c) END > FROM grouping > GROUP BY GROUPING SETS (a, b, c); [MISSING_AGGREGATION] The non-aggregating expression "b" is based on columns which are not participating in the GROUP BY clause. ``` No. Unit test. Closes #40685 from wangyum/SPARK-43050. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 45b84cd37add1b9ce274273ad5e519e6bc1d8013) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 15 April 2023, 02:17:43 UTC |

| 5ce51c3 | Dongjoon Hyun | 07 April 2023, 19:54:05 UTC | [SPARK-43069][BUILD] Use `sbt-eclipse` instead of `sbteclipse-plugin` ### What changes were proposed in this pull request? This PR aims to use `sbt-eclipse` instead of `sbteclipse-plugin`. ### Why are the changes needed? Thanks to SPARK-34959, Apache Spark 3.2+ uses SBT 1.5.0 and we can use `sbt-eclipse` instead of the old `sbteclipse-plugin`. - https://github.com/sbt/sbt-eclipse/releases/tag/6.0.0 ### Does this PR introduce _any_ user-facing change? No, this is a dev-only plugin. ### How was this patch tested? Pass the CIs and manual tests. ``` $ build/sbt eclipse Using /Users/dongjoon/.jenv/versions/1.8 as default JAVA_HOME. Note, this will be overridden by -java-home if it is set. Using SPARK_LOCAL_IP=localhost Attempting to fetch sbt Launching sbt from build/sbt-launch-1.8.2.jar [info] welcome to sbt 1.8.2 (AppleJDK-8.0.302.8.1 Java 1.8.0_302) [info] loading settings for project spark-merge-build from plugins.sbt ... [info] loading project definition from /Users/dongjoon/APACHE/spark-merge/project [info] Updating https://repo1.maven.org/maven2/com/github/sbt/sbt-eclipse_2.12_1.0/6.0.0/sbt-eclipse-6.0.0.pom 100.0% [##########] 2.5 KiB (4.5 KiB / s) ... ``` Closes #40708 from dongjoon-hyun/SPARK-43069. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 9cba5529d1fc3faf6b743a632df751d84ec86a07) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 07 April 2023, 19:54:28 UTC |

| f228f84 | Emil Ejbyfeldt | 07 April 2023, 01:14:07 UTC | [SPARK-39696][CORE] Fix data race in access to TaskMetrics.externalAccums ### What changes were proposed in this pull request? This PR fixes a data race around concurrent access to `TaskMetrics.externalAccums`. The race occurs between the `executor-heartbeater` thread and the thread executing the task. This data race is not known to cause issues on 2.12 but in 2.13 ~due this change https://github.com/scala/scala/pull/9258~ (LuciferYang bisected this to first cause failures in scala 2.13.7 one possible reason could be https://github.com/scala/scala/pull/9786) leads to an uncaught exception in the `executor-heartbeater` thread, which means that the executor will eventually be terminated due to missing heartbeats. This fix of using `CopyOnWriteArrayList` is cherry picked from https://github.com/apache/spark/pull/37206 where it was suggested as a fix by LuciferYang since `TaskMetrics.externalAccums` is also accessed from outside the class `TaskMetrics`. The old PR was closed because at that point there was no clear understanding of the race condition. JoshRosen commented here https://github.com/apache/spark/pull/37206#issuecomment-1189930626 saying that there should be no such race because all accumulators should be deserialized as part of task deserialization here: https://github.com/apache/spark/blob/0cc96f76d8a4858aee09e1fa32658da3ae76d384/core/src/main/scala/org/apache/spark/executor/Executor.scala#L507-L508 and therefore no writes should occur while the heartbeat thread reads the accumulators. But my understanding is that this is incorrect, as accumulators will also be deserialized as part of the taskBinary here: https://github.com/apache/spark/blob/169f828b1efe10d7f21e4b71a77f68cdd1d706d6/core/src/main/scala/org/apache/spark/scheduler/ShuffleMapTask.scala#L87-L88 which will happen while the heartbeater thread is potentially reading the accumulators. 
This can happen both due to user code using accumulators (see the new test case) and when using the Dataframe/Dataset API, as SQL metrics are also `externalAccums`. One way metrics will be sent as part of the taskBinary is when the dep is a `ShuffleDependency`: https://github.com/apache/spark/blob/fbbcf9434ac070dd4ced4fb9efe32899c6db12a9/core/src/main/scala/org/apache/spark/Dependency.scala#L85 with a ShuffleWriteProcessor that comes from https://github.com/apache/spark/blob/fbbcf9434ac070dd4ced4fb9efe32899c6db12a9/sql/core/src/main/scala/org/apache/spark/sql/execution/exchange/ShuffleExchangeExec.scala#L411-L422 ### Why are the changes needed? The current code has a data race. ### Does this PR introduce _any_ user-facing change? It will fix an uncaught exception in the `executor-heartbeater` thread when using scala 2.13. ### How was this patch tested? This patch adds a new test case that, before the fix was applied, consistently produced the uncaught exception in the heartbeater thread when using scala 2.13. Closes #40663 from eejbyfeldt/SPARK-39696. Lead-authored-by: Emil Ejbyfeldt <eejbyfeldt@liveintent.com> Co-authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 6ce0822f76e11447487d5f6b3cce94a894f2ceef) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit b2ff4c4f7ec21d41cb173b413bd5aa5feefd7eee) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 07 April 2023, 03:52:08 UTC |

| b40e408 | thyecust | 03 April 2023, 13:24:17 UTC | [SPARK-43005][PYSPARK] Fix typo in pyspark/pandas/config.py By comparing compute.isin_limit and plotting.max_rows, `v is v` is likely to be a typo. ### What changes were proposed in this pull request? fix `v is v >= 0` with `v >= 0`. ### Why are the changes needed? By comparing compute.isin_limit and plotting.max_rows, `v is v` is likely to be a typo. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? By GitHub Actions. Closes #40620 from thyecust/patch-2. Authored-by: thyecust <thy@mail.ecust.edu.cn> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 5ac2b0fc024ae499119dfd5ab2ee4d038418c5fd) Signed-off-by: Sean Owen <srowen@gmail.com> | 03 April 2023, 13:24:39 UTC |
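
A short sketch of how Python reads the `v is v >= 0` expression from the entry above. The function names are hypothetical and the real check in `pyspark/pandas/config.py` is not reproduced here; the sketch only relies on Python's comparison-chaining rule, under which `a is b >= c` means `(a is b) and (b >= c)`.

```python
def check_with_typo(v):
    # Chained comparison: evaluated as (v is v) and (v >= 0).
    # `v is v` is always True, so only `v >= 0` decides the outcome.
    return v is v >= 0


def check_fixed(v):
    # The form the commit switches to.
    return v >= 0


for value in (-1, 0, 5):
    print(value, check_with_typo(value), check_fixed(value))
```

Under that rule both forms return the same result for these integer inputs, which is consistent with the commit describing the change as a typo fix with no user-facing change.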

| 92e8d26 | thyecust | 03 April 2023, 03:36:04 UTC | [SPARK-43004][CORE] Fix typo in ResourceRequest.equals() vendor == vendor is always true, this is likely to be a typo. ### What changes were proposed in this pull request? fix `vendor == vendor` with `that.vendor == vendor`, and `discoveryScript == discoveryScript` with `that.discoveryScript == discoveryScript` ### Why are the changes needed? vendor == vendor is always true, this is likely to be a typo. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? By GitHub Actions. Closes #40622 from thyecust/patch-4. Authored-by: thyecust <thy@mail.ecust.edu.cn> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 52c000ece27c9ef34969a7fb252714588f395926) Signed-off-by: Sean Owen <srowen@gmail.com> | 03 April 2023, 03:36:21 UTC |
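
The effect of comparing a field with itself inside an equality method can be sketched in a few lines. The classes below are hypothetical Python analogues written for illustration; they are not Spark's Scala `ResourceRequest`, and only the `vendor` field from the entry above is modelled.

```python
class RequestWithTypo:
    """Hypothetical analogue: equality compares vendor with itself."""

    def __init__(self, name, vendor):
        self.name = name
        self.vendor = vendor

    def __eq__(self, that):
        return (isinstance(that, RequestWithTypo)
                and that.name == self.name
                and self.vendor == self.vendor)  # always True: the typo


class RequestFixed:
    """Hypothetical analogue: equality compares against the other object."""

    def __init__(self, name, vendor):
        self.name = name
        self.vendor = vendor

    def __eq__(self, that):
        return (isinstance(that, RequestFixed)
                and that.name == self.name
                and that.vendor == self.vendor)


print(RequestWithTypo("gpu", "vendor-a") == RequestWithTypo("gpu", "vendor-b"))
print(RequestFixed("gpu", "vendor-a") == RequestFixed("gpu", "vendor-b"))
```

With the typo, two requests that differ only in vendor compare as equal; with the fix they do not.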

| 6f266ee | Xingbo Jiang | 30 March 2023, 22:48:04 UTC | [SPARK-42967][CORE][3.2][3.3][3.4] Fix SparkListenerTaskStart.stageAttemptId when a task is started after the stage is cancelled ### What changes were proposed in this pull request? The PR fixes a bug where SparkListenerTaskStart can have `stageAttemptId = -1` when a task is launched after the stage is cancelled. Actually, we should use the information within `Task` to update the `stageAttemptId` field. ### Why are the changes needed? -1 is not a legal stageAttemptId value, so it can lead to unexpected problems if a subscriber tries to parse the stage information from the SparkListenerTaskStart event. ### Does this PR introduce _any_ user-facing change? No, it's a bugfix. ### How was this patch tested? Manually verified. Closes #40592 from jiangxb1987/SPARK-42967. Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com> Signed-off-by: Gengliang Wang <gengliang@apache.org> (cherry picked from commit 1a6b1770c85f37982b15d261abf9cc6e4be740f4) Signed-off-by: Gengliang Wang <gengliang@apache.org> | 30 March 2023, 22:48:32 UTC |

| 794bea5 | Bruce Robbins | 28 March 2023, 12:32:06 UTC | [SPARK-42937][SQL] `PlanSubqueries` should set `InSubqueryExec#shouldBroadcast` to true Change `PlanSubqueries` to set `shouldBroadcast` to true when instantiating an `InSubqueryExec` instance. The below left outer join gets an error: ``` create or replace temp view v1 as select * from values (1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1), (2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2), (3, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1) as v1(key, value1, value2, value3, value4, value5, value6, value7, value8, value9, value10); create or replace temp view v2 as select * from values (1, 2), (3, 8), (7, 9) as v2(a, b); create or replace temp view v3 as select * from values (3), (8) as v3(col1); set spark.sql.codegen.maxFields=10; -- let's make maxFields 10 instead of 100 set spark.sql.adaptive.enabled=false; select * from v1 left outer join v2 on key = a and key in (select col1 from v3); ``` The join fails during predicate codegen: ``` 23/03/27 12:24:12 WARN Predicate: Expr codegen error and falling back to interpreter mode java.lang.IllegalArgumentException: requirement failed: input[0, int, false] IN subquery#34 has not finished at scala.Predef$.require(Predef.scala:281) at org.apache.spark.sql.execution.InSubqueryExec.prepareResult(subquery.scala:144) at org.apache.spark.sql.execution.InSubqueryExec.doGenCode(subquery.scala:156) at org.apache.spark.sql.catalyst.expressions.Expression.$anonfun$genCode$3(Expression.scala:201) at scala.Option.getOrElse(Option.scala:189) at org.apache.spark.sql.catalyst.expressions.Expression.genCode(Expression.scala:196) at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext.$anonfun$generateExpressions$2(CodeGenerator.scala:1278) at scala.collection.immutable.List.map(List.scala:293) at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext.generateExpressions(CodeGenerator.scala:1278) at org.apache.spark.sql.catalyst.expressions.codegen.GeneratePredicate$.create(GeneratePredicate.scala:41) at 
org.apache.spark.sql.catalyst.expressions.codegen.GeneratePredicate$.generate(GeneratePredicate.scala:33) at org.apache.spark.sql.catalyst.expressions.Predicate$.createCodeGeneratedObject(predicates.scala:73) at org.apache.spark.sql.catalyst.expressions.Predicate$.createCodeGeneratedObject(predicates.scala:70) at org.apache.spark.sql.catalyst.expressions.CodeGeneratorWithInterpretedFallback.createObject(CodeGeneratorWithInterpretedFallback.scala:51) at org.apache.spark.sql.catalyst.expressions.Predicate$.create(predicates.scala:86) at org.apache.spark.sql.execution.joins.HashJoin.boundCondition(HashJoin.scala:146) at org.apache.spark.sql.execution.joins.HashJoin.boundCondition$(HashJoin.scala:140) at org.apache.spark.sql.execution.joins.BroadcastHashJoinExec.boundCondition$lzycompute(BroadcastHashJoinExec.scala:40) at org.apache.spark.sql.execution.joins.BroadcastHashJoinExec.boundCondition(BroadcastHashJoinExec.scala:40) ``` It fails again after fallback to interpreter mode: ``` 23/03/27 12:24:12 ERROR Executor: Exception in task 2.0 in stage 2.0 (TID 7) java.lang.IllegalArgumentException: requirement failed: input[0, int, false] IN subquery#34 has not finished at scala.Predef$.require(Predef.scala:281) at org.apache.spark.sql.execution.InSubqueryExec.prepareResult(subquery.scala:144) at org.apache.spark.sql.execution.InSubqueryExec.eval(subquery.scala:151) at org.apache.spark.sql.catalyst.expressions.InterpretedPredicate.eval(predicates.scala:52) at org.apache.spark.sql.execution.joins.HashJoin.$anonfun$boundCondition$2(HashJoin.scala:146) at org.apache.spark.sql.execution.joins.HashJoin.$anonfun$boundCondition$2$adapted(HashJoin.scala:146) at org.apache.spark.sql.execution.joins.HashJoin.$anonfun$outerJoin$1(HashJoin.scala:205) ``` Both the predicate codegen and the evaluation fail for the same reason: `PlanSubqueries` creates `InSubqueryExec` with `shouldBroadcast=false`. 
The driver waits for the subquery to finish, but it's the executor that uses the results of the subquery (for predicate codegen or evaluation). Because `shouldBroadcast` is set to false, the result is stored in a transient field (`InSubqueryExec#result`), so the result of the subquery is not serialized when the `InSubqueryExec` instance is sent to the executor. The issue occurs, as far as I can tell, only when both whole stage codegen is disabled and adaptive execution is disabled. When wholestage codegen is enabled, the predicate codegen happens on the driver, so the subquery's result is available. When adaptive execution is enabled, `PlanAdaptiveSubqueries` always sets `shouldBroadcast=true`, so the subquery's result is available on the executor, if needed. No. New unit test. Closes #40569 from bersprockets/join_subquery_issue. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 5b20f3d94095f54017be3d31d11305e597334d8b) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 28 March 2023, 12:33:22 UTC |

| 4aa8860 | Mridul Muralidharan | 28 March 2023, 03:48:05 UTC | [SPARK-42922][SQL] Move from Random to SecureRandom ### What changes were proposed in this pull request? Most uses of `Random` in spark are either in testcases or where we need a pseudo random number which is repeatable. Use `SecureRandom`, instead of `Random` for the cases where it impacts security. ### Why are the changes needed? Use of `SecureRandom` in more security sensitive contexts. This was flagged in our internal scans as well. ### Does this PR introduce _any_ user-facing change? Directly no. Would improve security posture of Apache Spark. ### How was this patch tested? Existing unit tests Closes #40568 from mridulm/SPARK-42922. Authored-by: Mridul Muralidharan <mridulatgmail.com> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 744434358cb0c687b37d37dd62f2e7d837e52b2d) Signed-off-by: Sean Owen <srowen@gmail.com> | 28 March 2023, 03:48:23 UTC |

| fc126b4 | Cheng Pan | 27 March 2023, 22:31:16 UTC | [SPARK-42906][K8S] Replace a starting digit with `x` in resource name prefix ### What changes were proposed in this pull request? Change the generated resource name prefix to meet K8s requirements > DNS-1035 label must consist of lower case alphanumeric characters or '-', start with an alphabetic character, and end with an alphanumeric character (e.g. 'my-name', or 'abc-123', regex used for validation is '[a-z]([-a-z0-9]*[a-z0-9])?') ### Why are the changes needed? In current implementation, the following app name causes error ``` bin/spark-submit \ --master k8s://https://*.*.*.*:6443 \ --deploy-mode cluster \ --name 你好_187609 \ ... ``` ``` Exception in thread "main" io.fabric8.kubernetes.client.KubernetesClientException: Failure executing: POST at: https://*.*.*.*:6443/api/v1/namespaces/spark/services. Message: Service "187609-f19020870d12c349-driver-svc" is invalid: metadata.name: Invalid value: "187609-f19020870d12c349-driver-svc": a DNS-1035 label must consist of lower case alphanumeric characters or '-', start with an alphabetic character, and end with an alphanumeric character (e.g. 'my-name', or 'abc-123', regex used for validation is '[a-z]([-a-z0-9]*[a-z0-9])?'). ``` ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? New UT. Closes #40533 from pan3793/SPARK-42906. Authored-by: Cheng Pan <chengpan@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 0b9a3017005ccab025b93d7b545412b226d4e63c) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 March 2023, 22:31:45 UTC |
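
The DNS-1035 rule quoted in the entry above can be checked with the validation regex it cites. The sketch below is an assumption-laden illustration, not Spark's Kubernetes code: `is_dns1035_label` and `replace_leading_digit` are hypothetical helpers, and the replacement rule is inferred only from the commit title ("Replace a starting digit with `x`"). Length limits are not checked here.

```python
import re

# Regex quoted in the Kubernetes error message from the commit description.
DNS_1035_LABEL = re.compile(r"[a-z]([-a-z0-9]*[a-z0-9])?")


def is_dns1035_label(name: str) -> bool:
    """True if the whole name matches the DNS-1035 label pattern."""
    return DNS_1035_LABEL.fullmatch(name) is not None


def replace_leading_digit(prefix: str) -> str:
    """Hypothetical helper: swap a leading ASCII digit for 'x'."""
    if prefix and "0" <= prefix[0] <= "9":
        return "x" + prefix[1:]
    return prefix


rejected = "187609-f19020870d12c349-driver-svc"  # name from the error message
print(is_dns1035_label(rejected))
print(replace_leading_digit(rejected), is_dns1035_label(replace_leading_digit(rejected)))
```

The rejected service name fails the check because it starts with a digit; after the leading digit is replaced, the same name matches the pattern.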

| 13702f0 | yangjie01 | 27 March 2023, 16:42:40 UTC | [SPARK-42934][BUILD] Add `spark.hadoop.hadoop.security.key.provider.path` to `scalatest-maven-plugin` ### What changes were proposed in this pull request? When testing `OrcEncryptionSuite` using maven, all test suites are always skipped. So this pr add `spark.hadoop.hadoop.security.key.provider.path` to `systemProperties` of `scalatest-maven-plugin` to make `OrcEncryptionSuite` can test by maven. ### Why are the changes needed? Make `OrcEncryptionSuite` can test by maven. ### Does this PR introduce _any_ user-facing change? No, just for maven test ### How was this patch tested? - Pass GitHub Actions - Manual testing: run ``` build/mvn clean install -pl sql/core -DskipTests -am build/mvn test -pl sql/core -Dtest=none -DwildcardSuites=org.apache.spark.sql.execution.datasources.orc.OrcEncryptionSuite ``` **Before** ``` Discovery starting. Discovery completed in 3 seconds, 218 milliseconds. Run starting. Expected test count is: 4 OrcEncryptionSuite: 21:57:58.344 WARN org.apache.hadoop.util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable - Write and read an encrypted file !!! CANCELED !!! [] was empty org.apache.orc.impl.NullKeyProvider5af5d76f doesn't has the test keys. ORC shim is created with old Hadoop libraries (OrcEncryptionSuite.scala:37) - Write and read an encrypted table !!! CANCELED !!! [] was empty org.apache.orc.impl.NullKeyProvider5ad6cc21 doesn't has the test keys. ORC shim is created with old Hadoop libraries (OrcEncryptionSuite.scala:65) - SPARK-35325: Write and read encrypted nested columns !!! CANCELED !!! [] was empty org.apache.orc.impl.NullKeyProvider691124ee doesn't has the test keys. ORC shim is created with old Hadoop libraries (OrcEncryptionSuite.scala:116) - SPARK-35992: Write and read fully-encrypted columns with default masking !!! CANCELED !!! [] was empty org.apache.orc.impl.NullKeyProvider5403799b doesn't has the test keys. ORC shim is created with old Hadoop libraries (OrcEncryptionSuite.scala:166) 21:58:00.035 WARN org.apache.spark.sql.execution.datasources.orc.OrcEncryptionSuite: ===== POSSIBLE THREAD LEAK IN SUITE o.a.s.sql.execution.datasources.orc.OrcEncryptionSuite, threads: rpc-boss-3-1 (daemon=true), shuffle-boss-6-1 (daemon=true) ===== Run completed in 5 seconds, 41 milliseconds. Total number of tests run: 0 Suites: completed 2, aborted 0 Tests: succeeded 0, failed 0, canceled 4, ignored 0, pending 0 No tests were executed. ``` **After** ``` Discovery starting. Discovery completed in 3 seconds, 185 milliseconds. Run starting. Expected test count is: 4 OrcEncryptionSuite: 21:58:46.540 WARN org.apache.hadoop.util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable - Write and read an encrypted file - Write and read an encrypted table - SPARK-35325: Write and read encrypted nested columns - SPARK-35992: Write and read fully-encrypted columns with default masking 21:58:51.933 WARN org.apache.spark.sql.execution.datasources.orc.OrcEncryptionSuite: ===== POSSIBLE THREAD LEAK IN SUITE o.a.s.sql.execution.datasources.orc.OrcEncryptionSuite, threads: rpc-boss-3-1 (daemon=true), shuffle-boss-6-1 (daemon=true) ===== Run completed in 8 seconds, 708 milliseconds. Total number of tests run: 4 Suites: completed 2, aborted 0 Tests: succeeded 4, failed 0, canceled 0, ignored 0, pending 0 All tests passed. ``` Closes #40566 from LuciferYang/SPARK-42934-2. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit a3d9e0ae0f95a55766078da5d0bf0f74f3c3cfc3) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 March 2023, 16:42:59 UTC |

| 07a1563 | Dongjoon Hyun | 15 March 2023, 07:39:25 UTC | [SPARK-42799][BUILD] Update SBT build `xercesImpl` version to match with `pom.xml` This PR aims to update `XercesImpl` version to `2.12.2` from `2.12.0` in order to match with the version of `pom.xml`. https://github.com/apache/spark/blob/149e020a5ca88b2db9c56a9d48e0c1c896b57069/pom.xml#L1429-L1433 When we updated this version via SPARK-39183, we missed to update `SparkBuild.scala`. - https://github.com/apache/spark/pull/36544 No, this is a dev-only change because the release artifact' dependency is managed by Maven. Pass the CIs. Closes #40431 from dongjoon-hyun/SPARK-42799. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 049aa380b8b1361c2898bc499e64613d329c6f72) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 15 March 2023, 07:40:29 UTC |

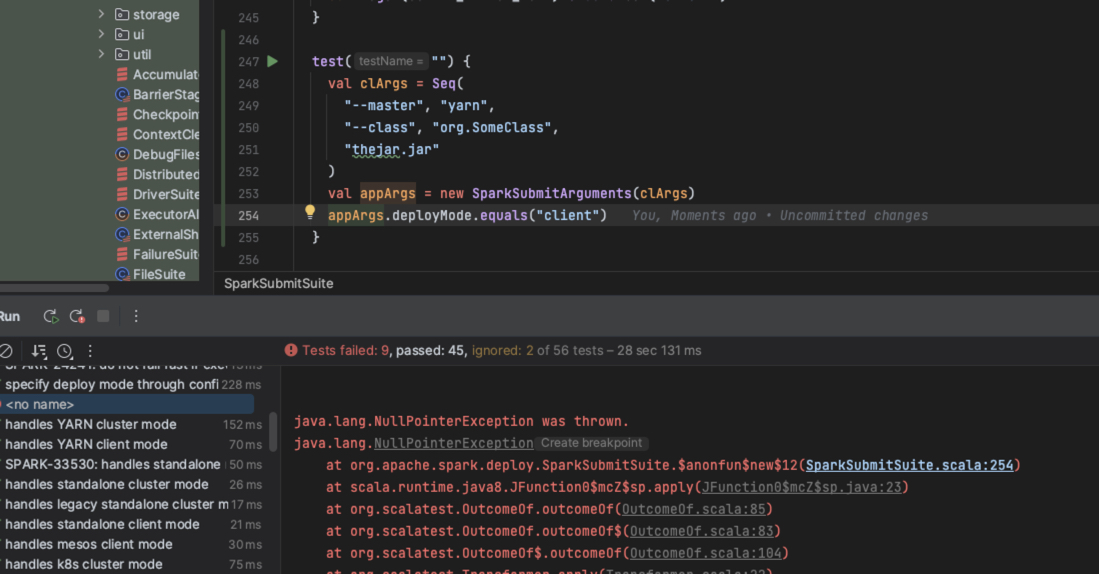

| aa84c06 | zwangsheng | 14 March 2023, 15:49:13 UTC | [SPARK-42785][K8S][CORE] When spark submit without `--deploy-mode`, avoid facing NPE in Kubernetes Case ### What changes were proposed in this pull request? After https://github.com/apache/spark/pull/37880 when user spark submit without `--deploy-mode XXX` or `--conf spark.submit.deployMode=XXXX`, may face NPE with this code. ### Why are the changes needed? https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala#164 ```scala args.deployMode.equals("client") && ``` Of course, submit without `deployMode` is not allowed and will throw an exception and terminate the application, but we should leave it to the later logic to give the appropriate hint instead of giving a NPE. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested?  Closes #40414 from zwangsheng/SPARK-42785. Authored-by: zwangsheng <2213335496@qq.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 767253bb6219f775a8a21f1cdd0eb8c25fa0b9de) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 14 March 2023, 15:49:34 UTC |

| aa86093 | Ruifeng Zheng | 11 March 2023, 14:45:54 UTC | [SPARK-42747][ML] Fix incorrect internal status of LoR and AFT ### What changes were proposed in this pull request? Add a hook `onParamChange` in `Params.{set, setDefault, clear}`, so that subclass can update the internal status within it. ### Why are the changes needed? In 3.1, we added internal auxiliary variables in LoR and AFT to optimize prediction/transformation. In LoR, when users call `model.{setThreshold, setThresholds}`, the internal status will be correctly updated. But users still can call `model.set(model.threshold, value)`, then the status will not be updated. And when users call `model.clear(model.threshold)`, the status should be updated with default threshold value 0.5. for example: ``` import org.apache.spark.ml.linalg._ import org.apache.spark.ml.classification._ val df = Seq((1.0, 1.0, Vectors.dense(0.0, 5.0)), (0.0, 2.0, Vectors.dense(1.0, 2.0)), (1.0, 3.0, Vectors.dense(2.0, 1.0)), (0.0, 4.0, Vectors.dense(3.0, 3.0))).toDF("label", "weight", "features") val lor = new LogisticRegression().setWeightCol("weight") val model = lor.fit(df) val vec = Vectors.dense(0.0, 5.0) val p0 = model.predict(vec) // return 0.0 model.setThreshold(0.05) // change status val p1 = model.set(model.threshold, 0.5).predict(vec) // return 1.0; but should be 0.0 val p2 = model.clear(model.threshold).predict(vec) // return 1.0; but should be 0.0 ``` what makes it even worse it that `pyspark.ml` always set params via `model.set(model.threshold, value)`, so the internal status is easily out of sync, see the example in [SPARK-42747](https://issues.apache.org/jira/browse/SPARK-42747) ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? added ut Closes #40367 from zhengruifeng/ml_param_hook. Authored-by: Ruifeng Zheng <ruifengz@apache.org> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 5a702f22f49ca6a1b6220ac645e3fce70ec5189d) Signed-off-by: Sean Owen <srowen@gmail.com> | 11 March 2023, 14:46:10 UTC |

| cfcd453 | Kent Yao | 09 March 2023, 05:33:43 UTC | [SPARK-42697][WEBUI] Fix /api/v1/applications to return total uptime instead of 0 for the duration field ### What changes were proposed in this pull request? Fix /api/v1/applications to return total uptime instead of 0 for duration ### Why are the changes needed? Fix REST API OneApplicationResource ### Does this PR introduce _any_ user-facing change? yes, /api/v1/applications will return the total uptime instead of 0 for the duration ### How was this patch tested? locally build and run ```json [ { "id" : "local-1678183638394", "name" : "SparkSQL::10.221.102.180", "attempts" : [ { "startTime" : "2023-03-07T10:07:17.754GMT", "endTime" : "1969-12-31T23:59:59.999GMT", "lastUpdated" : "2023-03-07T10:07:17.754GMT", "duration" : 20317, "sparkUser" : "kentyao", "completed" : false, "appSparkVersion" : "3.5.0-SNAPSHOT", "startTimeEpoch" : 1678183637754, "endTimeEpoch" : -1, "lastUpdatedEpoch" : 1678183637754 } ] } ] ``` Closes #40313 from yaooqinn/SPARK-42697. Authored-by: Kent Yao <yao@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit d3d8fdc2882f5c084897ca9b2af9a063358f3a21) Signed-off-by: Kent Yao <yao@apache.org> | 09 March 2023, 05:34:27 UTC |

| 9740739 | Shrikant Prasad | 08 March 2023, 03:33:39 UTC | [SPARK-39399][CORE][K8S] Fix proxy-user authentication for Spark on k8s in cluster deploy mode ### What changes were proposed in this pull request? The PR fixes the authentication failure of the proxy user on driver side while accessing kerberized hdfs through spark on k8s job. It follows the similar approach as it was done for Mesos: https://github.com/mesosphere/spark/pull/26 ### Why are the changes needed? When we try to access the kerberized HDFS through a proxy user in Spark Job running in cluster deploy mode with Kubernetes resource manager, we encounter AccessControlException. This is because authentication in driver is done using tokens of the proxy user and since proxy user doesn't have any delegation tokens on driver, auth fails. Further details: https://issues.apache.org/jira/browse/SPARK-25355?focusedCommentId=17532063&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-17532063 https://issues.apache.org/jira/browse/SPARK-25355?focusedCommentId=17532135&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-17532135 ### Does this PR introduce _any_ user-facing change? Yes, user will now be able to use proxy-user to access kerberized hdfs with Spark on K8s. ### How was this patch tested? The patch was tested by: 1. Running job which accesses kerberized hdfs with proxy user in cluster mode and client mode with kubernetes resource manager. 2. Running job which accesses kerberized hdfs without proxy user in cluster mode and client mode with kubernetes resource manager. 3. Build and run test github action : https://github.com/shrprasa/spark/actions/runs/3051203625 Closes #37880 from shrprasa/proxy_user_fix. Authored-by: Shrikant Prasad <shrprasa@visa.com> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit b3b3557ccbe53e34e0d0dbe3d21f49a230ee621b) Signed-off-by: Kent Yao <yao@apache.org> | 08 March 2023, 03:34:54 UTC |

| 2bd20a9 | Yikf | 06 March 2023, 22:06:12 UTC | [SPARK-42478][SQL][3.3] Make a serializable jobTrackerId instead of a non-serializable JobID in FileWriterFactory This is a backport of https://github.com/apache/spark/pull/40064 for branch-3.3 ### What changes were proposed in this pull request? Make a serializable jobTrackerId instead of a non-serializable JobID in FileWriterFactory ### Why are the changes needed? [SPARK-41448](https://issues.apache.org/jira/browse/SPARK-41448) make consistent MR job IDs in FileBatchWriter and FileFormatWriter, but it breaks a serializable issue, JobId is non-serializable. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? GA Closes #40290 from Yikf/backport-SPARK-42478-3.3. Authored-by: Yikf <yikaifei@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 06 March 2023, 22:06:12 UTC |