https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2


| Revision | Author | Date | Message | Commit Date |

|---|---|---|---|---|

| 87a5442 | Xinrong Meng | 07 April 2023, 01:28:44 UTC | Preparing Spark release v3.4.0-rc7 | 07 April 2023, 01:28:44 UTC |

| b2ff4c4 | Emil Ejbyfeldt | 07 April 2023, 01:14:07 UTC | [SPARK-39696][CORE] Fix data race in access to TaskMetrics.externalAccums ### What changes were proposed in this pull request? This PR fixes a data race around concurrent access to `TaskMetrics.externalAccums`. The race occurs between the `executor-heartbeater` thread and the thread executing the task. This data race is not known to cause issues on 2.12 but in 2.13 ~due this change https://github.com/scala/scala/pull/9258~ (LuciferYang bisected this to first cause failures in scala 2.13.7; one possible reason could be https://github.com/scala/scala/pull/9786) it leads to an uncaught exception in the `executor-heartbeater` thread, which means that the executor will eventually be terminated due to missing heartbeats. This fix of using `CopyOnWriteArrayList` is cherry picked from https://github.com/apache/spark/pull/37206 where it was suggested as a fix by LuciferYang since `TaskMetrics.externalAccums` is also accessed from outside the class `TaskMetrics`. The old PR was closed because at that point there was no clear understanding of the race condition. JoshRosen commented here https://github.com/apache/spark/pull/37206#issuecomment-1189930626 saying that there should be no such race because all accumulators should be deserialized as part of task deserialization here: https://github.com/apache/spark/blob/0cc96f76d8a4858aee09e1fa32658da3ae76d384/core/src/main/scala/org/apache/spark/executor/Executor.scala#L507-L508 and therefore no writes should occur while the heartbeat thread reads the accumulators. But my understanding is that this is incorrect, as accumulators will also be deserialized as part of the taskBinary here: https://github.com/apache/spark/blob/169f828b1efe10d7f21e4b71a77f68cdd1d706d6/core/src/main/scala/org/apache/spark/scheduler/ShuffleMapTask.scala#L87-L88 which will happen while the heartbeater thread is potentially reading the accumulators. This can happen both due to user code using accumulators (see the new test case) and when using the Dataframe/Dataset API, as sql metrics will also be `externalAccums`. One way metrics will be sent as part of the taskBinary is when the dep is a `ShuffleDependency`: https://github.com/apache/spark/blob/fbbcf9434ac070dd4ced4fb9efe32899c6db12a9/core/src/main/scala/org/apache/spark/Dependency.scala#L85 with a ShuffleWriteProcessor that comes from https://github.com/apache/spark/blob/fbbcf9434ac070dd4ced4fb9efe32899c6db12a9/sql/core/src/main/scala/org/apache/spark/sql/execution/exchange/ShuffleExchangeExec.scala#L411-L422 ### Why are the changes needed? The current code has a data race. ### Does this PR introduce _any_ user-facing change? It will fix an uncaught exception in the `executor-heartbeater` thread when using scala 2.13. ### How was this patch tested? This patch adds a new test case that, before the fix was applied, consistently produced the uncaught exception in the heartbeater thread when using scala 2.13. Closes #40663 from eejbyfeldt/SPARK-39696. Lead-authored-by: Emil Ejbyfeldt <eejbyfeldt@liveintent.com> Co-authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 6ce0822f76e11447487d5f6b3cce94a894f2ceef) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 07 April 2023, 01:14:14 UTC |

| 1d974a7 | Xinrong Meng | 06 April 2023, 16:38:33 UTC | Preparing development version 3.4.1-SNAPSHOT | 06 April 2023, 16:38:33 UTC |

| 28d0723 | Xinrong Meng | 06 April 2023, 16:38:28 UTC | Preparing Spark release v3.4.0-rc6 | 06 April 2023, 16:38:28 UTC |

| 9037642 | aokolnychyi | 06 April 2023, 05:10:50 UTC | [SPARK-43041][SQL] Restore constructors of exceptions for compatibility in connector API ### What changes were proposed in this pull request? This PR adds back old constructors for exceptions used in the public connector API based on Spark 3.3. ### Why are the changes needed? These changes are needed to avoid breaking connectors when consuming Spark 3.4. Here is a list of exceptions used in the connector API (`org.apache.spark.sql.connector`): ``` NoSuchNamespaceException NoSuchTableException NoSuchViewException NoSuchPartitionException NoSuchPartitionsException (not referenced by public Catalog API but I assume it may be related to the exception above, which is referenced) NoSuchFunctionException NoSuchIndexException NamespaceAlreadyExistsException TableAlreadyExistsException ViewAlreadyExistsException PartitionAlreadyExistsException (not referenced by public Catalog API but I assume it may be related to the exception below, which is referenced) PartitionsAlreadyExistException IndexAlreadyExistsException ``` ### Does this PR introduce _any_ user-facing change? Adds back previously released constructors. ### How was this patch tested? Existing tests. Closes #40679 from aokolnychyi/spark-43041. Authored-by: aokolnychyi <aokolnychyi@apple.com> Signed-off-by: Gengliang Wang <gengliang@apache.org> (cherry picked from commit 6546988ead06af8de33108ad0eb3f25af839eadc) Signed-off-by: Gengliang Wang <gengliang@apache.org> | 06 April 2023, 05:10:57 UTC |

| f2900f8 | Daniel Tenedorio | 06 April 2023, 04:16:52 UTC | [SPARK-43018][SQL] Fix bug for INSERT commands with timestamp literals ### What changes were proposed in this pull request? This PR fixes a correctness bug for INSERT commands with timestamp literals. The bug manifests when: * An INSERT command includes a user-specified column list of fewer columns than the target table. * The provided values include timestamp literals. The bug was that the long integer values stored in the rows to represent these timestamp literals were getting assigned back to `UnresolvedInlineTable` rows without the timestamp type. Then the analyzer inserted an implicit cast from `LongType` to `TimestampType` later, which incorrectly caused the value to change during execution. This PR fixes the bug by propagating the timestamp type directly to the output table instead. ### Why are the changes needed? This PR fixes a correctness bug. ### Does this PR introduce _any_ user-facing change? Yes, this PR fixes a correctness bug. ### How was this patch tested? This PR adds a new unit test suite. Closes #40652 from dtenedor/assign-correct-insert-types. Authored-by: Daniel Tenedorio <daniel.tenedorio@databricks.com> Signed-off-by: Gengliang Wang <gengliang@apache.org> (cherry picked from commit 9f0bf51a3a7f6175de075198e00a55bfdc491f15) Signed-off-by: Gengliang Wang <gengliang@apache.org> | 06 April 2023, 04:17:01 UTC |

| 34c7c3b | Hyukjin Kwon | 05 April 2023, 06:00:51 UTC | [MINOR][CONNECT][DOCS] Clarify Spark Connect option in Spark scripts ### What changes were proposed in this pull request? This PR clarifies Spark Connect option to be consistent with other sections. ### Why are the changes needed? To be consistent with other configuration docs, and to be clear about Spark Connect option. ### Does this PR introduce _any_ user-facing change? Yes, it changes the user-facing doc in Spark scripts. ### How was this patch tested? Manually tested. Closes #40671 from HyukjinKwon/another-minor-docs. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit a7a0f7a92d0517e553de46193592e1e6d1c3add2) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 05 April 2023, 06:00:57 UTC |

| c79fc94 | Takuya UESHIN | 05 April 2023, 03:04:11 UTC | [SPARK-42983][CONNECT][PYTHON] Fix createDataFrame to handle 0-dim numpy array properly ### What changes were proposed in this pull request? Fix `createDataFrame` to handle 0-dim numpy array properly. ### Why are the changes needed? When 0-dim numpy array is passed to `createDataFrame`, it raises an unexpected error: ```py >>> import numpy as np >>> spark.createDataFrame(np.array(0)) Traceback (most recent call last): ... TypeError: len() of unsized object ``` The error message should be: ```py ValueError: NumPy array input should be of 1 or 2 dimensions. ``` ### Does this PR introduce _any_ user-facing change? It will show a proper error message. ### How was this patch tested? Enabled/updated the related test. Closes #40669 from ueshin/issues/SPARK-42983/zero_dim_nparray. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 6fc9f0221fd273715b9d1047c951e00cec078965) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 05 April 2023, 03:04:18 UTC |

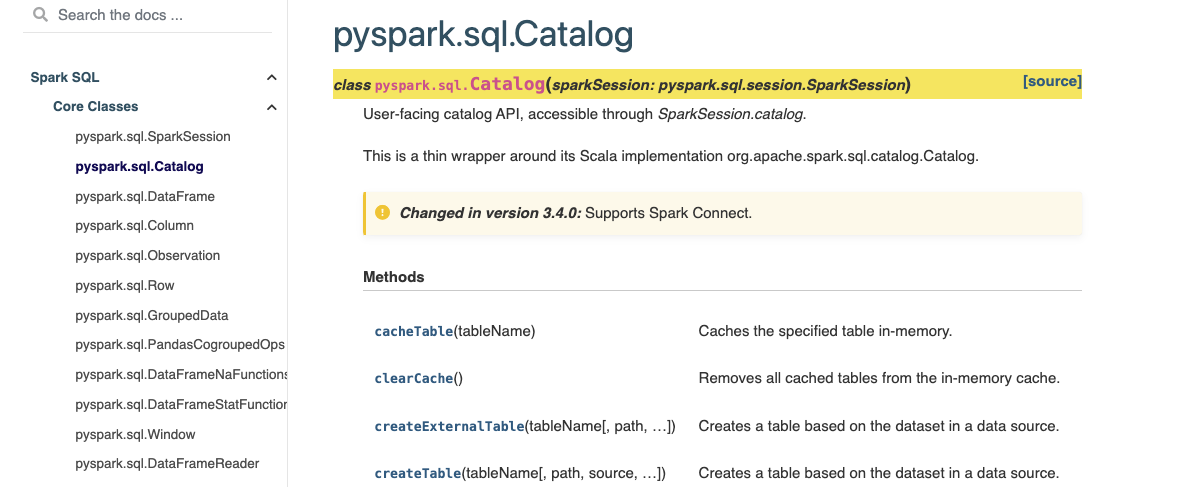

| d373661 | Hyukjin Kwon | 05 April 2023, 01:48:13 UTC | [MINOR][PYTHON][CONNECT][DOCS] Deduplicate versionchanged directive in Catalog ### What changes were proposed in this pull request? This PR proposes to deduplicate versionchanged directive in Catalog. ### Why are the changes needed? All API is implemented so we don't need to mark individual method. ### Does this PR introduce _any_ user-facing change? Yes, it changes the documentation. ### How was this patch tested? Manually check with documentation build. CI in this PR should test it out too.  Closes #40670 from HyukjinKwon/minor-doc-catalog. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit b86d90d1eb0a5c085965c3e558d2c873a8157830) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 05 April 2023, 01:48:28 UTC |

| 532d446 | Max Gekk | 05 April 2023, 00:12:08 UTC | [SPARK-43009][SQL][3.4] Parameterized `sql()` with `Any` constants ### What changes were proposed in this pull request? In the PR, I propose to change API of parameterized SQL, and replace type of argument values from `string` to `Any` in Scala/Java/Python and `Expression.Literal` in protobuf API. Language API can accept `Any` objects from which it is possible to construct literal expressions. This is a backport of https://github.com/apache/spark/pull/40623 #### Scala/Java: ```scala def sql(sqlText: String, args: Map[String, Any]): DataFrame ``` values of the `args` map are wrapped by the `lit()` function which leaves `Column` as is and creates a literal from other Java/Scala objects (for more details see the `Scala` tab at https://spark.apache.org/docs/latest/sql-ref-datatypes.html). #### Python: ```python def sql(self, sqlQuery: str, args: Optional[Dict[str, Any]] = None, **kwargs: Any) -> DataFrame: ``` Similarly to Scala/Java `sql`, Python's `sql()` accepts Python objects as values of the `args` dictionary (see more details about acceptable Python objects at https://spark.apache.org/docs/latest/sql-ref-datatypes.html). `sql()` converts dictionary values to `Column` literal expressions by `lit()`. #### Protobuf: ```proto message SqlCommand { // (Required) SQL Query. string sql = 1; // (Optional) A map of parameter names to literal expressions. map<string, Expression.Literal> args = 2; } ``` For example: ```scala scala> val sqlText = """SELECT s FROM VALUES ('Jeff /*__*/ Green'), ('E\'Twaun Moore') AS t(s) WHERE s = :player_name""" sqlText: String = SELECT s FROM VALUES ('Jeff /*__*/ Green'), ('E\'Twaun Moore') AS t(s) WHERE s = :player_name scala> sql(sqlText, args = Map("player_name" -> lit("E'Twaun Moore"))).show(false) +-------------+ |s | +-------------+ |E'Twaun Moore| +-------------+ ``` ### Why are the changes needed? The current implementation of the parameterized `sql()` requires arguments as string values parsed to SQL literal expressions, which causes the following issues: 1. SQL comments are skipped while parsing, so some fragments of input might be skipped. For example, `'Europe -- Amsterdam'`. In this case, `-- Amsterdam` is excluded from the input. 2. Special chars in string values must be escaped, for instance `'E\'Twaun Moore'` ### Does this PR introduce _any_ user-facing change? No since the parameterized SQL feature https://github.com/apache/spark/pull/38864 hasn't been released yet. ### How was this patch tested? By running the affected tests: ``` $ build/sbt "test:testOnly *ParametersSuite" $ python/run-tests --parallelism=1 --testnames 'pyspark.sql.tests.connect.test_connect_basic SparkConnectBasicTests.test_sql_with_args' $ python/run-tests --parallelism=1 --testnames 'pyspark.sql.session SparkSession.sql' ``` Authored-by: Max Gekk <max.gekk@gmail.com> (cherry picked from commit 156a12ec0abba8362658a58e00179a0b80f663f2) Closes #40666 from MaxGekk/parameterized-sql-any-3.4-2. Authored-by: Max Gekk <max.gekk@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 05 April 2023, 00:12:08 UTC |

| 444053f | Shrikant Prasad | 04 April 2023, 13:15:57 UTC | [SPARK-42655][SQL] Incorrect ambiguous column reference error **What changes were proposed in this pull request?** The result of attribute resolution should consider only unique values for the reference. If it has duplicate values, it will incorrectly result in an ambiguous reference error. **Why are the changes needed?** The query below fails incorrectly with an ambiguous reference error. val df1 = sc.parallelize(List((1,2,3,4,5),(1,2,3,4,5))).toDF("id","col2","col3","col4", "col5") val op_cols_mixed_case = List("id","col2","col3","col4", "col5", "ID") val df3 = df1.select(op_cols_mixed_case.head, op_cols_mixed_case.tail: _*) df3.select("id").show() org.apache.spark.sql.AnalysisException: Reference 'id' is ambiguous, could be: id, id. df3.explain() == Physical Plan == *(1) Project [_1#6 AS id#17, _2#7 AS col2#18, _3#8 AS col3#19, _4#9 AS col4#20, _5#10 AS col5#21, _1#6 AS ID#17] Before the fix, attributes matched were: attributes: Vector(id#17, id#17) Thus, it throws an ambiguous reference error. But if we consider only unique matches, it will return the correct result. unique attributes: Vector(id#17) **Does this PR introduce any user-facing change?** Yes. Users migrating from Spark 2.3 to 3.x will face this error, as the scenario used to work fine in Spark 2.3 but fails in Spark 3.2. After the fix, it will work correctly as it did in Spark 2.3. **How was this patch tested?** Added unit test. Closes #40258 from shrprasa/col_ambiguous_issue. Authored-by: Shrikant Prasad <shrprasa@visa.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit b283c6a0e47c3292dbf00400392b3a3f629dd965) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 04 April 2023, 13:16:19 UTC |

| 9b1f2db | Ruifeng Zheng | 04 April 2023, 07:22:16 UTC | [SPARK-43011][SQL] `array_insert` should fail with 0 index ### What changes were proposed in this pull request? Make `array_insert` fail when input index `pos` is zero. ### Why are the changes needed? see https://github.com/apache/spark/pull/40563#discussion_r1155673089 ### Does this PR introduce _any_ user-facing change? Yes ### How was this patch tested? updated UT Closes #40641 from zhengruifeng/sql_array_insert_fails_zero. Authored-by: Ruifeng Zheng <ruifengz@apache.org> Signed-off-by: Max Gekk <max.gekk@gmail.com> (cherry picked from commit 3e9574c54f149b13ca768c0930c634eb67ea14c8) Signed-off-by: Max Gekk <max.gekk@gmail.com> | 04 April 2023, 07:22:27 UTC |

| a647fef | yangjie01 | 04 April 2023, 07:21:24 UTC | [SPARK-42974][CORE][3.4] Restore `Utils.createTempDir` to use the `ShutdownHookManager` and clean up `JavaUtils.createTempDir` method ### What changes were proposed in this pull request? The main change of this pr as follows: 1. Make `Utils.createTempDir` and `JavaUtils.createTempDir` back to two independent implementations to restore `Utils.createTempDir` to use the `spark.util.ShutdownHookManager` mechanism. 2. Use `Utils.createTempDir` or `JavaUtils.createDirectory` instead for testing where `JavaUtils.createTempDir` is used. 3. Clean up `JavaUtils.createTempDir` method ### Why are the changes needed? Restore `Utils.createTempDir` to use the `spark.util.ShutdownHookManager` mechanism and clean up `JavaUtils.createTempDir` method. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass GitHub Actions Closes #40647 from LuciferYang/SPARK-42974-34. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 04 April 2023, 07:21:24 UTC |

| ce6d5eb | Takuya UESHIN | 03 April 2023, 23:03:09 UTC | [SPARK-43006][PYTHON][TESTS] Fix DataFrameTests.test_cache_dataframe ### What changes were proposed in this pull request? This is a follow-up of #40619. Fixes `DataFrameTests.test_cache_dataframe`. ### Why are the changes needed? The storage level when `df.cache()` should be `StorageLevel.MEMORY_AND_DISK_DESER` in Python. The test passed before #40619 because the difference is `deserialized`. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Fixed the test. Closes #40650 from ueshin/issues/SPARK-43006/test_cache. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 69301f7b8eb2be230dd2960d836e85bbdcfb93da) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 03 April 2023, 23:03:20 UTC |

| 47b2912 | Max Gekk | 03 April 2023, 14:09:37 UTC | [MINOR][DOCS] Add Java 8 types to value types of Scala/Java APIs ### What changes were proposed in this pull request? In the PR, I propose to update the doc page https://spark.apache.org/docs/latest/sql-ref-datatypes.html about value types in Scala and Java APIs. <img width="1190" alt="Screenshot 2023-04-03 at 15 22 24" src="https://user-images.githubusercontent.com/1580697/229509678-7f860c2b-050e-4e40-b83d-821e70d1e194.png"> ### Why are the changes needed? To provide full info about supported "external" value types in Scala/Java APIs. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? By building docs and checking them by eyes. Closes #40644 from MaxGekk/datatypes-java8-docs. Authored-by: Max Gekk <max.gekk@gmail.com> Signed-off-by: Max Gekk <max.gekk@gmail.com> (cherry picked from commit d3338c6879fd3e0b986654889f2e1e6407988dcc) Signed-off-by: Max Gekk <max.gekk@gmail.com> | 03 April 2023, 14:09:49 UTC |

| 54d1b62 | thyecust | 03 April 2023, 13:26:00 UTC | [SPARK-43006][PYSPARK] Fix typo in StorageLevel __eq__() ### What changes were proposed in this pull request? fix `self.deserialized == self.deserialized` with `self.deserialized == other.deserialized` ### Why are the changes needed? The original expression is always True, which is likely to be a typo. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? No test added. Use GitHub Actions. Closes #40619 from thyecust/patch-1. Authored-by: thyecust <thy@mail.ecust.edu.cn> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit f57c3686a4fc5cf6c15442c116155b75d338a35d) Signed-off-by: Sean Owen <srowen@gmail.com> | 03 April 2023, 13:26:06 UTC |

| 9244afb | thyecust | 03 April 2023, 13:24:17 UTC | [SPARK-43005][PYSPARK] Fix typo in pyspark/pandas/config.py By comparing compute.isin_limit and plotting.max_rows, `v is v` is likely to be a typo. ### What changes were proposed in this pull request? fix `v is v >= 0` with `v >= 0`. ### Why are the changes needed? By comparing compute.isin_limit and plotting.max_rows, `v is v` is likely to be a typo. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? By GitHub Actions. Closes #40620 from thyecust/patch-2. Authored-by: thyecust <thy@mail.ecust.edu.cn> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 5ac2b0fc024ae499119dfd5ab2ee4d038418c5fd) Signed-off-by: Sean Owen <srowen@gmail.com> | 03 April 2023, 13:24:24 UTC |

| beb8928 | Hisoka | 03 April 2023, 05:29:13 UTC | [SPARK-42519][CONNECT][TESTS] Add More WriteTo Tests In Spark Connect Client ### What changes were proposed in this pull request? Add more WriteTo tests for Spark Connect Client ### Why are the changes needed? Improve Test Case, remove same todo ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Add new tests Closes #40564 from Hisoka-X/connec_test. Authored-by: Hisoka <fanjiaeminem@qq.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 41993250aa4943ee935376e4eba7e6e48430d298) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 03 April 2023, 05:29:21 UTC |

| 807abf9 | thyecust | 03 April 2023, 03:36:04 UTC | [SPARK-43004][CORE] Fix typo in ResourceRequest.equals() vendor == vendor is always true, this is likely to be a typo. ### What changes were proposed in this pull request? fix `vendor == vendor` with `that.vendor == vendor`, and `discoveryScript == discoveryScript` with `that.discoveryScript == discoveryScript` ### Why are the changes needed? vendor == vendor is always true, this is likely to be a typo. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? By GitHub Actions. Closes #40622 from thyecust/patch-4. Authored-by: thyecust <thy@mail.ecust.edu.cn> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 52c000ece27c9ef34969a7fb252714588f395926) Signed-off-by: Sean Owen <srowen@gmail.com> | 03 April 2023, 03:36:10 UTC |

| df858d3 | Takuya UESHIN | 01 April 2023, 01:34:55 UTC | [SPARK-42998][CONNECT][PYTHON] Fix DataFrame.collect with null struct ### What changes were proposed in this pull request? Fix `DataFrame.collect` with null struct. ### Why are the changes needed? There is a behavior difference when collecting `null` struct: In Spark Connect: ```py >>> df = spark.sql("values (1, struct('a' as x)), (2, struct(null as x)), (null, null) as t(a, b)") >>> df.printSchema() root |-- a: integer (nullable = true) |-- b: struct (nullable = true) | |-- x: string (nullable = true) >>> df.show() +----+------+ | a| b| +----+------+ | 1| {a}| | 2|{null}| |null| null| +----+------+ >>> df.collect() [Row(a=1, b=Row(x='a')), Row(a=2, b=Row(x=None)), Row(a=None, b=<Row()>)] ``` whereas PySpark: ```py >>> df.collect() [Row(a=1, b=Row(x='a')), Row(a=2, b=Row(x=None)), Row(a=None, b=None)] ``` ### Does this PR introduce _any_ user-facing change? The behavior fix. ### How was this patch tested? Added/modified the related tests. Closes #40627 from ueshin/issues/SPARK-42998/null_struct. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Ruifeng Zheng <ruifengz@apache.org> (cherry picked from commit 74cddcfda3ac4779de80696cdae2ba64d53fc635) Signed-off-by: Ruifeng Zheng <ruifengz@apache.org> | 01 April 2023, 01:35:19 UTC |

| 68fa8ca | Takuya UESHIN | 30 March 2023, 23:45:44 UTC | [SPARK-42969][CONNECT][TESTS] Fix the comparison the result with Arrow optimization enabled/disabled Fixes the comparison the result with Arrow optimization enabled/disabled. in `test_arrow`, there are a bunch of comparison between DataFrames with Arrow optimization enabled/disabled. These should be fixed to compare with the expected values so that it can be reusable for Spark Connect parity tests. No. Updated the tests. Closes #40612 from ueshin/issues/SPARK-42969/test_arrow. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 35503a535771d257b517e7ddf2adfaefefd97dad) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 30 March 2023, 23:47:07 UTC |

| 98f00ea | Takuya UESHIN | 30 March 2023, 23:46:36 UTC | [SPARK-42970][CONNECT][PYTHON][TESTS][3.4] Reuse pyspark.sql.tests.test_arrow test cases ### What changes were proposed in this pull request? Reuses `pyspark.sql.tests.test_arrow` test cases. ### Why are the changes needed? `test_arrow` is also helpful because it contains many tests for `createDataFrame` with pandas or `toPandas`. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Added the tests. Closes #40595 from ueshin/issues/SPARK-42970/3.4/test_arrow. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 30 March 2023, 23:46:36 UTC |

| e20b55b | Xingbo Jiang | 30 March 2023, 22:48:04 UTC | [SPARK-42967][CORE][3.2][3.3][3.4] Fix SparkListenerTaskStart.stageAttemptId when a task is started after the stage is cancelled ### What changes were proposed in this pull request? The PR fixes a bug where SparkListenerTaskStart can have `stageAttemptId = -1` when a task is launched after the stage is cancelled. Actually, we should use the information within `Task` to update the `stageAttemptId` field. ### Why are the changes needed? -1 is not a legal stageAttemptId value, so it can lead to unexpected problems if a subscriber tries to parse the stage information from the SparkListenerTaskStart event. ### Does this PR introduce _any_ user-facing change? No, it's a bugfix. ### How was this patch tested? Manually verified. Closes #40592 from jiangxb1987/SPARK-42967. Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com> Signed-off-by: Gengliang Wang <gengliang@apache.org> (cherry picked from commit 1a6b1770c85f37982b15d261abf9cc6e4be740f4) Signed-off-by: Gengliang Wang <gengliang@apache.org> | 30 March 2023, 22:48:16 UTC |

| e586527 | Wenchen Fan | 30 March 2023, 11:43:36 UTC | Revert "[SPARK-41765][SQL] Pull out v1 write metrics to WriteFiles" This reverts commit a111a02de1a814c5f335e0bcac4cffb0515557dc. ### What changes were proposed in this pull request? SQLMetrics is not only used in the UI, but is also a programming API as users can write a listener, get the physical plan, and read the SQLMetrics values directly. We can ask users to update their code and read SQLMetrics from the new `WriteFiles` node instead. But this is troublesome and sometimes they may need to get both write metrics and commit metrics, then they need to look at two physical plan nodes. Given that https://github.com/apache/spark/pull/39428 is mostly for cleanup and does not have many benefits, reverting is a better idea. ### Why are the changes needed? avoid breaking changes. ### Does this PR introduce _any_ user-facing change? Yes, they can programmatically get the write command metrics as before. ### How was this patch tested? N/A Closes #40604 from cloud-fan/revert. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit f4af6a05c0879887e7db2377a174e7b7d7bab693) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 30 March 2023, 11:43:51 UTC |

| 6e4fcf7 | yangjie01 | 30 March 2023, 04:50:27 UTC | [SPARK-42971][CORE] Change to print `workdir` if `appDirs` is null when worker handle `WorkDirCleanup` event ### What changes were proposed in this pull request? This PR changes the worker to print `workdir` if `appDirs` is null when it handles a `WorkDirCleanup` event. ### Why are the changes needed? Printing `appDirs` causes an NPE because `appDirs` is null; from the context, what should be printed is `workdir`. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass GitHub Actions Closes #40597 from LuciferYang/SPARK-39296-FOLLOW. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit f105fe82ab01fe787d00c6ad72f1d6dedb5f3a1b) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 30 March 2023, 04:50:40 UTC |

| 6a6f504 | Xinrong Meng | 30 March 2023, 02:18:32 UTC | Preparing development version 3.4.1-SNAPSHOT | 30 March 2023, 02:18:32 UTC |

| f39ad61 | Xinrong Meng | 30 March 2023, 02:18:27 UTC | Preparing Spark release v3.4.0-rc5 | 30 March 2023, 02:18:27 UTC |

| ce36692 | Tom van Bussel | 29 March 2023, 19:28:09 UTC | [SPARK-42631][CONNECT][FOLLOW-UP] Expose Column.expr to extensions ### What changes were proposed in this pull request? This PR is a follow-up to https://github.com/apache/spark/pull/40234, which makes it possible for extensions to create custom `Dataset`s and `Column`s. It exposes `Dataset.plan`, but unfortunately it does not expose `Column.expr`. This means that extensions cannot build custom `Column`s that take a user-provided `Column` as input. ### Why are the changes needed? See above. ### Does this PR introduce _any_ user-facing change? No. This only adds a change for a Developer API. ### How was this patch tested? Existing tests to make sure nothing breaks. Closes #40590 from tomvanbussel/SPARK-42631. Authored-by: Tom van Bussel <tom.vanbussel@databricks.com> Signed-off-by: Herman van Hovell <herman@databricks.com> (cherry picked from commit c3716c4ec68c2dea07e8cd896d79bd7175517a31) Signed-off-by: Herman van Hovell <herman@databricks.com> | 29 March 2023, 19:28:21 UTC |

| 4e20467 | Dongjoon Hyun | 29 March 2023, 08:09:42 UTC | [SPARK-42957][INFRA][FOLLOWUP] Use 'cyclonedx' instead of file extensions ### What changes were proposed in this pull request? This PR is a follow-up of #40585 which aims to use `cyclonedx` instead of file extension. ### Why are the changes needed? When we use file extensions `xml` and `json`, `maven-metadata-local.xml` are missed. ``` spark-rm1ea0f8a3e397:/opt/spark-rm/output/spark/spark-repo-glCsK/org/apache/spark$ find . | grep xml ./spark-core_2.13/3.4.1-SNAPSHOT/maven-metadata-local.xml ./spark-core_2.13/3.4.1-SNAPSHOT/spark-core_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-core_2.13/maven-metadata-local.xml ``` We need to use `cyclonedx` specifically. ``` spark-rm1ea0f8a3e397:/opt/spark-rm/output/spark/spark-repo-glCsK/org/apache/spark$ find . -type f |grep -v \.jar |grep -v \.pom ./spark-catalyst_2.13/3.4.1-SNAPSHOT/spark-catalyst_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-catalyst_2.13/3.4.1-SNAPSHOT/spark-catalyst_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-core_2.13/3.4.1-SNAPSHOT/spark-core_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-core_2.13/3.4.1-SNAPSHOT/spark-core_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-graphx_2.13/3.4.1-SNAPSHOT/spark-graphx_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-graphx_2.13/3.4.1-SNAPSHOT/spark-graphx_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-kvstore_2.13/3.4.1-SNAPSHOT/spark-kvstore_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-kvstore_2.13/3.4.1-SNAPSHOT/spark-kvstore_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-launcher_2.13/3.4.1-SNAPSHOT/spark-launcher_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-launcher_2.13/3.4.1-SNAPSHOT/spark-launcher_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-mllib-local_2.13/3.4.1-SNAPSHOT/spark-mllib-local_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-mllib-local_2.13/3.4.1-SNAPSHOT/spark-mllib-local_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-network-common_2.13/3.4.1-SNAPSHOT/spark-network-common_2.13-3.4.1-SNAPSHOT-cyclonedx.json 
./spark-network-common_2.13/3.4.1-SNAPSHOT/spark-network-common_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-network-shuffle_2.13/3.4.1-SNAPSHOT/spark-network-shuffle_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-network-shuffle_2.13/3.4.1-SNAPSHOT/spark-network-shuffle_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-parent_2.13/3.4.1-SNAPSHOT/spark-parent_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-parent_2.13/3.4.1-SNAPSHOT/spark-parent_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-sketch_2.13/3.4.1-SNAPSHOT/spark-sketch_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-sketch_2.13/3.4.1-SNAPSHOT/spark-sketch_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-streaming_2.13/3.4.1-SNAPSHOT/spark-streaming_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-streaming_2.13/3.4.1-SNAPSHOT/spark-streaming_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-tags_2.13/3.4.1-SNAPSHOT/spark-tags_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-tags_2.13/3.4.1-SNAPSHOT/spark-tags_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ./spark-unsafe_2.13/3.4.1-SNAPSHOT/spark-unsafe_2.13-3.4.1-SNAPSHOT-cyclonedx.json ./spark-unsafe_2.13/3.4.1-SNAPSHOT/spark-unsafe_2.13-3.4.1-SNAPSHOT-cyclonedx.xml ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual test. Closes #40587 from dongjoon-hyun/SPARK-42957-2. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 7aa0195e46792ddfd2ec295ad439dab70f8dbe1d) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 29 March 2023, 08:09:48 UTC |
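
The difference between selecting files by extension and selecting them by the `cyclonedx` marker can be sketched in a few lines of Python. This is only an illustration: `release-build.sh` is a shell script whose actual logic is not reproduced here, the helper names `match_by_extension` and `match_by_marker` are hypothetical, and the file list is abbreviated from the `find` output quoted in the commit message.

```python
# Paths abbreviated from the `find` output quoted in the commit message.
files = [
    "./spark-core_2.13/3.4.1-SNAPSHOT/maven-metadata-local.xml",
    "./spark-core_2.13/3.4.1-SNAPSHOT/spark-core_2.13-3.4.1-SNAPSHOT-cyclonedx.xml",
    "./spark-core_2.13/3.4.1-SNAPSHOT/spark-core_2.13-3.4.1-SNAPSHOT-cyclonedx.json",
    "./spark-core_2.13/maven-metadata-local.xml",
]


def match_by_extension(paths):
    """Hypothetical helper: select anything ending in .xml or .json."""
    return [p for p in paths if p.endswith((".xml", ".json"))]


def match_by_marker(paths):
    """Hypothetical helper: select only files whose name contains 'cyclonedx'."""
    return [p for p in paths if "cyclonedx" in p]


# Extension matching also picks up the Maven metadata files;
# marker matching selects only the SBOM artifacts.
by_extension = match_by_extension(files)
by_marker = match_by_marker(files)
```

With this sample list, the extension-based selection returns all four paths, while the marker-based selection returns only the two SBOM files.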

| dc834d4 | allisonwang-db | 29 March 2023, 08:02:10 UTC | [SPARK-42895][CONNECT] Improve error messages for stopped Spark sessions ### What changes were proposed in this pull request? This PR improves error messages when users attempt to invoke session operations on a stopped Spark session. ### Why are the changes needed? To make the error messages more user-friendly. For example: ```python spark.stop() spark.sql("select 1") ``` Before this PR, this code will throw two exceptions: ``` ValueError: Cannot invoke RPC: Channel closed! During handling of the above exception, another exception occurred: Traceback (most recent call last): ... return e.code() == grpc.StatusCode.UNAVAILABLE AttributeError: 'ValueError' object has no attribute 'code' ``` After this PR, it will show this exception: ``` [NO_ACTIVE_SESSION] No active Spark session found. Please create a new Spark session before running the code. ``` ### Does this PR introduce _any_ user-facing change? Yes. This PR modifies the error messages. ### How was this patch tested? New unit test. Closes #40536 from allisonwang-db/spark-42895-stopped-session. Authored-by: allisonwang-db <allison.wang@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit e9a87825f737211c2ab0fa6d02c1c6f2a47b0024) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 29 March 2023, 08:02:18 UTC |

| 2256459 | Dongjoon Hyun | 29 March 2023, 06:30:18 UTC | [SPARK-42957][INFRA] `release-build.sh` should not remove SBOM artifacts ### What changes were proposed in this pull request? This PR aims to prevent `release-build.sh` from removing SBOM artifacts. ### Why are the changes needed? According to the snapshot publishing result, we are publishing `.json` and `.xml` files successfully. - https://repository.apache.org/content/repositories/snapshots/org/apache/spark/spark-core_2.12/3.4.1-SNAPSHOT/spark-core_2.12-3.4.1-20230324.001223-34-cyclonedx.json - https://repository.apache.org/content/repositories/snapshots/org/apache/spark/spark-core_2.12/3.4.1-SNAPSHOT/spark-core_2.12-3.4.1-20230324.001223-34-cyclonedx.xml However, `release-build.sh` removes them during release. The following is the result of Apache Spark 3.4.0 RC4. - https://repository.apache.org/content/repositories/orgapachespark-1438/org/apache/spark/spark-core_2.12/3.4.0/ ### Does this PR introduce _any_ user-facing change? Yes, the users will see the SBOM on released artifacts. ### How was this patch tested? This should be tested during release process. Closes #40585 from dongjoon-hyun/SPARK-42957. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit f5c5124c30ecf987b3114f0a991c9ee9831ce42a) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 29 March 2023, 06:30:25 UTC |

| a9cacc1 | Kent Yao | 29 March 2023, 04:36:57 UTC | [SPARK-42946][SQL] Redact sensitive data which is nested by variable substitution ### What changes were proposed in this pull request? Redact sensitive data which is nested by variable substitution #### Case 1 by SET syntax's key part ```sql spark-sql> set ${spark.ssl.keyPassword}; abc <undefined> ``` #### Case 2 by SELECT as String literal ```sql spark-sql> set spark.ssl.keyPassword; spark.ssl.keyPassword *********(redacted) Time taken: 0.009 seconds, Fetched 1 row(s) spark-sql> select '${spark.ssl.keyPassword}'; abc ``` ### Why are the changes needed? data security ### Does this PR introduce _any_ user-facing change? yes, sensitive data can not be extracted by variable substitution ### How was this patch tested? new tests Closes #40576 from yaooqinn/SPARK-42946. Authored-by: Kent Yao <yao@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit c227d789a5fedb2178858768e1fe425169f489d2) Signed-off-by: Kent Yao <yao@apache.org> | 29 March 2023, 04:37:41 UTC |

| 3124470 | yangjie01 | 28 March 2023, 14:06:50 UTC | [SPARK-42927][CORE] Change the access scope of `o.a.spark.util.Iterators#size` to `private[util]` ### What changes were proposed in this pull request? https://github.com/apache/spark/pull/37353 introduced `o.a.spark.util.Iterators#size` to speed up getting the `Iterator` size when using Scala 2.13. It is only used by `o.a.spark.util.Utils#getIteratorSize`, and will disappear when Spark only supports Scala 2.13. It should not be public, so this PR changes its access scope to `private[util]`. ### Why are the changes needed? `o.a.spark.util.Iterators#size` should not be public. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? - Pass GitHub Actions Closes #40556 from LuciferYang/SPARK-42927. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 6e4c352d5f91f8343cec748fea4723178d5ae9af) Signed-off-by: Sean Owen <srowen@gmail.com> | 28 March 2023, 14:06:58 UTC |

| a2dd949 | Bruce Robbins | 28 March 2023, 12:32:06 UTC | [SPARK-42937][SQL] `PlanSubqueries` should set `InSubqueryExec#shouldBroadcast` to true ### What changes were proposed in this pull request? Change `PlanSubqueries` to set `shouldBroadcast` to true when instantiating an `InSubqueryExec` instance. ### Why are the changes needed? The below left outer join gets an error: ``` create or replace temp view v1 as select * from values (1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1), (2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2), (3, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1) as v1(key, value1, value2, value3, value4, value5, value6, value7, value8, value9, value10); create or replace temp view v2 as select * from values (1, 2), (3, 8), (7, 9) as v2(a, b); create or replace temp view v3 as select * from values (3), (8) as v3(col1); set spark.sql.codegen.maxFields=10; -- let's make maxFields 10 instead of 100 set spark.sql.adaptive.enabled=false; select * from v1 left outer join v2 on key = a and key in (select col1 from v3); ``` The join fails during predicate codegen: ``` 23/03/27 12:24:12 WARN Predicate: Expr codegen error and falling back to interpreter mode java.lang.IllegalArgumentException: requirement failed: input[0, int, false] IN subquery#34 has not finished at scala.Predef$.require(Predef.scala:281) at org.apache.spark.sql.execution.InSubqueryExec.prepareResult(subquery.scala:144) at org.apache.spark.sql.execution.InSubqueryExec.doGenCode(subquery.scala:156) at org.apache.spark.sql.catalyst.expressions.Expression.$anonfun$genCode$3(Expression.scala:201) at scala.Option.getOrElse(Option.scala:189) at org.apache.spark.sql.catalyst.expressions.Expression.genCode(Expression.scala:196) at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext.$anonfun$generateExpressions$2(CodeGenerator.scala:1278) at scala.collection.immutable.List.map(List.scala:293) at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext.generateExpressions(CodeGenerator.scala:1278) at 
org.apache.spark.sql.catalyst.expressions.codegen.GeneratePredicate$.create(GeneratePredicate.scala:41) at org.apache.spark.sql.catalyst.expressions.codegen.GeneratePredicate$.generate(GeneratePredicate.scala:33) at org.apache.spark.sql.catalyst.expressions.Predicate$.createCodeGeneratedObject(predicates.scala:73) at org.apache.spark.sql.catalyst.expressions.Predicate$.createCodeGeneratedObject(predicates.scala:70) at org.apache.spark.sql.catalyst.expressions.CodeGeneratorWithInterpretedFallback.createObject(CodeGeneratorWithInterpretedFallback.scala:51) at org.apache.spark.sql.catalyst.expressions.Predicate$.create(predicates.scala:86) at org.apache.spark.sql.execution.joins.HashJoin.boundCondition(HashJoin.scala:146) at org.apache.spark.sql.execution.joins.HashJoin.boundCondition$(HashJoin.scala:140) at org.apache.spark.sql.execution.joins.BroadcastHashJoinExec.boundCondition$lzycompute(BroadcastHashJoinExec.scala:40) at org.apache.spark.sql.execution.joins.BroadcastHashJoinExec.boundCondition(BroadcastHashJoinExec.scala:40) ``` It fails again after fallback to interpreter mode: ``` 23/03/27 12:24:12 ERROR Executor: Exception in task 2.0 in stage 2.0 (TID 7) java.lang.IllegalArgumentException: requirement failed: input[0, int, false] IN subquery#34 has not finished at scala.Predef$.require(Predef.scala:281) at org.apache.spark.sql.execution.InSubqueryExec.prepareResult(subquery.scala:144) at org.apache.spark.sql.execution.InSubqueryExec.eval(subquery.scala:151) at org.apache.spark.sql.catalyst.expressions.InterpretedPredicate.eval(predicates.scala:52) at org.apache.spark.sql.execution.joins.HashJoin.$anonfun$boundCondition$2(HashJoin.scala:146) at org.apache.spark.sql.execution.joins.HashJoin.$anonfun$boundCondition$2$adapted(HashJoin.scala:146) at org.apache.spark.sql.execution.joins.HashJoin.$anonfun$outerJoin$1(HashJoin.scala:205) ``` Both the predicate codegen and the evaluation fail for the same reason: `PlanSubqueries` creates `InSubqueryExec` with 
`shouldBroadcast=false`. The driver waits for the subquery to finish, but it's the executor that uses the results of the subquery (for predicate codegen or evaluation). Because `shouldBroadcast` is set to false, the result is stored in a transient field (`InSubqueryExec#result`), so the result of the subquery is not serialized when the `InSubqueryExec` instance is sent to the executor. The issue occurs, as far as I can tell, only when both whole stage codegen is disabled and adaptive execution is disabled. When wholestage codegen is enabled, the predicate codegen happens on the driver, so the subquery's result is available. When adaptive execution is enabled, `PlanAdaptiveSubqueries` always sets `shouldBroadcast=true`, so the subquery's result is available on the executor, if needed. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? New unit test. Closes #40569 from bersprockets/join_subquery_issue. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 5b20f3d94095f54017be3d31d11305e597334d8b) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 28 March 2023, 12:32:14 UTC |
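
The failure mode described in this commit (a result stored in a transient field is lost when the object is shipped to another process) can be illustrated with a small Python sketch. Everything here is an analogy under stated assumptions: `InSubquerySketch` and `ship_to_executor` are hypothetical names, not Spark classes, and `copy.deepcopy` stands in for serialization because it goes through the same `__getstate__` hook that `pickle` uses.

```python
import copy


class InSubquerySketch:
    """Hypothetical stand-in for an expression that holds a subquery result."""

    def __init__(self, should_broadcast):
        self.should_broadcast = should_broadcast
        self.result = None            # treated as transient: dropped on shipping
        self.broadcast_result = None  # survives shipping

    def update_result(self, values):
        # Runs on the "driver" once the subquery has finished.
        if self.should_broadcast:
            self.broadcast_result = list(values)
        else:
            self.result = list(values)

    def __getstate__(self):
        # Mimic a transient field by blanking `result` in the shipped state.
        state = self.__dict__.copy()
        state["result"] = None
        return state

    def prepare_result(self):
        values = self.broadcast_result if self.should_broadcast else self.result
        if values is None:
            raise ValueError("subquery has not finished")
        return values


def ship_to_executor(expr):
    """Simulate sending the expression to another process."""
    return copy.deepcopy(expr)
```

In this sketch, an instance created with `should_broadcast=False` still answers `prepare_result()` on the side that computed it, but the shipped copy raises, which mirrors the `has not finished` requirement failure in the stack traces above. An instance created with `should_broadcast=True` keeps its result after shipping.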

| 0620b56 | allisonwang-db | 28 March 2023, 08:43:02 UTC | [SPARK-42928][SQL] Make resolvePersistentFunction synchronized ### What changes were proposed in this pull request? This PR makes the function `resolvePersistentFunctionInternal` synchronized. ### Why are the changes needed? To make function resolution thread-safe. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing UTs. Closes #40557 from allisonwang-db/SPARK-42928-sync-func. Authored-by: allisonwang-db <allison.wang@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit f62ffde045771b3275acf3dfb24573804e7daf93) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 28 March 2023, 08:43:19 UTC |

| 0c4ad50 | Xinrong Meng | 28 March 2023, 05:35:38 UTC | [SPARK-42908][PYTHON] Raise RuntimeError when SparkContext is required but not initialized ### What changes were proposed in this pull request? Raise RuntimeError when SparkContext is required but not initialized. ### Why are the changes needed? Error improvement. ### Does this PR introduce _any_ user-facing change? Error type and message change. Raise a RuntimeError with a clear message (rather than an AssertionError) when SparkContext is required but not initialized yet. ### How was this patch tested? Unit test. Closes #40534 from xinrong-meng/err_msg. Authored-by: Xinrong Meng <xinrong@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 70f6206dbcd3c5ff0f4618cf179b7fcf75ae672c) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 28 March 2023, 05:35:45 UTC |
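
A minimal Python sketch of the pattern this commit describes, raising a `RuntimeError` with a clear message instead of relying on an assertion. The names `ContextHolder` and `require_context` and the message text are hypothetical and are not PySpark's actual code.

```python
class ContextHolder:
    """Hypothetical holder for the currently active context object."""
    active = None


def require_context():
    """Return the active context, or fail with an explicit RuntimeError."""
    if ContextHolder.active is None:
        # An explicit raise keeps working under `python -O`, where `assert`
        # statements are stripped, and it gives callers a specific error type.
        raise RuntimeError(
            "A context is required here but has not been initialized yet."
        )
    return ContextHolder.active
```

Callers can then catch `RuntimeError` specifically rather than handling an `AssertionError` that carries no guidance.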

| 2cd341f | Mridul Muralidharan | 28 March 2023, 03:48:05 UTC | [SPARK-42922][SQL] Move from Random to SecureRandom ### What changes were proposed in this pull request? Most uses of `Random` in spark are either in testcases or where we need a pseudo random number which is repeatable. Use `SecureRandom`, instead of `Random` for the cases where it impacts security. ### Why are the changes needed? Use of `SecureRandom` in more security sensitive contexts. This was flagged in our internal scans as well. ### Does this PR introduce _any_ user-facing change? Directly no. Would improve security posture of Apache Spark. ### How was this patch tested? Existing unit tests Closes #40568 from mridulm/SPARK-42922. Authored-by: Mridul Muralidharan <mridulatgmail.com> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 744434358cb0c687b37d37dd62f2e7d837e52b2d) Signed-off-by: Sean Owen <srowen@gmail.com> | 28 March 2023, 03:48:13 UTC |

| 61293fa | Takuya UESHIN | 28 March 2023, 03:35:54 UTC | [SPARK-41876][CONNECT][PYTHON] Implement DataFrame.toLocalIterator ### What changes were proposed in this pull request? Implements `DataFrame.toLocalIterator`. The argument `prefetchPartitions` won't take effect for Spark Connect. ### Why are the changes needed? Missing API. ### Does this PR introduce _any_ user-facing change? `DataFrame.toLocalIterator` will be available. ### How was this patch tested? Enabled the related tests. Closes #40570 from ueshin/issues/SPARK-41876/toLocalIterator. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 31965a06c9f85abf2296971237b1f88065eb67c2) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 28 March 2023, 03:36:04 UTC |

| 46866aa | Xinyi Yu | 28 March 2023, 03:08:30 UTC | [SPARK-42936][SQL] Fix LCA bug when the having clause can be resolved directly by its child Aggregate ### What changes were proposed in this pull request? The PR fixes the following bug in LCA + having resolution: ```sql select sum(value1) as total_1, total_1 from values(1, 'name', 100, 50) AS data(id, name, value1, value2) having total_1 > 0 SparkException: [INTERNAL_ERROR] Found the unresolved operator: 'UnresolvedHaving (total_1#353L > cast(0 as bigint)) ``` To trigger the issue, the having condition needs to be (that is, must be resolvable by) an attribute in the select. Without the LCA `total_1`, the query works fine. #### Root cause of the issue `UnresolvedHaving` with `Aggregate` as child can use both the `Aggregate`'s output and the `Aggregate`'s child's output to resolve the having condition. If using the latter, the `ResolveReferences` rule will replace the unresolved attribute with a `TempResolvedColumn`. For an `UnresolvedHaving` that actually can be resolved directly by its child `Aggregate`, there will be no `TempResolvedColumn` after the rule `ResolveReferences` applies. This `UnresolvedHaving` still needs to be transformed to `Filter` by the rule `ResolveAggregateFunctions`. This rule recognizes the shape: `UnresolvedHaving - Aggregate`. However, the current condition (the plan should not contain `TempResolvedColumn`) that prevents the LCA rule from applying between `ResolveReferences` and `ResolveAggregateFunctions` does not cover the above case. The LCA rule can insert a `Project` in the middle and break the shape that `ResolveAggregateFunctions` matches. #### Fix The PR adds another condition for the LCA rule to apply: the plan should not contain any `UnresolvedHaving`. ### Why are the changes needed? See above reasoning to fix the bug. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing and added tests. Closes #40558 from anchovYu/lca-having-bug-fix. 
Authored-by: Xinyi Yu <xinyi.yu@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 2319a31fbf391c87bf8a1eef8707f46bef006c0f) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 28 March 2023, 03:08:49 UTC |

| 604fee6 | Cheng Pan | 27 March 2023, 22:31:16 UTC | [SPARK-42906][K8S] Replace a starting digit with `x` in resource name prefix ### What changes were proposed in this pull request? Change the generated resource name prefix to meet K8s requirements > DNS-1035 label must consist of lower case alphanumeric characters or '-', start with an alphabetic character, and end with an alphanumeric character (e.g. 'my-name', or 'abc-123', regex used for validation is '[a-z]([-a-z0-9]*[a-z0-9])?') ### Why are the changes needed? In current implementation, the following app name causes error ``` bin/spark-submit \ --master k8s://https://*.*.*.*:6443 \ --deploy-mode cluster \ --name 你好_187609 \ ... ``` ``` Exception in thread "main" io.fabric8.kubernetes.client.KubernetesClientException: Failure executing: POST at: https://*.*.*.*:6443/api/v1/namespaces/spark/services. Message: Service "187609-f19020870d12c349-driver-svc" is invalid: metadata.name: Invalid value: "187609-f19020870d12c349-driver-svc": a DNS-1035 label must consist of lower case alphanumeric characters or '-', start with an alphabetic character, and end with an alphanumeric character (e.g. 'my-name', or 'abc-123', regex used for validation is '[a-z]([-a-z0-9]*[a-z0-9])?'). ``` ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? New UT. Closes #40533 from pan3793/SPARK-42906. Authored-by: Cheng Pan <chengpan@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 0b9a3017005ccab025b93d7b545412b226d4e63c) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 March 2023, 22:31:31 UTC |
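
The DNS-1035 rule quoted in this commit can be checked with the validation regex given in the error message. The Python sketch below is a hypothetical illustration, not Spark's implementation: `sanitize_prefix` and its cleaning steps (lower-casing, collapsing disallowed characters into `-`) are assumptions; only the regex and the "replace a starting digit with `x`" step come from the commit message.

```python
import re

# Validation regex quoted in the Kubernetes error message above.
DNS_1035 = re.compile(r"[a-z]([-a-z0-9]*[a-z0-9])?")


def sanitize_prefix(name: str) -> str:
    """Hypothetical helper that makes a name prefix start with a letter."""
    # Assumed cleaning step: lower-case the name and collapse every run of
    # characters outside [a-z0-9] into a single '-', then trim dashes.
    cleaned = re.sub(r"[^a-z0-9]+", "-", name.lower()).strip("-")
    # Step named in the commit title: replace a starting digit with 'x'.
    if cleaned and cleaned[0].isdigit():
        cleaned = "x" + cleaned[1:]
    # Note: an empty result is still not a valid label; this sketch does
    # not handle that case.
    return cleaned


def is_dns_1035_label(label: str) -> bool:
    """Check a label against the quoted validation regex."""
    return DNS_1035.fullmatch(label) is not None
```

For the app name from the commit message, `sanitize_prefix("你好_187609")` returns `"x87609"`, which passes `is_dns_1035_label`, whereas the rejected service name `187609-f19020870d12c349-driver-svc` does not.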

| d7f2a6b | yangjie01 | 27 March 2023, 16:42:40 UTC | [SPARK-42934][BUILD] Add `spark.hadoop.hadoop.security.key.provider.path` to `scalatest-maven-plugin` ### What changes were proposed in this pull request? When testing `OrcEncryptionSuite` using Maven, all test suites are always skipped. So this PR adds `spark.hadoop.hadoop.security.key.provider.path` to the `systemProperties` of `scalatest-maven-plugin` so that `OrcEncryptionSuite` can be tested with Maven. ### Why are the changes needed? To make `OrcEncryptionSuite` testable with Maven. ### Does this PR introduce _any_ user-facing change? No, just for Maven tests ### How was this patch tested? - Pass GitHub Actions - Manual testing: run ``` build/mvn clean install -pl sql/core -DskipTests -am build/mvn test -pl sql/core -Dtest=none -DwildcardSuites=org.apache.spark.sql.execution.datasources.orc.OrcEncryptionSuite ``` **Before** ``` Discovery starting. Discovery completed in 3 seconds, 218 milliseconds. Run starting. Expected test count is: 4 OrcEncryptionSuite: 21:57:58.344 WARN org.apache.hadoop.util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable - Write and read an encrypted file !!! CANCELED !!! [] was empty org.apache.orc.impl.NullKeyProvider5af5d76f doesn't has the test keys. ORC shim is created with old Hadoop libraries (OrcEncryptionSuite.scala:37) - Write and read an encrypted table !!! CANCELED !!! [] was empty org.apache.orc.impl.NullKeyProvider5ad6cc21 doesn't has the test keys. ORC shim is created with old Hadoop libraries (OrcEncryptionSuite.scala:65) - SPARK-35325: Write and read encrypted nested columns !!! CANCELED !!! [] was empty org.apache.orc.impl.NullKeyProvider691124ee doesn't has the test keys. ORC shim is created with old Hadoop libraries (OrcEncryptionSuite.scala:116) - SPARK-35992: Write and read fully-encrypted columns with default masking !!! CANCELED !!! 
[] was empty org.apache.orc.impl.NullKeyProvider5403799b doesn't has the test keys. ORC shim is created with old Hadoop libraries (OrcEncryptionSuite.scala:166) 21:58:00.035 WARN org.apache.spark.sql.execution.datasources.orc.OrcEncryptionSuite: ===== POSSIBLE THREAD LEAK IN SUITE o.a.s.sql.execution.datasources.orc.OrcEncryptionSuite, threads: rpc-boss-3-1 (daemon=true), shuffle-boss-6-1 (daemon=true) ===== Run completed in 5 seconds, 41 milliseconds. Total number of tests run: 0 Suites: completed 2, aborted 0 Tests: succeeded 0, failed 0, canceled 4, ignored 0, pending 0 No tests were executed. ``` **After** ``` Discovery starting. Discovery completed in 3 seconds, 185 milliseconds. Run starting. Expected test count is: 4 OrcEncryptionSuite: 21:58:46.540 WARN org.apache.hadoop.util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable - Write and read an encrypted file - Write and read an encrypted table - SPARK-35325: Write and read encrypted nested columns - SPARK-35992: Write and read fully-encrypted columns with default masking 21:58:51.933 WARN org.apache.spark.sql.execution.datasources.orc.OrcEncryptionSuite: ===== POSSIBLE THREAD LEAK IN SUITE o.a.s.sql.execution.datasources.orc.OrcEncryptionSuite, threads: rpc-boss-3-1 (daemon=true), shuffle-boss-6-1 (daemon=true) ===== Run completed in 8 seconds, 708 milliseconds. Total number of tests run: 4 Suites: completed 2, aborted 0 Tests: succeeded 4, failed 0, canceled 0, ignored 0, pending 0 All tests passed. ``` Closes #40566 from LuciferYang/SPARK-42934-2. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit a3d9e0ae0f95a55766078da5d0bf0f74f3c3cfc3) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 March 2023, 16:42:48 UTC |

| c701859 | yangjie01 | 27 March 2023, 16:27:01 UTC | [SPARK-42930][CORE][SQL] Change the access scope of `ProtobufSerDe` related implementations to `private[protobuf]` ### What changes were proposed in this pull request? After [SPARK-41053](https://issues.apache.org/jira/browse/SPARK-41053), Spark supports serializing Live UI data to RocksDB using protobuf, but these are internal implementation details, so this PR changes the access scope of `ProtobufSerDe` related implementations to `private[protobuf]`. ### Why are the changes needed? To narrow the access scope of Spark internal implementation details. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass GitHub Actions Closes #40560 from LuciferYang/SPARK-42930. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit b8f16bc6c3400dce13795c6dfa176dd793341df0) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 March 2023, 16:27:09 UTC |

| dde9de6 | Max Gekk | 27 March 2023, 05:54:18 UTC | [SPARK-42924][SQL][CONNECT][PYTHON] Clarify the comment of parameterized SQL args ### What changes were proposed in this pull request? In the PR, I propose to clarify the comment of `args` in parameterized `sql()`. ### Why are the changes needed? To make the comment clearer and to highlight that input strings are parsed (not evaluated) and treated as SQL literal expressions. Also, fragments with SQL comments in the string values are skipped while parsing. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? By checking coding style: ``` $ ./dev/lint-python $ ./dev/scalastyle ``` Closes #40508 from MaxGekk/parameterized-sql-doc. Authored-by: Max Gekk <max.gekk@gmail.com> Signed-off-by: Max Gekk <max.gekk@gmail.com> (cherry picked from commit c55c7ea6fc92c3733543d5f3d99eb00921cbe564) Signed-off-by: Max Gekk <max.gekk@gmail.com> | 27 March 2023, 05:54:30 UTC |

| aba1c3b | Takuya UESHIN | 27 March 2023, 03:19:12 UTC | [SPARK-42899][SQL][FOLLOWUP] Project.reconcileColumnType should use KnownNotNull instead of AssertNotNull ### What changes were proposed in this pull request? This is a follow-up of #40526. `Project.reconcileColumnType` should use `KnownNotNull` instead of `AssertNotNull`, also only when `col.nullable`. ### Why are the changes needed? There is a better expression, `KnownNotNull`, for this kind of issue. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing tests. Closes #40546 from ueshin/issues/SPARK-42899/KnownNotNull. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 62b9763a6fd9437647021bbb4433034566ba0a42) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 27 March 2023, 03:19:45 UTC |

| 31ede73 | Takuya UESHIN | 27 March 2023, 00:35:33 UTC | [SPARK-42920][CONNECT][PYTHON] Enable tests for UDF with UDT ### What changes were proposed in this pull request? Enables tests for UDF with UDT. ### Why are the changes needed? Now that UDF with UDT should work, the related tests should be enabled to see if it works. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Enabled/modified the related tests. Closes #40549 from ueshin/issues/SPARK-42920/udf_with_udt. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 80f8664e8278335788d8fa1dd00654f3eaec8ed6) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 27 March 2023, 00:35:41 UTC |

| 1b95b4d | Takuya UESHIN | 27 March 2023, 00:26:47 UTC | [SPARK-42911][PYTHON][3.4] Introduce more basic exceptions ### What changes were proposed in this pull request? Introduces more basic exceptions. - ArithmeticException - ArrayIndexOutOfBoundsException - DateTimeException - NumberFormatException - SparkRuntimeException ### Why are the changes needed? There are more exceptions that Spark throws but PySpark doesn't capture. We should introduce more basic exceptions; otherwise we still see `Py4JJavaError` or `SparkConnectGrpcException`. ```py >>> spark.conf.set("spark.sql.ansi.enabled", True) >>> spark.sql("select 1/0") DataFrame[(1 / 0): double] >>> spark.sql("select 1/0").show() Traceback (most recent call last): ... py4j.protocol.Py4JJavaError: An error occurred while calling o44.showString. : org.apache.spark.SparkArithmeticException: [DIVIDE_BY_ZERO] Division by zero. Use `try_divide` to tolerate divisor being 0 and return NULL instead. If necessary set "spark.sql.ansi.enabled" to "false" to bypass this error. == SQL(line 1, position 8) == select 1/0 ^^^ at org.apache.spark.sql.errors.QueryExecutionErrors$.divideByZeroError(QueryExecutionErrors.scala:225) ... JVM's stacktrace ``` ```py >>> spark.sql("select 1/0").show() Traceback (most recent call last): ... pyspark.errors.exceptions.connect.SparkConnectGrpcException: (org.apache.spark.SparkArithmeticException) [DIVIDE_BY_ZERO] Division by zero. Use `try_divide` to tolerate divisor being 0 and return NULL instead. If necessary set "spark.sql.ansi.enabled" to "false" to bypass this error. == SQL(line 1, position 8) == select 1/0 ^^^ ``` ### Does this PR introduce _any_ user-facing change? The error message is more readable. ```py >>> spark.sql("select 1/0").show() Traceback (most recent call last): ... pyspark.errors.exceptions.captured.ArithmeticException: [DIVIDE_BY_ZERO] Division by zero. Use `try_divide` to tolerate divisor being 0 and return NULL instead. If necessary set "spark.sql.ansi.enabled" to "false" to bypass this error. == SQL(line 1, position 8) == select 1/0 ^^^ ``` or ```py >>> spark.sql("select 1/0").show() Traceback (most recent call last): ... pyspark.errors.exceptions.connect.ArithmeticException: [DIVIDE_BY_ZERO] Division by zero. Use `try_divide` to tolerate divisor being 0 and return NULL instead. If necessary set "spark.sql.ansi.enabled" to "false" to bypass this error. == SQL(line 1, position 8) == select 1/0 ^^^ ``` ### How was this patch tested? Added the related tests. Closes #40547 from ueshin/issues/SPARK-42911/3.4/exceptions. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 27 March 2023, 00:26:47 UTC |

| 594c8fe | Herman van Hovell | 24 March 2023, 17:15:50 UTC | [SPARK-42884][CONNECT] Add Ammonite REPL integration ### What changes were proposed in this pull request? This PR adds Ammonite REPL integration for Spark Connect. This has a couple of benefits: - It makes it a lot less cumbersome for users to start a Spark Connect REPL. You don't have to add custom scripts, and you can use `coursier` to launch a fully functional REPL for you. - It adds REPL integration to the actual build. This makes it easier to validate that the code we add is actually working. ### Why are the changes needed? A REPL is arguably the first entry point for a lot of users. ### Does this PR introduce _any_ user-facing change? Yes, it adds REPL integration. ### How was this patch tested? Added tests for the command line parsing. Manually tested the REPL. Closes #40515 from hvanhovell/SPARK-42884. Authored-by: Herman van Hovell <herman@databricks.com> Signed-off-by: Herman van Hovell <herman@databricks.com> (cherry picked from commit acf3065cc9e91e73db335a050289153582c45ce5) Signed-off-by: Herman van Hovell <herman@databricks.com> | 24 March 2023, 17:16:01 UTC |

| f5f53e4 | Kent Yao | 24 March 2023, 14:18:11 UTC | [SPARK-42917][SQL] Correct getUpdateColumnNullabilityQuery for DerbyDialect ### What changes were proposed in this pull request? Fix nullability clause for derby dialect, according to the official derby lang ref guide. ### Why are the changes needed? To fix bugs like: ``` spark-sql ()> create table src2(ID INTEGER NOT NULL, deptno INTEGER NOT NULL); spark-sql ()> alter table src2 ALTER COLUMN ID drop not null; java.sql.SQLSyntaxErrorException: Syntax error: Encountered "NULL" at line 1, column 42. ``` ### Does this PR introduce _any_ user-facing change? yes, but a necessary bugfix ### How was this patch tested? Test manually. Closes #40544 from yaooqinn/SPARK-42917. Authored-by: Kent Yao <yao@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit bcadbb69be6ac9759cb79fc4ee7bd93a37a63277) Signed-off-by: Kent Yao <yao@apache.org> | 24 March 2023, 14:18:40 UTC |

| 3122d4f | Xinrong Meng | 24 March 2023, 08:39:21 UTC | [SPARK-42891][CONNECT][PYTHON][3.4] Implement CoGrouped Map API ### What changes were proposed in this pull request? Implement CoGrouped Map API: `applyInPandas`. The PR is a cherry-pick of https://github.com/apache/spark/commit/1fbc7948e57cbf05a46cb0c7fb2fad4ec25540e6, with minor changes on test class names to adapt to branch-3.4. ### Why are the changes needed? Parity with vanilla PySpark. ### Does this PR introduce _any_ user-facing change? Yes. CoGrouped Map API is supported as shown below. ```sh >>> import pandas as pd >>> df1 = spark.createDataFrame( ... [(20000101, 1, 1.0), (20000101, 2, 2.0), (20000102, 1, 3.0), (20000102, 2, 4.0)], ("time", "id", "v1")) >>> >>> df2 = spark.createDataFrame( ... [(20000101, 1, "x"), (20000101, 2, "y")], ("time", "id", "v2")) >>> >>> def asof_join(l, r): ... return pd.merge_asof(l, r, on="time", by="id") ... >>> df1.groupby("id").cogroup(df2.groupby("id")).applyInPandas( ... asof_join, schema="time int, id int, v1 double, v2 string" ... ).show() +--------+---+---+---+ | time| id| v1| v2| +--------+---+---+---+ |20000101| 1|1.0| x| |20000102| 1|3.0| x| |20000101| 2|2.0| y| |20000102| 2|4.0| y| +--------+---+---+---+ ``` ### How was this patch tested? Parity unit tests. Closes #40539 from xinrong-meng/cogroup_map3.4. Authored-by: Xinrong Meng <xinrong@apache.org> Signed-off-by: Xinrong Meng <xinrong@apache.org> | 24 March 2023, 08:39:21 UTC |

| b74f792 | Wenchen Fan | 24 March 2023, 06:45:43 UTC | [SPARK-42861][SQL] Use private[sql] instead of protected[sql] to avoid generating API doc ### What changes were proposed in this pull request? This is the only issue I found during SQL module API auditing via https://github.com/apache/spark-website/pull/443/commits/615986022c573aedaff8d2b917a0d2d9dc2b67ef . Somehow `protected[sql]` also generates API doc which is unexpected. `private[sql]` solves the problem and I generated doc locally to verify it. Another API issue has been fixed by https://github.com/apache/spark/pull/40499 ### Why are the changes needed? fix api doc ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? N/A Closes #40541 from cloud-fan/auditing. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit f7421b498a15ea687eaf811a1b2c77091945ef90) Signed-off-by: Kent Yao <yao@apache.org> | 24 March 2023, 06:45:58 UTC |

| d44c7c0 | Kent Yao | 24 March 2023, 06:42:40 UTC | [SPARK-42904][SQL] Char/Varchar Support for JDBC Catalog ### What changes were proposed in this pull request? Add type mapping for Spark char/varchar to JDBC types. ### Why are the changes needed? The JDBC 1.0 standard and modern databases define char/varchar normatively. This is currently a bug of sorts: DDLs on JDBCCatalogs encounter errors like ``` Cause: org.apache.spark.SparkIllegalArgumentException: Can't get JDBC type for varchar(10). [info] at org.apache.spark.sql.errors.QueryExecutionErrors$.cannotGetJdbcTypeError(QueryExecutionErrors.scala:1005) ``` ### Does this PR introduce _any_ user-facing change? Yes, char/varchar are allowed for JDBC catalogs. ### How was this patch tested? New UT. Closes #40531 from yaooqinn/SPARK-42904. Authored-by: Kent Yao <yao@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit 18cd8012085510f9febc65e6e35ab79822076089) Signed-off-by: Kent Yao <yao@apache.org> | 24 March 2023, 06:43:07 UTC |

| f20a269 | Juliusz Sompolski | 24 March 2023, 01:56:26 UTC | [SPARK-42202][CONNECT][TEST][FOLLOWUP] Loop around command entry in SimpleSparkConnectService ### What changes were proposed in this pull request? With the while loop around service startup, any ENTER hit in the SimpleSparkConnectService console made it loop around, try to start the service anew, and fail with "address already in use". Change the loop to be around the `StdIn.readLine()` entry. ### Why are the changes needed? Better testing / development / debugging with Spark Connect. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual use of `connector/connect/bin/spark-connect` Closes #40537 from juliuszsompolski/SPARK-42202-followup. Authored-by: Juliusz Sompolski <julek@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 0fde146e8676ab9a4aeafebb1684eb7a44660524) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 24 March 2023, 01:56:37 UTC |

| 88eaaea | Xinrong Meng | 23 March 2023, 12:29:12 UTC | [SPARK-42903][PYTHON][DOCS] Avoid documenting None as as a return value in docstring ### What changes were proposed in this pull request? Avoid documenting None as a return value in docstrings. ### Why are the changes needed? In Python, it's idiomatic not to document the return value when a function returns None. ### Does this PR introduce _any_ user-facing change? No. Closes #40532 from xinrong-meng/py_audit. Authored-by: Xinrong Meng <xinrong@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit ece156f64fdbe0367b1594b1f8ee657234551a03) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 23 March 2023, 12:29:20 UTC |

| 4d06299 | Takuya UESHIN | 23 March 2023, 08:45:30 UTC | [SPARK-42900][CONNECT][PYTHON] Fix createDataFrame to respect inference and column names ### What changes were proposed in this pull request? Fixes `createDataFrame` to respect inference and column names. ### Why are the changes needed? Currently when a column name list is provided as a schema, the type inference result is not taken care of. As a result, `createDataFrame` from UDT objects with column name list doesn't take the UDT type. For example: ```py >>> from pyspark.ml.linalg import Vectors >>> df = spark.createDataFrame([(1.0, 1.0, Vectors.dense(0.0, 5.0)), (0.0, 2.0, Vectors.dense(1.0, 2.0))], ["label", "weight", "features"]) >>> df.printSchema() root |-- label: double (nullable = true) |-- weight: double (nullable = true) |-- features: struct (nullable = true) | |-- type: byte (nullable = false) | |-- size: integer (nullable = true) | |-- indices: array (nullable = true) | | |-- element: integer (containsNull = false) | |-- values: array (nullable = true) | | |-- element: double (containsNull = false) ``` , which should be: ```py >>> df.printSchema() root |-- label: double (nullable = true) |-- weight: double (nullable = true) |-- features: vector (nullable = true) ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Added the related tests. Closes #40527 from ueshin/issues/SPARK-42900/cols. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Ruifeng Zheng <ruifengz@apache.org> (cherry picked from commit 39e87d66b07beff91aebed6163ee82a35fbd1fcf) Signed-off-by: Ruifeng Zheng <ruifengz@apache.org> | 23 March 2023, 08:45:56 UTC |

| 827eeb4 | Hyukjin Kwon | 23 March 2023, 06:29:29 UTC | [SPARK-42903][PYTHON][DOCS] Avoid documenting None as as a return value in docstring ### What changes were proposed in this pull request? This PR proposes to remove None as a return value in docstrings. ### Why are the changes needed? To be consistent with the current documentation. Also, it's idiomatic not to document the return value for `return None`. ### Does this PR introduce _any_ user-facing change? Yes, it changes the user-facing documentation. ### How was this patch tested? Doc build in the CI should verify them. Closes #40530 from HyukjinKwon/SPARK-42903. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 07301b4ab96365cfee1c6b7725026ef3f68e7ca1) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 23 March 2023, 06:29:36 UTC |

| a25c0ea | Rui Wang | 23 March 2023, 06:27:52 UTC | [SPARK-42878][CONNECT] The table API in DataFrameReader could also accept options It turns out that `spark.read.option.table` is a valid call chain and the `table` API does accept options when opening a table. The existing Spark Connect implementation does not consider it. Feature parity. NO UT Closes #40498 from amaliujia/name_table_support_options. Authored-by: Rui Wang <rui.wang@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 0e29c8d5eda77ef085269f86b08c0a27420ac1f2) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 23 March 2023, 06:29:05 UTC |