https://github.com/apache/spark

- HEAD

- refs/heads/branch-0.5

- refs/heads/branch-0.6

- refs/heads/branch-0.7

- refs/heads/branch-0.8

- refs/heads/branch-0.9

- refs/heads/branch-1.0

- refs/heads/branch-1.0-jdbc

- refs/heads/branch-1.1

- refs/heads/branch-1.2

- refs/heads/branch-1.3

- refs/heads/branch-1.4

- refs/heads/branch-1.5

- refs/heads/branch-1.6

- refs/heads/branch-2.0

- refs/heads/branch-2.1

- refs/heads/branch-2.2

- refs/heads/branch-2.3

- refs/heads/branch-2.4

- refs/heads/branch-3.0

- refs/heads/branch-3.1

- refs/heads/branch-3.2

- refs/heads/branch-3.3

- refs/heads/branch-3.4

- refs/heads/branch-3.5

- refs/heads/master

- refs/remotes/origin/branch-0.8

- refs/remotes/origin/td-rdd-save

- refs/tags/0.3-scala-2.8

- refs/tags/0.3-scala-2.9

- refs/tags/2.0.0-preview

- refs/tags/alpha-0.1

- refs/tags/alpha-0.2

- refs/tags/v0.5.0

- refs/tags/v0.5.1

- refs/tags/v0.5.2

- refs/tags/v0.6.0

- refs/tags/v0.6.0-yarn

- refs/tags/v0.6.1

- refs/tags/v0.6.2

- refs/tags/v0.7.0

- refs/tags/v0.7.0-bizo-1

- refs/tags/v0.7.1

- refs/tags/v0.7.2

- refs/tags/v0.9.1

- refs/tags/v0.9.2

- refs/tags/v1.0.0

- refs/tags/v1.0.1

- refs/tags/v1.0.2

- refs/tags/v1.1.0

- refs/tags/v1.1.1

- refs/tags/v1.2.0

- refs/tags/v1.2.1

- refs/tags/v1.2.2

- refs/tags/v1.3.0

- refs/tags/v1.3.1

- refs/tags/v1.4.0

- refs/tags/v1.4.1

- refs/tags/v1.5.0-rc1

- refs/tags/v1.5.0-rc2

- refs/tags/v1.5.0-rc3

- refs/tags/v1.5.1

- refs/tags/v1.6.0

- refs/tags/v1.6.1

- refs/tags/v1.6.2

- refs/tags/v1.6.3

- refs/tags/v2.0.0

- refs/tags/v2.0.1

- refs/tags/v2.0.2

- refs/tags/v2.1.0

- refs/tags/v2.1.1

- refs/tags/v2.1.2

- refs/tags/v2.1.2-rc1

- refs/tags/v2.1.2-rc2

- refs/tags/v2.1.2-rc3

- refs/tags/v2.1.2-rc4

- refs/tags/v2.1.3

- refs/tags/v2.1.3-rc1

- refs/tags/v2.1.3-rc2

- refs/tags/v2.2.0

- refs/tags/v2.2.1

- refs/tags/v2.2.1-rc1

- refs/tags/v2.2.1-rc2

- refs/tags/v2.2.2

- refs/tags/v2.2.2-rc1

- refs/tags/v2.2.2-rc2

- refs/tags/v2.2.3

- refs/tags/v2.2.3-rc1

- refs/tags/v2.3.0

- refs/tags/v2.3.0-rc1

- refs/tags/v2.3.0-rc2

- refs/tags/v2.3.0-rc3

- refs/tags/v2.3.0-rc4

- refs/tags/v2.3.1

- refs/tags/v2.3.1-rc1

- refs/tags/v2.3.1-rc2

- refs/tags/v2.3.1-rc3

- refs/tags/v2.3.1-rc4

- refs/tags/v2.3.2

- refs/tags/v2.3.2-rc1

- refs/tags/v2.3.2-rc2

- refs/tags/v2.3.2-rc3

- refs/tags/v2.3.2-rc4

- refs/tags/v2.3.2-rc5

- refs/tags/v2.3.2-rc6

- refs/tags/v2.3.3

- refs/tags/v2.3.3-rc1

- refs/tags/v2.3.3-rc2

- refs/tags/v2.3.4

- refs/tags/v2.3.4-rc1

- refs/tags/v2.4.0

- refs/tags/v2.4.0-rc1

- refs/tags/v2.4.0-rc2

- refs/tags/v2.4.0-rc3

- refs/tags/v2.4.0-rc4

- refs/tags/v2.4.0-rc5

- refs/tags/v2.4.1

- refs/tags/v2.4.1-rc1

- refs/tags/v2.4.1-rc2

- refs/tags/v2.4.1-rc3

- refs/tags/v2.4.1-rc4

- refs/tags/v2.4.1-rc5

- refs/tags/v2.4.1-rc6

- refs/tags/v2.4.1-rc7

- refs/tags/v2.4.1-rc8

- refs/tags/v2.4.1-rc9

- refs/tags/v2.4.2

- refs/tags/v2.4.2-rc1

- refs/tags/v2.4.3

- refs/tags/v2.4.3-rc1

- refs/tags/v2.4.4

- refs/tags/v2.4.4-rc1

- refs/tags/v2.4.4-rc2

- refs/tags/v2.4.4-rc3

- refs/tags/v2.4.5

- refs/tags/v2.4.5-rc1

- refs/tags/v2.4.5-rc2

- refs/tags/v2.4.6

- refs/tags/v2.4.6-rc1

- refs/tags/v2.4.6-rc2

- refs/tags/v2.4.6-rc3

- refs/tags/v2.4.6-rc4

- refs/tags/v2.4.6-rc5

- refs/tags/v2.4.6-rc6

- refs/tags/v2.4.6-rc7

- refs/tags/v2.4.6-rc8

- refs/tags/v2.4.7

- refs/tags/v2.4.7-rc1

- refs/tags/v2.4.7-rc2

- refs/tags/v2.4.7-rc3

- refs/tags/v2.4.8

- refs/tags/v2.4.8-rc1

- refs/tags/v2.4.8-rc2

- refs/tags/v2.4.8-rc3

- refs/tags/v2.4.8-rc4

- refs/tags/v3.0.0

- refs/tags/v3.0.0-preview2

- refs/tags/v3.0.0-preview2-rc1

- refs/tags/v3.0.0-preview2-rc2

- refs/tags/v3.0.0-rc1

- refs/tags/v3.0.0-rc2

- refs/tags/v3.0.0-rc3

- refs/tags/v3.0.1

- refs/tags/v3.0.1-rc1

- refs/tags/v3.0.1-rc2

- refs/tags/v3.0.1-rc3

- refs/tags/v3.0.2

- refs/tags/v3.0.2-rc1

- refs/tags/v3.0.3

- refs/tags/v3.0.3-rc1

- refs/tags/v3.1.0-rc1

- refs/tags/v3.1.1

- refs/tags/v3.1.1-rc1

- refs/tags/v3.1.1-rc2

- refs/tags/v3.1.1-rc3

- refs/tags/v3.1.2

- refs/tags/v3.1.2-rc1

- refs/tags/v3.1.3

- refs/tags/v3.1.3-rc1

- refs/tags/v3.1.3-rc2

- refs/tags/v3.1.3-rc3

- refs/tags/v3.1.3-rc4

- refs/tags/v3.2.0

- refs/tags/v3.2.0-rc1

- refs/tags/v3.2.0-rc2

- refs/tags/v3.2.0-rc3

- refs/tags/v3.2.0-rc4

- refs/tags/v3.2.0-rc5

- refs/tags/v3.2.0-rc6

- refs/tags/v3.2.0-rc7

- refs/tags/v3.2.1

- refs/tags/v3.2.1-rc1

- refs/tags/v3.2.1-rc2

- refs/tags/v3.2.2

- refs/tags/v3.2.2-rc1

- refs/tags/v3.2.3

- refs/tags/v3.2.3-rc1

- refs/tags/v3.2.4

- refs/tags/v3.2.4-rc1

- refs/tags/v3.3.0

- refs/tags/v3.3.0-rc1

- refs/tags/v3.3.0-rc2

- refs/tags/v3.3.0-rc3

- refs/tags/v3.3.0-rc4

- refs/tags/v3.3.0-rc5

- refs/tags/v3.3.0-rc6

- refs/tags/v3.3.1

- refs/tags/v3.3.1-rc1

- refs/tags/v3.3.1-rc2

- refs/tags/v3.3.1-rc3

- refs/tags/v3.3.1-rc4

- refs/tags/v3.3.2

- refs/tags/v3.3.2-rc1

- refs/tags/v3.3.3

- refs/tags/v3.3.3-rc1

- refs/tags/v3.3.4

- refs/tags/v3.3.4-rc1

- refs/tags/v3.4.0

- refs/tags/v3.4.0-rc1

- refs/tags/v3.4.0-rc2

- refs/tags/v3.4.0-rc3

- refs/tags/v3.4.0-rc4

- refs/tags/v3.4.0-rc5

- refs/tags/v3.4.0-rc6

- refs/tags/v3.4.0-rc7

- refs/tags/v3.4.1

- refs/tags/v3.4.1-rc1

- refs/tags/v3.4.2

- refs/tags/v3.4.2-rc1

- refs/tags/v3.4.3

- refs/tags/v3.4.3-rc1

- refs/tags/v3.4.3-rc2

- refs/tags/v3.5.0

- refs/tags/v3.5.0-rc1

- refs/tags/v3.5.0-rc2

- refs/tags/v3.5.0-rc3

- refs/tags/v3.5.0-rc4

- refs/tags/v3.5.0-rc5

- refs/tags/v3.5.1

- refs/tags/v3.5.1-rc1

- refs/tags/v3.5.1-rc2

- refs/tags/v4.0.0-preview1

- refs/tags/v4.0.0-preview1-rc1

- refs/tags/v4.0.0-preview1-rc2

- refs/tags/v4.0.0-preview1-rc3

| Revision | Author | Date | Message | Commit Date |
|---|---|---|---|---|
| 6b1ff22 | Dongjoon Hyun | 19 June 2023, 22:17:28 UTC | Preparing Spark release v3.4.1-rc1 | 19 June 2023, 22:17:28 UTC |
| 864b986 | Jiaan Geng | 19 June 2023, 07:55:06 UTC | [SPARK-44018][SQL] Improve the hashCode and toString for some DS V2 Expression ### What changes were proposed in this pull request? The `hashCode()` of `UserDefinedScalarFunc` and `GeneralScalarExpression` is not good enough. For example, `GeneralScalarExpression` uses `Objects.hash(name, children)`, which combines the hash code of `name` with the hash code of the `children` array reference to form the `GeneralScalarExpression`'s hash code. Instead, it should use the hash code of each element in `children`. Because `UserDefinedAggregateFunc` and `GeneralAggregateFunc` are missing `hashCode()`, this PR also adds them. This PR also improves the toString for `UserDefinedAggregateFunc` and `GeneralAggregateFunc` by using bool primitive comparison instead of `Objects.equals`, because bool primitive comparison performs better than `Objects.equals`. ### Why are the changes needed? Improve the hash code for some DS V2 Expression. ### Does this PR introduce _any_ user-facing change? 'Yes'. ### How was this patch tested? N/A Closes #41543 from beliefer/SPARK-44018. Authored-by: Jiaan Geng <beliefer@163.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 8c84d2c9349d7b607db949c2e114df781f23e438) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 19 June 2023, 07:55:24 UTC |
| 0c593a4 | Yuming Wang | 17 June 2023, 06:35:05 UTC | [MINOR][K8S][DOCS] Fix all dead links for K8s doc ### What changes were proposed in this pull request? This PR fixes all dead links for K8s doc. ### Why are the changes needed? ![image](https://github.com/apache/spark/assets/5399861/3ba3f048-776c-42e6-b455-86e90b6ef22f) ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual test. Closes #41635 from wangyum/kubernetes. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 1ff670488c3b402984ceb24e1d6eaf5a16176f1d) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 17 June 2023, 06:40:38 UTC |
| 89bfb1f | Yuming Wang | 16 June 2023, 15:49:30 UTC | [SPARK-44070][BUILD] Bump snappy-java 1.1.10.1 ### What changes were proposed in this pull request? Bump snappy-java from 1.1.10.0 to 1.1.10.1. ### Why are the changes needed? This is mostly a security release; the notable changes are CVE fixes: - CVE-2023-34453 Integer overflow in shuffle - CVE-2023-34454 Integer overflow in compress - CVE-2023-34455 Unchecked chunk length Full changelog: https://github.com/xerial/snappy-java/releases/tag/v1.1.10.1 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass GA. Closes #41616 from pan3793/SPARK-44070. Authored-by: Cheng Pan <chengpan@apache.org> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 0502a42dda4d0822e2572a3d1ae6928d90b792a9) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 16 June 2023, 15:49:30 UTC |
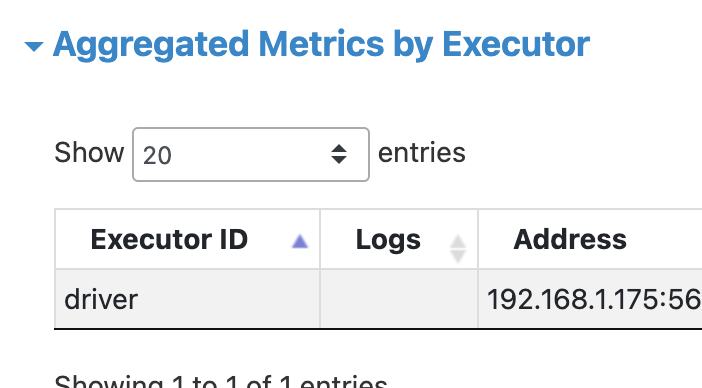

| 7edb3d9 | Yuming Wang | 16 June 2023, 03:18:38 UTC | [SPARK-44040][SQL] Fix compute stats when AggregateExec node above QueryStageExec ### What changes were proposed in this pull request? This PR fixes compute stats when `BaseAggregateExec` nodes are above `QueryStageExec`. For aggregation, when the number of shuffle output rows is 0, the final result may still have 1 row. For example: ```sql SELECT count(*) FROM tbl WHERE false; ``` The number of shuffle output rows is 0, and the final result has 1 row. Please see the [UI](https://github.com/apache/spark/assets/5399861/9d9ad999-b3a9-433e-9caf-c0b931423891). ### Why are the changes needed? Fix data issue. `OptimizeOneRowPlan` will use stats to remove `Aggregate`: ``` === Applying Rule org.apache.spark.sql.catalyst.optimizer.OptimizeOneRowPlan === !Aggregate [id#5L], [id#5L] Project [id#5L] +- Union false, false +- Union false, false :- LogicalQueryStage Aggregate [sum(id#0L) AS id#5L], HashAggregate(keys=[], functions=[sum(id#0L)]) :- LogicalQueryStage Aggregate [sum(id#0L) AS id#5L], HashAggregate(keys=[], functions=[sum(id#0L)]) +- LogicalQueryStage Aggregate [sum(id#18L) AS id#12L], HashAggregate(keys=[], functions=[sum(id#18L)]) +- LogicalQueryStage Aggregate [sum(id#18L) AS id#12L], HashAggregate(keys=[], functions=[sum(id#18L)]) ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Unit test. Closes #41576 from wangyum/SPARK-44040. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 55ba63c257b6617ec3d2aca5bc1d0989d4f29de8) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 16 June 2023, 03:42:19 UTC |
| 0355a6f | Yiqun Zhang | 14 June 2023, 16:38:18 UTC | [SPARK-44053][BUILD][3.4] Update ORC to 1.8.4 ### What changes were proposed in this pull request? This PR aims to update ORC to 1.8.4. ### Why are the changes needed? This will bring the following bug fixes. - https://issues.apache.org/jira/projects/ORC/versions/12353041 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. Closes #41592 from guiyanakuang/ORC-1.8.4. Authored-by: Yiqun Zhang <guiyanakuang@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 14 June 2023, 16:38:18 UTC |
| b1a5873 | Dongjoon Hyun | 13 June 2023, 10:37:08 UTC | [SPARK-44038][DOCS][K8S] Update YuniKorn docs with v1.3 ### What changes were proposed in this pull request? This PR aims to update `Apache YuniKorn` batch scheduler docs with v1.3.0 to recommend it to Apache Spark 3.4.1 and 3.5.0 users. ### Why are the changes needed? Apache YuniKorn v1.3.0 was released on 2023-06-12 with 160 resolved JIRAs. https://yunikorn.apache.org/release-announce/1.3.0 I installed YuniKorn v1.3.0 and tested manually. ``` $ helm list -n yunikorn NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION yunikorn yunikorn 1 2023-06-13 01:56:32.784863 -0700 PDT deployed yunikorn-1.3.0 ``` ``` $ build/sbt -Pkubernetes -Pkubernetes-integration-tests -Dspark.kubernetes.test.deployMode=docker-desktop "kubernetes-integration-tests/testOnly *.YuniKornSuite" -Dtest.exclude.tags=minikube,local,decom,r -Dtest.default.exclude.tags= ... [info] YuniKornSuite: [info] - SPARK-42190: Run SparkPi with local[*] (12 seconds, 49 milliseconds) [info] - Run SparkPi with no resources (20 seconds, 378 milliseconds) [info] - Run SparkPi with no resources & statefulset allocation (20 seconds, 583 milliseconds) [info] - Run SparkPi with a very long application name. (20 seconds, 606 milliseconds) [info] - Use SparkLauncher.NO_RESOURCE (19 seconds, 676 milliseconds) [info] - Run SparkPi with a master URL without a scheme. (20 seconds, 631 milliseconds) [info] - Run SparkPi with an argument. (22 seconds, 320 milliseconds) [info] - Run SparkPi with custom labels, annotations, and environment variables. (20 seconds, 469 milliseconds) [info] - All pods have the same service account by default (22 seconds, 537 milliseconds) [info] - Run extraJVMOptions check on driver (12 seconds, 268 milliseconds) ... ``` ``` $ k describe pod spark-test-app-33ec515e453e4301a90f626812db1153-driver -n spark-dbe522106eac40d4a17447bfa2947c45 ... Events: Type Reason Age From Message ---- ------ ---- ---- ------- Normal Scheduling 82s yunikorn spark-dbe522106eac40d4a17447bfa2947c45/spark-test-app-33ec515e453e4301a90f626812db1153-driver is queued and waiting for allocation Normal Scheduled 82s yunikorn Successfully assigned spark-dbe522106eac40d4a17447bfa2947c45/spark-test-app-33ec515e453e4301a90f626812db1153-driver to node docker-desktop Normal PodBindSuccessful 82s yunikorn Pod spark-dbe522106eac40d4a17447bfa2947c45/spark-test-app-33ec515e453e4301a90f626812db1153-driver is successfully bound to node docker-desktop Normal Pulled 82s kubelet Container image "docker.io/kubespark/spark:dev" already present on machine Normal Created 82s kubelet Created container spark-kubernetes-driver Normal Started 82s kubelet Started container spark-kubernetes-driver ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual review. Closes #41571 from dongjoon-hyun/SPARK-44038. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 223c196d242f9c79ca76b226bf9489759652203a) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 13 June 2023, 10:37:16 UTC |
| 12d8e80 | Dongjoon Hyun | 13 June 2023, 03:51:16 UTC | Revert "[SPARK-44031][BUILD] Upgrade silencer to 1.7.13" This reverts commit b04232c65dc593c22db5fb5e18eab79aebf3a2ca. | 13 June 2023, 03:51:16 UTC |
| b04232c | Dongjoon Hyun | 13 June 2023, 03:50:37 UTC | [SPARK-44031][BUILD] Upgrade silencer to 1.7.13 ### What changes were proposed in this pull request? This PR aims to upgrade `silencer` to 1.7.13. ### Why are the changes needed? `silencer` 1.7.13 supports `Scala 2.12.18 & 2.13.11`. - https://github.com/ghik/silencer/releases/tag/v1.7.13 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. Closes #41560 from dongjoon-hyun/silencer_1.7.13. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 04d84df87e33111f79525b88ad78c6d1bddab78c) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 13 June 2023, 03:50:45 UTC |
| e010365 | wangjunbo | 13 June 2023, 03:43:54 UTC | [SPARK-32559][SQL] Fix the trim logic did't handle ASCII control characters correctly ### What changes were proposed in this pull request? The trim logic in the Cast expression introduced in https://github.com/apache/spark/pull/29375 trims ASCII control characters unexpectedly. (The screenshots comparing the behavior before this patch with Hive's behavior were not preserved.) ### Why are the changes needed? The behavior described above is not consistent with the behavior of Hive. ### Does this PR introduce _any_ user-facing change? Yes ### How was this patch tested? Added UT. Closes #41535 from Kwafoor/trim_bugfix. Lead-authored-by: wangjunbo <wangjunbo@qiyi.com> Co-authored-by: Junbo wang <1042815068@qq.com> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit 80588e4ddfac1d2e2fdf4f9a7783c56be6a97cdd) Signed-off-by: Kent Yao <yao@apache.org> | 13 June 2023, 03:44:12 UTC |
| 1431df0 | Warren Zhu | 12 June 2023, 07:40:13 UTC | [SPARK-43398][CORE] Executor timeout should be max of idle shuffle and rdd timeout ### What changes were proposed in this pull request? Executor timeout should be max of idle, shuffle and rdd timeout ### Why are the changes needed? Wrong timeout value when combining idle, shuffle and rdd timeout ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Added test in `ExecutorMonitorSuite` Closes #41082 from warrenzhu25/max-timeout. Authored-by: Warren Zhu <warren.zhu25@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 7107742a381cde2e6de9425e3e436282a8c0d27c) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 12 June 2023, 07:40:21 UTC |
| 238da78 | Anish Shrigondekar | 09 June 2023, 11:49:25 UTC | [SPARK-43404][SS][3.4] Skip reusing sst file for same version of RocksDB state store to avoid id mismatch error NOTE: This ports back the commit https://github.com/apache/spark/commit/d3b9f4e7b1be7d89466cf80c3e38890c7add7625 (PR https://github.com/apache/spark/pull/41089) to branch-3.4. This is a clean cherry-pick. ### What changes were proposed in this pull request? Skip reusing sst file for same version of RocksDB state store to avoid id mismatch error ### Why are the changes needed? In case of task retry on the same executor, it's possible that the original task completed the phase of creating the SST files and uploading them to the object store. In this case, we also might have added an entry to the in-memory map for `versionToRocksDBFiles` for the given version. When the retry task creates the local checkpoint, it's possible the file name and size are the same, but the metadata ID embedded within the file may be different. So, when we try to load this version on successful commit, the metadata zip file points to the old SST file, which results in a RocksDB mismatch id error. ``` Mismatch in unique ID on table file 24220. Expected: {9692563551998415634,4655083329411385714} Actual: {9692563551998415639,10299185534092933087} in file /local_disk0/spark-f58a741d-576f-400c-9b56-53497745ac01/executor-18e08e59-20e8-4a00-bd7e-94ad4599150b/spark-5d980399-3425-4951-894a-808b943054ea/StateStoreId(opId=2147483648,partId=53,name=default)-d89e082e-4e33-4371-8efd-78d927ad3ba3/workingDir-9928750e-f648-4013-a300-ac96cb6ec139/MANIFEST-024212 ``` This change avoids reusing files for the same version on the same host based on the map entries to reduce the chance of running into the error above. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Unit test RocksDBSuite ``` [info] Run completed in 35 seconds, 995 milliseconds. [info] Total number of tests run: 33 [info] Suites: completed 1, aborted 0 [info] Tests: succeeded 33, failed 0, canceled 0, ignored 0, pending 0 [info] All tests passed. ``` Closes #41530 from HeartSaVioR/SPARK-43404-3.4. Authored-by: Anish Shrigondekar <anish.shrigondekar@databricks.com> Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com> | 09 June 2023, 11:49:25 UTC |
| 020eb69 | Jia Fan | 08 June 2023, 20:12:45 UTC | [SPARK-42290][SQL] Fix the OOM error can't be reported when AQE on ### What changes were proposed in this pull request? When we use spark shell to submit a job like this: ```scala $ spark-shell --conf spark.driver.memory=1g val df = spark.range(5000000).withColumn("str", lit("abcdabcdabcdabcdabasgasdfsadfasdfasdfasfasfsadfasdfsadfasdf")) val df2 = spark.range(10).join(broadcast(df), Seq("id"), "left_outer") df2.collect ``` This will cause the driver to hang indefinitely. When AQE is disabled, a `java.lang.OutOfMemoryError` is thrown. After checking the code, the cause is an incorrect use of `Throwable::initCause`. When the OOM is thrown at https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/execution/exchange/BroadcastExchangeExec.scala#L184 , https://github.com/apache/spark/blob/master/sql/catalyst/src/main/scala/org/apache/spark/sql/errors/QueryExecutionErrors.scala#L2401 is executed. It uses `new SparkException(..., cause=oe).initCause(oe.getCause)`. The doc of `Throwable::initCause` says ``` This method can be called at most once. It is generally called from within the constructor, or immediately after creating the throwable. If this throwable was created with Throwable(Throwable) or Throwable(String, Throwable), this method cannot be called even once. ``` So calling it throws an `IllegalStateException`, `promise.tryFailure(ex)` is never called, and the driver is blocked. ### Why are the changes needed? Fix the bug where the OOM is never reported ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Add new test Closes #41517 from Hisoka-X/SPARK-42290_OOM_AQE_On. Authored-by: Jia Fan <fanjiaeminem@qq.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 4168e1ac3c1b44298d2c6eae31e7f6cf948614a3) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 08 June 2023, 20:12:52 UTC |
| 8a71a15 | Dongjoon Hyun | 08 June 2023, 19:25:36 UTC | [MINOR][SQL][TESTS] Move ResolveDefaultColumnsSuite to 'o.a.s.sql' This PR moves `ResolveDefaultColumnsSuite` from `catalyst/analysis` package to `sql` package. To fix the code layout. No. Pass the CI Closes #41520 from dongjoon-hyun/move. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 07cc04d3e3f37684946e09a9ab1144efaed6f6ec) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 08 June 2023, 19:50:31 UTC |
| 77e077a | Dongjoon Hyun | 06 June 2023, 18:30:32 UTC | [SPARK-43973][SS][UI][TESTS][FOLLOWUP][3.4] Fix compilation by switching QueryTerminatedEvent constructor ### What changes were proposed in this pull request? This is a follow-up of https://github.com/apache/spark/pull/41468 to fix `branch-3.4`'s compilation issue. ### Why are the changes needed? To recover the compilation. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. Closes #41484 from dongjoon-hyun/SPARK-43973. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 06 June 2023, 18:30:32 UTC |
| 778beb3 | Dongjoon Hyun | 06 June 2023, 16:34:40 UTC | [SPARK-43976][CORE] Handle the case where modifiedConfigs doesn't exist in event logs ### What changes were proposed in this pull request? This prevents NPE by handling the case where `modifiedConfigs` doesn't exist in event logs. ### Why are the changes needed? Basically, this is the same solution for that case. - https://github.com/apache/spark/pull/34907 The new code was added here, but we missed the corner case. - https://github.com/apache/spark/pull/35972 ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. Closes #41472 from dongjoon-hyun/SPARK-43976. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit b4ab34bf9b22d0f0ca4ab13f9b6106f38ccfaebe) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 06 June 2023, 16:34:48 UTC |
| 63d5995 | manuzhang | 06 June 2023, 13:28:52 UTC | [SPARK-43510][YARN] Fix YarnAllocator internal state when adding running executor after processing completed containers ### What changes were proposed in this pull request? Keep track of completed container ids in YarnAllocator and don't update internal state of a container if it's already completed. ### Why are the changes needed? YarnAllocator updates internal state adding running executors after executor launch in a separate thread. That can happen after the containers are already completed (e.g. preempted) and processed by YarnAllocator. Then YarnAllocator mistakenly thinks there are still running executors which are already lost. As a result, application hangs without any running executors. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Added UT. Closes #41173 from manuzhang/spark-43510. Authored-by: manuzhang <owenzhang1990@gmail.com> Signed-off-by: Thomas Graves <tgraves@apache.org> (cherry picked from commit 89d44d092af4ae53fec296ca6569e240ad4c2bc5) Signed-off-by: Thomas Graves <tgraves@apache.org> | 06 June 2023, 13:29:19 UTC |
| b705106 | Kris Mok | 06 June 2023, 05:07:39 UTC | [SPARK-43973][SS][UI] Structured Streaming UI should display failed queries correctly ### What changes were proposed in this pull request? Handle the `exception` message from Structured Streaming's `QueryTerminatedEvent` in `StreamingQueryStatusListener`, so that the Structured Streaming UI can display the status and error message correctly for failed queries. ### Why are the changes needed? The original implementation of the Structured Streaming UI had a copy-and-paste bug where it forgot to handle the `exception` from `QueryTerminatedEvent` in `StreamingQueryStatusListener.onQueryTerminated`, so that field is never updated in the UI data, i.e. it's always `None`. In turn, the UI would always show the status of failed queries as `FINISHED` instead of `FAILED`. ### Does this PR introduce _any_ user-facing change? Yes. Failed Structured Streaming queries would show incorrectly as `FINISHED` before the fix and show correctly as `FAILED` after the fix (the before/after screenshots were not preserved). The example query is: ```scala implicit val ctx = spark.sqlContext import org.apache.spark.sql.execution.streaming.MemoryStream spark.conf.set("spark.sql.ansi.enabled", "true") val inputData = MemoryStream[(Int, Int)] val df = inputData.toDF().selectExpr("_1 / _2 as a") inputData.addData((1, 2), (3, 4), (5, 6), (7, 0)) val testQuery = df.writeStream.format("memory").queryName("kristest").outputMode("append").start testQuery.processAllAvailable() ``` ### How was this patch tested? Added UT to `StreamingQueryStatusListenerSuite` Closes #41468 from rednaxelafx/fix-streaming-ui. Authored-by: Kris Mok <kris.mok@databricks.com> Signed-off-by: Gengliang Wang <gengliang@apache.org> (cherry picked from commit 51a919ea8d65e38f829717ae822f31a1a7f57beb) Signed-off-by: Gengliang Wang <gengliang@apache.org> | 06 June 2023, 05:07:48 UTC |
| 7b30403 | Hyukjin Kwon | 06 June 2023, 00:27:35 UTC | Revert "[SPARK-43911][SQL] Use toSet to deduplicate the iterator data to prevent the creation of large Array" This reverts commit 93709918affba4846a30cbae8692a6a328b5a448. | 06 June 2023, 00:27:35 UTC |
| 9370991 | mcdull-zhang | 04 June 2023, 07:04:33 UTC | [SPARK-43911][SQL] Use toSet to deduplicate the iterator data to prevent the creation of large Array ### What changes were proposed in this pull request? When SubqueryBroadcastExec reuses the keys of Broadcast HashedRelation for dynamic partition pruning, it will put all the keys in an Array, and then call the distinct of the Array to remove the duplicates. In general, Broadcast HashedRelation may have many rows, and the repetition rate of this key is high. Doing so will cause this Array to occupy a large amount of memory (and this memory is not managed by MemoryManager), which may trigger OOM. The approach here is to directly call the toSet of the iterator to deduplicate, which can prevent the creation of a large array. ### Why are the changes needed? Avoid the occurrence of the following OOM exceptions: ```text Exception in thread "dynamicpruning-0" java.lang.OutOfMemoryError: Java heap space at scala.collection.mutable.ResizableArray.ensureSize(ResizableArray.scala:106) at scala.collection.mutable.ResizableArray.ensureSize$(ResizableArray.scala:96) at scala.collection.mutable.ArrayBuffer.ensureSize(ArrayBuffer.scala:49) at scala.collection.mutable.ArrayBuffer.$plus$eq(ArrayBuffer.scala:85) at scala.collection.mutable.ArrayBuffer.$plus$eq(ArrayBuffer.scala:49) at scala.collection.generic.Growable.$anonfun$$plus$plus$eq$1(Growable.scala:62) at scala.collection.generic.Growable$$Lambda$7/1514840818.apply(Unknown Source) at scala.collection.Iterator.foreach(Iterator.scala:943) at scala.collection.Iterator.foreach$(Iterator.scala:943) at scala.collection.AbstractIterator.foreach(Iterator.scala:1431) at scala.collection.generic.Growable.$plus$plus$eq(Growable.scala:62) at scala.collection.generic.Growable.$plus$plus$eq$(Growable.scala:53) at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:105) at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:49) at scala.collection.TraversableOnce.to(TraversableOnce.scala:366) at scala.collection.TraversableOnce.to$(TraversableOnce.scala:364) at scala.collection.AbstractIterator.to(Iterator.scala:1431) at scala.collection.TraversableOnce.toBuffer(TraversableOnce.scala:358) at scala.collection.TraversableOnce.toBuffer$(TraversableOnce.scala:358) at scala.collection.AbstractIterator.toBuffer(Iterator.scala:1431) at scala.collection.TraversableOnce.toArray(TraversableOnce.scala:345) at scala.collection.TraversableOnce.toArray$(TraversableOnce.scala:339) at scala.collection.AbstractIterator.toArray(Iterator.scala:1431) at org.apache.spark.sql.execution.SubqueryBroadcastExec.$anonfun$relationFuture$2(SubqueryBroadcastExec.scala:92) at org.apache.spark.sql.execution.SubqueryBroadcastExec$$Lambda$4212/5099232.apply(Unknown Source) at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withExecutionId$1(SQLExecution.scala:140) ``` ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Production environment manual verification && Pass existing unit tests Closes #41419 from mcdull-zhang/reduce_memory_usage. Authored-by: mcdull-zhang <work4dong@163.com> Signed-off-by: Yuming Wang <yumwang@ebay.com> (cherry picked from commit 595ad30e6259f7e4e4252dfee7704b73fd4760f7) Signed-off-by: Yuming Wang <yumwang@ebay.com> | 04 June 2023, 07:08:22 UTC |
| e140bf7 | Jiaan Geng | 03 June 2023, 19:15:15 UTC | [SPARK-43956][SQL][3.4] Fix the bug doesn't display column's sql for Percentile[Cont\|Disc] ### What changes were proposed in this pull request? This PR backports https://github.com/apache/spark/pull/41436 to 3.4 ### Why are the changes needed? Fix the bug where the column's SQL is not displayed for Percentile[Cont\|Disc]. ### Does this PR introduce _any_ user-facing change? 'Yes'. Users can see the correct SQL information. ### How was this patch tested? Test cases updated. Closes #41445 from beliefer/SPARK-43956_followup. Authored-by: Jiaan Geng <beliefer@163.com> Signed-off-by: Max Gekk <max.gekk@gmail.com> | 03 June 2023, 19:15:15 UTC |
| 746c906 | Hyukjin Kwon | 02 June 2023, 10:38:13 UTC | [SPARK-43949][PYTHON] Upgrade cloudpickle to 2.2.1 This PR proposes to upgrade Cloudpickle from 2.2.0 to 2.2.1. Cloudpickle 2.2.1 has a fix (https://github.com/cloudpipe/cloudpickle/pull/495) for namedtuple issue (https://github.com/cloudpipe/cloudpickle/issues/460). PySpark relies on namedtuple heavily especially for RDD. We should upgrade and fix it. Yes, see https://github.com/cloudpipe/cloudpickle/issues/460. Relies on cloudpickle's unittests. Existing test cases should pass too. Closes #41433 from HyukjinKwon/cloudpickle-upgrade. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 085dfeb2bed61f6d43d9b99b299373e797ac8f17) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 02 June 2023, 10:39:24 UTC |

| a2c915d | Andrey Gubichev | 01 June 2023, 07:10:11 UTC | [SPARK-43760][SQL][3.4] Nullability of scalar subquery results ### What changes were proposed in this pull request? Backport of https://github.com/apache/spark/pull/41287. Makes sure that the results of scalar subqueries are declared as nullable. ### Why are the changes needed? This is an existing correctness bug, see https://issues.apache.org/jira/browse/SPARK-43760 ### Does this PR introduce _any_ user-facing change? Fixes a correctness issue, so it is user-facing. ### How was this patch tested? Query tests. Closes #41408 from agubichev/spark-43760-nullability-branch-3.4. Authored-by: Andrey Gubichev <andrey.gubichev@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 01 June 2023, 07:10:11 UTC |
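
Why must a scalar subquery's result be nullable? Even when the subquery's column is declared non-nullable, the subquery yields NULL when it returns no rows. The following is a minimal conceptual model in plain Python, not Spark code; `scalar_subquery` is a hypothetical helper.

```python
from typing import List, Optional


def scalar_subquery(rows: List[int]) -> Optional[int]:
    """Hypothetical model of scalar-subquery semantics (not a Spark API).

    Zero rows -> NULL (None); exactly one row -> that value; more -> error.
    """
    if not rows:
        # No rows: the result is NULL even though every input value is
        # non-null, so the result attribute has to be declared nullable.
        return None
    if len(rows) > 1:
        raise ValueError("scalar subquery returned more than one row")
    return rows[0]


# The column below never contains nulls, yet the subquery result can be NULL.
non_nullable_column = [1, 2, 3]
print(scalar_subquery([v for v in non_nullable_column if v > 10]))  # None
print(scalar_subquery([v for v in non_nullable_column if v == 2]))  # 2
```

The nullability of the result therefore depends on the subquery's cardinality, not only on the nullability of its output column.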

| 0e1401d | Martin Grund | 31 May 2023, 15:55:19 UTC | [SPARK-43894][PYTHON] Fix bug in df.cache() ### What changes were proposed in this pull request? Previously, calling `df.cache()` resulted in an invalid plan input exception because `persist()` was not invoked with the right arguments. This patch simplifies the logic and makes it compatible with the behavior of Spark itself. ### Why are the changes needed? Bug ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Added UT Closes #41399 from grundprinzip/df_cache. Authored-by: Martin Grund <martin.grund@databricks.com> Signed-off-by: Herman van Hovell <herman@databricks.com> (cherry picked from commit d3f76c6ca07a7a11fd228dde770186c0fbc3f03f) Signed-off-by: Herman van Hovell <herman@databricks.com> | 31 May 2023, 15:56:22 UTC |

| efae536 | Wanqiang Ji | 29 May 2023, 02:47:13 UTC | [SPARK-42421][CORE] Use the utils to get the switch for dynamic allocation used in local checkpoint ### What changes were proposed in this pull request? Use the utils to get the switch for dynamic allocation used in local checkpoint ### Why are the changes needed? RDD's local checkpoint only read the value from the configuration; it did not apply the local-master and testing checks for dynamic allocation that are unified in `Utils`. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Used the existing UTs Closes #39998 from jiwq/SPARK-42421. Authored-by: Wanqiang Ji <jiwq@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit c4b880f8d3936c1b7c8ddd9621ab392c70ace1e7) Signed-off-by: Kent Yao <yao@apache.org> | 29 May 2023, 02:47:32 UTC |
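
The following is a rough Python transliteration of the kind of unified check this commit refers to. Dynamic allocation counts as enabled only when the flag is set and the master is not local, unless testing mode is on. This is a sketch under assumptions, not Spark's source. The config keys are meant to mirror `spark.dynamicAllocation.enabled` and `spark.dynamicAllocation.testing`, and the function names are hypothetical.

```python
from typing import Dict


def is_local_master(conf: Dict[str, str]) -> bool:
    # Assumed rule: "local" or "local[...]" masters run everything in one JVM.
    master = conf.get("spark.master", "")
    return master == "local" or master.startswith("local[")


def is_dynamic_allocation_enabled(conf: Dict[str, str]) -> bool:
    # Reading only the flag is not enough: in local mode the setting is
    # ignored unless the (assumed) testing switch is also on.
    enabled = conf.get("spark.dynamicAllocation.enabled", "false") == "true"
    testing = conf.get("spark.dynamicAllocation.testing", "false") == "true"
    return enabled and (not is_local_master(conf) or testing)


print(is_dynamic_allocation_enabled(
    {"spark.master": "local[4]", "spark.dynamicAllocation.enabled": "true"}))  # False
print(is_dynamic_allocation_enabled(
    {"spark.master": "yarn", "spark.dynamicAllocation.enabled": "true"}))  # True
```

Centralizing the check in one helper means callers such as a local checkpoint cannot disagree with the rest of the scheduler about whether dynamic allocation is active.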

| b06f0f1 | Adam Binford | 27 May 2023, 02:30:14 UTC | [SPARK-43802][SQL][3.4] Fix codegen for unhex and unbase64 with failOnError=true ### What changes were proposed in this pull request? This is a backport of https://github.com/apache/spark/pull/41317. Fixes a codegen error, introduced in https://github.com/apache/spark/pull/37483, for the unhex and unbase64 expressions when failOnError is enabled. ### Why are the changes needed? Codegen fails and Spark falls back to interpreted evaluation: ``` Caused by: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 47, Column 1: failed to compile: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 47, Column 1: Unknown variable or type "BASE64" ``` in the code block: ``` /* 107 */ if (!org.apache.spark.sql.catalyst.expressions.UnBase64.isValidBase64(project_value_1)) { /* 108 */ throw QueryExecutionErrors.invalidInputInConversionError( /* 109 */ ((org.apache.spark.sql.types.BinaryType$) references[1] /* to */), /* 110 */ project_value_1, /* 111 */ BASE64, /* 112 */ "try_to_binary"); /* 113 */ } ``` ### Does this PR introduce _any_ user-facing change? Bug fix. ### How was this patch tested? Extended the existing tests to evaluate an expression with failOnError enabled, so that this codegen path is covered. Closes #41334 from Kimahriman/to-binary-codegen-backport. Authored-by: Adam Binford <adamq43@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 May 2023, 02:30:14 UTC |
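
The generated Java in this commit passes `BASE64` as a bare identifier where a string literal was intended, so the compiler looks for a variable of that name. The sketch below is a small Python analogy of that failure mode, not Spark's codegen. A value meant as a string has to be emitted as a quoted literal when source text is generated. `gen_call`, `check_format` and `run_generated` are hypothetical helpers, and `eval` is used only to "compile" the generated snippet.

```python
def check_format(fmt: str) -> str:
    # Stand-in for the runtime helper the generated code calls.
    return f"checked {fmt}"


def gen_call(fmt_name: str, quote: bool) -> str:
    # Build source text for a call that should receive fmt_name as a string.
    arg = repr(fmt_name) if quote else fmt_name
    return f"check_format({arg})"


def run_generated(src: str) -> str:
    # Evaluate the generated expression with only check_format in scope.
    return eval(src, {"check_format": check_format})


print(gen_call("BASE64", quote=False))                # check_format(BASE64)
print(gen_call("BASE64", quote=True))                 # check_format('BASE64')
print(run_generated(gen_call("BASE64", quote=True)))  # checked BASE64

try:
    run_generated(gen_call("BASE64", quote=False))
except NameError as err:
    # Python's counterpart of "Unknown variable or type BASE64".
    print("generated code failed:", err)
```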

| f8a2498 | Cheng Pan | 26 May 2023, 03:33:38 UTC | [SPARK-43751][SQL][DOC] Document `unbase64` behavior change ### What changes were proposed in this pull request? After SPARK-37820, `select unbase64("abcs==")` (malformed input) always throws an exception; this PR does not help in that case, as it only improves the error message for `to_binary()`. So `unbase64()`'s behavior for malformed input changed silently after SPARK-37820: - before: return a best-effort result, because it uses the [LENIENT](https://github.com/apache/commons-codec/blob/rel/commons-codec-1.15/src/main/java/org/apache/commons/codec/binary/Base64InputStream.java#L46) policy: any trailing bits are composed into 8-bit bytes where possible, and the remaining bits are discarded. - after: throw an exception. There is no way to restore the previous behavior. To tolerate malformed input, users should migrate `unbase64(<input>)` to `try_to_binary(<input>, 'base64')` to get NULL instead of failing with an exception. ### Why are the changes needed? To add the behavior change to the migration guide. ### Does this PR introduce _any_ user-facing change? Yes. ### How was this patch tested? Manual review. Closes #41280 from pan3793/SPARK-43751. Authored-by: Cheng Pan <chengpan@apache.org> Signed-off-by: Kent Yao <yao@apache.org> (cherry picked from commit af6c1ec7c795584c28e15e4963eed83917e2f06a) Signed-off-by: Kent Yao <yao@apache.org> | 26 May 2023, 03:33:56 UTC |
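
The migration advice boils down to two behaviors. A strict decoder raises on malformed input, while a "try" variant maps that failure to NULL. The sketch below is an analogy using Python's standard `base64` module, not Spark. `strict_unbase64` and `try_unbase64` are hypothetical names, and Python's validation rules are not identical to Spark's.

```python
import base64
import binascii
from typing import Optional


def strict_unbase64(s: str) -> bytes:
    # Raises binascii.Error on malformed input, like unbase64 after SPARK-37820.
    return base64.b64decode(s, validate=True)


def try_unbase64(s: str) -> Optional[bytes]:
    # Returns None instead of raising, in the spirit of
    # try_to_binary(<input>, 'base64').
    try:
        return strict_unbase64(s)
    except binascii.Error:
        return None


print(try_unbase64("U3Bhcms="))  # b'Spark'
print(try_unbase64("abc"))       # None (incorrect padding)
```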

| 7480f37 | Takuya UESHIN | 24 May 2023, 04:54:29 UTC | [SPARK-43759][SQL][PYTHON] Expose TimestampNTZType in pyspark.sql.types ### What changes were proposed in this pull request? Exposes `TimestampNTZType` in `pyspark.sql.types`. ```py >>> from pyspark.sql.types import * >>> >>> TimestampNTZType() TimestampNTZType() ``` ### Why are the changes needed? `TimestampNTZType` is missing from the `__all__` list in `pyspark.sql.types`. ```py >>> from pyspark.sql.types import * >>> >>> TimestampNTZType() Traceback (most recent call last): File "<stdin>", line 1, in <module> NameError: name 'TimestampNTZType' is not defined ``` ### Does this PR introduce _any_ user-facing change? Users no longer need to import `TimestampNTZType` explicitly; a wildcard import now works. ### How was this patch tested? Existing tests. Closes #41286 from ueshin/issues/SPARK-43759/timestamp_ntz. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Ruifeng Zheng <ruifengz@apache.org> (cherry picked from commit 1f04bb6320dd11a81a43f2623670c8dea10d72ae) Signed-off-by: Ruifeng Zheng <ruifengz@apache.org> | 24 May 2023, 04:59:20 UTC |
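
The `NameError` follows from how Python's wildcard import works: when a module defines `__all__`, `from module import *` binds only the names listed there. The following self-contained sketch builds two throwaway modules to show the before/after effect. The module names `fake_types_before` and `fake_types_after` are made up for illustration.

```python
import sys
import types
from typing import List, Set


def make_types_module(name: str, exported: List[str]) -> types.ModuleType:
    # Build a throwaway module defining two classes; only `exported` is in __all__.
    mod = types.ModuleType(name)
    mod.TimestampType = type("TimestampType", (), {})
    mod.TimestampNTZType = type("TimestampNTZType", (), {})
    mod.__all__ = exported
    sys.modules[name] = mod  # lets the import statement find it
    return mod


def wildcard_names(module_name: str) -> Set[str]:
    # Run `from <module> import *` in a fresh namespace and report what got bound.
    namespace: dict = {}
    exec(f"from {module_name} import *", namespace)
    return {k for k in namespace if k != "__builtins__"}


make_types_module("fake_types_before", ["TimestampType"])
make_types_module("fake_types_after", ["TimestampType", "TimestampNTZType"])

print(sorted(wildcard_names("fake_types_before")))  # ['TimestampType']
print(sorted(wildcard_names("fake_types_after")))   # ['TimestampNTZType', 'TimestampType']
```

Both modules define `TimestampNTZType`; only the entry in `__all__` decides whether the wildcard import exposes it.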

| 1863e47 | Dongjoon Hyun | 24 May 2023, 04:44:45 UTC | [SPARK-43758][BUILD][FOLLOWUP][3.4] Update Hadoop 2 dependency manifest ### What changes were proposed in this pull request? This is a follow-up of https://github.com/apache/spark/pull/41285. ### Why are the changes needed? When the original change was merged to branch-3.4, the `hadoop-2` dependency manifest was not updated. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. Closes #41291 from dongjoon-hyun/SPARK-43758. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 24 May 2023, 04:44:45 UTC |

| 7bf0b7a | Chao Sun | 24 May 2023, 02:50:20 UTC | [SPARK-43758][BUILD] Upgrade snappy-java to 1.1.10.0 This PR upgrades `snappy-java` version to 1.1.10.0 from 1.1.9.1. The new `snappy-java` version fixes a potential issue for Graviton support when used with old GLIBC versions. See https://github.com/xerial/snappy-java/issues/417. No Existing tests. Closes #41285 from sunchao/snappy-java. Authored-by: Chao Sun <sunchao@apple.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 1e17c86f77893b02a1b304800cd17f814f979dd2) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 24 May 2023, 02:51:26 UTC |

| a855e33 | itholic | 23 May 2023, 07:02:24 UTC | [MINOR][PS][TESTS] Fix `SeriesDateTimeTests.test_quarter` to work properly ### What changes were proposed in this pull request? This PR proposes to fix `SeriesDateTimeTests.test_quarter` to work properly. ### Why are the changes needed? The test was not actually testing what it was meant to test. ### Does this PR introduce _any_ user-facing change? No, test-only. ### How was this patch tested? Manually tested, and the existing CI should pass Closes #41274 from itholic/minor_quarter_test. Authored-by: itholic <haejoon.lee@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit a5c53384def22b01b8ef28bee6f2d10648bce1a1) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 May 2023, 07:04:04 UTC |

| 05039f3 | Dongjoon Hyun | 23 May 2023, 05:17:11 UTC | [SPARK-43719][WEBUI] Handle `missing row.excludedInStages` field ### What changes were proposed in this pull request? This PR aims to handle a corner case where the `row.excludedInStages` field is missing. ### Why are the changes needed? To fix a type error when Spark loads some very old 2.4.x or 3.0.x logs. The field is accessed in two places, and this PR protects both. ``` $ git grep row.excludedInStages core/src/main/resources/org/apache/spark/ui/static/executorspage.js: if (typeof row.excludedInStages === "undefined" || row.excludedInStages.length == 0) { core/src/main/resources/org/apache/spark/ui/static/executorspage.js: return "Active (Excluded in Stages: [" + row.excludedInStages.join(", ") + "])"; ``` ### Does this PR introduce _any_ user-facing change? No, this only removes the error case. ### How was this patch tested? Manual review. Closes #41266 from dongjoon-hyun/SPARK-43719. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit eeab2e701330f7bc24e9b09ce48925c2c3265aa8) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 May 2023, 05:17:22 UTC |

| 4cae2ad | Bruce Robbins | 23 May 2023, 04:46:59 UTC | [SPARK-43718][SQL] Set nullable correctly for keys in USING joins ### What changes were proposed in this pull request? In `Analyzer#commonNaturalJoinProcessing`, set nullable correctly when adding the join keys to the Project's hidden_output tag. ### Why are the changes needed? The value of the hidden_output tag will be used for resolution of attributes in parent operators, so incorrect nullability can cause problems. For example, assume this data: ``` create or replace temp view t1 as values (1), (2), (3) as (c1); create or replace temp view t2 as values (2), (3), (4) as (c1); ``` The following query produces incorrect results: ``` spark-sql (default)> select explode(array(t1.c1, t2.c1)) as x1 from t1 full outer join t2 using (c1); 1 -1 <== should be null 2 2 3 3 -1 <== should be null 4 Time taken: 0.663 seconds, Fetched 8 row(s) spark-sql (default)> ``` Similar issues occur with right outer join and left outer join. `t1.c1` and `t2.c1` have the wrong nullability at the time the array is resolved, so the array's `containsNull` value is incorrect. `UpdateNullability` will update the nullability of `t1.c1` and `t2.c1` in the `CreateArray` arguments, but will not update `containsNull` in the function's data type. Queries that don't use arrays can also get wrong results. Assume this data: ``` create or replace temp view t1 as values (0), (1), (2) as (c1); create or replace temp view t2 as values (1), (2), (3) as (c1); create or replace temp view t3 as values (1, 2), (3, 4), (4, 5) as (a, b); ``` The following query produces incorrect results: ``` select t1.c1 as t1_c1, t2.c1 as t2_c1, b from t1 full outer join t2 using (c1), lateral ( select b from t3 where a = coalesce(t2.c1, 1) ) lt3; 1 1 2 NULL 3 4 Time taken: 2.395 seconds, Fetched 2 row(s) spark-sql (default)> ``` The result should be the following: ``` 0 NULL 2 1 1 2 NULL 3 4 ``` ### Does this PR introduce _any_ user-facing change? No. 
### How was this patch tested? New tests. Closes #41267 from bersprockets/using_anomaly. Authored-by: Bruce Robbins <bersprockets@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 217b30a4f7ca18ade19a9552c2b87dd4caeabe57) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 May 2023, 04:47:17 UTC |
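
Why must the join keys be nullable? The pure-Python model below (not Spark's implementation) runs the first example's full outer join. Keys present on only one side get `None` for the other side's column, so both `t1.c1` and `t2.c1` are nullable and `array(t1.c1, t2.c1)` can contain nulls. The helper `full_outer_join_keys` is hypothetical, and it assumes unique keys per side, as in the example views.

```python
from typing import List, Optional, Tuple


def full_outer_join_keys(
    left: List[int], right: List[int]
) -> List[Tuple[Optional[int], Optional[int]]]:
    # Pair up equal keys; an unmatched key gets None on the missing side.
    left_set, right_set = set(left), set(right)
    rows = []
    for key in sorted(left_set | right_set):
        rows.append((key if key in left_set else None,
                     key if key in right_set else None))
    return rows


t1 = [1, 2, 3]
t2 = [2, 3, 4]
joined = full_outer_join_keys(t1, t2)
# explode(array(t1.c1, t2.c1)): each pair becomes two output rows.
exploded = [value for pair in joined for value in pair]
print(joined)    # [(1, None), (2, 2), (3, 3), (None, 4)]
print(exploded)  # [1, None, 2, 2, 3, 3, None, 4]
```

The `None` entries are exactly the positions where the buggy query printed `-1`.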

| 1db2f5c | Dongjoon Hyun | 19 May 2023, 09:20:38 UTC | [SPARK-43589][SQL] Fix `cannotBroadcastTableOverMaxTableBytesError` to use `bytesToString` This PR aims to fix `cannotBroadcastTableOverMaxTableBytesError` to use `bytesToString` instead of shift operations. To avoid user confusion by giving more accurate values. For example, `maxBroadcastTableBytes` is 1GB and `dataSize` is `2GB - 1 byte`. **BEFORE** ``` Cannot broadcast the table that is larger than 1GB: 1 GB. ``` **AFTER** ``` Cannot broadcast the table that is larger than 1024.0 MiB: 2048.0 MiB. ``` Yes, but only error message. Pass the CIs with newly added test case. Closes #41232 from dongjoon-hyun/SPARK-43589. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 04759570395c99bc17961742733d06e19a917abd) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 19 May 2023, 09:21:37 UTC |
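
Here is the arithmetic behind the misleading BEFORE message. With a right shift by 30 bits, both 1 GiB (2^30 bytes) and 2 GiB minus one byte (2^31 - 1 bytes) truncate to `1`. Below is a simplified Python reimplementation in the spirit of `bytesToString`, not Spark's source. It assumes the rule of moving to a larger binary unit only once the size reaches twice that unit, and it reproduces the AFTER values.

```python
KIB = 1 << 10
MIB = 1 << 20
GIB = 1 << 30
TIB = 1 << 40


def bytes_to_string(size: int) -> str:
    # Pick the largest unit such that size >= 2 * unit, then print one decimal.
    for unit, label in ((TIB, "TiB"), (GIB, "GiB"), (MIB, "MiB"), (KIB, "KiB")):
        if size >= 2 * unit:
            return "%.1f %s" % (size / unit, label)
    return "%.1f B" % size


max_broadcast = 1 << 30    # 1 GiB
data_size = (2 << 30) - 1  # 2 GiB - 1 byte

# Shift-based formatting truncates both values to 1.
print(max_broadcast >> 30, data_size >> 30)  # 1 1
print(bytes_to_string(max_broadcast))        # 1024.0 MiB
print(bytes_to_string(data_size))            # 2048.0 MiB
```

Dividing instead of shifting keeps the fractional part, so the two sizes are no longer both reported as "1 GB".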

| 4448c76 | Dongjoon Hyun | 19 May 2023, 06:31:14 UTC | [SPARK-43587][CORE][TESTS] Run `HealthTrackerIntegrationSuite` in a dedicated JVM ### What changes were proposed in this pull request? This PR aims to run `HealthTrackerIntegrationSuite` in a dedicated JVM to mitigate flaky test failures. ### Why are the changes needed? `HealthTrackerIntegrationSuite` has been flaky, and SPARK-25400 and SPARK-37384 increased the timeout `from 1s to 10s` and `10s to 20s`, respectively. The usual suspect for this flakiness is an unknown side effect such as GC pauses. Instead of increasing the timeout further, this PR runs the suite in a separate JVM. https://github.com/apache/spark/blob/abc140263303c409f8d4b9632645c5c6cbc11d20/core/src/test/scala/org/apache/spark/scheduler/SchedulerIntegrationSuite.scala#L56-L58 This is a recent failure. - https://github.com/apache/spark/actions/runs/5020505360/jobs/9002039817 ``` [info] HealthTrackerIntegrationSuite: [info] - If preferred node is bad, without excludeOnFailure job will fail (92 milliseconds) [info] - With default settings, job can succeed despite multiple bad executors on node (3 seconds, 163 milliseconds) [info] - Bad node with multiple executors, job will still succeed with the right confs *** FAILED *** (20 seconds, 43 milliseconds) [info] java.util.concurrent.TimeoutException: Futures timed out after [20 seconds] [info] at scala.concurrent.impl.Promise$DefaultPromise.ready(Promise.scala:259) [info] at scala.concurrent.impl.Promise$DefaultPromise.ready(Promise.scala:187) [info] at org.apache.spark.util.ThreadUtils$.awaitReady(ThreadUtils.scala:355) [info] at org.apache.spark.scheduler.SchedulerIntegrationSuite.awaitJobTermination(SchedulerIntegrationSuite.scala:276) [info] at org.apache.spark.scheduler.HealthTrackerIntegrationSuite.$anonfun$new$9(HealthTrackerIntegrationSuite.scala:92) ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. 
Closes #41229 from dongjoon-hyun/SPARK-43587. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit eb2456bce2522779bf6b866a5fbb728472d35097) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 19 May 2023, 06:31:29 UTC |

| da8f5a6 | Max Gekk | 18 May 2023, 22:10:39 UTC | [SPARK-43541][SQL][3.4] Propagate all `Project` tags in resolving of expressions and missing columns ### What changes were proposed in this pull request? In the PR, I propose to propagate all tags in a `Project` while resolving expressions and missing columns in `ColumnResolutionHelper.resolveExprsAndAddMissingAttrs()`. This is a backport of https://github.com/apache/spark/pull/41204. ### Why are the changes needed? To fix the bug reproduced by the query below: ```sql spark-sql (default)> WITH > t1 AS (select key from values ('a') t(key)), > t2 AS (select key from values ('a') t(key)) > SELECT t1.key > FROM t1 FULL OUTER JOIN t2 USING (key) > WHERE t1.key NOT LIKE 'bb.%'; [UNRESOLVED_COLUMN.WITH_SUGGESTION] A column or function parameter with name `t1`.`key` cannot be resolved. Did you mean one of the following? [`key`].; line 4 pos 7; ``` ### Does this PR introduce _any_ user-facing change? No. It fixes a bug, and outputs the expected result: `a`. ### How was this patch tested? By a new test added to `using-join.sql`: ``` $ PYSPARK_PYTHON=python3 build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z using-join.sql" ``` and the related test suites: ``` $ build/sbt -Phive-2.3 -Phive-thriftserver "test:testOnly org.apache.spark.sql.hive.HiveContextCompatibilitySuite" ``` Authored-by: Max Gekk <max.gekk@gmail.com> Signed-off-by: Max Gekk <max.gekk@gmail.com> (cherry picked from commit 09d5742a8679839d0846f50e708df98663a6d64c) Closes #41220 from MaxGekk/fix-using-join-3.4. Authored-by: Max Gekk <max.gekk@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 18 May 2023, 22:10:39 UTC |

| 079594a | Wenchen Fan | 18 May 2023, 10:39:38 UTC | Revert "[SPARK-43313][SQL] Adding missing column DEFAULT values for MERGE INSERT actions" This reverts commit 3a0e6bde2aaa11e1165f4fde040ff02e1743795e. | 18 May 2023, 10:39:38 UTC |

| 5937a97 | Ole Sasse | 18 May 2023, 07:08:42 UTC | [SPARK-43450][SQL][TESTS] Add more `_metadata` filter test cases Add additional tests for metadata filters as a follow-up to f0a901058aaec8496ec9426c5811d9dea66c195d. To cover more edge cases in testing. No - Closes #39608 from olaky/additional-tests-for-metadata-filtering. Authored-by: Ole Sasse <ole.sasse@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit bc801c1777994c5cb14ce21533c4053281df5aa8) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 18 May 2023, 07:09:35 UTC |

| a18d71a | Jia Fan | 18 May 2023, 06:29:30 UTC | [SPARK-43522][SQL] Fix creating struct column name with index of array ### What changes were proposed in this pull request? When creating a struct column in Dataframe, the code that ran without problems in version 3.3.1 does not work in version 3.4.0. In 3.3.1 ```scala val testDF = Seq("a=b,c=d,d=f").toDF.withColumn("key_value", split('value, ",")).withColumn("map_entry", transform(col("key_value"), x => struct(split(x, "=").getItem(0), split(x, "=").getItem(1) ) )) testDF.show() +-----------+---------------+--------------------+ | value| key_value| map_entry| +-----------+---------------+--------------------+ |a=b,c=d,d=f|[a=b, c=d, d=f]|[{a, b}, {c, d}, ...| +-----------+---------------+--------------------+ ``` In 3.4.0 ``` org.apache.spark.sql.AnalysisException: [DATATYPE_MISMATCH.CREATE_NAMED_STRUCT_WITHOUT_FOLDABLE_STRING] Cannot resolve "struct(split(namedlambdavariable(), =, -1)[0], split(namedlambdavariable(), =, -1)[1])" due to data type mismatch: Only foldable `STRING` expressions are allowed to appear at odd position, but they are ["0", "1"].; 'Project [value#41, key_value#45, transform(key_value#45, lambdafunction(struct(0, split(lambda x_3#49, =, -1)[0], 1, split(lambda x_3#49, =, -1)[1]), lambda x_3#49, false)) AS map_entry#48] +- Project [value#41, split(value#41, ,, -1) AS key_value#45] +- LocalRelation [value#41] at org.apache.spark.sql.catalyst.analysis.package$AnalysisErrorAt.dataTypeMismatch(package.scala:73) at org.apache.spark.sql.catalyst.analysis.CheckAnalysis.$anonfun$checkAnalysis0$5(CheckAnalysis.scala:269) at org.apache.spark.sql.catalyst.analysis.CheckAnalysis.$anonfun$checkAnalysis0$5$adapted(CheckAnalysis.scala:256) at org.apache.spark.sql.catalyst.trees.TreeNode.foreachUp(TreeNode.scala:295) at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$foreachUp$1(TreeNode.scala:294) at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$foreachUp$1$adapted(TreeNode.scala:294) at 
scala.collection.Iterator.foreach(Iterator.scala:943) at scala.collection.Iterator.foreach$(Iterator.scala:943) at scala.collection.AbstractIterator.foreach(Iterator.scala:1431) at scala.collection.IterableLike.foreach(IterableLike.scala:74) at scala.collection.IterableLike.foreach$(IterableLike.scala:73) at scala.collection.AbstractIterable.foreach(Iterable.scala:56) at org.apache.spark.sql.catalyst.trees.TreeNode.foreachUp(TreeNode.scala:294) at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$foreachUp$1(TreeNode.scala:294) at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$foreachUp$1$adapted(TreeNode.scala:294) at scala.collection.Iterator.foreach(Iterator.scala:943) at scala.collection.Iterator.foreach$(Iterator.scala:943) at scala.collection.AbstractIterator.foreach(Iterator.scala:1431) .... ``` The reason is that `CreateNamedStruct` uses the last expression of the value `Expression` as the column name and checks that it is a `String`. For an array `Expression`, however, the last expression is an `Integer`, so the check fails. The fix skips matching `UnresolvedExtractValue` when the last expression is not a `String`, so that naming falls back to the default name. ### Why are the changes needed? To fix the bug when creating a struct column whose name comes from an array index. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Added a new test. Closes #41187 from Hisoka-X/SPARK-43522_struct_name_array. Authored-by: Jia Fan <fanjiaeminem@qq.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit f2a29176de6e0b1628de6ca962cbf5036b145e0a) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 18 May 2023, 06:29:46 UTC |
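
The fix's naming rule can be modeled roughly in Python. The classes below are hypothetical stand-ins, not Catalyst classes. A struct field built from an extracted value takes the extraction key as its name only when that key is a string. An integer array index falls back to a positional default name, written `col1`, `col2`, ... in this sketch.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class ExtractValue:
    # Models something like split(x, "=").getItem(0) or col("s").getField("a").
    child: str
    key: Union[str, int]


@dataclass
class Column:
    name: str


Expr = Union[ExtractValue, Column]


def struct_field_names(exprs: List[Expr]) -> List[str]:
    names = []
    for i, e in enumerate(exprs):
        if isinstance(e, Column):
            names.append(e.name)
        elif isinstance(e, ExtractValue) and isinstance(e.key, str):
            # A string key (struct field or map key) is usable as a name.
            names.append(e.key)
        else:
            # An integer array index is not a valid name: use the default.
            names.append(f"col{i + 1}")
    return names


print(struct_field_names([ExtractValue("split(x)", 0), ExtractValue("split(x)", 1)]))
# ['col1', 'col2']
```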

| 5cc4c5d | Rob Reeves | 18 May 2023, 06:24:05 UTC | [SPARK-43157][SQL] Clone InMemoryRelation cached plan to prevent cloned plan from referencing same objects ### What changes were proposed in this pull request? This is the most narrow fix for the issue observed in SPARK-43157. It does not attempt to identify or solve all potential correctness and concurrency issues from TreeNode.tags being modified in multiple places. It solves the issue described in SPARK-43157 by cloning the cached plan when populating `InMemoryRelation.innerChildren`. I chose to do the clone at this point to limit the scope to tree traversal used for building up the string representation of the plan, which is where we see the issue. I do not see any other uses for `TreeNode.innerChildren`. I did not clone any earlier because the caching objects have mutable state that I wanted to avoid touching to be extra safe. Another solution I tried was to modify `InMemoryRelation.clone` to create a new `CachedRDDBuilder` and pass in a cloned `cachedPlan`. I opted not to go with this approach because `CachedRDDBuilder` has mutable state that needs to be moved to the new object and I didn't want to add that complexity if not needed. ### Why are the changes needed? When caching is used the cached part of the SparkPlan is leaked to new clones of the plan. This leakage is an issue because if the TreeNode.tags are modified in one plan, it impacts the other plan. This is a correctness issue and a concurrency issue if the TreeNode.tags are set in different threads for the cloned plans. See the description of [SPARK-43157](https://issues.apache.org/jira/browse/SPARK-43157) for an example of the concurrency issue. ### Does this PR introduce _any_ user-facing change? Yes. It fixes a driver hanging issue the user can observe. ### How was this patch tested? Unit test added and I manually verified `Dataset.explain("formatted")` still had the expected output. 
```scala spark.range(10).cache.filter($"id" > 5).explain("formatted") == Physical Plan == * Filter (4) +- InMemoryTableScan (1) +- InMemoryRelation (2) +- * Range (3) (1) InMemoryTableScan Output [1]: [id#0L] Arguments: [id#0L], [(id#0L > 5)] (2) InMemoryRelation Arguments: [id#0L], CachedRDDBuilder(org.apache.spark.sql.execution.columnar.DefaultCachedBatchSerializer@418b946b,StorageLevel(disk, memory, deserialized, 1 replicas),*(1) Range (0, 10, step=1, splits=16) ,None), [id#0L ASC NULLS FIRST] (3) Range [codegen id : 1] Output [1]: [id#0L] Arguments: Range (0, 10, step=1, splits=Some(16)) (4) Filter [codegen id : 1] Input [1]: [id#0L] Condition : (id#0L > 5) ``` I also verified that `InMemoryRelation.innerChildren` is cloned when the entire plan is cloned. ```scala import org.apache.spark.sql.execution.SparkPlan import org.apache.spark.sql.execution.columnar.InMemoryTableScanExec import spark.implicits._ def findCacheOperator(plan: SparkPlan): Option[InMemoryTableScanExec] = { if (plan.isInstanceOf[InMemoryTableScanExec]) { Some(plan.asInstanceOf[InMemoryTableScanExec]) } else if (plan.children.isEmpty && plan.subqueries.isEmpty) { None } else { (plan.subqueries.flatMap(p => findCacheOperator(p)) ++ plan.children.flatMap(findCacheOperator)).headOption } } val df = spark.range(10).filter($"id" < 100).cache() val df1 = df.limit(1) val df2 = df.limit(1) // Get the cache operator (InMemoryTableScanExec) in each plan val plan1 = findCacheOperator(df1.queryExecution.executedPlan).get val plan2 = findCacheOperator(df2.queryExecution.executedPlan).get // Check if InMemoryTableScanExec references point to the same object println(plan1.eq(plan2)) // returns false // Check if InMemoryRelation references point to the same object println(plan1.relation.eq(plan2.relation)) // returns false // Check if the cached SparkPlan references point to the same object println(plan1.relation.innerChildren.head.eq(plan2.relation.innerChildren.head)) // returns false // This shows the issue is fixed ```
Closes #40812 from robreeves/roreeves/explain_util. Authored-by: Rob Reeves <roreeves@linkedin.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 5e5999538899732bf3cdd04b974f1abeb949ccd0) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 18 May 2023, 06:25:20 UTC |
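
The hazard behind this fix is generic. If two copies of a tree share a child object, mutable state on that child (here a `tags` dict) changed through one copy is visible through the other. The Python sketch below uses a hypothetical `Node` class, not Spark's `TreeNode`, to contrast a shallow copy that shares the child with a copy that clones it.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    children: List["Node"] = field(default_factory=list)
    tags: Dict[str, str] = field(default_factory=dict)


def build() -> Node:
    # A relation node that wraps a cached plan node.
    return Node("InMemoryRelation", children=[Node("Range")])


# Shallow copies share the same child object: a tag set via one leaks to the other.
relation = build()
shared1, shared2 = copy.copy(relation), copy.copy(relation)
shared1.children[0].tags["explain_id"] = "1"
print(shared2.children[0].tags)  # {'explain_id': '1'} (leaked)

# Cloning the child for each copy keeps tag updates isolated.
relation = build()
iso1, iso2 = copy.deepcopy(relation), copy.deepcopy(relation)
iso1.children[0].tags["explain_id"] = "1"
print(iso2.children[0].tags)  # {}
```

The commit applies the same idea narrowly: it clones only the cached plan exposed through `innerChildren` rather than deep-copying everything.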

| b0f5a7a | itholic | 18 May 2023, 03:04:51 UTC | [SPARK-43547][3.4][PS][DOCS] Update "Supported Pandas API" page to point out the proper pandas docs ### What changes were proposed in this pull request? This PR proposes to fix the [Supported pandas API](https://spark.apache.org/docs/latest/api/python/user_guide/pandas_on_spark/supported_pandas_api.html#supported-pandas-api) page to point to the proper pandas version. ### Why are the changes needed? Currently we support pandas 1.5, but the page says we support the latest pandas, which is 2.0.1. ### Does this PR introduce _any_ user-facing change? No, it's a documentation change. ### How was this patch tested? The existing CI should pass. Closes #41208 from itholic/update_supported_api. Authored-by: itholic <haejoon.lee@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 18 May 2023, 03:04:51 UTC |

| f89e520 | itholic | 18 May 2023, 03:02:26 UTC | [SPARK-42826][3.4][FOLLOWUP][PS][DOCS] Update migration notes for pandas API on Spark ### What changes were proposed in this pull request? This is a follow-up to https://github.com/apache/spark/pull/40459 to fix incorrect information and to describe the changes in more detail. - We do not fully support pandas 2.0.0, so the statement "Pandas API on Spark follows for the pandas 2.0" is not correct. - We should list all the APIs that no longer support the `inplace` parameter. ### Why are the changes needed? For the correctness of the migration notes. ### Does this PR introduce _any_ user-facing change? No, only updating migration notes. ### How was this patch tested? The existing CI should pass Closes #41207 from itholic/migration_guide_followup. Authored-by: itholic <haejoon.lee@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 18 May 2023, 03:02:26 UTC |

| 7b148f0 | Ruifeng Zheng | 16 May 2023, 23:31:28 UTC | [SPARK-43527][PYTHON] Fix `catalog.listCatalogs` in PySpark ### What changes were proposed in this pull request? Fix `catalog.listCatalogs` in PySpark ### Why are the changes needed? The existing implementation outputs incorrect results. ### Does this PR introduce _any_ user-facing change? Yes. Before this PR: ``` In [1]: spark.catalog.listCatalogs() Out[1]: [CatalogMetadata(name=<py4j.java_gateway.JavaMember object at 0x1031f08b0>, description=<py4j.java_gateway.JavaMember object at 0x1049ac2e0>)] ``` After this PR: ``` In [1]: spark.catalog.listCatalogs() Out[1]: [CatalogMetadata(name='spark_catalog', description=None)] ``` ### How was this patch tested? Added a doctest. Closes #41186 from zhengruifeng/py_list_catalog. Authored-by: Ruifeng Zheng <ruifengz@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit a232083f50ddfdc81f2027fd3ffa89dfaa3ba199) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 16 May 2023, 23:31:36 UTC |
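
The before/after output in this commit shows the classic symptom of storing a method object instead of calling it. The sketch below reproduces that symptom. `FakeJavaCatalog` is a made-up stand-in for the py4j proxy (in PySpark the real object exposes its fields as Java methods), and the exact shape of the real fix is an assumption here.

```python
from typing import NamedTuple, Optional


class CatalogMetadata(NamedTuple):
    name: str
    description: Optional[str]


class FakeJavaCatalog:
    # Hypothetical stand-in for a py4j proxy whose fields are exposed as methods.
    def name(self) -> str:
        return "spark_catalog"

    def description(self) -> Optional[str]:
        return None


jcatalog = FakeJavaCatalog()

# Buggy: stores the bound methods themselves (NamedTuple does not type-check).
buggy = CatalogMetadata(name=jcatalog.name, description=jcatalog.description)
# Fixed: calls the methods to obtain the values.
fixed = CatalogMetadata(name=jcatalog.name(), description=jcatalog.description())

print(callable(buggy.name))  # True
print(fixed)                 # CatalogMetadata(name='spark_catalog', description=None)
```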

| f68ece9 | Xingbo Jiang | 16 May 2023, 18:34:30 UTC | [SPARK-43043][CORE] Improve the performance of MapOutputTracker.updateMapOutput ### What changes were proposed in this pull request? The PR changes the implementation of MapOutputTracker.updateMapOutput() to look up the MapStatus using a mapping from mapId to mapIndex. Previously it performed a linear search, which could become a performance bottleneck if a large proportion of all blocks in the map are migrated. ### Why are the changes needed? To avoid a performance bottleneck when block decommissioning is enabled and many blocks are migrated within a short time window. ### Does this PR introduce _any_ user-facing change? No, it's a pure performance improvement. ### How was this patch tested? Manually tested. Closes #40690 from jiangxb1987/SPARK-43043. Lead-authored-by: Xingbo Jiang <xingbo.jiang@databricks.com> Co-authored-by: Jiang Xingbo <jiangxb1987@gmail.com> Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com> (cherry picked from commit 66a2eb8f8957c22c69519b39be59beaaf931822b) Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com> | 16 May 2023, 18:34:57 UTC |
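
Here is a Python sketch of the data-structure change described in this commit. The class and field names are hypothetical, and this is not `MapOutputTracker`'s code. An auxiliary `map_id -> map_index` dict kept alongside the status array turns each update from an O(n) scan into an O(1) lookup.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class MapStatus:
    map_id: int
    location: str


class ShuffleStatus:
    def __init__(self, num_maps: int) -> None:
        self.statuses: List[Optional[MapStatus]] = [None] * num_maps
        self.map_id_to_index: Dict[int, int] = {}

    def add(self, map_index: int, status: MapStatus) -> None:
        # Keep the index in sync with the array on every registration.
        self.statuses[map_index] = status
        self.map_id_to_index[status.map_id] = map_index

    def update_linear(self, map_id: int, new_location: str) -> bool:
        # Old approach: scan every slot until the mapId matches.
        for status in self.statuses:
            if status is not None and status.map_id == map_id:
                status.location = new_location
                return True
        return False

    def update_indexed(self, map_id: int, new_location: str) -> bool:
        # New approach: jump straight to the slot via the mapId index.
        index = self.map_id_to_index.get(map_id)
        if index is None or self.statuses[index] is None:
            return False
        self.statuses[index].location = new_location
        return True


shuffle = ShuffleStatus(3)
for idx, mid in enumerate([100, 101, 102]):
    shuffle.add(idx, MapStatus(mid, "exec-1"))
print(shuffle.update_indexed(101, "exec-9"))  # True
print(shuffle.statuses[1].location)           # exec-9
```

The trade-off is extra memory plus the obligation to update the dict wherever statuses are added or removed. That cost is small compared with repeated full scans when many blocks migrate at once.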

| 792680e | Hyukjin Kwon | 16 May 2023, 02:16:27 UTC | [SPARK-43517][PYTHON][DOCS] Add a migration guide for namedtuple monkey patch ### What changes were proposed in this pull request? This PR proposes to add a migration guide for https://github.com/apache/spark/pull/38700. ### Why are the changes needed? To guide users about the workaround of bringing the namedtuple patch back. ### Does this PR introduce _any_ user-facing change? Yes, it adds the migration guides for end-users. ### How was this patch tested? CI in this PR will test it out. Closes #41177 from HyukjinKwon/update-migration-namedtuple. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 23c072d2a0ef046f45893d9a13f5788e6ec09ea5) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 16 May 2023, 02:16:36 UTC |

| ee12c81 | ulysses-you | 16 May 2023, 01:42:20 UTC | [SPARK-43281][SQL] Fix concurrent writer does not update file metrics ### What changes were proposed in this pull request? `DynamicPartitionDataConcurrentWriter` uses the temp file path to get the file status after the task is committed. However, the temp file has already been moved to its new path during the task commit. This PR calls `closeFile` before committing the task. ### Why are the changes needed? To fix a bug. ### Does this PR introduce _any_ user-facing change? Yes, after this PR the metrics are correct. ### How was this patch tested? Added a test. Closes #40952 from ulysses-you/SPARK-43281. Authored-by: ulysses-you <ulyssesyou18@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 592e92262246a6345096655270e2ca114934d0eb) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 16 May 2023, 01:42:37 UTC |
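
The ordering problem in this commit can be shown with a small local-filesystem illustration. It is not Spark's writer code, and the paths and function name are hypothetical. Once a "commit" moves the temp file, a later `stat` on the temp path fails, so file metrics have to be collected before the move.

```python
import os
import shutil
import tempfile
from typing import Optional


def write_and_commit(stat_before_commit: bool) -> Optional[int]:
    with tempfile.TemporaryDirectory() as root:
        temp_path = os.path.join(root, "_temporary", "part-00000")
        final_path = os.path.join(root, "part-00000")
        os.makedirs(os.path.dirname(temp_path))
        with open(temp_path, "wb") as f:  # file is closed when the block exits
            f.write(b"x" * 128)

        size = None
        if stat_before_commit:
            # Fixed order: record metrics while the temp file still exists.
            size = os.stat(temp_path).st_size
        shutil.move(temp_path, final_path)  # "commit task": the temp file moves away
        if not stat_before_commit:
            # Buggy order: the temp path no longer exists after the commit.
            try:
                size = os.stat(temp_path).st_size
            except FileNotFoundError:
                size = None  # the metric is lost
        return size


print(write_and_commit(stat_before_commit=True))   # 128
print(write_and_commit(stat_before_commit=False))  # None
```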

| cacaed8 | Jiaan Geng | 15 May 2023, 12:54:55 UTC | [SPARK-43483][SQL][DOCS] Adds SQL references for OFFSET clause ### What changes were proposed in this pull request? Spark 3.4.0 introduced new syntax, the `OFFSET` clause, but the SQL reference was missing a description of it. ### Why are the changes needed? To add a SQL reference for the `OFFSET` clause. ### Does this PR introduce _any_ user-facing change? Yes. Users can find the SQL reference for the `OFFSET` clause. ### How was this patch tested? Manual verification. Closes #41151 from beliefer/SPARK-43483. Authored-by: Jiaan Geng <beliefer@163.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 15 May 2023, 13:06:57 UTC |
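
For quick reference, here are the usual semantics behind the clause being documented. `OFFSET n` skips the first `n` rows of the (ordered) result, and combined with `LIMIT m` it returns at most `m` of the remaining rows. The list-slicing sketch below uses a hypothetical helper, `limit_offset`, and is meant as an illustration rather than a statement of Spark's implementation.

```python
from typing import List, Optional, TypeVar

T = TypeVar("T")


def limit_offset(rows: List[T], limit: Optional[int] = None, offset: int = 0) -> List[T]:
    # Skip `offset` rows first, then keep at most `limit` of what remains.
    remaining = rows[offset:]
    return remaining if limit is None else remaining[:limit]


rows = [10, 20, 30, 40, 50]                   # assume this is already ORDER BY'd
print(limit_offset(rows, offset=2))           # [30, 40, 50]
print(limit_offset(rows, limit=2, offset=1))  # [20, 30]
```

Without an `ORDER BY`, which rows are skipped is not deterministic, which is why the sketch assumes an ordered input.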

| 6e44365 | Dongjoon Hyun | 11 May 2023, 22:30:04 UTC | [SPARK-43471][CORE] Handle missing hadoopProperties and metricsProperties ### What changes were proposed in this pull request? This PR aims to handle a corner case where `hadoopProperties` and `metricsProperties` are null, which means they were not loaded. ### Why are the changes needed? To prevent an NPE. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs with the newly added test suite. Closes #41145 from TQJADE/SPARK-43471. Lead-authored-by: Dongjoon Hyun <dongjoon@apache.org> Co-authored-by: Qi Tan <qi_tan@apple.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 1dba7b803ecffd09c544009b79a6a3219f56d4e0) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 11 May 2023, 22:30:23 UTC |

| 8c4ff5b | Qi Tan | 11 May 2023, 04:19:54 UTC | [SPARK-43441][CORE] `makeDotNode` should not fail when DeterministicLevel is absent ### What changes were proposed in this pull request? This PR aims to make the `makeDotNode` method handle a missing `DeterministicLevel`. ### Why are the changes needed? Some data generated by older Spark versions does not have that field. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs with a newly added test case. Closes #41124 from TQJADE/SPARK-43441. Lead-authored-by: Qi Tan <qi_tan@apple.com> Co-authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit cfd42355ccbdf34ca9bd5a125c4310b9da14100c) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 11 May 2023, 04:20:04 UTC |

| 95af6f6 | Fokko Driesprong | 11 May 2023, 00:44:39 UTC | [SPARK-43425][SQL][3.4] Add `TimestampNTZType` to `ColumnarBatchRow` ### What changes were proposed in this pull request? Noticed this one was missing when implementing `TimestampNTZType` in Iceberg. ### Why are the changes needed? To be able to read `TimestampNTZType` using the `ColumnarBatchRow`. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Closes #41103 from Fokko/fd-add-timestampntz. Authored-by: Fokko Driesprong <fokko@tabular.io> Closes #41112 from Fokko/fd-backport. Authored-by: Fokko Driesprong <fokko@tabular.io> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 11 May 2023, 00:44:39 UTC |

| 10fd9b4 | Josh Rosen | 08 May 2023, 23:06:15 UTC | [SPARK-43414][TESTS] Fix flakiness in Kafka RDD suites due to port binding configuration issue ### What changes were proposed in this pull request? This PR addresses a test flakiness issue in Kafka connector RDD suites. https://github.com/apache/spark/pull/34089#pullrequestreview-872739182 (Spark 3.4.0) upgraded Spark to Kafka 3.1.0, which requires a different configuration key for configuring the broker listening port. That PR updated the `KafkaTestUtils.scala` used in SQL tests, but there's a near-duplicate of that code in a different `KafkaTestUtils.scala` used by RDD API suites which wasn't updated. As a result, the RDD suites began using Kafka's default port 9092 and this results in flakiness as multiple concurrent suites hit port conflicts when trying to bind to that default port. This PR fixes that by simply copying the updated configuration from the SQL copy of `KafkaTestUtils.scala`. ### Why are the changes needed? Fix test flakiness due to port conflicts. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Ran 20 concurrent copies of `org.apache.spark.streaming.kafka010.JavaKafkaRDDSuite` in my CI environment and confirmed that this PR's changes resolve the test flakiness. Closes #41095 from JoshRosen/update-kafka-test-utils-to-fix-port-binding-flakiness. Authored-by: Josh Rosen <joshrosen@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 175fcfd2106652baff53fdfb1be84638a4f6a9c9) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 08 May 2023, 23:06:22 UTC |
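
The port conflict described in SPARK-43414 happens whenever several processes try to bind the same fixed port. A common way to avoid it in tests is to bind to port 0 and let the operating system pick a free ephemeral port. The minimal Python sketch below uses plain sockets, not Kafka or `KafkaTestUtils`, and the helper names are hypothetical; whether the updated Spark test configuration relies on port 0 is an assumption not stated in the commit. It shows that two listeners on port 0 receive distinct ports, while a second bind to a port that is already in use fails.

```python
import errno
import socket


def listen_on(port: int, host: str = "127.0.0.1") -> socket.socket:
    """Open a listening TCP socket; port 0 asks the OS for any free port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((host, port))
        sock.listen()
    except OSError:
        sock.close()
        raise
    return sock


def bind_conflicts(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if binding another listener to `port` fails with EADDRINUSE."""
    try:
        listen_on(port, host).close()
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            return True
        raise
    return False


if __name__ == "__main__":
    # Two "concurrent suites" that each ask the OS for an ephemeral port.
    first, second = listen_on(0), listen_on(0)
    try:
        p1, p2 = first.getsockname()[1], second.getsockname()[1]
        print("ephemeral ports:", p1, p2)
        # A listener hard-coded to an in-use port collides, as the default 9092 did.
        print("fixed-port conflict:", bind_conflicts(p1))
    finally:
        first.close()
        second.close()
```

Binding to port 0 trades a predictable port for guaranteed uniqueness, so a test harness using this approach has to read the assigned port back (here via `getsockname()`) before clients connect.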

| 6662441 | Qian.Sun | 07 May 2023, 01:26:49 UTC | [SPARK-43342][K8S] Revert SPARK-39006 Show a directional error message for executor PVC dynamic allocation failure ### What changes were proposed in this pull request? This reverts commit b065c945fe27dd5869b39bfeaad8e2b23a8835b5. ### Why are the changes needed? To remove the regression from SPARK-39006. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs. Closes #41057 Closes #41069 from dongjoon-hyun/SPARK-43342. Authored-by: Qian.Sun <qian.sun2020@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 3ba1fa3678a4fcc0aaba8abb0d4312e8fb42efba) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 07 May 2023, 01:26:56 UTC |

| cd2a6f3 | Cheng Pan | 06 May 2023, 14:37:44 UTC | [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh ### What changes were proposed in this pull request? Add args `--no-mac-metadata --no-xattrs --no-fflags` to `tar` on macOS in `dev/make-distribution.sh` to exclude macOS-specific extended metadata. ### Why are the changes needed? The binary tarball created on macOS includes extended macOS-specific metadata and xattrs, which causes warnings when unarchiving it on Linux. Steps to reproduce: 1. Create a tarball on macOS (13.3.1) ``` ➜ apache-spark git:(master) tar --version bsdtar 3.5.3 - libarchive 3.5.3 zlib/1.2.11 liblzma/5.0.5 bz2lib/1.0.8 ``` ``` ➜ apache-spark git:(master) dev/make-distribution.sh --tgz ``` 2. Unarchive the binary tarball on Linux (CentOS-7) ``` ➜ ~ tar --version tar (GNU tar) 1.26 Copyright (C) 2011 Free Software Foundation, Inc. License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>. This is free software: you are free to change and redistribute it. There is NO WARRANTY, to the extent permitted by law. Written by John Gilmore and Jay Fenlason. ``` ``` ➜ ~ tar -xzf spark-3.5.0-SNAPSHOT-bin-3.3.5.tgz tar: Ignoring unknown extended header keyword `SCHILY.fflags' tar: Ignoring unknown extended header keyword `LIBARCHIVE.xattr.com.apple.FinderInfo' ``` ### Does this PR introduce _any_ user-facing change? No, dev only. ### How was this patch tested? Created a binary tarball on macOS, then unarchived it on Linux; the warnings disappear after this change. Closes #41074 from pan3793/SPARK-43395. Authored-by: Cheng Pan <chengpan@apache.org> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit 2d0240df3c474902e263f67b93fb497ca13da00f) Signed-off-by: Sean Owen <srowen@gmail.com> | 06 May 2023, 14:38:07 UTC |

| 73437ce | Kent Yao | 05 May 2023, 23:07:00 UTC | [SPARK-43374][INFRA] Move protobuf-java to BSD 3-clause group and update the license copy ### What changes were proposed in this pull request? protobuf-java is licensed under the BSD 3-clause license, not the BSD 2-clause license we claimed. The license copy should also be updated to match https://github.com/protocolbuffers/protobuf/commit/9e080f7ac007b75dacbd233b214e5c0cb2e48e0f ### Why are the changes needed? Fix a license issue. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing tests. Closes #41043 from yaooqinn/SPARK-43374. Authored-by: Kent Yao <yao@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 4351d414ab03643fdb6ec18b8e8a4ac718a9a2b2) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 05 May 2023, 23:07:13 UTC |

| 0cc21ba | Ahmed Hussein | 05 May 2023, 23:00:30 UTC | [SPARK-43340][CORE] Handle missing stack-trace field in eventlogs This PR fixes a regression introduced by #36885, which broke JsonProtocol's ability to handle a missing field in the exception object. Old event logs missing a `Stack Trace` field raise an NPE. As a result, the SHS misinterprets failed jobs/SQLs as `Active/Incomplete`. This PR solves this problem by checking the JsonNode for null; if it is null, an empty array of `StackTraceElements` is used. Fix a case which prevents the history server from identifying failed jobs if the stack trace was not set. Example event log: ``` { "Event":"SparkListenerJobEnd", "Job ID":31, "Completion Time":1616171909785, "Job Result":{ "Result":"JobFailed", "Exception":{ "Message":"Job aborted" } } } ``` **Original behavior:** The job is marked as `incomplete`. Error from the SHS logs: ``` 23/05/01 21:57:16 INFO FsHistoryProvider: Parsing file:/tmp/nds_q86_fail_test to re-build UI... 23/05/01 21:57:17 ERROR ReplayListenerBus: Exception parsing Spark event log: file:/tmp/nds_q86_fail_test java.lang.NullPointerException at org.apache.spark.util.JsonProtocol$JsonNodeImplicits.extractElements(JsonProtocol.scala:1589) at org.apache.spark.util.JsonProtocol$.stackTraceFromJson(JsonProtocol.scala:1558) at org.apache.spark.util.JsonProtocol$.exceptionFromJson(JsonProtocol.scala:1569) at org.apache.spark.util.JsonProtocol$.jobResultFromJson(JsonProtocol.scala:1423) at org.apache.spark.util.JsonProtocol$.jobEndFromJson(JsonProtocol.scala:967) at org.apache.spark.util.JsonProtocol$.sparkEventFromJson(JsonProtocol.scala:878) at org.apache.spark.util.JsonProtocol$.sparkEventFromJson(JsonProtocol.scala:865) .... 23/05/01 21:57:17 ERROR ReplayListenerBus: Malformed line #24368: {"Event":"SparkListenerJobEnd","Job ID":31,"Completion Time":1616171909785,"Job Result":{"Result":"JobFailed","Exception": {"Message":"Job aborted"} }} ``` **After the fix:** Job 31 is marked as `failedJob`. No. Added a new unit test in JsonProtocolSuite. Closes #41050 from amahussein/aspark-43340-b. Authored-by: Ahmed Hussein <ahussein@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit dcd710d3e12f6cc540cea2b8c747bb6b61254504) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 05 May 2023, 23:01:56 UTC |
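
The SPARK-43340 fix comes down to treating an absent `Stack Trace` field as an empty list instead of dereferencing null. The Python sketch below uses the standard-library `json` module, not Spark's Scala `JsonProtocol`, and its function names are hypothetical. It parses the example `SparkListenerJobEnd` event from the commit message and applies the same defensive default.

```python
import json

# Event from the commit message: the "Exception" object has no "Stack Trace" key.
EVENT_LINE = (
    '{"Event":"SparkListenerJobEnd","Job ID":31,"Completion Time":1616171909785,'
    '"Job Result":{"Result":"JobFailed","Exception":{"Message":"Job aborted"}}}'
)


def stack_trace_from_json(exception_json: dict) -> list:
    """Return the stack frames, treating a missing or null field as empty."""
    frames = exception_json.get("Stack Trace")
    if frames is None:
        # Older event logs may omit the field entirely; do not fail on them.
        return []
    return list(frames)


def job_result_from_json(event: dict) -> tuple:
    """Return (result name, exception message, stack frames) for a job-end event."""
    result = event["Job Result"]
    exception = result.get("Exception", {})
    return (
        result["Result"],
        exception.get("Message"),
        stack_trace_from_json(exception),
    )


if __name__ == "__main__":
    event = json.loads(EVENT_LINE)
    print(job_result_from_json(event))  # ('JobFailed', 'Job aborted', [])
```

Because the missing field now yields an empty list, the event still parses as a failed job rather than aborting the replay, which is the behavior difference the commit describes for the history server.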

| e04025e | Maytas Monsereenusorn | 05 May 2023, 15:25:46 UTC | [SPARK-43337][UI][3.4] Asc/desc arrow icons for sorting column does not get displayed in the table column ### What changes were proposed in this pull request? Remove the CSS `!important` rule for the asc/desc arrow icons in jquery.dataTables.1.10.25.min.css. ### Why are the changes needed? Upgrading to DataTables 1.10.25 broke the asc/desc arrow icons for the sorting column. The sorting icon is not displayed when the column is clicked to sort by asc/desc. This is because the new DataTables 1.10.25's jquery.dataTables.1.10.25.min.css file added an `!important` rule that prevents the override set in webui-dataTables.css. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual test. Closes #41061 from maytasm/fix-arrow-4. Authored-by: Maytas Monsereenusorn <maytasm@apache.org> Signed-off-by: Sean Owen <srowen@gmail.com> | 05 May 2023, 15:25:46 UTC |

| b35e42f | David Lewis | 05 May 2023, 08:50:07 UTC | [SPARK-43284][SQL][FOLLOWUP] Return URI encoded path, and add a test Return URI encoded path, and add a test. Fix a regression in Spark 3.4. Yes, fixes a regression in `_metadata.file_path`. New explicit test. Closes #41054 from databricks-david-lewis/SPARK-43284-2. Lead-authored-by: David Lewis <david.lewis@databricks.com> Co-authored-by: Wenchen Fan <cloud0fan@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 79d5d908e5dd7d6ab6755c46d4de3ed2fcdf9e6b) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 05 May 2023, 08:57:52 UTC |

| 8c6442f | David Lewis | 05 May 2023, 02:30:44 UTC | [SPARK-43284] Switch back to url-encoded strings Update `_metadata.file_path` and `_metadata.file_name` to return url-encoded strings, rather than un-encoded strings. Returning un-encoded strings was a regression introduced in Spark 3.4.0, an inadvertent behavior change. Yes, fix regression! New test added to validate that the `file_path` and `file_name` are returned as encoded strings. Closes #40947 from databricks-david-lewis/SPARK-43284. Authored-by: David Lewis <david.lewis@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 1a777967ed109b0838793588c330a2e404627fb1) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 05 May 2023, 02:56:44 UTC |
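
To make the encoded versus un-encoded distinction in SPARK-43284 concrete, the Python sketch below contrasts a URL-encoded file URI with its decoded form using `urllib.parse`. The helper names and the `file://` prefix are illustrative assumptions; the sketch does not reproduce how Spark builds `_metadata.file_path` or `_metadata.file_name` internally.

```python
from urllib.parse import quote, unquote, urlsplit


def encoded_file_path(local_path: str) -> str:
    """Hypothetical helper: build a URL-encoded file URI from an absolute POSIX path."""
    # quote() keeps "/" by default and percent-encodes spaces, "%", and similar characters.
    return "file://" + quote(local_path)


def encoded_file_name(file_uri: str) -> str:
    """Hypothetical helper: the last path segment, left URL-encoded."""
    return urlsplit(file_uri).path.rsplit("/", 1)[-1]


if __name__ == "__main__":
    raw = "/tmp/dir with space/a%b.parquet"
    uri = encoded_file_path(raw)
    print(uri)                                 # file:///tmp/dir%20with%20space/a%25b.parquet
    print(encoded_file_name(uri))              # a%25b.parquet
    print(unquote(urlsplit(uri).path) == raw)  # True
```

The two forms differ only for paths containing characters such as spaces or `%`, which is why a switch between them can go unnoticed until such a path appears.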

| b51e860 | Emil Ejbyfeldt | 05 May 2023, 00:34:14 UTC | [SPARK-43378][CORE] Properly close stream objects in deserializeFromChunkedBuffer ### What changes were proposed in this pull request? Fixes an issue where `SerializerHelper.deserializeFromChunkedBuffer` does not call close on the deserialization stream. For some serializers, like Kryo, this creates a performance regression because the Kryo instances are not returned to the pool. ### Why are the changes needed? This causes a performance regression in Spark 3.4.0 for some workloads. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing tests. Closes #41049 from eejbyfeldt/SPARK-43378. Authored-by: Emil Ejbyfeldt <eejbyfeldt@liveintent.com> Signed-off-by: Sean Owen <srowen@gmail.com> (cherry picked from commit cb26ad88c522070c66e979ab1ab0f040cd1bdbe7) Signed-off-by: Sean Owen <srowen@gmail.com> | 05 May 2023, 00:34:22 UTC |
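
The SPARK-43378 regression follows a general pattern: a stream borrows a pooled, expensive-to-create object and returns it only in `close()`, so skipping `close()` forces the pool to keep building new instances. The Python sketch below models that pattern with a hypothetical `SerializerPool` and `DeserializationStream` (stand-ins, not Spark's or Kryo's classes) and uses `contextlib.closing` to guarantee the stream is closed.

```python
import io
import pickle
from contextlib import closing


class SerializerPool:
    """Hypothetical stand-in for a pool of reusable, expensive serializer instances."""

    def __init__(self) -> None:
        self.idle = []
        self.created = 0

    def borrow(self) -> object:
        if self.idle:
            return self.idle.pop()
        self.created += 1  # slow path: build a brand-new instance
        return object()

    def release(self, instance: object) -> None:
        self.idle.append(instance)


class DeserializationStream:
    """Borrows an instance when opened and hands it back only in close()."""

    def __init__(self, pool: SerializerPool, data: bytes) -> None:
        self._pool = pool
        self._instance = pool.borrow()
        self._buffer = io.BytesIO(data)

    def read_object(self):
        return pickle.load(self._buffer)

    def close(self) -> None:
        if self._instance is not None:
            self._pool.release(self._instance)
            self._instance = None


def deserialize_leaky(pool: SerializerPool, data: bytes):
    # Never calls close(), so the borrowed instance is never returned to the pool.
    return DeserializationStream(pool, data).read_object()


def deserialize(pool: SerializerPool, data: bytes):
    # closing() calls close() on exit, even if read_object() raises.
    with closing(DeserializationStream(pool, data)) as stream:
        return stream.read_object()
```

Running 100 deserializations through each variant, the leaky one makes the pool create 100 instances while the closing one reuses a single instance. The sketch only counts instance creations; it makes no claim about the actual cost in Spark.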