| da0b69f | Dongjoon Hyun | 10 January 2019, 08:40:21 UTC | [SPARK-22128][CORE][BUILD] Add `paranamer` dependency to `core` module ## What changes were proposed in this pull request? With Scala-2.12 profile, Spark application fails while Spark is okay. For example, our documented `SimpleApp` Java example succeeds to compile but it fails at runtime because it doesn't use `paranamer 2.8` and hits [SPARK-22128](https://issues.apache.org/jira/browse/SPARK-22128). This PR aims to declare it explicitly for the Spark applications. Note that this doesn't introduce new dependency to Spark itself. https://dist.apache.org/repos/dist/dev/spark/3.0.0-SNAPSHOT-2019_01_09_13_59-e853afb-docs/_site/quick-start.html The following is the dependency tree from the Spark application. **BEFORE** ``` $ mvn dependency:tree -Dincludes=com.thoughtworks.paranamer [INFO] --- maven-dependency-plugin:2.8:tree (default-cli) simple --- [INFO] my.test:simple:jar:1.0-SNAPSHOT [INFO] \- org.apache.spark:spark-sql_2.12:jar:3.0.0-SNAPSHOT:compile [INFO] \- org.apache.spark:spark-core_2.12:jar:3.0.0-SNAPSHOT:compile [INFO] \- org.apache.avro:avro:jar:1.8.2:compile [INFO] \- com.thoughtworks.paranamer:paranamer:jar:2.7:compile ``` **AFTER** ``` [INFO] --- maven-dependency-plugin:2.8:tree (default-cli) simple --- [INFO] my.test:simple:jar:1.0-SNAPSHOT [INFO] \- org.apache.spark:spark-sql_2.12:jar:3.0.0-SNAPSHOT:compile [INFO] \- org.apache.spark:spark-core_2.12:jar:3.0.0-SNAPSHOT:compile [INFO] \- com.thoughtworks.paranamer:paranamer:jar:2.8:compile ``` ## How was this patch tested? Pass the Jenkins. And manually test with the sample app is running. Closes #23502 from dongjoon-hyun/SPARK-26583. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit c7daa95d7f095500b416ba405660f98cd2a39727) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 10 January 2019, 08:40:36 UTC |

| 6277a9f | Gengliang Wang | 09 January 2019, 02:18:33 UTC | [SPARK-26571][SQL] Update Hive Serde mapping with canonical name of Parquet and Orc FileFormat ## What changes were proposed in this pull request? Currently Spark table maintains Hive catalog storage format, so that Hive client can read it. In `HiveSerDe.scala`, Spark uses a mapping from its data source to HiveSerde. The mapping is old, we need to update with latest canonical name of Parquet and Orc FileFormat. Otherwise the following queries will result in wrong Serde value in Hive table(default value `org.apache.hadoop.mapred.SequenceFileInputFormat`), and Hive client will fail to read the output table: ``` df.write.format("org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat").saveAsTable(..) ``` ``` df.write.format("org.apache.spark.sql.execution.datasources.orc.OrcFileFormat").saveAsTable(..) ``` This minor PR is to fix the mapping. ## How was this patch tested? Unit test. Closes #23491 from gengliangwang/fixHiveSerdeMap. Authored-by: Gengliang Wang <gengliang.wang@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 311f32f37fbeaebe9dfa0b8dc2a111ee99b583b7) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 09 January 2019, 02:55:11 UTC |
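
The mapping problem described above can be sketched as a plain lookup table. This is only an illustration, assuming that the short names and the canonical `FileFormat` class names should resolve to the same Hive SerDe; `SOURCE_TO_SERDE` and `serde_for` are hypothetical names, not the contents of `HiveSerDe.scala`.

```python
# Hypothetical sketch: several spellings of a data source resolve to one Hive SerDe.
PARQUET_SERDE = "org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe"
ORC_SERDE = "org.apache.hadoop.hive.ql.io.orc.OrcSerde"

_ALIASES = {
    "parquet": PARQUET_SERDE,
    "org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat": PARQUET_SERDE,
    "orc": ORC_SERDE,
    "org.apache.spark.sql.execution.datasources.orc.OrcFileFormat": ORC_SERDE,
}

# Keys are lower-cased once so that the lookup is case-insensitive.
SOURCE_TO_SERDE = {name.lower(): serde for name, serde in _ALIASES.items()}


def serde_for(source):
    """Return the SerDe class name for a source name, or None when it is unmapped."""
    return SOURCE_TO_SERDE.get(source.lower())
```

An unmapped name returns `None` here; in the scenario the commit describes, a missing entry is what led to the default `SequenceFileInputFormat` value.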

| 3ece0aa | Dongjoon Hyun | 08 January 2019, 01:54:05 UTC | [SPARK-26554][BUILD][FOLLOWUP] Use GitHub instead of GitBox to check HEADER ## What changes were proposed in this pull request? This PR uses GitHub repository instead of GitBox because GitHub repo returns HTTP header status correctly. ## How was this patch tested? Manual. ``` $ ./do-release-docker.sh -d /tmp/test -n Branch [branch-2.4]: Current branch version is 2.4.1-SNAPSHOT. Release [2.4.1]: RC # [1]: This is a dry run. Please confirm the ref that will be built for testing. Ref [v2.4.1-rc1]: ``` Closes #23482 from dongjoon-hyun/SPARK-26554-2. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 6f35ede31cc72a81e3852b1ac7454589d1897bfc) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 08 January 2019, 01:54:36 UTC |

| faa4c28 | Shixiong Zhu | 08 January 2019, 00:53:07 UTC | [SPARK-26267][SS] Retry when detecting incorrect offsets from Kafka (2.4) ## What changes were proposed in this pull request? Backport #23324 to branch-2.4. ## How was this patch tested? Jenkins Closes #23365 from zsxwing/SPARK-26267-2.4. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: Shixiong Zhu <zsxwing@gmail.com> | 08 January 2019, 00:53:07 UTC |

| b4202e7 | wuyi | 07 January 2019, 22:22:28 UTC | [SPARK-26269][YARN][BRANCH-2.4] Yarnallocator should have same blacklist behaviour with yarn to maxmize use of cluster resource ## What changes were proposed in this pull request? As mentioned in JIRA [SPARK-26269](https://issues.apache.org/jira/browse/SPARK-26269), in order to maximize the use of cluster resources, this PR makes `YarnAllocator` follow the same blacklist behaviour as YARN. ## How was this patch tested? Added. Closes #23368 from Ngone51/dev-YarnAllocator-should-have-same-blacklist-behaviour-with-YARN-branch-2.4. Lead-authored-by: wuyi <ngone_5451@163.com> Co-authored-by: Ngone51 <ngone_5451@163.com> Signed-off-by: Thomas Graves <tgraves@apache.org> | 07 January 2019, 22:22:28 UTC |

| cb1aad6 | Liang-Chi Hsieh | 07 January 2019, 10:36:52 UTC | [SPARK-26559][ML][PYSPARK] ML image can't work with numpy versions prior to 1.9 ## What changes were proposed in this pull request? Due to [API change](https://github.com/numpy/numpy/pull/4257/files#diff-c39521d89f7e61d6c0c445d93b62f7dc) at 1.9, PySpark image doesn't work with numpy version prior to 1.9. When running image test with numpy version prior to 1.9, we can see error: ``` test_read_images (pyspark.ml.tests.test_image.ImageReaderTest) ... ERROR test_read_images_multiple_times (pyspark.ml.tests.test_image.ImageReaderTest2) ... ok ====================================================================== ERROR: test_read_images (pyspark.ml.tests.test_image.ImageReaderTest) ---------------------------------------------------------------------- Traceback (most recent call last): File "/Users/viirya/docker_tmp/repos/spark-1/python/pyspark/ml/tests/test_image.py", line 36, in test_read_images self.assertEqual(ImageSchema.toImage(array, origin=first_row[0]), first_row) File "/Users/viirya/docker_tmp/repos/spark-1/python/pyspark/ml/image.py", line 193, in toImage data = bytearray(array.astype(dtype=np.uint8).ravel().tobytes()) AttributeError: 'numpy.ndarray' object has no attribute 'tobytes' ---------------------------------------------------------------------- Ran 2 tests in 29.040s FAILED (errors=1) ``` ## How was this patch tested? Manually test with numpy version prior and after 1.9. Closes #23484 from viirya/fix-pyspark-image. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit a927c764c1eee066efc1c2c713dfee411de79245) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 07 January 2019, 10:37:45 UTC |
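
One way to tolerate the missing `tobytes` attribute is to fall back to the older `tostring` method. The sketch below is not the patch itself; `to_bytes` and the `_OldArray` stand-in are hypothetical, and the stand-in exists only so the example runs without any particular NumPy version installed.

```python
def to_bytes(array):
    """Serialize an ndarray-like object, preferring `tobytes` (NumPy 1.9 and later)."""
    tobytes = getattr(array, "tobytes", None)
    if tobytes is not None:
        return bytearray(tobytes())
    # Older NumPy releases expose only `tostring`, which returns the same raw bytes.
    return bytearray(array.tostring())


class _OldArray(object):
    """Hypothetical stand-in for a pre-1.9 ndarray: it has `tostring` but no `tobytes`."""

    def __init__(self, raw):
        self._raw = raw

    def tostring(self):
        return self._raw


data = to_bytes(_OldArray(b"\x01\x02\x03"))
```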

| fe4c61c | Dongjoon Hyun | 07 January 2019, 06:45:18 UTC | [MINOR][BUILD] Fix script name in `release-tag.sh` usage message ## What changes were proposed in this pull request? This PR fixes the old script name in `release-tag.sh`. $ ./release-tag.sh --help | head -n1 usage: tag-release.sh ## How was this patch tested? Manual. $ ./release-tag.sh --help | head -n1 usage: release-tag.sh Closes #23477 from dongjoon-hyun/SPARK-RELEASE-TAG. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 468d25ec7419b4c55955ead877232aae5654260e) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 07 January 2019, 06:45:46 UTC |

| 0356ac7 | Dongjoon Hyun | 07 January 2019, 03:59:31 UTC | [SPARK-26554][BUILD] Update `release-util.sh` to avoid GitBox fake 200 headers ## What changes were proposed in this pull request? Unlike the previous Apache Git repository, new GitBox repository returns a fake HTTP 200 header instead of `404 Not Found` header. This makes release scripts out of order. This PR aims to fix it to handle the html body message instead of the fake HTTP headers. This is a release blocker. ```bash $ curl -s --head --fail "https://gitbox.apache.org/repos/asf?p=spark.git;a=commit;h=v3.0.0" HTTP/1.1 200 OK Date: Sun, 06 Jan 2019 22:42:39 GMT Server: Apache/2.4.18 (Ubuntu) Vary: Accept-Encoding Access-Control-Allow-Origin: * Access-Control-Allow-Methods: POST, GET, OPTIONS Access-Control-Allow-Headers: X-PINGOTHER Access-Control-Max-Age: 1728000 Content-Type: text/html; charset=utf-8 ``` **BEFORE** ```bash $ ./do-release-docker.sh -d /tmp/test -n Branch [branch-2.4]: Current branch version is 2.4.1-SNAPSHOT. Release [2.4.1]: RC # [1]: v2.4.1-rc1 already exists. Continue anyway [y/n]? ``` **AFTER** ```bash $ ./do-release-docker.sh -d /tmp/test -n Branch [branch-2.4]: Current branch version is 2.4.1-SNAPSHOT. Release [2.4.1]: RC # [1]: This is a dry run. Please confirm the ref that will be built for testing. Ref [v2.4.1-rc1]: ``` ## How was this patch tested? Manual. Closes #23476 from dongjoon-hyun/SPARK-26554. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit fe039faddf13c6a30f7aea69324aa4d4bb84c632) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 07 January 2019, 03:59:58 UTC |

| 0751e02 | Kris Mok | 05 January 2019, 22:37:04 UTC | [SPARK-26545] Fix typo in EqualNullSafe's truth table comment ## What changes were proposed in this pull request? The truth table comment in EqualNullSafe incorrectly marked FALSE results as UNKNOWN. ## How was this patch tested? N/A Closes #23461 from rednaxelafx/fix-typo. Authored-by: Kris Mok <kris.mok@databricks.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit 4ab5b5b9185f60f671d90d94732d0d784afa5f84) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 05 January 2019, 22:37:45 UTC |
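
For reference, the null-safe equality semantics that the corrected comment documents can be sketched as follows, modelling SQL NULL as Python `None`. The function name is illustrative only.

```python
def equal_null_safe(left, right):
    """Null-safe equality (SQL `<=>`): the result is always True or False, never NULL."""
    if left is None and right is None:
        return True   # NULL <=> NULL is TRUE
    if left is None or right is None:
        return False  # exactly one side is NULL: FALSE, not UNKNOWN
    return left == right
```

The two branches that handle `None` are the rows where ordinary `=` would yield UNKNOWN.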

| 46a88d2 | shane knapp | 05 January 2019, 02:27:26 UTC | [SPARK-26537][BUILD] change git-wip-us to gitbox ## What changes were proposed in this pull request? Because Apache recently moved from git-wip-us.apache.org to gitbox.apache.org, we need to update the packaging scripts to point to the new repo location. This also needs to be backported to 2.4, 2.3, 2.1, 2.0 and 1.6. ## How was this patch tested? The build system will test this. Closes #23454 from shaneknapp/update-apache-repo. Authored-by: shane knapp <incomplete@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit bccb8602d7bc78894689e9b2e5fe685763d32d23) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 05 January 2019, 02:27:58 UTC |

| 977d86e | Marco Gaido | 04 January 2019, 22:53:20 UTC | [SPARK-26078][SQL][BACKPORT-2.4] Dedup self-join attributes on IN subqueries ## What changes were proposed in this pull request? When an IN subquery results in a self-join, the join condition may be invalid, producing trivially true predicates and returning wrong results. The PR deduplicates the subquery output in order to avoid the issue. ## How was this patch tested? Added UT. Closes #23449 from mgaido91/SPARK-26078_2.4. Authored-by: Marco Gaido <marcogaido91@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 04 January 2019, 22:53:20 UTC |

| c0f4082 | Dongjoon Hyun | 04 January 2019, 04:01:19 UTC | [MINOR][NETWORK][TEST] Fix TransportFrameDecoderSuite to use ByteBuf instead of ByteBuffer ## What changes were proposed in this pull request? `fireChannelRead` expects `io.netty.buffer.ByteBuf`. I checked that this is the only place in the `network` module that misuses `java.nio.ByteBuffer`. ## How was this patch tested? Pass the Jenkins with the existing tests. Closes #23442 from dongjoon-hyun/SPARK-NETWORK-COMMON. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 27e42c1de502da80fa3e22bb69de47fb00158174) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 04 January 2019, 04:01:49 UTC |

| bd6e570 | Liupengcheng | 03 January 2019, 16:26:14 UTC | [SPARK-26501][CORE][TEST] Fix unexpected overriden of exitFn in SparkSubmitSuite ## What changes were proposed in this pull request? Overriding SparkSubmit's `exitFn` in earlier tests in SparkSubmitSuite may cause later tests to pass even though they fail when run separately. This PR fixes the problem. ## How was this patch tested? Unit test. Closes #23404 from liupc/Fix-SparkSubmitSuite-exitFn. Authored-by: Liupengcheng <liupengcheng@xiaomi.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 88b074f3f06ddd236d63e8bf31edebe1d3e94fe4) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 03 January 2019, 16:26:42 UTC |

| 1e99f4e | Imran Rashid | 03 January 2019, 03:10:55 UTC | [SPARK-26019][PYSPARK] Allow insecure py4j gateways Spark always creates secure py4j connections between java and python, but it also allows users to pass in their own connection. This restores the ability for users to pass in an _insecure_ connection, though it forces them to set the env variable 'PYSPARK_ALLOW_INSECURE_GATEWAY=1', and still issues a warning. Added test cases verifying the failure without the extra configuration, and verifying things still work with an insecure configuration (in particular, accumulators, as those were broken with an insecure py4j gateway before). For the tests, I added ways to create insecure gateways, but I tried to put in protections to make sure that wouldn't get used incorrectly. Closes #23337 from squito/SPARK-26019. Authored-by: Imran Rashid <irashid@cloudera.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 03 January 2019, 03:10:55 UTC |
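
The opt-in behaviour described above (fail by default, warn when the environment variable is set) might look roughly like this. It is a minimal sketch under stated assumptions: `check_gateway_allowed` and its parameters are hypothetical, and only the variable name `PYSPARK_ALLOW_INSECURE_GATEWAY` and the value `1` come from the commit message.

```python
import warnings


def check_gateway_allowed(has_auth_token, env):
    """Reject an unauthenticated gateway unless the user has explicitly opted in.

    `has_auth_token` and `env` (a dict of environment variables) are illustrative
    parameters, not PySpark's actual signature.
    """
    if has_auth_token:
        return  # secure gateway: nothing to check
    if env.get("PYSPARK_ALLOW_INSECURE_GATEWAY", "0") == "1":
        # Opt-in given: proceed, but still warn as the commit message describes.
        warnings.warn("Using an insecure py4j gateway; this is a security risk.")
        return
    raise ValueError(
        "Insecure py4j gateway refused; set PYSPARK_ALLOW_INSECURE_GATEWAY=1 to allow it."
    )
```

Passing the environment as a dictionary keeps the sketch testable without touching `os.environ`.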

| 1802124 | Hyukjin Kwon | 29 December 2018, 20:11:45 UTC | [SPARK-26496][SS][TEST] Avoid to use Random.nextString in StreamingInnerJoinSuite ## What changes were proposed in this pull request? Similar with https://github.com/apache/spark/pull/21446. Looks random string is not quite safe as a directory name. ```scala scala> val prefix = Random.nextString(10); val dir = new File("/tmp", "del_" + prefix + "-" + UUID.randomUUID.toString); dir.mkdirs() prefix: String = 窽텘⒘駖ⵚ駢⡞Ρ닋 dir: java.io.File = /tmp/del_窽텘⒘駖ⵚ駢⡞Ρ닋-a3f99855-c429-47a0-a108-47bca6905745 res40: Boolean = false // nope, didn't like this one ``` ## How was this patch tested? Unit test was added, and manually. Closes #23405 from HyukjinKwon/SPARK-26496. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit e63243df8aca9f44255879e931e0c372beef9fc2) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 29 December 2018, 20:12:13 UTC |
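
A directory name built only from ASCII letters and digits avoids the failure shown in the REPL session above. The following is an illustrative sketch, not the change made to `StreamingInnerJoinSuite`; `safe_dir_name` is a hypothetical helper.

```python
import random
import string
import uuid


def safe_dir_name(prefix_len=10, rng=random):
    """Build a directory name whose random prefix uses only ASCII letters and digits."""
    alphabet = string.ascii_letters + string.digits
    prefix = "".join(rng.choice(alphabet) for _ in range(prefix_len))
    # The UUID suffix keeps names unique even if two prefixes collide.
    return "del_{}-{}".format(prefix, uuid.uuid4())


name = safe_dir_name()
```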

| 4fb3f6d | seancxmao | 28 December 2018, 13:40:59 UTC | [SPARK-26444][WEBUI] Stage color doesn't change with it's status ## What changes were proposed in this pull request? On job page, in event timeline section, stage color doesn't change according to its status. Below are some screenshots. ACTIVE: <img width="550" alt="active" src="https://user-images.githubusercontent.com/12194089/50438844-c763e580-092a-11e9-84f6-6fc30e08d69b.png"> COMPLETE: <img width="516" alt="complete" src="https://user-images.githubusercontent.com/12194089/50438847-ca5ed600-092a-11e9-9d2e-5d79807bc1ce.png"> FAILED: <img width="325" alt="failed" src="https://user-images.githubusercontent.com/12194089/50438852-ccc13000-092a-11e9-9b6b-782b96b283b1.png"> This PR lets stage color change with it's status. The main idea is to make css style class name match the corresponding stage status. ## How was this patch tested? Manually tested locally. ``` // active/complete stage sc.parallelize(1 to 3, 3).map { n => Thread.sleep(10* 1000); n }.count // failed stage sc.parallelize(1 to 3, 3).map { n => Thread.sleep(10* 1000); throw new Exception() }.count ``` Note we need to clear browser cache to let new `timeline-view.css` take effect. Below are screenshots after this PR. ACTIVE: <img width="569" alt="active-after" src="https://user-images.githubusercontent.com/12194089/50439986-08f68f80-092f-11e9-85d9-be1c31aed13b.png"> COMPLETE: <img width="567" alt="complete-after" src="https://user-images.githubusercontent.com/12194089/50439990-0bf18000-092f-11e9-8624-723958906e90.png"> FAILED: <img width="352" alt="failed-after" src="https://user-images.githubusercontent.com/12194089/50439993-101d9d80-092f-11e9-8dfd-3e20536f2fa5.png"> Closes #23385 from seancxmao/timeline-stage-color. 
Authored-by: seancxmao <seancxmao@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 5bef4fedfe1916320223b1245bacb58f151cee66) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 28 December 2018, 13:41:29 UTC |

| fa1abe2 | Wenchen Fan | 27 December 2018, 19:23:05 UTC | Revert [SPARK-26021][SQL] replace minus zero with zero in Platform.putDouble/Float This PR reverts https://github.com/apache/spark/pull/23043 and its followup https://github.com/apache/spark/pull/23265, from branch 2.4, because it has behavior changes. existing tests Closes #23389 from cloud-fan/revert. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 27 December 2018, 19:23:24 UTC |

| c2bff77 | wangyanlin01 | 25 December 2018, 07:53:42 UTC | [SPARK-26426][SQL] fix ExpresionInfo assert error in windows operation system. ## What changes were proposed in this pull request? Fix the `ExpressionInfo` assertion error that occurs on Windows when running unit tests. ## How was this patch tested? Unit tests. Closes #23363 from yanlin-Lynn/unit-test-windows. Authored-by: wangyanlin01 <wangyanlin01@baidu.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 25 December 2018, 07:55:05 UTC |

| fdeb6db | DB Tsai | 22 December 2018, 18:35:14 UTC | [SPARK-26402][SQL] Accessing nested fields with different cases in case insensitive mode ## What changes were proposed in this pull request? GetStructField with different optional names should be semantically equal. We will use this as building block to compare the nested fields used in the plans to be optimized by catalyst optimizer. This PR also fixes a bug below that accessing nested fields with different cases in case insensitive mode will result `AnalysisException`. ``` sql("create table t (s struct<i: Int>) using json") sql("select s.I from t group by s.i") ``` which is currently failing ``` org.apache.spark.sql.AnalysisException: expression 'default.t.`s`' is neither present in the group by, nor is it an aggregate function ``` as cloud-fan pointed out. ## How was this patch tested? New tests are added. Closes #23353 from dbtsai/nestedEqual. Lead-authored-by: DB Tsai <d_tsai@apple.com> Co-authored-by: DB Tsai <dbtsai@dbtsai.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit a5a24d92bdf6e6a8e33bdc8833bedba033576b4c) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 22 December 2018, 18:35:39 UTC |

| 90a14d5 | Hyukjin Kwon | 21 December 2018, 08:09:30 UTC | [SPARK-26422][R] Support to disable Hive support in SparkR even for Hadoop versions unsupported by Hive fork ## What changes were proposed in this pull request? Currently, even if I explicitly disable Hive support in SparkR session as below: ```r sparkSession <- sparkR.session("local[4]", "SparkR", Sys.getenv("SPARK_HOME"), enableHiveSupport = FALSE) ``` produces when the Hadoop version is not supported by our Hive fork: ``` java.lang.reflect.InvocationTargetException ... Caused by: java.lang.IllegalArgumentException: Unrecognized Hadoop major version number: 3.1.1.3.1.0.0-78 at org.apache.hadoop.hive.shims.ShimLoader.getMajorVersion(ShimLoader.java:174) at org.apache.hadoop.hive.shims.ShimLoader.loadShims(ShimLoader.java:139) at org.apache.hadoop.hive.shims.ShimLoader.getHadoopShims(ShimLoader.java:100) at org.apache.hadoop.hive.conf.HiveConf$ConfVars.<clinit>(HiveConf.java:368) ... 43 more Error in handleErrors(returnStatus, conn) : java.lang.ExceptionInInitializerError at org.apache.hadoop.hive.conf.HiveConf.<clinit>(HiveConf.java:105) at java.lang.Class.forName0(Native Method) at java.lang.Class.forName(Class.java:348) at org.apache.spark.util.Utils$.classForName(Utils.scala:193) at org.apache.spark.sql.SparkSession$.hiveClassesArePresent(SparkSession.scala:1116) at org.apache.spark.sql.api.r.SQLUtils$.getOrCreateSparkSession(SQLUtils.scala:52) at org.apache.spark.sql.api.r.SQLUtils.getOrCreateSparkSession(SQLUtils.scala) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ``` The root cause is that: ``` SparkSession.hiveClassesArePresent ``` check if the class is loadable or not to check if that's in classpath but `org.apache.hadoop.hive.conf.HiveConf` has a check for Hadoop version as static logic which is executed right away. 
This throws an `IllegalArgumentException` and that's not caught: https://github.com/apache/spark/blob/36edbac1c8337a4719f90e4abd58d38738b2e1fb/sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala#L1113-L1121 So, currently, if users have a Hive built-in Spark with unsupported Hadoop version by our fork (namely 3+), there's no way to use SparkR even though it could work. This PR just propose to change the order of bool comparison so that we can don't execute `SparkSession.hiveClassesArePresent` when: 1. `enableHiveSupport` is explicitly disabled 2. `spark.sql.catalogImplementation` is `in-memory` so that we **only** check `SparkSession.hiveClassesArePresent` when Hive support is explicitly enabled by short circuiting. ## How was this patch tested? It's difficult to write a test since we don't run tests against Hadoop 3 yet. See https://github.com/apache/spark/pull/21588. Manually tested. Closes #23356 from HyukjinKwon/SPARK-26422. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 305e9b5ad22b428501fd42d3730d73d2e09ad4c5) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 21 December 2018, 08:10:14 UTC |
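
The reordering described above relies on short-circuit evaluation: the expensive and possibly failing class check goes last, so it runs only when the cheaper flags already ask for Hive. A minimal sketch, with hypothetical names (`should_enable_hive`, `failing_probe`) standing in for the real SparkR and `SparkSession` code:

```python
def should_enable_hive(enable_hive_support, catalog_impl, hive_classes_present):
    """Decide whether to use Hive, calling the class probe only when really needed.

    `hive_classes_present` is a zero-argument callable standing in for
    `SparkSession.hiveClassesArePresent`; it may raise on unsupported Hadoop versions.
    """
    return (
        enable_hive_support
        and catalog_impl.lower() == "hive"
        and hive_classes_present()  # reached only if both checks above passed
    )


def failing_probe():
    # Simulates the static initializer failure from the stack trace above.
    raise ValueError("Unrecognized Hadoop major version number")


# With Hive support disabled the probe is never called, so nothing is raised.
disabled = should_enable_hive(False, "hive", failing_probe)
in_memory = should_enable_hive(True, "in-memory", failing_probe)
```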

| c29f7e2 | Gengliang Wang | 20 December 2018, 18:05:56 UTC | [SPARK-26409][SQL][TESTS] SQLConf should be serializable in test sessions ## What changes were proposed in this pull request? `SQLConf` is supposed to be serializable. However, currently it is not serializable in `WithTestConf`. `WithTestConf` uses the method `overrideConfs` in a closure, while the classes which implement it (`TestHiveSessionStateBuilder` and `TestSQLSessionStateBuilder`) are not serializable. This PR uses a local variable to fix it. ## How was this patch tested? Add unit test. Closes #23352 from gengliangwang/serializableSQLConf. Authored-by: Gengliang Wang <gengliang.wang@databricks.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit 6692bacf3e74e7a17d8e676e8a06ab198f85d328) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 20 December 2018, 20:05:59 UTC |

| daeb081 | Ngone51 | 20 December 2018, 18:25:52 UTC | [SPARK-26392][YARN] Cancel pending allocate requests by taking locality preference into account ## What changes were proposed in this pull request? Right now, we cancel pending allocate requests in the order they were sent. I think we can take locality preference into account when doing this, so that cancellation has the least impact on task locality preference. ## How was this patch tested? N.A. Closes #23344 from Ngone51/dev-cancel-pending-allocate-requests-by-taking-locality-preference-into-account. Authored-by: Ngone51 <ngone_5451@163.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit 3d6b44d9ea92dc1eabb8f211176861e51240bf93) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 20 December 2018, 18:26:17 UTC |

| 74c1cd1 | zhoukang | 20 December 2018, 14:26:25 UTC | [SPARK-24687][CORE] Avoid job hanging when generate task binary causes fatal error ## What changes were proposed in this pull request? When NoClassDefFoundError thrown,it will cause job hang. `Exception in thread "dag-scheduler-event-loop" java.lang.NoClassDefFoundError: Lcom/xxx/data/recommend/aggregator/queue/QueueName; at java.lang.Class.getDeclaredFields0(Native Method) at java.lang.Class.privateGetDeclaredFields(Class.java:2436) at java.lang.Class.getDeclaredField(Class.java:1946) at java.io.ObjectStreamClass.getDeclaredSUID(ObjectStreamClass.java:1659) at java.io.ObjectStreamClass.access$700(ObjectStreamClass.java:72) at java.io.ObjectStreamClass$2.run(ObjectStreamClass.java:480) at java.io.ObjectStreamClass$2.run(ObjectStreamClass.java:468) at java.security.AccessController.doPrivileged(Native Method) at java.io.ObjectStreamClass.<init>(ObjectStreamClass.java:468) at java.io.ObjectStreamClass.lookup(ObjectStreamClass.java:365) at java.io.ObjectOutputStream.writeClass(ObjectOutputStream.java:1212) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1119) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) 
at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.writeArray(ObjectOutputStream.java:1377) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1173) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547) at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508) at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431) at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177) at java.io.ObjectOutputStream.writeArray(ObjectOutputStream.java:1377)` It is caused by NoClassDefFoundError will not catch up during task seriazation. `var taskBinary: Broadcast[Array[Byte]] = null try { // For ShuffleMapTask, serialize and broadcast (rdd, shuffleDep). // For ResultTask, serialize and broadcast (rdd, func). val taskBinaryBytes: Array[Byte] = stage match { case stage: ShuffleMapStage => JavaUtils.bufferToArray( closureSerializer.serialize((stage.rdd, stage.shuffleDep): AnyRef)) case stage: ResultStage => JavaUtils.bufferToArray(closureSerializer.serialize((stage.rdd, stage.func): AnyRef)) } taskBinary = sc.broadcast(taskBinaryBytes) } catch { // In the case of a failure during serialization, abort the stage. 
case e: NotSerializableException => abortStage(stage, "Task not serializable: " + e.toString, Some(e)) runningStages -= stage // Abort execution return case NonFatal(e) => abortStage(stage, s"Task serialization failed: $e\n${Utils.exceptionString(e)}", Some(e)) runningStages -= stage return }` image below shows that stage 33 blocked and never be scheduled. <img width="1273" alt="2018-06-28 4 28 42" src="https://user-images.githubusercontent.com/26762018/42621188-b87becca-85ef-11e8-9a0b-0ddf07504c96.png"> <img width="569" alt="2018-06-28 4 28 49" src="https://user-images.githubusercontent.com/26762018/42621191-b8b260e8-85ef-11e8-9d10-e97a5918baa6.png"> ## How was this patch tested? UT Closes #21664 from caneGuy/zhoukang/fix-noclassdeferror. Authored-by: zhoukang <zhoukang199191@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 7c8f4756c34a0b00931c2987c827a18d989e6c08) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 20 December 2018, 14:27:13 UTC |

| 63b7a07 | Marco Gaido | 19 December 2018, 07:21:52 UTC | [SPARK-26366][SQL] ReplaceExceptWithFilter should consider NULL as False ## What changes were proposed in this pull request? In `ReplaceExceptWithFilter` we do not consider properly the case in which the condition returns NULL. Indeed, in that case, since negating NULL still returns NULL, so it is not true the assumption that negating the condition returns all the rows which didn't satisfy it, rows returning NULL may not be returned. This happens when constraints inferred by `InferFiltersFromConstraints` are not enough, as it happens with `OR` conditions. The rule had also problems with non-deterministic conditions: in such a scenario, this rule would change the probability of the output. The PR fixes these problem by: - returning False for the condition when it is Null (in this way we do return all the rows which didn't satisfy it); - avoiding any transformation when the condition is non-deterministic. ## How was this patch tested? added UTs Closes #23315 from mgaido91/SPARK-26366. Authored-by: Marco Gaido <marcogaido91@gmail.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit 834b8609793525a5a486013732d8c98e1c6e6504) Signed-off-by: gatorsmile <gatorsmile@gmail.com> | 19 December 2018, 07:22:33 UTC |
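
The NULL problem described above can be reproduced with a small three-valued-logic model, using Python `None` for SQL NULL. This is a sketch of the reasoning only, not the Catalyst rule; all names are illustrative.

```python
def not3(value):
    """Three-valued NOT: negating NULL yields NULL."""
    return None if value is None else (not value)


def coalesce_false(value):
    """Treat a NULL condition result as False."""
    return False if value is None else value


def sql_filter(rows, predicate):
    """A SQL filter keeps a row only when the predicate is exactly TRUE."""
    return [row for row in rows if predicate(row) is True]


def cond(x):
    """Example condition `x > 3`, which evaluates to NULL for a NULL input."""
    return None if x is None else x > 3


rows = [1, None, 5]

# Naive rewrite of EXCEPT as Filter(NOT cond): the NULL row is silently dropped.
naive = sql_filter(rows, lambda r: not3(cond(r)))

# Rewrite with the NULL-as-False treatment: the NULL row is kept, as EXCEPT requires.
fixed = sql_filter(rows, lambda r: not3(coalesce_false(cond(r))))
```

Here `naive` is `[1]` while `fixed` is `[1, None]`: the row whose condition evaluates to NULL does not satisfy the condition, so EXCEPT should keep it.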

| f097638 | Jackey Lee | 18 December 2018, 18:15:36 UTC | [SPARK-26394][CORE] Fix annotation error for Utils.timeStringAsMs ## What changes were proposed in this pull request? Change microseconds to milliseconds in annotation of Utils.timeStringAsMs. Closes #23346 from stczwd/stczwd. Authored-by: Jackey Lee <qcsd2011@163.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 428eb2ad0ad8a141427120b13de3287962258c2d) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 18 December 2018, 18:16:09 UTC |

| 16986b2 | Wenchen Fan | 18 December 2018, 18:09:56 UTC | [SPARK-26382][CORE] prefix comparator should handle -0.0 ## What changes were proposed in this pull request? This is kind of a followup of https://github.com/apache/spark/pull/23239 The `UnsafeProject` will normalize special float/double values(NaN and -0.0), so the sorter doesn't have to handle it. However, for consistency and future-proof, this PR proposes to normalize `-0.0` in the prefix comparator, so that it's same with the normal ordering. Note that prefix comparator handles NaN as well. This is not a bug fix, but a safe guard. ## How was this patch tested? existing tests Closes #23334 from cloud-fan/sort. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit befca983d2da4f7828aa7a7cd7345d17c4f291dd) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 18 December 2018, 18:10:30 UTC |
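
Why `-0.0` matters for a bit-based sort prefix can be seen in a short sketch. The prefix scheme below (flip all bits of negative values, flip only the sign bit of non-negative ones) is a common way to make IEEE 754 doubles sortable as unsigned integers. It is assumed here for illustration and is not claimed to be Spark's exact implementation; `double_prefix` is a hypothetical name.

```python
import struct


def double_prefix(value, normalize=True):
    """Map a double to an unsigned 64-bit integer whose order matches numeric order."""
    if normalize and value == 0.0:
        value = 0.0  # -0.0 == 0.0 is True, so this collapses -0.0 into +0.0
    bits = struct.unpack(">Q", struct.pack(">d", value))[0]
    # Negative numbers: flip every bit. Non-negative numbers: flip only the sign bit.
    mask = 0xFFFFFFFFFFFFFFFF if bits >> 63 else 0x8000000000000000
    return bits ^ mask


same = double_prefix(-0.0) == double_prefix(0.0)
differs_without_normalizing = double_prefix(-0.0, normalize=False) != double_prefix(0.0)
```

Without normalization the two zeros receive different prefixes even though they compare equal under the normal ordering, which is the inconsistency the commit guards against.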

| 0a69787 | Kris Mok | 17 December 2018, 14:48:59 UTC | [SPARK-26352][SQL][FOLLOWUP-2.4] Fix missing sameOutput in branch-2.4 ## What changes were proposed in this pull request? After https://github.com/apache/spark/pull/23303 was merged to branch-2.3/2.4, the builds on those branches were broken due to missing a `LogicalPlan.sameOutput` function which came from https://github.com/apache/spark/pull/22713 only available on master. This PR is to follow-up with the broken 2.3/2.4 branches and make a copy of the new `LogicalPlan.sameOutput` into `ReorderJoin` to make it locally available. ## How was this patch tested? Fix the build of 2.3/2.4. Closes #23330 from rednaxelafx/clean-build-2.4. Authored-by: Kris Mok <rednaxelafx@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 17 December 2018, 14:48:59 UTC |

| e743e84 | Kris Mok | 17 December 2018, 05:41:20 UTC | [SPARK-26352][SQL] join reorder should not change the order of output attributes ## What changes were proposed in this pull request? The optimizer rule `org.apache.spark.sql.catalyst.optimizer.ReorderJoin` performs join reordering on inner joins. This was introduced from SPARK-12032 (https://github.com/apache/spark/pull/10073) in 2015-12. After it had reordered the joins, though, it didn't check whether or not the output attribute order is still the same as before. Thus, it's possible to have a mismatch between the reordered output attributes order vs the schema that a DataFrame thinks it has. The same problem exists in the CBO version of join reordering (`CostBasedJoinReorder`) too. This can be demonstrated with the example: ```scala spark.sql("create table table_a (x int, y int) using parquet") spark.sql("create table table_b (i int, j int) using parquet") spark.sql("create table table_c (a int, b int) using parquet") val df = spark.sql(""" with df1 as (select * from table_a cross join table_b) select * from df1 join table_c on a = x and b = i """) ``` here's what the DataFrame thinks: ``` scala> df.printSchema root |-- x: integer (nullable = true) |-- y: integer (nullable = true) |-- i: integer (nullable = true) |-- j: integer (nullable = true) |-- a: integer (nullable = true) |-- b: integer (nullable = true) ``` here's what the optimized plan thinks, after join reordering: ``` scala> df.queryExecution.optimizedPlan.output.foreach(a => println(s"|-- ${a.name}: ${a.dataType.typeName}")) |-- x: integer |-- y: integer |-- a: integer |-- b: integer |-- i: integer |-- j: integer ``` If we exclude the `ReorderJoin` rule (using Spark 2.4's optimizer rule exclusion feature), it's back to normal: ``` scala> spark.conf.set("spark.sql.optimizer.excludedRules", "org.apache.spark.sql.catalyst.optimizer.ReorderJoin") scala> val df = spark.sql("with df1 as (select * from table_a cross join table_b) select * from df1 join 
table_c on a = x and b = i") df: org.apache.spark.sql.DataFrame = [x: int, y: int ... 4 more fields] scala> df.queryExecution.optimizedPlan.output.foreach(a => println(s"|-- ${a.name}: ${a.dataType.typeName}")) |-- x: integer |-- y: integer |-- i: integer |-- j: integer |-- a: integer |-- b: integer ``` Note that this output attribute ordering problem leads to data corruption, and can manifest itself in various symptoms: * Silently corrupting data, if the reordered columns happen to either have matching types or have sufficiently-compatible types (e.g. all fixed length primitive types are considered as "sufficiently compatible" in an `UnsafeRow`), then only the resulting data is going to be wrong but it might not trigger any alarms immediately. Or * Weird Java-level exceptions like `java.lang.NegativeArraySizeException`, or even SIGSEGVs. ## How was this patch tested? Added new unit test in `JoinReorderSuite` and new end-to-end test in `JoinSuite`. Also made `JoinReorderSuite` and `StarJoinReorderSuite` assert more strongly on maintaining output attribute order. Closes #23303 from rednaxelafx/fix-join-reorder. Authored-by: Kris Mok <rednaxelafx@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 56448c662398f4c5319a337e6601450270a6a27c) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 17 December 2018, 05:43:47 UTC |

| d650075 | jiake | 17 December 2018, 01:20:58 UTC | [SPARK-26316][SPARK-21052][BRANCH-2.4] Revert hash join metrics in that causes performance degradation ## What changes were proposed in this pull request? Revert SPARK-21052 in Spark 2.4, following the discussion in [PR23269](https://github.com/apache/spark/pull/23269). ## How was this patch tested? N/A Closes #23318 from JkSelf/branch-2.4-revert21052. Authored-by: jiake <ke.a.jia@intel.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 17 December 2018, 01:20:58 UTC |

| 869bfc9 | Jing Chen He | 15 December 2018, 14:41:16 UTC | [SPARK-26315][PYSPARK] auto cast threshold from Integer to Float in approxSimilarityJoin of BucketedRandomProjectionLSHModel ## What changes were proposed in this pull request? If the input parameter `threshold` to the function `approxSimilarityJoin` is not a float, we get an exception. The fix is to convert `threshold` into a float before calling the Java implementation method. ## How was this patch tested? Added a new test case. Without this fix, the test throws an exception as reported in the JIRA. With the fix, the test passes. Closes #23313 from jerryjch/SPARK-26315. Authored-by: Jing Chen He <jinghe@us.ibm.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 860f4497f2a59b21d455ec8bfad9ae15d2fd4d2e) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 15 December 2018, 14:41:47 UTC |
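
The cast described in SPARK-26315 can be sketched in plain Python. This is a minimal illustration, not the PySpark implementation: `coerce_threshold`, `strict_backend_join`, and `approx_similarity_join` are hypothetical names, and `strict_backend_join` only stands in for a JVM method whose parameter is declared as a double.

```python
def coerce_threshold(threshold):
    """Return `threshold` as a float, rejecting values that are not real numbers."""
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(threshold, bool) or not isinstance(threshold, (int, float)):
        raise TypeError("threshold must be a number, got %r" % (threshold,))
    return float(threshold)


def strict_backend_join(threshold):
    # Hypothetical stand-in for a JVM-side method that only accepts a double.
    if not isinstance(threshold, float):
        raise TypeError("expected a double")
    return "join(threshold=%s)" % threshold


def approx_similarity_join(threshold):
    # Convert before crossing into the strict backend, as the fix describes.
    return strict_backend_join(coerce_threshold(threshold))
```

Calling `approx_similarity_join(1)` succeeds here because the integer is converted first; passing `1` straight to `strict_backend_join` would raise.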

| 6019d9a | Liang-Chi Hsieh | 15 December 2018, 05:52:07 UTC | [SPARK-26265][CORE][FOLLOWUP] Put freePage into a finally block ## What changes were proposed in this pull request? Based on the [comment](https://github.com/apache/spark/pull/23272#discussion_r240735509), it seems better to put `freePage` into a `finally` block. This patch is a follow-up to do so. ## How was this patch tested? Existing tests. Closes #23294 from viirya/SPARK-26265-followup. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 1b604c1fd0b9ef17b394818fbd6c546bc01cdd8c) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 15 December 2018, 05:52:45 UTC |

| aec68a8 | Takuya UESHIN | 14 December 2018, 16:23:28 UTC | [SPARK-26370][SQL] Fix resolution of higher-order function for the same identifier. When using a higher-order function with the same variable name as the existing columns in `Filter` or something which uses `Analyzer.resolveExpressionBottomUp` during the resolution, e.g.,: ```scala val df = Seq( (Seq(1, 9, 8, 7), 1, 2), (Seq(5, 9, 7), 2, 2), (Seq.empty, 3, 2), (null, 4, 2) ).toDF("i", "x", "d") checkAnswer(df.filter("exists(i, x -> x % d == 0)"), Seq(Row(Seq(1, 9, 8, 7), 1, 2))) checkAnswer(df.select("x").filter("exists(i, x -> x % d == 0)"), Seq(Row(1))) ``` the following exception happens: ``` java.lang.ClassCastException: org.apache.spark.sql.catalyst.expressions.BoundReference cannot be cast to org.apache.spark.sql.catalyst.expressions.NamedExpression at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:237) at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62) at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55) at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49) at scala.collection.TraversableLike.map(TraversableLike.scala:237) at scala.collection.TraversableLike.map$(TraversableLike.scala:230) at scala.collection.AbstractTraversable.map(Traversable.scala:108) at org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.$anonfun$functionsForEval$1(higherOrderFunctions.scala:147) at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:237) at scala.collection.immutable.List.foreach(List.scala:392) at scala.collection.TraversableLike.map(TraversableLike.scala:237) at scala.collection.TraversableLike.map$(TraversableLike.scala:230) at scala.collection.immutable.List.map(List.scala:298) at org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.functionsForEval(higherOrderFunctions.scala:145) at 
org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.functionsForEval$(higherOrderFunctions.scala:145) at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionsForEval$lzycompute(higherOrderFunctions.scala:369) at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionsForEval(higherOrderFunctions.scala:369) at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.functionForEval(higherOrderFunctions.scala:176) at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.functionForEval$(higherOrderFunctions.scala:176) at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionForEval(higherOrderFunctions.scala:369) at org.apache.spark.sql.catalyst.expressions.ArrayExists.nullSafeEval(higherOrderFunctions.scala:387) at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.eval(higherOrderFunctions.scala:190) at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.eval$(higherOrderFunctions.scala:185) at org.apache.spark.sql.catalyst.expressions.ArrayExists.eval(higherOrderFunctions.scala:369) at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificPredicate.eval(Unknown Source) at org.apache.spark.sql.execution.FilterExec.$anonfun$doExecute$3(basicPhysicalOperators.scala:216) at org.apache.spark.sql.execution.FilterExec.$anonfun$doExecute$3$adapted(basicPhysicalOperators.scala:215) ... ``` because the `UnresolvedAttribute`s in `LambdaFunction` are unexpectedly resolved by the rule. This pr modified to use a placeholder `UnresolvedNamedLambdaVariable` to prevent unexpected resolution. Added a test and modified some tests. Closes #23320 from ueshin/issues/SPARK-26370/hof_resolution. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 3dda58af2b7f42beab736d856bf17b4d35c8866c) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 14 December 2018, 16:25:18 UTC |

| a2c5bea | Takuya UESHIN | 13 December 2018, 05:14:59 UTC | [SPARK-26355][PYSPARK] Add a workaround for PyArrow 0.11. In PyArrow 0.11, there is a API breaking change. - [ARROW-1949](https://issues.apache.org/jira/browse/ARROW-1949) - [Python/C++] Add option to Array.from_pandas and pyarrow.array to perform unsafe casts. This causes test failures in `ScalarPandasUDFTests.test_vectorized_udf_null_(byte|short|int|long)`: ``` File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/worker.py", line 377, in main process() File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/worker.py", line 372, in process serializer.dump_stream(func(split_index, iterator), outfile) File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/serializers.py", line 317, in dump_stream batch = _create_batch(series, self._timezone) File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/serializers.py", line 286, in _create_batch arrs = [create_array(s, t) for s, t in series] File "/Users/ueshin/workspace/apache-spark/spark/python/pyspark/serializers.py", line 284, in create_array return pa.Array.from_pandas(s, mask=mask, type=t) File "pyarrow/array.pxi", line 474, in pyarrow.lib.Array.from_pandas return array(obj, mask=mask, type=type, safe=safe, from_pandas=True, File "pyarrow/array.pxi", line 169, in pyarrow.lib.array return _ndarray_to_array(values, mask, type, from_pandas, safe, File "pyarrow/array.pxi", line 69, in pyarrow.lib._ndarray_to_array check_status(NdarrayToArrow(pool, values, mask, from_pandas, File "pyarrow/error.pxi", line 81, in pyarrow.lib.check_status raise ArrowInvalid(message) ArrowInvalid: Floating point value truncated ``` We should add a workaround to support PyArrow 0.11. In my local environment. Closes #23305 from ueshin/issues/SPARK-26355/pyarrow_0.11. 
Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 8edae94fa7ec1a1cc2c69e0924da0da85d4aac83) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 13 December 2018, 05:19:15 UTC |

| 2282c93 | Dongjoon Hyun | 11 December 2018, 22:44:58 UTC | This is a dummy commit to trigger AFS gitbox sync | 11 December 2018, 22:44:58 UTC |

| e35d287 | Liang-Chi Hsieh | 11 December 2018, 20:22:58 UTC | [SPARK-26265][CORE][BRANCH-2.4] Fix deadlock in BytesToBytesMap.MapIterator when locking both BytesToBytesMap.MapIterator and TaskMemoryManager ## What changes were proposed in this pull request? In `BytesToBytesMap.MapIterator.advanceToNextPage`, we first lock this `MapIterator` and then `TaskMemoryManager` when freeing a memory page by calling `freePage`. At the same time, it is possible that another memory consumer first locks `TaskMemoryManager` and then this `MapIterator` when it acquires memory and causes spilling on this `MapIterator`. As a result, the `MapIterator` object holds the lock on itself and waits for the lock on `TaskMemoryManager`, while the other consumer holds the lock on `TaskMemoryManager` and waits for the lock on the `MapIterator` object. To avoid this deadlock, this patch proposes to keep a reference to the page to free and free it after releasing the lock on `MapIterator`. This backports the fix to branch-2.4. ## How was this patch tested? Added a test and manually ran it 100 times to make sure there is no deadlock. Closes #23289 from viirya/SPARK-26265-2.4. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 11 December 2018, 20:22:58 UTC |
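
The lock-ordering fix in SPARK-26265 can be sketched with Python's `threading` module. This is only an analogy under stated assumptions: `MemoryManager` and `PageIterator` are hypothetical stand-ins for `TaskMemoryManager` and `BytesToBytesMap.MapIterator`, and the real code is Java. The point it shows is that the page to free is only recorded while the iterator lock is held, and the manager's lock is taken after the iterator lock has been released.

```python
import threading


class MemoryManager:
    """Hypothetical stand-in for a memory manager guarded by its own lock."""

    def __init__(self):
        self.lock = threading.Lock()
        self.freed = []

    def free_page(self, page):
        with self.lock:
            self.freed.append(page)


class PageIterator:
    """Hypothetical stand-in for an iterator that owns one page at a time."""

    def __init__(self, manager, pages):
        self._lock = threading.Lock()
        self._manager = manager
        self._pages = list(pages)
        self._current = None

    def advance_to_next_page(self):
        page_to_free = None
        try:
            with self._lock:
                # Only remember the old page here; do not call the manager
                # while the iterator lock is held.
                page_to_free = self._current
                self._current = self._pages.pop(0) if self._pages else None
                return self._current
        finally:
            # Runs after the `with` block has released the iterator lock,
            # so the two locks are never held at the same time.
            if page_to_free is not None:
                self._manager.free_page(page_to_free)
```

Because no thread ever holds the iterator lock while waiting for the manager lock, the circular wait described in the commit message cannot form in this sketch.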

| 2f038d0 | Yuanjian Li | 11 December 2018, 18:03:47 UTC | [SPARK-26327][SQL][BACKPORT-2.4] Bug fix for `FileSourceScanExec` metrics update ## What changes were proposed in this pull request? Backport #23277 to branch 2.4 without the metrics renaming. ## How was this patch tested? New test case in `SQLMetricsSuite`. Closes #23287 from xuanyuanking/SPARK-26327-2.4. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 11 December 2018, 18:03:47 UTC |

| e80577c | gatorsmile | 10 December 2018, 06:57:20 UTC | [SPARK-26307][SQL] Fix CTAS when INSERT a partitioned table using Hive serde ## What changes were proposed in this pull request? This is a Spark 2.3 regression introduced in https://github.com/apache/spark/pull/20521. We should add the partition info for InsertIntoHiveTable in CreateHiveTableAsSelectCommand. Otherwise, we will hit the following error by running the newly added test case: ``` [info] - CTAS: INSERT a partitioned table using Hive serde *** FAILED *** (829 milliseconds) [info] org.apache.spark.SparkException: Requested partitioning does not match the tab1 table: [info] Requested partitions: [info] Table partitions: part [info] at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.processInsert(InsertIntoHiveTable.scala:179) [info] at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.run(InsertIntoHiveTable.scala:107) ``` ## How was this patch tested? Added a test case. Closes #23255 from gatorsmile/fixCTAS. Authored-by: gatorsmile <gatorsmile@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 3bc83de3cce86a06c275c86b547a99afd781761f) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 10 December 2018, 06:57:31 UTC |

| 33460c5 | Wenchen Fan | 09 December 2018, 18:50:41 UTC | [SPARK-26021][2.4][SQL][FOLLOWUP] only deal with NaN and -0.0 in UnsafeWriter backport https://github.com/apache/spark/pull/23239 to 2.4 --------- ## What changes were proposed in this pull request? A followup of https://github.com/apache/spark/pull/23043 There are 4 places we need to deal with NaN and -0.0: 1. comparison expressions. `-0.0` and `0.0` should be treated as same. Different NaNs should be treated as same. 2. Join keys. `-0.0` and `0.0` should be treated as same. Different NaNs should be treated as same. 3. grouping keys. `-0.0` and `0.0` should be assigned to the same group. Different NaNs should be assigned to the same group. 4. window partition keys. `-0.0` and `0.0` should be treated as same. Different NaNs should be treated as same. The case 1 is OK. Our comparison already handles NaN and -0.0, and for struct/array/map, we will recursively compare the fields/elements. Case 2, 3 and 4 are problematic, as they compare `UnsafeRow` binary directly, and different NaNs have different binary representation, and the same thing happens for -0.0 and 0.0. To fix it, a simple solution is: normalize float/double when building unsafe data (`UnsafeRow`, `UnsafeArrayData`, `UnsafeMapData`). Then we don't need to worry about it anymore. Following this direction, this PR moves the handling of NaN and -0.0 from `Platform` to `UnsafeWriter`, so that places like `UnsafeRow.setFloat` will not handle them, which reduces the perf overhead. It's also easier to add comments explaining why we do it in `UnsafeWriter`. ## How was this patch tested? existing tests Closes #23265 from cloud-fan/minor. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 09 December 2018, 18:50:41 UTC |
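
The reason binary comparison breaks for `-0.0` and NaN, and how normalizing on write avoids it, can be illustrated in Python. This is a sketch of the idea only, not Spark's `UnsafeWriter`; `normalize_double` and `to_bytes` are hypothetical helper names.

```python
import math
import struct

# One NaN value that every NaN input is mapped to.
CANONICAL_NAN = float("nan")


def normalize_double(value):
    """Map every NaN to one canonical NaN and -0.0 to 0.0."""
    if math.isnan(value):
        return CANONICAL_NAN
    if value == 0.0:
        # -0.0 == 0.0 is True, so this branch also catches negative zero.
        return 0.0
    return value


def to_bytes(value):
    """Big-endian IEEE 754 encoding, i.e. what a byte-wise comparison sees."""
    return struct.pack(">d", value)


# The two zeros compare equal as numbers but differ as bytes.
zeros_equal_as_numbers = (-0.0 == 0.0)
zeros_equal_as_bytes = (to_bytes(-0.0) == to_bytes(0.0))
zeros_equal_after_normalizing = (
    to_bytes(normalize_double(-0.0)) == to_bytes(normalize_double(0.0))
)
```

Writing only normalized values means that any later byte-wise comparison of join keys, grouping keys, or window partition keys agrees with the numeric comparison.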

| a073b1c | seancxmao | 09 December 2018, 01:53:12 UTC | [SPARK-25132][SQL][FOLLOWUP][DOC] Add migration doc for case-insensitive field resolution when reading from Parquet ## What changes were proposed in this pull request? #22148 introduces a behavior change. Following the discussion at #22184, this PR updates the migration guide for upgrading from Spark 2.3 to 2.4. ## How was this patch tested? N/A Closes #23238 from seancxmao/SPARK-25132-doc-2.4. Authored-by: seancxmao <seancxmao@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 55276d3a26474e7479941db3e9c065d86344885f) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 09 December 2018, 01:54:05 UTC |

| 14b75eb | Sean Owen | 08 December 2018, 19:10:11 UTC | [SPARK-26266][BUILD] Update to Scala 2.12.8 (branch-2.4) ## What changes were proposed in this pull request? Back-port of https://github.com/apache/spark/pull/23218 ; updates Scala 2.12 build to 2.12.8 ## How was this patch tested? Existing tests. Closes #23264 from srowen/SPARK-26266.2. Authored-by: Sean Owen <sean.owen@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 08 December 2018, 19:10:11 UTC |

| 910bfc8 | Marco Gaido | 05 December 2018, 17:09:47 UTC | [SPARK-26233][SQL][BACKPORT-2.4] CheckOverflow when encoding a decimal value ## What changes were proposed in this pull request? When we encode a Decimal from an external source we don't check for overflow. That method is useful not only to enforce that we can represent the correct value in the specified range, but it also changes the underlying data to the right precision/scale. Since our code generation assumes that a decimal has exactly the same precision and scale as its data type, failing to enforce this can lead to corrupted output/results when there are subsequent transformations. ## How was this patch tested? Added UT. Closes #23232 from mgaido91/SPARK-26233_2.4. Authored-by: Marco Gaido <marcogaido91@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 05 December 2018, 17:09:47 UTC |
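
The two effects of the overflow check described in SPARK-26233 (rescaling the value and rejecting values that do not fit) can be sketched with Python's `decimal` module. This is an illustration under assumptions, not Spark's `CheckOverflow`: the helper name `to_precision_scale`, the half-up rounding mode, and returning `None` on overflow are all choices made for this sketch.

```python
from decimal import Decimal, ROUND_HALF_UP


def to_precision_scale(value, precision, scale):
    """Rescale `value` to `scale` fractional digits; return None if the
    result needs more than `precision` total digits."""
    # Decimal(1).scaleb(-2) is Decimal("0.01"), i.e. a template with 2 fractional digits.
    quantized = value.quantize(Decimal(1).scaleb(-scale), rounding=ROUND_HALF_UP)
    if len(quantized.as_tuple().digits) > precision:
        return None
    return quantized


fits = to_precision_scale(Decimal("1.5"), 4, 2)        # stored with exactly 2 fractional digits
overflow = to_precision_scale(Decimal("123.456"), 4, 2)  # 123.46 needs 5 digits, more than 4
```

After the call, a value that fits carries exactly the declared scale, which is the property the commit message says generated code relies on.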

| c9fd14c | Hyukjin Kwon | 05 December 2018, 11:36:51 UTC | Revert "[SPARK-26133][ML][FOLLOWUP] Fix doc for OneHotEncoder" This reverts commit d9b707e7c39a55a22dd55f8a4f537d861a3ce57c. | 05 December 2018, 11:36:51 UTC |

| d9b707e | Liang-Chi Hsieh | 05 December 2018, 11:30:25 UTC | [SPARK-26133][ML][FOLLOWUP] Fix doc for OneHotEncoder ## What changes were proposed in this pull request? This fixes doc of renamed OneHotEncoder in PySpark. ## How was this patch tested? N/A Closes #23230 from viirya/remove_one_hot_encoder_followup. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 05 December 2018, 11:32:05 UTC |

| 51739d1 | Shahid | 04 December 2018, 19:00:58 UTC | [SPARK-26119][CORE][WEBUI] Task summary table should contain only successful tasks' metrics ## What changes were proposed in this pull request? The task summary table on the stage page currently displays the summary of all tasks. However, it should display the summary of only successful tasks, to follow the behavior of previous versions of Spark. ## How was this patch tested? Added UT; screenshot attached (before patch). Closes #23088 from shahidki31/summaryMetrics. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit 35f9163adf5c067229afbe57ed60d5dd5f2422c8) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 04 December 2018, 19:01:10 UTC |

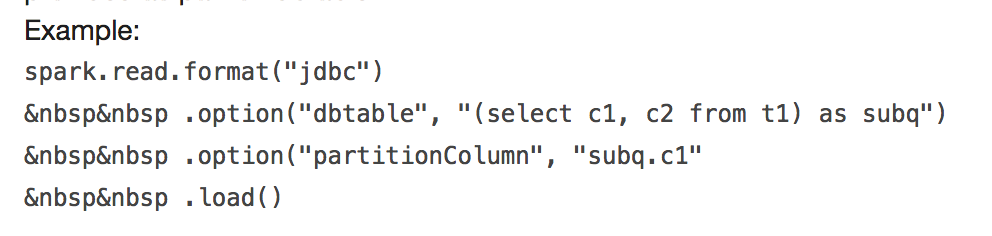

| a091216 | Yuming Wang | 04 December 2018, 13:57:58 UTC | [SPARK-24423][FOLLOW-UP][SQL] Fix error example ## What changes were proposed in this pull request? The current example will throw: ``` requirement failed: When reading JDBC data sources, users need to specify all or none for the following options: 'partitionColumn', 'lowerBound', 'upperBound', and 'numPartitions' ``` and ``` User-defined partition column subq.c1 not found in the JDBC relation ... ``` This PR fixes the erroneous example. ## How was this patch tested? Manual tests. Closes #23170 from wangyum/SPARK-24499. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 06a3b6aafa510ede2f1376b29a46f99447286c67) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 04 December 2018, 13:58:12 UTC |

| 90fcd12 | Shahid | 03 December 2018, 23:11:43 UTC | [SPARK-26219][CORE][BRANCH-2.4] Executor summary should get updated for failure jobs in the history server UI Backport of https://github.com/apache/spark/pull/23181 to the Spark 2.4 branch. Added UT. Closes #23191 from shahidki31/branch-2.4. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 03 December 2018, 23:12:03 UTC |

| 349e25b | Stavros Kontopoulos | 03 December 2018, 22:57:18 UTC | [SPARK-26256][K8S] Fix labels for pod deletion Adds proper labels when deleting executor pods. Manually with tests. Closes #23209 from skonto/fix-deletion-labels. Authored-by: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit a24e1a126c55fc06f5867c0e5e5b0ee71201e018) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 03 December 2018, 22:59:47 UTC |

| f716a47 | Daoyuan Wang | 03 December 2018, 15:54:26 UTC | [SPARK-26181][SQL] the `hasMinMaxStats` method of `ColumnStatsMap` is not correct ## What changes were proposed in this pull request? Currently `hasMinMaxStats` returns the same result as `hasCountStats`, which is clearly not intended. ## How was this patch tested? Existing tests. Closes #23152 from adrian-wang/minmaxstats. Authored-by: Daoyuan Wang <me@daoyuan.wang> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 8534d753ecb21ea64ffbaefb5eaca38ba0464c6d) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 03 December 2018, 15:55:04 UTC |

| 91b86b7 | Yuming Wang | 02 December 2018, 14:52:01 UTC | [SPARK-26198][SQL] Fix Metadata serialize null values throw NPE ## What changes were proposed in this pull request? How to reproduce this issue: ```scala scala> val meta = new org.apache.spark.sql.types.MetadataBuilder().putNull("key").build().json java.lang.NullPointerException at org.apache.spark.sql.types.Metadata$.org$apache$spark$sql$types$Metadata$$toJsonValue(Metadata.scala:196) at org.apache.spark.sql.types.Metadata$$anonfun$1.apply(Metadata.scala:180) ``` This PR fixes the `NullPointerException` thrown when `Metadata` serializes `null` values. ## How was this patch tested? Unit tests. Closes #23164 from wangyum/SPARK-26198. Authored-by: Yuming Wang <yumwang@ebay.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 676bbb2446af1f281b8f76a5428b7ba75b7588b3) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 03 December 2018, 05:40:54 UTC |

| 58a4c0c | hyukjinkwon | 02 December 2018, 09:41:08 UTC | [SPARK-26080][PYTHON] Skips Python resource limit on Windows in Python worker ## What changes were proposed in this pull request? `resource` package is a Unix specific package. See https://docs.python.org/2/library/resource.html and https://docs.python.org/3/library/resource.html. Note that we document Windows support: > Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS). This should be backported into branch-2.4 to restore Windows support in Spark 2.4.1. ## How was this patch tested? Manually mocking the changed logics. Closes #23055 from HyukjinKwon/SPARK-26080. Lead-authored-by: hyukjinkwon <gurwls223@apache.org> Co-authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> (cherry picked from commit 9cda9a892d03f60a76cd5d9b4546e72c50962c85) Signed-off-by: Hyukjin Kwon <gurwls223@apache.org> | 02 December 2018, 09:41:25 UTC |
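
The guard described in SPARK-26080 can be sketched as follows. This is an illustration of the pattern rather than the code in PySpark's worker: the flag name `has_resource_module` and the helper `current_address_space_limit` are assumptions made for this sketch, and the helper only reads the limit instead of setting one.

```python
try:
    # `resource` is only available on Unix-like systems.
    import resource
    has_resource_module = True
except ImportError:
    has_resource_module = False


def current_address_space_limit():
    """Return the (soft, hard) address-space limit, or None where the
    `resource` module is unavailable (for example on Windows)."""
    if not has_resource_module:
        return None
    return resource.getrlimit(resource.RLIMIT_AS)
```

Any code that would go on to call `resource.setrlimit` can be placed behind the same flag, so the worker skips the limit on Windows instead of failing at import time.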

| 3ec03ec | liuxian | 01 December 2018, 13:11:31 UTC | [MINOR][DOC] Correct some document description errors ## What changes were proposed in this pull request? Correct some document description errors. ## How was this patch tested? N/A Closes #23162 from 10110346/docerror. Authored-by: liuxian <liu.xian3@zte.com.cn> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 60e4239a1e3506d342099981b6e3b3b8431a203e) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 01 December 2018, 13:12:02 UTC |

| b68decf | schintap | 30 November 2018, 18:48:56 UTC | [SPARK-26201] Fix python broadcast with encryption ## What changes were proposed in this pull request? With RPC and disk encryption enabled, a Python job that creates a broadcast variable and just reads the value back on the driver side failed with: Traceback (most recent call last): File "broadcast.py", line 37, in <module> words_new.value File "/pyspark.zip/pyspark/broadcast.py", line 137, in value File "pyspark.zip/pyspark/broadcast.py", line 122, in load_from_path File "pyspark.zip/pyspark/broadcast.py", line 128, in load EOFError: Ran out of input To reproduce, use configs: --conf spark.network.crypto.enabled=true --conf spark.io.encryption.enabled=true Code: words_new = sc.broadcast(["scala", "java", "hadoop", "spark", "akka"]) words_new.value print(words_new.value) ## How was this patch tested? words_new = sc.broadcast(["scala", "java", "hadoop", "spark", "akka"]) textFile = sc.textFile("README.md") wordCounts = textFile.flatMap(lambda line: line.split()).map(lambda word: (word + words_new.value[1], 1)).reduceByKey(lambda a, b: a+b) count = wordCounts.count() print(count) words_new.value print(words_new.value) Closes #23166 from redsanket/SPARK-26201. Authored-by: schintap <schintap@oath.com> Signed-off-by: Thomas Graves <tgraves@apache.org> (cherry picked from commit 9b23be2e95fec756066ca0ed3188c3db2602b757) Signed-off-by: Thomas Graves <tgraves@apache.org> | 30 November 2018, 18:49:17 UTC |

| 4661ac7 | Gengliang Wang | 30 November 2018, 04:00:55 UTC | [SPARK-26188][SQL] FileIndex: don't infer data types of partition columns if user specifies schema ## What changes were proposed in this pull request? This PR fixes a regression introduced in: https://github.com/apache/spark/pull/21004/files#r236998030 If the user specifies a schema, Spark doesn't need to infer the data types of partition columns; otherwise the inferred data type might not match the one the user provided. E.g. for partition directory `p=4d`, after data type inference the column value will be `4.0`. See https://issues.apache.org/jira/browse/SPARK-26188 for more details. Note that the user-specified schema **might not cover all the data columns**: ``` val schema = new StructType() .add("id", StringType) .add("ex", ArrayType(StringType)) val df = spark.read .schema(schema) .format("parquet") .load(src.toString) assert(df.schema.toList === List( StructField("ex", ArrayType(StringType)), StructField("part", IntegerType), // inferred partitionColumn dataType StructField("id", StringType))) // used user provided partitionColumn dataType ``` For the columns missing from the user-specified schema, Spark still needs to infer their data types if `partitionColumnTypeInferenceEnabled` is enabled. To implement this partial inference, refactor `PartitioningUtils.parsePartitions` and pass the user-specified schema as a parameter to cast partition values. ## How was this patch tested? Added a unit test. Closes #23165 from gengliangwang/fixFileIndex. Authored-by: Gengliang Wang <gengliang.wang@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 9cfc3ee6253bed21924424ccaadea0287a6f15f4) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 30 November 2018, 04:01:16 UTC |

| 94206c7 | Shahid | 29 November 2018, 17:48:18 UTC | [SPARK-26186][SPARK-26184][CORE] Last updated time is not getting updated for the Inprogress application ## What changes were proposed in this pull request? When `spark.history.fs.inProgressOptimization.enabled` is true, the last updated time of an in-progress application is not updated in the History UI. Also, during cleaning, an in-progress application is removed from the listing even if its last updated time is within the cleaning threshold. In this PR, if the fastInprogressOptimization is enabled, we set the application's `lastUpdateTime` to the last scan time. This updates the `lastUpdateTime` in the History UI, and the cleaner no longer removes the application if its update time is within the cleaning interval. ## How was this patch tested? Added UT, attached screenshots (before and after the patch). Closes #23158 from shahidki31/HistoryLastUpdateTime. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit 24e78b7f163acf6129d934633ae6d3e6d568656a) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 29 November 2018, 17:48:42 UTC |

| 7200915 | Takuya UESHIN | 29 November 2018, 14:37:02 UTC | [SPARK-26211][SQL] Fix InSet for binary, and struct and array with null. ## What changes were proposed in this pull request? Currently `InSet` doesn't work properly for binary type, or for struct and array types with a null value in the set. For binary type, the `HashSet` doesn't work properly for `Array[Byte]`; for struct and array types with a null value in the set, the `ordering` throws an `NPE`. ## How was this patch tested? Added a few tests. Closes #23176 from ueshin/issues/SPARK-26211/inset. Authored-by: Takuya UESHIN <ueshin@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit b9b68a6dc7d0f735163e980392ea957f2d589923) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 29 November 2018, 14:38:18 UTC |

| 99a9107 | Mark Pavey | 28 November 2018, 15:19:47 UTC | [SPARK-26137][CORE] Use Java system property "file.separator" instead of hard coded "/" in DependencyUtils ## What changes were proposed in this pull request? Use Java system property "file.separator" instead of hard coded "/" in DependencyUtils. ## How was this patch tested? Manual test: Submit Spark application via REST API that reads data from Elasticsearch using spark-elasticsearch library. Without fix application fails with error: 18/11/22 10:36:20 ERROR Version: Multiple ES-Hadoop versions detected in the classpath; please use only one jar:file:/C:/<...>/spark-2.4.0-bin-hadoop2.6/work/driver-20181122103610-0001/myApp-assembly-1.0.jar jar:file:/C:/<...>/myApp-assembly-1.0.jar 18/11/22 10:36:20 ERROR Main: Application [MyApp] failed: java.lang.Error: Multiple ES-Hadoop versions detected in the classpath; please use only one jar:file:/C:/<...>/spark-2.4.0-bin-hadoop2.6/work/driver-20181122103610-0001/myApp-assembly-1.0.jar jar:file:/C:/<...>/myApp-assembly-1.0.jar at org.elasticsearch.hadoop.util.Version.<clinit>(Version.java:73) at org.elasticsearch.hadoop.rest.RestService.findPartitions(RestService.java:214) at org.elasticsearch.spark.rdd.AbstractEsRDD.esPartitions$lzycompute(AbstractEsRDD.scala:73) at org.elasticsearch.spark.rdd.AbstractEsRDD.esPartitions(AbstractEsRDD.scala:72) at org.elasticsearch.spark.rdd.AbstractEsRDD.getPartitions(AbstractEsRDD.scala:44) at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253) at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251) at scala.Option.getOrElse(Option.scala:121) at org.apache.spark.rdd.RDD.partitions(RDD.scala:251) at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:49) at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253) at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251) at scala.Option.getOrElse(Option.scala:121) at org.apache.spark.rdd.RDD.partitions(RDD.scala:251) at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126) at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945) at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151) at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112) at org.apache.spark.rdd.RDD.withScope(RDD.scala:363) at org.apache.spark.rdd.RDD.collect(RDD.scala:944) ... at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.spark.deploy.worker.DriverWrapper$.main(DriverWrapper.scala:65) at org.apache.spark.deploy.worker.DriverWrapper.main(DriverWrapper.scala) With fix application runs successfully. Closes #23102 from markpavey/JIRA_SPARK-26137_DependencyUtilsFileSeparatorFix. Authored-by: Mark Pavey <markpavey@exabre.co.uk> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit ce61bac1d84f8577b180400e44bd9bf22292e0b6) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 28 November 2018, 15:20:00 UTC |
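
As a general illustration of why a hard-coded "/" is fragile on Windows, the sketch below extracts the last path segment with a caller-supplied separator. It is an assumption-laden example, not the `DependencyUtils` code, which the commit message does not show; `FileSeparatorSketch`, `fileName` and `platformSeparator` are hypothetical names.

```scala
object FileSeparatorSketch {
  // Platform separator: "/" on Unix-like systems, "\" on Windows.
  val platformSeparator: String = System.getProperty("file.separator")

  // Hypothetical helper: returns the text after the last separator,
  // or the whole path when the separator does not occur in it.
  def fileName(path: String, separator: String): String =
    path.substring(path.lastIndexOf(separator) + 1)
}
```

Splitting a Windows-style path such as `C:\work\app.jar` on "/" returns the whole path unchanged, while splitting on the backslash returns `app.jar`.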

| ac26a1d | Wenchen Fan | 28 November 2018, 12:38:42 UTC | [SPARK-26147][SQL] only pull out unevaluable python udf from join condition https://github.com/apache/spark/pull/22326 made a mistake: not all Python UDFs are unevaluable in a join condition. Only Python UDFs that refer to attributes from both join sides are unevaluable. This PR fixes this mistake. Tested with a new test. Closes #23153 from cloud-fan/join. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit affe80958d366f399466a9dba8e03da7f3b7b9bf) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 28 November 2018, 12:42:08 UTC |

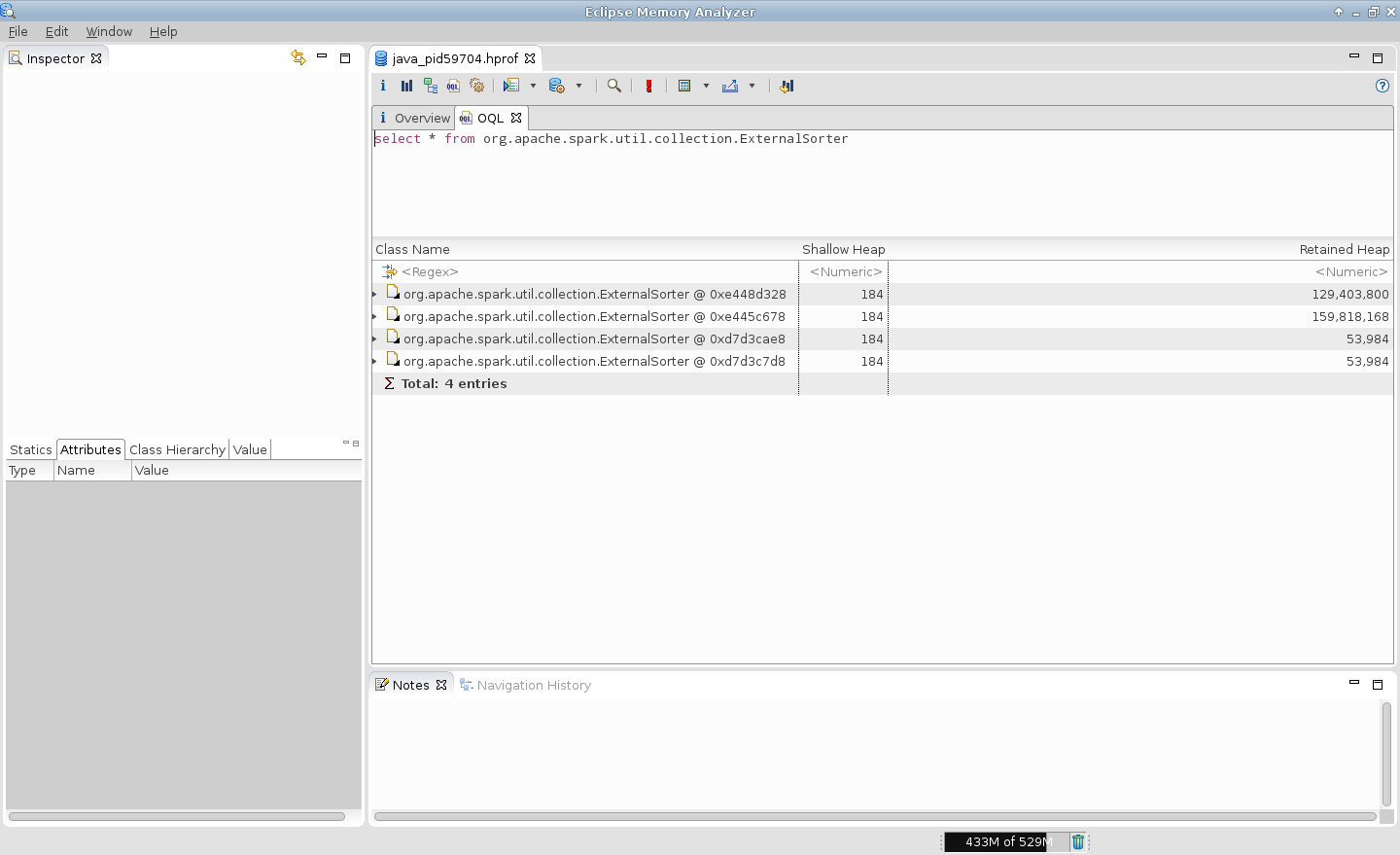

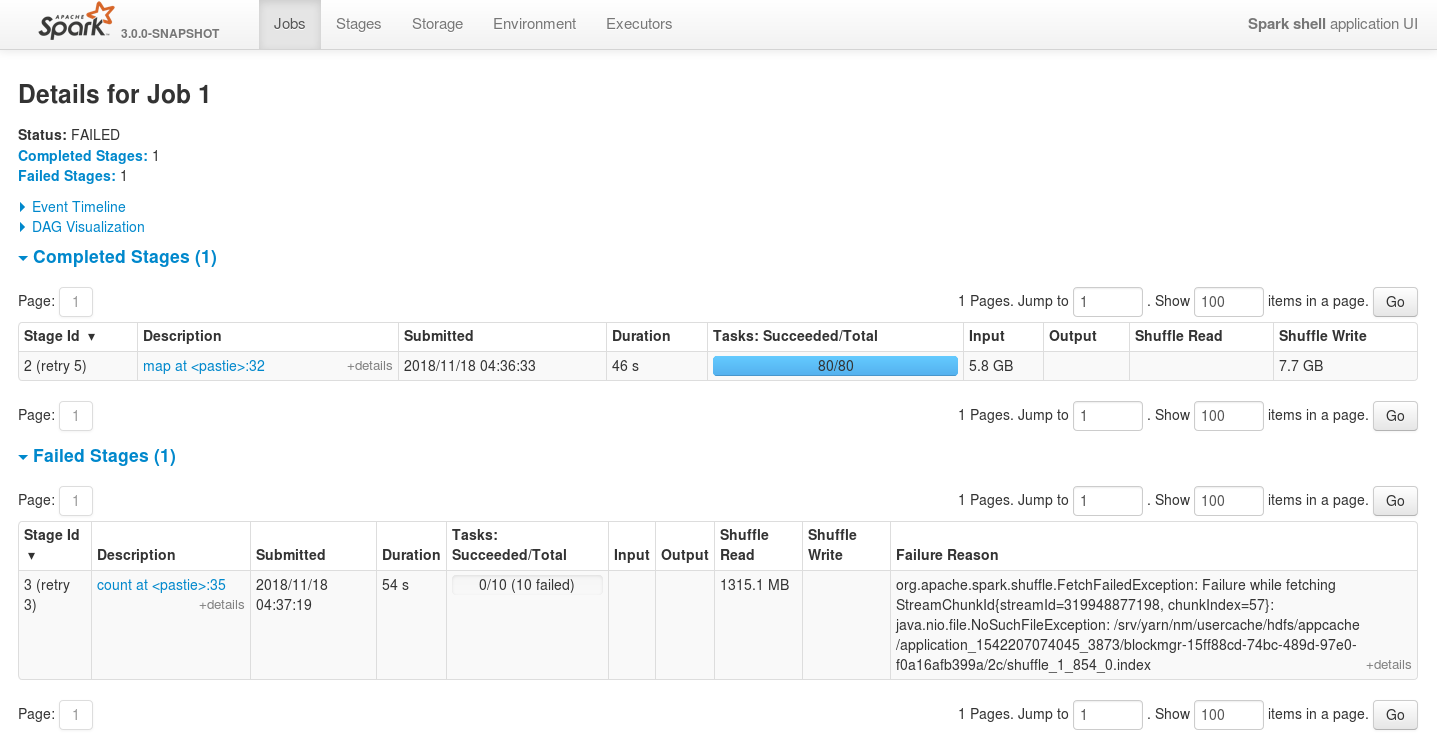

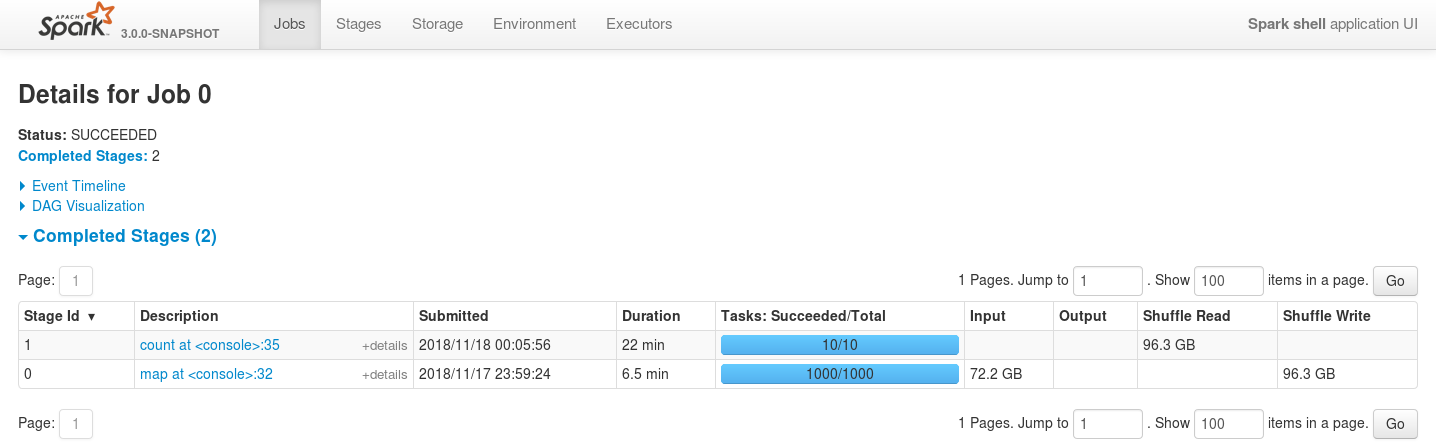

| 400d61b | Sergey Zhemzhitsky | 28 November 2018, 12:22:24 UTC | [SPARK-26114][CORE] ExternalSorter's readingIterator field leak ## What changes were proposed in this pull request? This pull request fixes the [SPARK-26114](https://issues.apache.org/jira/browse/SPARK-26114) issue, which occurs when trying to reduce the number of partitions by means of coalesce without shuffling after shuffle-based transformations. The leak occurs because `ExternalSorter`'s `readingIterator` field is not cleaned up the way its `map` and `buffer` fields are. Additionally there are changes to the `CompletionIterator` to prevent capturing its `sub`-iterator and holding it even after the completion iterator completes. It is necessary because in some cases, e.g. in case of standard Scala's `flatMap` iterator (which is used in `CoalescedRDD`'s `compute` method) the next value of the main iterator is assigned to `flatMap`'s `cur` field only after it is available. For DAGs where ShuffledRDD is a parent of CoalescedRDD it means that the data should be fetched from the map-side of the shuffle, but the process of fetching this data consumes quite a lot of memory in addition to the memory already consumed by the iterator held by `flatMap`'s `cur` field (until it is reassigned). For the following data ```scala import org.apache.hadoop.io._ import org.apache.hadoop.io.compress._ import org.apache.commons.lang._ import org.apache.spark._ // generate 100M records of sample data sc.makeRDD(1 to 1000, 1000) .flatMap(item => (1 to 100000) .map(i => new Text(RandomStringUtils.randomAlphanumeric(3).toLowerCase) -> new Text(RandomStringUtils.randomAlphanumeric(1024)))) .saveAsSequenceFile("/tmp/random-strings", Some(classOf[GzipCodec])) ``` and the following job ```scala import org.apache.hadoop.io._ import org.apache.spark._ import org.apache.spark.storage._ val rdd = sc.sequenceFile("/tmp/random-strings", classOf[Text], classOf[Text]) rdd .map(item => item._1.toString -> item._2.toString) .repartitionAndSortWithinPartitions(new HashPartitioner(1000)) .coalesce(10,false) .count ``` ... executed like the following ```bash spark-shell \ --num-executors=5 \ --executor-cores=2 \ --master=yarn \ --deploy-mode=client \ --conf spark.executor.memoryOverhead=512 \ --conf spark.executor.memory=1g \ --conf spark.dynamicAllocation.enabled=false \ --conf spark.executor.extraJavaOptions='-XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp -Dio.netty.noUnsafe=true' ``` ... executors are always failing with OutOfMemoryErrors. The main issue is multiple leaks of ExternalSorter references. For example, with 2 tasks per executor, 2 simultaneous instances of ExternalSorter are expected per executor, but the heap dump generated on OutOfMemoryError shows that there are more.  P.S. This PR does not cover cases with CoGroupedRDDs which use ExternalAppendOnlyMap internally, which itself can lead to OutOfMemoryErrors in many places. ## How was this patch tested? - Existing unit tests - New unit tests - Job executions on the live environment Here is the screenshot before applying this patch  Here is the screenshot after applying this patch  And in case of reducing the number of executors even more the job is still stable  Closes #23083 from szhem/SPARK-26114-externalsorter-leak. Authored-by: Sergey Zhemzhitsky <szhemzhitski@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 438f8fd675d8f819373b6643dea3a77d954b6822) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 28 November 2018, 12:23:05 UTC |
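
The `CompletionIterator` change described above can be pictured with a small wrapper that drops its reference to the wrapped iterator as soon as it is exhausted. This is a simplified sketch under stated assumptions, not the code from the patch; `CompletionIteratorSketch` and `ReleasingIterator` are hypothetical names.

```scala
object CompletionIteratorSketch {
  // Hypothetical wrapper: runs `onComplete` exactly once and then drops its
  // reference to the wrapped iterator so that it can be garbage collected.
  final class ReleasingIterator[A](private var sub: Iterator[A], onComplete: () => Unit)
      extends Iterator[A] {
    private var completed = false

    override def hasNext: Boolean = {
      val more = !completed && sub.hasNext
      if (!more && !completed) {
        completed = true
        onComplete()
        sub = Iterator.empty // release the exhausted sub-iterator
      }
      more
    }

    // After completion this delegates to the empty iterator and throws.
    override def next(): A = sub.next()
  }
}
```

A caller that keeps the wrapper alive after it has been drained, as the message describes for `flatMap`'s `cur` field, then holds only the small wrapper rather than the whole upstream iterator.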

| 9b2b0cf | Shahid | 26 November 2018, 21:13:06 UTC | [SPARK-25451][SPARK-26100][CORE] Aggregated metrics table doesn't show the right number of the total tasks The total task counts in the aggregated metrics table and the tasks table sometimes do not match in the web UI. We need to force an update of the executor summary for a particular executorId whenever the last task of that executor completes. Currently the update is forced only for the last task at the end of the stage, so tasks of some executorIds can be missed at stage end. Tests to reproduce: ``` bin/spark-shell --master yarn --conf spark.executor.instances=3 sc.parallelize(1 to 10000, 10).map{ x => throw new RuntimeException("Bad executor")}.collect() ``` Before patch:  After patch:  Closes #23038 from shahidki31/SPARK-25451. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> (cherry picked from commit fbf62b7100be992cbc4eb67e154682db6c91e60e) Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com> | 26 November 2018, 21:14:14 UTC |

| c379611 | Lee moon soo | 25 November 2018, 00:09:13 UTC | [MINOR][K8S] Invalid property "spark.driver.pod.name" is referenced in docs. ## What changes were proposed in this pull request? "Running on Kubernetes" references `spark.driver.pod.name` in a few places; it should be `spark.kubernetes.driver.pod.name`. ## How was this patch tested? See changes Closes #23133 from Leemoonsoo/fix-driver-pod-name-prop. Authored-by: Lee moon soo <moon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit eea4a0330b913cd45e369f09ec3d1dbb1b81f1b5) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 25 November 2018, 00:10:07 UTC |

| a2a8873 | liuxian | 24 November 2018, 15:10:15 UTC | [SPARK-25786][CORE] If the ByteBuffer.hasArray is false , it will throw UnsupportedOperationException for Kryo Kryo's `deserialize` takes a `ByteBuffer` as its input parameter; if the buffer is not backed by an accessible byte array, it throws `UnsupportedOperationException`. Exception info: ``` java.lang.UnsupportedOperationException was thrown. java.lang.UnsupportedOperationException at java.nio.ByteBuffer.array(ByteBuffer.java:994) at org.apache.spark.serializer.KryoSerializerInstance.deserialize(KryoSerializer.scala:362) ``` Added a unit test. Closes #22779 from 10110346/InputStreamKryo. Authored-by: liuxian <liu.xian3@zte.com.cn> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 7f5f7a967d36d78f73d8fa1e178dfdb324d73bf1) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 24 November 2018, 15:23:49 UTC |
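
The failure mode above can be reproduced with any buffer that has no accessible backing array, for example a direct or read-only `ByteBuffer`. The sketch below shows one assumed way to read such a buffer safely; it is not the code from the patch, and `ByteBufferSketch` and `remainingBytes` are hypothetical names.

```scala
import java.nio.ByteBuffer

object ByteBufferSketch {
  // Copies the remaining bytes without assuming a backing array.
  def remainingBytes(buffer: ByteBuffer): Array[Byte] = {
    if (buffer.hasArray) {
      // Heap buffer: copy the relevant slice of the backing array.
      val start = buffer.arrayOffset() + buffer.position()
      java.util.Arrays.copyOfRange(buffer.array(), start, start + buffer.remaining())
    } else {
      // No accessible array: array() would throw, so read through a
      // duplicate, which leaves the caller's position untouched.
      val out = new Array[Byte](buffer.remaining())
      buffer.duplicate().get(out)
      out
    }
  }
}
```

Checking `hasArray` first is the design point: `array()` is an optional operation on `ByteBuffer`, so callers that may receive direct or read-only buffers need a fallback path.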

| 14d501b | Shixiong Zhu | 23 November 2018, 12:18:44 UTC | [SPARK-26069][TESTS][FOLLOWUP] Add another possible error message ## What changes were proposed in this pull request? `org.apache.spark.network.RpcIntegrationSuite.sendRpcWithStreamFailures` is still flaky and here is error message: ``` sbt.ForkMain$ForkError: java.lang.AssertionError: Got a non-empty set [Failed to send RPC RPC 8249697863992194475 to /172.17.0.2:41177: java.io.IOException: Broken pipe] at org.junit.Assert.fail(Assert.java:88) at org.junit.Assert.assertTrue(Assert.java:41) at org.apache.spark.network.RpcIntegrationSuite.assertErrorAndClosed(RpcIntegrationSuite.java:389) at org.apache.spark.network.RpcIntegrationSuite.sendRpcWithStreamFailures(RpcIntegrationSuite.java:347) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50) at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12) at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47) at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17) at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325) at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78) at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57) at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290) at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71) at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288) at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58) at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268) at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26) at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27) at org.junit.runners.ParentRunner.run(ParentRunner.java:363) at org.junit.runners.Suite.runChild(Suite.java:128) at org.junit.runners.Suite.runChild(Suite.java:27) at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290) at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71) at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288) at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58) at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268) at org.junit.runners.ParentRunner.run(ParentRunner.java:363) at org.junit.runner.JUnitCore.run(JUnitCore.java:137) at org.junit.runner.JUnitCore.run(JUnitCore.java:115) at com.novocode.junit.JUnitRunner$1.execute(JUnitRunner.java:132) at sbt.ForkMain$Run$2.call(ForkMain.java:296) at sbt.ForkMain$Run$2.call(ForkMain.java:286) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) ``` This happened when the second RPC message was being sent but the connection was closed at the same time. ## How was this patch tested? Jenkins Closes #23109 from zsxwing/SPARK-26069-2. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 92fc0a8f9619a8e7f8382d6a5c288aeceb03a472) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 23 November 2018, 12:18:59 UTC |

| 709a8cc | jerryshao | 23 November 2018, 07:21:46 UTC | [SPARK-24553][UI][FOLLOWUP][2.4 BACKPORT] Fix unnecessary UI redirect ## What changes were proposed in this pull request? This is a backport PR of #23116 . This PR is a follow-up PR of #21600 to fix the unnecessary UI redirect. ## How was this patch tested? Local verification Closes #23121 from jerryshao/SPARK-24553-branch-2.4. Authored-by: jerryshao <jerryshao@tencent.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> | 23 November 2018, 07:21:46 UTC |

| d63ab5a | Alon Doron | 23 November 2018, 00:55:00 UTC | [SPARK-26021][SQL] replace minus zero with zero in Platform.putDouble/Float GROUP BY treats -0.0 and 0.0 as different values, unlike Hive. In addition, the current behavior with codegen is unpredictable (see the example in the JIRA ticket). ## What changes were proposed in this pull request? `Platform.putDouble/Float()` now checks whether the value is -0.0 and, if so, replaces it with 0.0. `UnsafeRow` writes through these methods, so it will no longer hold -0.0 values. ## How was this patch tested? Added tests Closes #23043 from adoron/adoron-spark-26021-replace-minus-zero-with-zero. Authored-by: Alon Doron <adoron@palantir.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 0ec7b99ea2b638453ed38bb092905bee4f907fe5) Signed-off-by: Wenchen Fan <wenchen@databricks.com> | 23 November 2018, 00:55:31 UTC |
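
The two zeros compare equal as numbers but have different bit patterns, which is why code that groups on raw bytes can see them as distinct keys. The sketch below illustrates the normalization idea only; it is not the `Platform` code from the patch, and `NegativeZeroSketch`, `normalize` and `bits` are hypothetical names.

```scala
object NegativeZeroSketch {
  // Hypothetical normalizer: -0.0 == 0.0 is true for primitive doubles,
  // so both zeros map to positive zero; NaN and other values pass through.
  def normalize(value: Double): Double =
    if (value == 0.0d) 0.0d else value

  // Raw IEEE 754 bit pattern, which differs between 0.0 and -0.0.
  def bits(value: Double): Long = java.lang.Double.doubleToLongBits(value)
}
```

Comparing `bits(-0.0d)` with `bits(0.0d)` shows the mismatch, and after `normalize` both values share the bit pattern of `0.0d`.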

| 8705a9d | Shahid | 21 November 2018, 15:31:35 UTC | [SPARK-26109][WEBUI] Duration in the task summary metrics table and the task table are different ## What changes were proposed in this pull request? The task summary table displays a summary of the task table in the stage page. However, the 'Duration' metrics of the 'task summary' table and the 'task table' do not match. The reason is that the 'task summary' displays 'executorRunTime' as the duration, while the 'task table' displays the actual duration of the task. All metrics other than duration are displayed correctly in the task summary. Spark 2.2 showed 'executorRunTime' as the duration in the task table, which is why the summary metrics also show 'executorRunTime' as the duration. So, we need to show 'executorRunTime' as the duration in the tasks table to follow the same behaviour as the previous versions of Spark. ## How was this patch tested? Before patch:  After patch:  Closes #23081 from shahidki31/duratinSummary. Authored-by: Shahid <shahidki31@gmail.com> Signed-off-by: Sean Owen <sean.owen@databricks.com> (cherry picked from commit 540afc2b18ef61cceb50b9a5b327e6fcdbe1e7e4) Signed-off-by: Sean Owen <sean.owen@databricks.com> | 21 November 2018, 15:31:50 UTC |

| d8e05d2 | Shixiong Zhu | 21 November 2018, 01:31:12 UTC | [SPARK-26120][TESTS][SS][SPARKR] Fix a streaming query leak in Structured Streaming R tests ## What changes were proposed in this pull request? Stop the streaming query in `Specify a schema by using a DDL-formatted string when reading` to avoid outputting annoying logs. ## How was this patch tested? Jenkins Closes #23089 from zsxwing/SPARK-26120. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: hyukjinkwon <gurwls223@apache.org> (cherry picked from commit 4b7f7ef5007c2c8a5090f22c6e08927e9f9a407b) Signed-off-by: hyukjinkwon <gurwls223@apache.org> | 21 November 2018, 01:31:34 UTC |